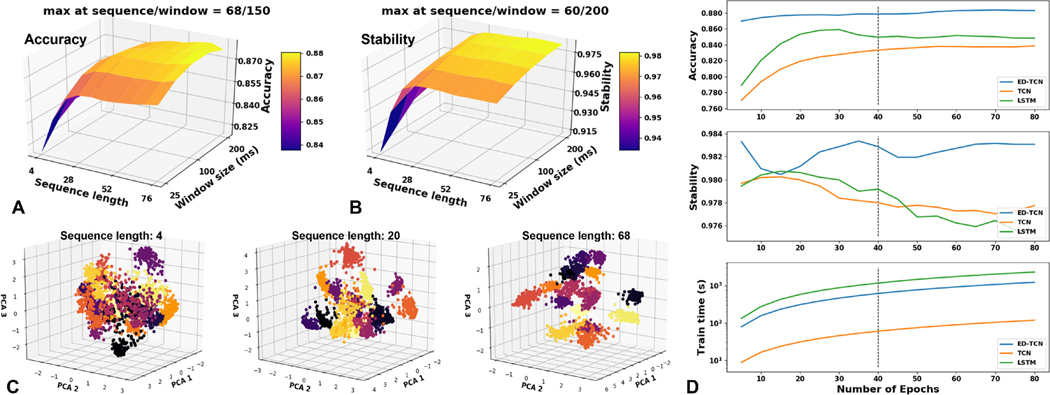

Fig. 3.

Parameter tuning of ED-TCN to determine the optimal window size, sequence length, and number of training epochs. Final parameters used for the Ninapro and CapgMyo public datasets are shown in Table I, and parameters used for our experiments are shown in Table II. (A) Sequential models showed that including sequence data improved accuracy while reducing the model’s dependence on window size, and (B) model stability generally improved with longer sequences and larger windows. (C) Principal component projection of the latent temporal class patterns learned by ED-TCN when trained on different sequence lengths (18 classes shown). The added temporal data helped ED-TCN organize classes into more distinct and separable clusters. (D) Accuracy, stability, and training time as a function of epochs. For this subject, ED-TCN outperformed other models after only 5 epochs.
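
A projection like the one in panel (C) can be illustrated with a minimal sketch of principal component analysis. This is not the code used to produce the figure: the latent vectors here are synthetic stand-ins, and the function name `pca_project`, the 64-dimensional latent size, and the 20 samples per class are assumptions chosen only for illustration.

```python
import numpy as np

def pca_project(features, n_components=2):
    """Project row-vector features onto their top principal components."""
    # Center each feature dimension so the components capture variance.
    centered = features - features.mean(axis=0, keepdims=True)
    # SVD of the centered data: rows of vt are the principal directions,
    # ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

# Synthetic stand-in for learned latent vectors (assumed shapes):
# 18 classes, 20 samples per class, 64 latent dimensions.
rng = np.random.default_rng(0)
n_classes, per_class, dim = 18, 20, 64
centers = rng.normal(scale=5.0, size=(n_classes, dim))
labels = np.repeat(np.arange(n_classes), per_class)
latents = centers[labels] + rng.normal(size=(n_classes * per_class, dim))

# 2-D coordinates suitable for a scatter plot colored by `labels`.
projected = pca_project(latents, n_components=2)
```

Plotting `projected` colored by `labels` gives a view of how well the classes separate. In practice, the rows of `latents` would be replaced with activations taken from the trained model.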