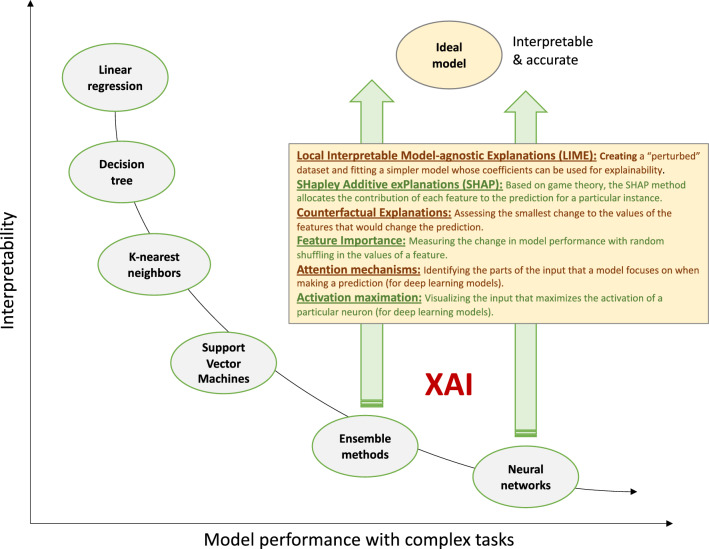

Fig. 5.

Explainability and interpretability of medical machine learning. Broadly speaking, more complex algorithms perform better on complex tasks and data inputs. For instance, recognizing cardiomyopathy from echocardiographic videos may require a deep learning algorithm to model the full extent of temporal and spatial features that carry diagnostic value, whereas the risk of re-admission may be predicted from electronic health record data using generalized linear models. Simpler models, such as decision trees and linear models, are intuitive and interpretable, whereas ensemble and neural network-based methods are too complex for the human mind to fully understand. Explainable artificial intelligence (XAI) methods aim to bridge this interpretability gap by offering direct or indirect insights into the inner workings of complex algorithms.