Abstract

Objective and Impact Statement. We propose a rapid and accurate blood cell identification method that exploits deep learning and label-free refractive index (RI) tomography. Our computational approach, which fully utilizes the tomographic information of bone marrow (BM) white blood cells (WBCs), enables us not only to classify the blood cells with deep learning but also to quantitatively study their morphological and biochemical properties for hematology research. Introduction. Conventional methods for examining blood cells, such as blood smear analysis by medical professionals and fluorescence-activated cell sorting, require significant time, cost, and domain knowledge, which could affect test results. While label-free imaging techniques that use a specimen’s intrinsic contrast (e.g., multiphoton and Raman microscopy) have been used to characterize blood cells, their imaging procedures and instrumentation are relatively time-consuming and complex. Methods. The RI tomograms of the BM WBCs are acquired via a Mach-Zehnder interferometer-based tomographic microscope and classified by a 3D convolutional neural network. We test our deep learning classifier on four types of BM WBCs collected from healthy donors (n = 10): monocytes, myelocytes, B lymphocytes, and T lymphocytes. The quantitative parameters of the WBCs are obtained directly from the tomograms. Results. Our results show >99% accuracy for the binary classification of myeloids and lymphoids and >96% accuracy for the four-type classification of B and T lymphocytes, monocytes, and myelocytes. The feature learning capability of our approach is visualized via an unsupervised dimension reduction technique. Conclusion. We envision that the proposed cell classification framework can be easily integrated into existing blood cell investigation workflows, providing cost-effective and rapid diagnosis of hematologic malignancy.

1. Introduction

Accurate blood cell identification and characterization play an integral role in the screening and diagnosis of various diseases, including sepsis [1-3], immune system disorders [4, 5], and blood cancer [6]. While a patient’s blood is examined with regard to its morphological, immunophenotypic, and cytogenetic aspects for diagnosing such diseases [7], the simplest yet most effective inspection in the early stages of diagnosis is the microscopic examination of stained blood smears obtained from peripheral blood or bone marrow aspirates. In a standard workflow, medical professionals prepare a blood smear slide, fix it, and stain it with chemical agents such as hematoxylin-eosin or Wright-Giemsa stains, followed by careful observation of blood cell alterations and cell counts specific to each disease. This not only requires time, labor, and associated costs but is also vulnerable to variability in staining quality, which depends on the skill of trained personnel.

To address this issue, several label-free techniques for identifying blood cells have recently been explored, including multiphoton excitation microscopy [8, 9], Raman microscopy [10-12], and hyperspectral imaging [13, 14]. Each method exploits the endogenous contrast (e.g., tryptophan, Raman spectra, and chromophores) of a specimen to visualize and characterize it without exogenous agents; however, these modalities require rather complex optical instruments with demanding system alignment and long data acquisition times. More recently, quantitative phase imaging (QPI) technologies, which enable relatively simple and rapid visualization of biological samples [15-18], have been utilized for various hematologic applications [19-21]. By measuring the optical path length delay induced by a specimen and reconstructing its refractive index from the analytic relation between the scattered light and the sample, QPI can identify and characterize the morphological and biochemical properties of various blood cells.

Recent advances in artificial intelligence (AI) have opened up previously unexplored applications of QPI beyond simply characterizing biological samples [22]. Because QPI datasets are free from the variability of staining quality, various machine learning and deep learning approaches can exploit these uniform-quality, high-dimensional datasets to perform label-free image segmentation [23, 24], classification [25-32], and inference [33-39]. Such synergetic approaches have also been demonstrated for label-free blood cell identification, which is the focus of this work [25, 26, 28, 40-43]. However, these approaches often require manual feature extraction for machine learning or do not fully utilize the high-complexity data of three-dimensional (3D) QPI, which could further improve the performance of deep learning.

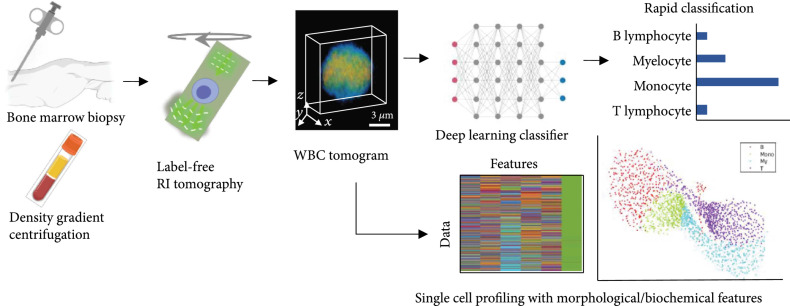

In this study, we leverage optical diffraction tomography (ODT), a 3D QPI technique, and a deep neural network to develop a label-free white blood cell profiling framework (Figure 1). We use ODT to measure the 3D refractive index of a cell, which is an intrinsic physical property, and extract various morphological/biochemical parameters from the RI tomograms, such as cellular volume, dry mass, and protein density. We then use an optimized deep learning algorithm to accurately classify WBCs obtained from the bone marrow. To test our method, we performed two classification tasks: a binary differential (myeloid vs. lymphoid, >99% accuracy) and a four-group differential (monocyte, myelocyte, B lymphocyte, and T lymphocyte, >96% accuracy). We demonstrate the representation learning capability of our algorithm using unsupervised dimension reduction visualization. We also compare this work with conventional machine learning approaches and two-dimensional (2D) deep learning, verifying the superior performance of our 3D deep learning approach. We envision that this label-free framework can be extended to a variety of subtype classifications and has the potential to advance the diagnosis of hematologic malignancy.

Figure 1.

Overview of label-free bone marrow white blood cell (WBC) assessment. Bone marrow WBCs obtained with minimal processing (density gradient centrifugation) are tomographically imaged without any labeling agents. Individual refractive index (RI) tomograms can then be accurately (>95%) and rapidly (<1 s) classified via an optimized deep-learning classifier, along with analysis of morphological/biochemical features such as cellular volume, dry mass, and protein density.

2. Results

2.1. Morphological and Biochemical Properties of WBCs

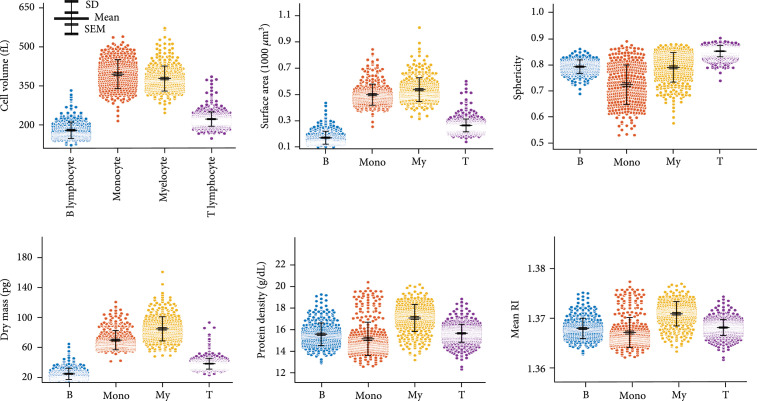

We first quantified morphological (cell volume, surface area, and sphericity), biochemical (dry mass and protein density), and physical (mean RI) parameters of bone marrow WBCs and statistically compared them in Figure 2. These parameters can be directly obtained from the 3D RI tomograms (see Materials and Methods). The mean and standard deviation of cell volumes for B lymphocytes, monocytes, myelocytes, and T lymphocytes are fL, fL, fL, and fL, respectively; in the same order, the surface areas are μm2, μm2, μm2, and μm2; the sphericities are , , , and ; the dry masses are pg, pg, pg, and ; the protein densities are g/dL, g/dL, g/dL, and g/dL; the mean RIs are , , , and .

Figure 2.

Quantitative analysis of morphological (cell volume, surface area, and sphericity), biochemical (dry mass and protein density), and physical (mean RI) parameters. The protein density is directly related to the mean RI. The scatter plots represent the entire population of the measured tomograms. SD: standard deviation; SEM: standard error of the mean; B: B lymphocyte; Mono: monocyte; My: myelocyte; T: T lymphocyte.

Several observations are noteworthy. First, the mean cellular volume, surface area, and dry mass of lymphoid cells (B and T lymphocytes) are smaller than those of myeloid cells (monocytes and myelocytes). The morphological properties of the B and T cells are directly related to the dry mass because the dry mass is obtained by integrating the protein density over the cellular volume, under the assumption that the cells mainly comprise proteins (refractive index increment of 0.2 mL/g). Furthermore, we observed that the sphericity of the lymphoid group was larger than that of the myeloid group. The B and T lymphocytes, which commonly originate from small lymphocytes, have a single nucleus and spherical shapes, whereas monocytes and myelocytes have a more irregular morphological distribution. Overall, the standard deviations of all parameters except the mean RI were larger for the myeloid group than for the lymphoid group, indicating greater morphological and biochemical variability in the myeloid group. Finally, it is challenging to accurately classify the four types of WBCs by simply comparing these parameters (e.g., by thresholding). Although lymphoid cells could be roughly differentiated from myeloid cells based on cellular volume, surface area, or dry mass at the “population” level, the overlap between the two groups in every parameter still impedes accurate classification at the “single-cell” level. More importantly, classifying within the lymphoid or myeloid group (e.g., B vs. T) would be even more difficult, as their statistical parameters are very similar.

2.2. Three-Dimensional Deep Learning Approach for Accurate Classification

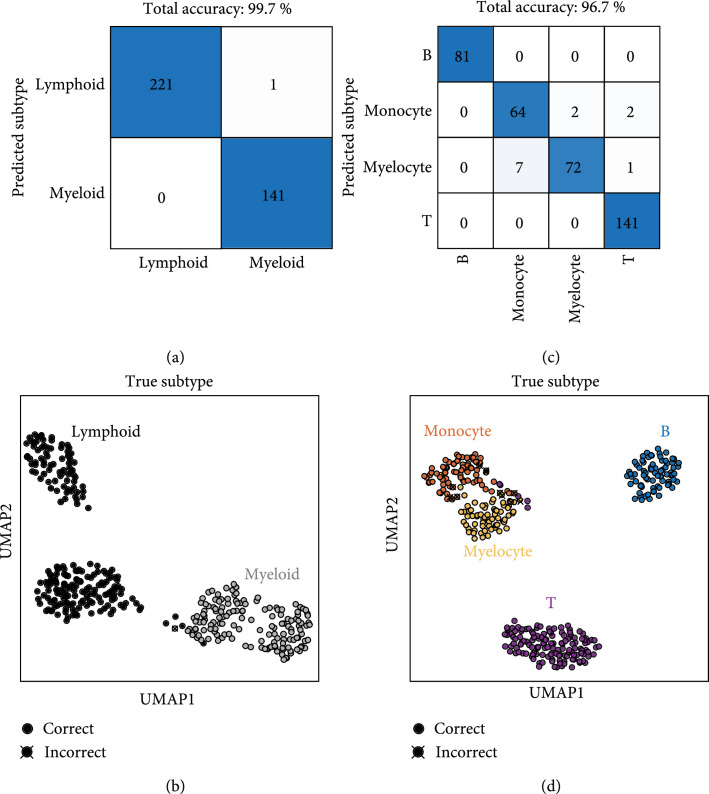

To achieve accurate classification of the WBCs at the single-cell level, we designed and optimized a deep-learning-based classifier that fully exploits the ultrahigh dimensionality of the WBC RI voxels, and we present two independent classification results in Figure 3. We first tested the 3D deep-learning classifier on the binary classification of the lymphoid and myeloid cell groups. On the unseen test dataset, the binary classification accuracy of the trained algorithm was 99.7%, as depicted in Figure 3(a); only one lymphoid-group cell was misclassified as a myeloid cell. The learning capability of our network is visualized in Figure 3(b) using the unsupervised dimension reduction technique UMAP (see Materials and Methods, Section 4.6). High-dimensional features were extracted from the last layer of the second downsampling block of the trained network. Most test data points are clearly clustered, while a few myeloid and lymphoid data points lie close to each other. This indicates that our trained algorithm not only extracts various features that differentiate the two groups but also generates a complex decision boundary that separates such unclustered data points accurately. Interestingly, roughly four clusters emerged even though we trained the algorithm to classify only two groups, implying that the learning capacity of our algorithm would be sufficient for classifying more diverse subtypes (see also Figure S1).

Figure 3.

Classification results using our approach. Two classifiers are independently designed for (a, b) the binary classification of lymphoid and myeloid and (c, d) the classification of B/T lymphocytes, monocyte, and myelocyte, respectively. The powerful learning capability of the optimized classifiers is illustrated via uniform manifold approximation and projection (UMAP).

We also tested another deep neural network that classifies the four types of WBCs, which achieved a test accuracy of 96.7%. The predictions of the trained algorithm for the four subtypes are shown in Figure 3(c). Our algorithm correctly classified all B lymphocytes, while some monocytes and myelocytes were misclassified as each other and a few T lymphocytes were misclassified as myeloid cells. UMAP visualization was also performed for this result (Figure 3(d)). The B cell cluster is clearly distant from the remaining clusters. Meanwhile, the monocyte and myelocyte clusters lie close to each other, accounting for most of the misclassifications. A few T cell data points were also found near these clusters. Despite the highly similar statistics across the four subtypes confirmed in the previous section, our algorithm extracts useful visual features from millions of voxels and generates a sophisticated decision boundary, achieving high test accuracy on unseen data. Notably, a small subset (e.g., 10% of the training set) could be sufficient to achieve a test accuracy of 90% or more (Figure S2).

2.3. Conventional Machine Learning and 2D Deep Learning

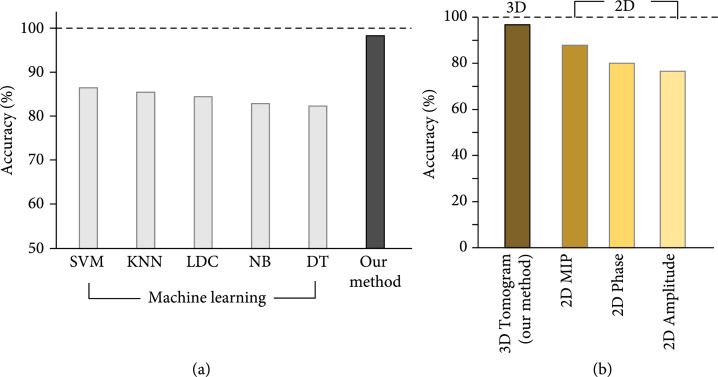

For further validation, we benchmarked our 3D deep learning approach against conventional machine learning (ML) approaches that require handcrafted features. First, widely used ML algorithms, namely support vector machines, k-nearest neighbors, linear discriminant classifiers, naïve Bayes, and decision trees, were trained on the four-type classification and compared with our method (see Materials and Methods, Section 4.5). The test accuracies of the five algorithms and our method are shown in Figure 4(a). While none of the machine learning algorithms exceeded 90% accuracy, our method achieved more than 96% test accuracy, as confirmed in the previous section. We reason that the six parameters obtained from the 3D RI tomograms are not sufficient for the conventional algorithms to generate a classification boundary as accurate as that of the 3D deep network, although additional feature engineering or extraction may help improve their performance. Nevertheless, the machine learning approaches are capable of classifying the lymphoid and myeloid groups, whose morphological and biochemical parameters differ sufficiently (Figure S3).

Figure 4.

Conventional approaches in comparison with the present method. (a) Comparison of classification accuracy between conventional machine learning algorithms that require handcrafted features and our 3D deep learning. (b) Performance of deep learning with various input data. SVM: support vector machine; KNN: k-nearest neighbors algorithm; LDC: linear discriminant classifier; NB: naïve Bayes; DT: decision tree; MIP: maximum intensity projection.

We also explored a 2D deep neural network trained on various 2D inputs: a 2D maximum intensity projection (MIP) image obtained directly from the 3D RI tomogram, a 2D phase image, and a 2D amplitude image. The classification accuracies for these inputs, along with that of our 3D approach, are shown in Figure 4(b). The network trained on the 2D MIP achieved an accuracy of 87.9%, the closest to our approach, whereas the networks trained on 2D phase and amplitude images achieved accuracies of 80.1% and 76.6%, respectively. These results suggest that fully utilizing 3D cellular information with an optimal 3D deep-learning classifier is important for accurate classification.
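To make the difference between the 2D inputs concrete, the sketch below derives two 2D images from a 3D RI tomogram: the MIP and an idealized projected phase, $\Delta\phi(x, y) = (2\pi/\lambda)\sum_z [n(x, y, z) - n_m]\,\Delta z$. It is a minimal NumPy sketch, assuming the tomogram is indexed as (z, y, x) with z along the optical axis; the wavelength, voxel size, and medium RI are placeholder parameters, and the straight-ray projection ignores diffraction, so it only approximates the measured phase images used in the study.

```python
import numpy as np


def max_intensity_projection(tomogram, axis=0):
    """2D MIP: the largest RI encountered along `axis` at each lateral position."""
    return tomogram.max(axis=axis)


def projected_phase(tomogram, n_medium, wavelength_um, voxel_size_um, axis=0):
    """Idealized phase delay in radians: (2*pi/lambda) * sum((n - n_m) * dz).

    Straight-ray (projection) approximation; measured phase maps also contain
    diffraction effects that this sketch ignores.
    """
    optical_path_delay_um = ((tomogram - n_medium) * voxel_size_um).sum(axis=axis)
    return 2 * np.pi / wavelength_um * optical_path_delay_um


# Usage: a 10-voxel-thick cube (RI 1.38) in a medium of RI 1.337, 0.5 um voxels.
tomo = np.full((20, 16, 16), 1.337)
tomo[5:15, 4:12, 4:12] = 1.38
mip = max_intensity_projection(tomo)  # shape (16, 16)
phase = projected_phase(tomo, n_medium=1.337, wavelength_um=0.532, voxel_size_um=0.5)
```

The MIP keeps only the peak RI along each ray, whereas the phase integrates over the full thickness; both discard the depth structure that the 3D network receives.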

3. Discussion

In this study, we have demonstrated how optical diffraction tomography and deep learning can be synergistically employed to profile bone marrow white blood cells. With minimal sample processing (e.g., centrifugation), our computational framework can accurately classify white blood cells (lymphoids and myeloids with >99% accuracy; monocytes, myelocytes, B lymphocytes, and T lymphocytes with >96% accuracy) and quantify useful properties such as cellular volume, dry mass, and protein density without any labeling agents. Moreover, the presented approach, which extracts optimal features from the captured RI voxels, outperformed well-known machine learning algorithms requiring handcrafted features as well as deep learning with 2D images. The feature learning capability of our deep neural networks was visualized via UMAP, an unsupervised dimension reduction technique. We anticipate that this label-free workflow, which requires neither laborious sample handling nor domain knowledge, can be integrated into existing blood tests, enabling more cost-effective and faster diagnosis of related hematologic disorders.

Despite these successful demonstrations, several future studies are needed before our approach can be applied in clinical settings. First, generalization tests across diverse imaging devices and medical institutes should be performed. In this study, we acquired the training and test datasets from only one imaging system installed at a single site. Exploring diverse samples and conducting cohort studies at multiple sites with several imagers would strengthen the reliability of our approach. Second, the current data acquisition rate needs to be improved. While we manually obtained and captured the tomograms of WBCs within the limited field of view of a high-numerical-aperture objective ( μm2, 1.2 NA), a motorized sample stage synchronized with a high-speed camera could significantly boost the data acquisition rate. A microfluidic system might be integrated into the current setup to improve the imaging speed; however, tomographically imaging rapidly moving samples as accurately as static ones would be challenging. Finally, the classification must be extended to other types of bone marrow blood cells for diagnosing various hematologic diseases such as leukemia. As in manual cell differentials performed by medical professionals, the standard cell types to be counted, such as promyelocytes, neutrophils, eosinophils, plasma cells, and erythroid precursors, should be included in the training dataset for clinical use. As the diversity of cell types and the complexity of information increase, significant tuning of our network hyperparameters or even a redesign of the network architecture may be required.

4. Materials and Methods

4.1. Sample Preparation and Data Acquisition

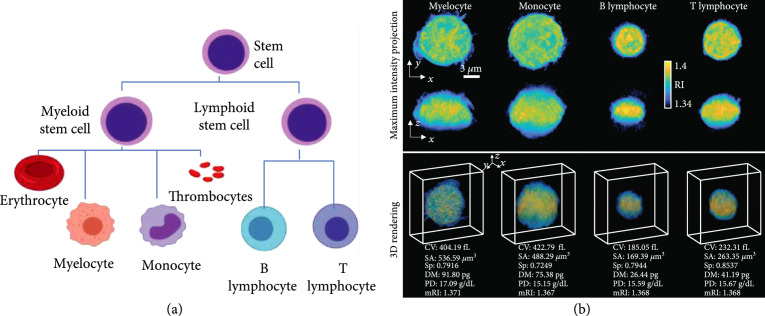

Four types of white blood cells were collected from the bone marrow of ten healthy donors: myelocytes, monocytes, B lymphocytes, and T lymphocytes (Figure 5(a)). Note that we obtained only one or two types of WBCs per donor owing to the limited amount of blood sample and the low magnetic-activated cell sorting yield (refer to Figure S4). In the WBC lineage, myelocytes and monocytes belong to the myeloid lineage, whereas B and T lymphocytes belong to the lymphoid lineage.

Figure 5.

WBC lineage relevant to our study and representative RI tomograms. (a) Two myeloid and two lymphoid cell types are used to demonstrate our framework. (b) Representative RI tomograms visualized from two perspectives: maximum intensity projection and 3D isosurface rendering. CV: cellular volume; SA: surface area; Sp: sphericity; DM: dry mass; PD: protein density; mRI: mean RI.

First, bone marrow was extracted via needle biopsy. To isolate mononuclear cells (MCs), the bone marrow was diluted 1 : 1 in phosphate-buffered saline (PBS; Welgene, Gyeongsan-si, Gyeongsangbuk-do, Korea), centrifuged using Ficoll-Hypaque (d = 1.077, Lymphoprep™; Axis-Shield, Oslo, Norway), and washed twice with PBS. Next, magnetic-activated cell sorting (MACS) was performed to obtain the four types of WBCs from the isolated MCs. CD3+, CD19+, and CD14+ MACS microbeads (Miltenyi Biotec, Germany) were used to positively select T lymphocytes, B lymphocytes, and monocytes, respectively. Myelocytes were isolated through negative selection of CD14 and positive selection of CD33. For optimal sample density and viability, each isolated sample was prepared in a mixed solution of 80% Roswell Park Memorial Institute (RPMI) 1640 medium, 10% heat-inactivated fetal bovine serum (FBS), and 10% 100 U/mL penicillin and 100 μg/mL streptomycin (Lonza, Walkersville, MD, USA). During imaging, the samples were kept in an enclosed ice box.

A total of 2547 WBC tomograms were obtained using our imaging system. After excluding noisy data (e.g., moving cells and coherent noise) [44], the numbers of tomograms for myelocytes, monocytes, B lymphocytes, and T lymphocytes were 403, 379, 399, and 689, respectively. For deep learning, we randomly shuffled the entire dataset and split it into training, validation, and test sets at a 7 : 1 : 2 ratio.
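The retained counts sum to 1870 tomograms, so 677 of the 2547 acquisitions were excluded. A minimal sketch of a shuffled 7 : 1 : 2 split follows; the seed and the rounding rule (integer division, with the remainder assigned to the test set) are assumptions, and, like the text, it shuffles the pooled dataset rather than stratifying by cell type.

```python
import numpy as np

# Tomogram counts after quality control, as reported in the text.
COUNTS = {"myelocyte": 403, "monocyte": 379, "B lymphocyte": 399, "T lymphocyte": 689}


def split_indices(n, ratio=(7, 1, 2), seed=0):
    """Shuffle indices 0..n-1 and cut them into train/val/test by an integer ratio."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n)
    total = sum(ratio)
    n_train = n * ratio[0] // total  # integer arithmetic avoids float rounding surprises
    n_val = n * ratio[1] // total
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]


n_total = sum(COUNTS.values())  # 1870 retained tomograms
train_idx, val_idx, test_idx = split_indices(n_total)
```

With 1870 tomograms this gives 1309 training, 187 validation, and 374 test samples.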

The representative 3D RI tomograms for each subtype are visualized as a maximum intensity projection image and a 3D-rendered image in Figure 5(b). The morphological and biochemical parameters can be computed directly from the measured RI tomograms, as explained in Section 4.3.

4.2. Subjects and Study Approval

The study was approved by the Institutional Review Board of the Samsung Medical Center (SMC) (No. SMC 2018-12-101-002). All blood samples were acquired at the SMC between November 2019 and June 2020 (see Figure S4). We enrolled patients who were suspected of having lymphoma and underwent bone marrow biopsy, all of whom were ultimately diagnosed with normal bone marrow.

4.3. Quantification of Morphological/Biochemical Properties

Six parameters were calculated from each reconstructed tomogram: cellular volume, surface area, sphericity, dry mass, protein density, and mean refractive index. First, the cellular volume and surface area were acquired directly by thresholding the tomogram: voxels with RI values higher than the threshold were segmented as the cell. The threshold was set to 1.35, considering the known medium RI of approximately 1.33 and experimental noise. Sphericity was calculated from the obtained surface area (SA) and volume (V) as $\mathrm{Sp} = \pi^{1/3}(6V)^{2/3}/\mathrm{SA}$, which equals 1 for a perfect sphere.
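The thresholding and sphericity steps can be sketched in NumPy as below. This is a minimal sketch under stated assumptions: the voxel size is a placeholder, and surface-area estimation is not shown because the text does not specify the method (a marching-cubes mesh via `skimage.measure.marching_cubes` and `skimage.measure.mesh_surface_area` is one common option, not necessarily the authors' choice).

```python
import numpy as np


def segment(tomogram, threshold=1.35):
    """Cell mask: voxels whose RI exceeds the threshold (1.35 in the text)."""
    return tomogram > threshold


def volume_fl(mask, voxel_size_um):
    """Cellular volume in fL (1 fL = 1 um^3) from the voxel count."""
    return float(mask.sum()) * voxel_size_um ** 3


def sphericity(volume, surface_area):
    """Sp = pi^(1/3) * (6 V)^(2/3) / SA; equals 1 for a perfect sphere."""
    return np.pi ** (1 / 3) * (6 * volume) ** (2 / 3) / surface_area


# Usage: a voxelized sphere of radius 3 um (30 voxels of 0.1 um) in medium RI 1.337.
coords = np.indices((64, 64, 64)) - 31.5
tomo = np.where((coords ** 2).sum(axis=0) <= 30 ** 2, 1.38, 1.337)
v = volume_fl(segment(tomo), voxel_size_um=0.1)  # close to 4/3 * pi * 3^3, about 113.1 fL
```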

Next, biochemical properties such as protein density and dry mass were obtained from the RI values via the linear relation between the RI of a biological sample and its local concentration of nonaqueous molecules (i.e., proteins, lipids, and nucleic acids inside cells). Considering that proteins are the major components and have nearly uniform refractive index increments, the protein density can be converted directly from RI values as $\rho = (n - n_m)/\alpha$, where $n$ and $n_m$ are the RI values of a voxel and the medium, respectively, and $\alpha$ is the refractive index increment (RII). In this study, we used an RII of 0.2 mL/g. The total dry mass can be calculated by integrating the protein density over the cellular volume.
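A minimal NumPy sketch of these conversions, assuming the medium RI is passed in explicitly (the text gives it only as approximately 1.33) and that a cell mask is already available. The unit bookkeeping is the useful part: with α in mL/g, ρ is in g/mL (multiply by 100 for g/dL), and because 1 fL = 1e-12 mL, summing ρ over voxel volumes expressed in fL yields picograms.

```python
import numpy as np

RII_ML_PER_G = 0.2  # refractive index increment used in the text (mL/g)


def protein_density_g_per_ml(ri, n_medium, alpha=RII_ML_PER_G):
    """rho = (n - n_m) / alpha, in g/mL when alpha is in mL/g."""
    return (ri - n_medium) / alpha


def mean_ri(tomogram, mask):
    """Mean refractive index over the segmented cell."""
    return float(tomogram[mask].mean())


def mean_protein_density_g_per_dl(tomogram, mask, n_medium, alpha=RII_ML_PER_G):
    """Average protein density inside the cell; 1 g/mL = 100 g/dL."""
    return float(protein_density_g_per_ml(tomogram[mask], n_medium, alpha).mean()) * 100


def dry_mass_pg(tomogram, mask, n_medium, voxel_size_um, alpha=RII_ML_PER_G):
    """Integrate rho over the cell: (g/mL) * fL = 1e-12 g = 1 pg."""
    rho = protein_density_g_per_ml(tomogram[mask], n_medium, alpha)
    return float(rho.sum()) * voxel_size_um ** 3


# Usage: a 10 x 10 x 10 um cube (1 um voxels) with RI 1.377 in a medium of RI 1.337.
tomo = np.full((20, 20, 20), 1.337)
tomo[5:15, 5:15, 5:15] = 1.377
cell = tomo > 1.35  # threshold from the text
```

For this cube, Δn = 0.04 gives ρ = 0.2 g/mL (20 g/dL), and 1000 fL of it corresponds to 200 pg of dry mass.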

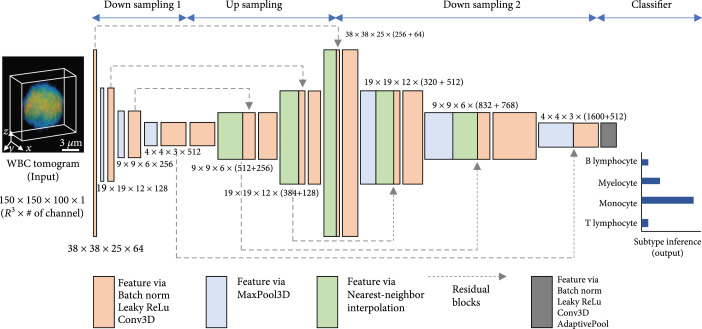

4.4. Deep Learning Classifier

We implemented a deep neural network to identify the 3D RI tomograms of myelocytes, monocytes, T lymphocytes, and B lymphocytes. Our convolutional neural network, inspired by FISH-Net [45], comprises two downsampling (DS) blocks, an upsampling (US) block, and a classifier at the end (Figure 6). The first DS block, comprising batch normalization, leaky ReLU, 3D convolution, and 3D max pooling, extracts various features at low resolution. Next, the US block upsamples the features using nearest-neighbor interpolation and refines them via residual blocks that connect all three blocks (the two DS blocks and the US block). These residual blocks improve the flow of information across layers and mitigate the well-known vanishing gradient problem. The second DS block processes not only the features from the US block but also those transmitted via the residual blocks, again using batch normalization, leaky ReLU, 3D convolution, and 3D max pooling. Finally, the extracted features are processed by the classifier, comprising batch normalization, leaky ReLU, 3D convolution, and adaptive 3D pooling, and mapped to the most probable subtype for the classification task.
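The block ordering described above can be sketched in PyTorch as follows. This is a minimal, hypothetical network (`TinyDsUsDs3D` is an illustrative name), not the authors' FISH-Net-derived architecture: the channel width, kernel sizes, leaky-ReLU slope, the use of a single addition plus a concatenation as the skip links, and average (rather than max) adaptive pooling are all assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def bn_act_conv(in_ch, out_ch):
    """Batch norm -> leaky ReLU -> 3x3x3 convolution (the ordering listed in the text)."""
    return nn.Sequential(
        nn.BatchNorm3d(in_ch),
        nn.LeakyReLU(0.01),
        nn.Conv3d(in_ch, out_ch, kernel_size=3, padding=1),
    )


class TinyDsUsDs3D(nn.Module):
    """Hypothetical DS -> US -> DS -> classifier network; all widths are assumptions."""

    def __init__(self, n_classes=4, ch=16):
        super().__init__()
        self.stem = nn.Conv3d(1, ch, kernel_size=3, padding=1)
        self.ds1 = bn_act_conv(ch, ch)
        self.us = bn_act_conv(ch, ch)
        self.ds2 = bn_act_conv(2 * ch, ch)  # takes US output concatenated with a skip
        self.classifier = bn_act_conv(ch, n_classes)
        for m in self.modules():  # He initialization, as stated in the text
            if isinstance(m, nn.Conv3d):
                nn.init.kaiming_normal_(m.weight, nonlinearity="leaky_relu")

    def forward(self, x):
        s = self.stem(x)                                          # (B, ch, D, H, W)
        d1 = F.max_pool3d(self.ds1(s), 2)                         # DS 1: half resolution
        u = F.interpolate(d1, scale_factor=2, mode="nearest")     # US: nearest-neighbor
        u = self.us(u) + s                                        # residual link
        d2 = F.max_pool3d(self.ds2(torch.cat([u, s], dim=1)), 2)  # DS 2
        logits = F.adaptive_avg_pool3d(self.classifier(d2), 1)    # adaptive 3D pooling
        return logits.flatten(1)                                  # (B, n_classes)


model = TinyDsUsDs3D().eval()
with torch.no_grad():
    out = model(torch.randn(2, 1, 32, 32, 32))  # two single-channel RI volumes
```

In this sketch, `d2` plays the role of the second DS block output, which is where Section 4.6 takes features for UMAP. Spatial dimensions must be even so that the upsampled features align with the skip connection.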

Figure 6.

Three-dimensional deep neural network for WBC classification. RI tomograms are processed to extract distinguishing features of each WBC through a convolutional neural network comprising downsampling and upsampling operations, leading to the subtype inference at the end.

Our network was implemented in PyTorch 1.0 on a GPU server (Intel® Xeon® Silver 4114 CPU and eight NVIDIA Tesla P40 GPUs). We trained our network using the ADAM optimizer [46] (learning , , and learning rate ) with a cross-entropy loss. The learnable parameters were initialized by He initialization. We augmented the data using random translation, random cropping, elastic transformation, and additive Gaussian noise. We trained our algorithm with a batch size of 8 for approximately 18 hours. We selected our best model at epoch 159, monitoring the validation accuracy based on MSE. Prediction for a single cell ( voxels) takes approximately 150 milliseconds with the computing hardware described above. Although the inference time depends on the batch and voxel sizes, our approach is expected to take hundreds of milliseconds in most practical cases.
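The augmentation step can be sketched as follows: a minimal NumPy version, assuming circular shifts via `np.roll` for translation, a uniformly random crop, and additive Gaussian noise. The shift range, crop size, and noise level are hypothetical placeholders, and the elastic transformation listed in the text is omitted.

```python
import numpy as np


def augment(tomogram, crop_shape, max_shift=4, noise_std=0.002, rng=None):
    """Random translation, random crop, and additive Gaussian noise for a 3D RI volume."""
    rng = np.random.default_rng() if rng is None else rng
    # Random translation: circular shift of up to +/- max_shift voxels per axis.
    shifts = tuple(int(s) for s in rng.integers(-max_shift, max_shift + 1, size=3))
    out = np.roll(tomogram, shift=shifts, axis=(0, 1, 2))
    # Random crop: each crop dimension must not exceed the volume dimension.
    starts = [int(rng.integers(0, size - c + 1)) for size, c in zip(out.shape, crop_shape)]
    out = out[tuple(slice(s, s + c) for s, c in zip(starts, crop_shape))]
    # Additive Gaussian noise in RI units.
    return out + rng.normal(0.0, noise_std, size=out.shape)


rng = np.random.default_rng(0)
volume = np.full((40, 40, 40), 1.337)
sample = augment(volume, crop_shape=(32, 32, 32), rng=rng)
```

Circular shifts wrap cell voxels around the volume edge; padding with the medium RI before shifting would avoid that if cells sit near the border.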

4.5. Conventional Machine Learning Classifier

To compare our deep learning approach with existing machine learning approaches, we implemented support vector machine (SVM), k-nearest neighbors (KNN), linear discriminant classifier (LDC), naïve Bayes (NB), and decision tree (DT) algorithms to classify the four types of WBCs using the six extracted features (cellular volume, surface area, sphericity, dry mass, protein density, and mean RI). Six binary SVM models with error-correcting output codes were trained to construct decision boundaries for the four classes. For the KNN classifier, was chosen. All machine learning algorithms were implemented in MATLAB.
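With four classes, a one-versus-one coding design yields C(4, 2) = 6 binary learners, matching the six binary SVMs mentioned above (MATLAB's `fitcecoc`, for example, uses one-versus-one coding by default). The pure-Python sketch below builds that coding matrix and decodes six binary outputs by counting agreements; this voting decoder is an illustrative simplification of the loss-based decoding that MATLAB applies by default, and the learner outputs are hypothetical.

```python
from itertools import combinations


def one_vs_one_coding(n_classes):
    """Coding matrix: one row per class, one column per binary learner.

    Learner j separates classes a (+1) and b (-1); 0 means the class is unused.
    """
    pairs = list(combinations(range(n_classes), 2))
    matrix = [[0] * len(pairs) for _ in range(n_classes)]
    for j, (a, b) in enumerate(pairs):
        matrix[a][j] = 1
        matrix[b][j] = -1
    return matrix


def decode(matrix, learner_outputs):
    """Return the class whose nonzero code entries agree with the most learner outputs."""
    scores = [
        sum(1 for code, out in zip(row, learner_outputs) if code != 0 and code == out)
        for row in matrix
    ]
    return max(range(len(matrix)), key=lambda k: scores[k])


coding = one_vs_one_coding(4)  # 4 classes -> 6 binary learners
# Hypothetical +1/-1 outputs of the six learners, one per column of `coding`.
predicted_class = decode(coding, [1, -1, 1, -1, 1, 1])
```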

4.6. Uniform Manifold Approximation and Projection (UMAP) for Visualization

To visualize the learning capability of our deep neural network, we employed uniform manifold approximation and projection (UMAP) [47], a state-of-the-art unsupervised dimension reduction technique. UMAP constructs a high-dimensional topological representation of the entire dataset and then optimizes a low-dimensional embedding (e.g., in two dimensions) to be as topologically similar to it as possible. We extracted the learned features from the last layer of the second downsampling block, immediately before the classifier block, and applied UMAP to visualize the feature learning capability of the trained classifier in two dimensions.

Acknowledgments

This work was supported by KAIST UP program, BK21+ program, Tomocube, National Research Foundation of Korea (2015R1A3A2066550), and Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (2021-0-00745).

Contributor Information

Duck Cho, Email: duck.cho@skku.edu.

YongKeun Park, Email: yk.park@kaist.ac.kr.

Authors’ Contributions

DongHun Ryu and Jinho Kim contributed equally to this work.

Supplementary Materials

Figure S1: UMAP visualization of the binary classification. Figure S2: stability of our classifier depending on the size of training set. Figure S3: conventional approaches for the binary classification of lymphoids and myeloids. Figure S4: donor information. Figure S5: tomographic system and reconstruction.

References

- 1. Murphy K., and Weiner J., “Use of leukocyte counts in evaluation of early-onset neonatal sepsis,” Pediatric Infectious Disease Journal, vol. 31, no. 1, pp. 16-19, 2012

- 2. Chandramohanadas R., Park Y. K., Lui L., Li A., Quinn D., Liew K., Diez-Silva M., Sung Y., Dao M., Lim C. T., Preiser P. R., and Suresh S., “Biophysics of malarial parasite exit from infected erythrocytes,” PLoS One, vol. 6, no. 6, article e20869, 2011

- 3. Baskurt O. K., Gelmont D., and Meiselman H. J., “Red blood cell deformability in sepsis,” American Journal of Respiratory and Critical Care Medicine, vol. 157, no. 2, pp. 421-427, 1998

- 4. Ueda H., Howson J. M. M., Esposito L., Heward J., Snook, Chamberlain G., Rainbow D. B., Hunter K. M. D., Smith A. N., di Genova G., Herr M. H., Dahlman I., Payne F., Smyth D., Lowe C., Twells R. C. J., Howlett S., Healy B., Nutland S., Rance H. E., Everett V., Smink L. J., Lam A. C., Cordell H. J., Walker N. M., Bordin C., Hulme J., Motzo C., Cucca F., Hess J. F., Metzker M. L., Rogers J., Gregory S., Allahabadia A., Nithiyananthan R., Tuomilehto-Wolf E., Tuomilehto J., Bingley P., Gillespie K. M., Undlien D. E., Rønningen K. S., Guja C., Ionescu-Tîrgovişte C., Savage D. A., Maxwell A. P., Carson D. J., Patterson C. C., Franklyn J. A., Clayton D. G., Peterson L. B., Wicker L. S., Todd J. A., and Gough S. C. L., “Association of the T-cell regulatory gene CTLA4 with susceptibility to autoimmune disease,” Nature, vol. 423, no. 6939, pp. 506-511, 2003

- 5. von Boehmer H., and Melchers F., “Checkpoints in lymphocyte development and autoimmune disease,” Nature Immunology, vol. 11, no. 1, pp. 14-20, 2010

- 6. Sant M., Allemani C., Tereanu C., de Angelis R., Capocaccia R., Visser O., Marcos-Gragera R., Maynadié M., Simonetti A., Lutz J. M., Berrino F., and the HAEMACARE Working Group, “Incidence of hematologic malignancies in Europe by morphologic subtype: results of the HAEMACARE project,” Blood, vol. 116, no. 19, pp. 3724-3734, 2010

- 7. Norris D., and Stone J., WHO classification of tumours of haematopoietic and lymphoid tissues, WHO, Geneva, 2017

- 8. Li C., Pastila R. K., Pitsillides C., Runnels J. M., Puoris’haag M., Côté D., and Lin C. P., “Imaging leukocyte trafficking in vivo with two-photon-excited endogenous tryptophan fluorescence,” Optics Express, vol. 18, no. 2, pp. 988-999, 2010

- 9. Kim D., Oh N., Kim K., Lee S. Y., Pack C. G., Park J. H., and Park Y. K., “Label-free high-resolution 3-D imaging of gold nanoparticles inside live cells using optical diffraction tomography,” Methods, vol. 136, pp. 160-167, 2018

- 10. Ramoji A., Neugebauer U., Bocklitz T., Foerster M., Kiehntopf M., Bauer M., and Popp J., “Toward a spectroscopic hemogram: Raman spectroscopic differentiation of the two most abundant leukocytes from peripheral blood,” Analytical Chemistry, vol. 84, no. 12, pp. 5335-5342, 2012

- 11. Orringer D. A., Pandian B., Niknafs Y. S., Hollon T. C., Boyle J., Lewis S., Garrard M., Hervey-Jumper S. L., Garton H. J. L., Maher C. O., Heth J. A., Sagher O., Wilkinson D. A., Snuderl M., Venneti S., Ramkissoon S. H., McFadden K. A., Fisher-Hubbard A., Lieberman A. P., Johnson T. D., Xie X. S., Trautman J. K., Freudiger C. W., and Camelo-Piragua S., “Rapid intraoperative histology of unprocessed surgical specimens via fibre-laser-based stimulated Raman scattering microscopy,” Nature Biomedical Engineering, vol. 1, no. 2, article 0027, 2017

- 12.Nitta N., Iino T., Isozaki A., Yamagishi M., Kitahama Y., Sakuma S., Suzuki Y., Tezuka H., Oikawa M., Arai F., Asai T., Deng D., Fukuzawa H., Hase M., Hasunuma T., Hayakawa T., Hiraki K., Hiramatsu K., Hoshino Y., Inaba M., Inoue Y., Ito T., Kajikawa M., Karakawa H., Kasai Y., Kato Y., Kobayashi H., Lei C., Matsusaka S., Mikami H., Nakagawa A., Numata K., Ota T., Sekiya T., Shiba K., Shirasaki Y., Suzuki N., Tanaka S., Ueno S., Watarai H., Yamano T., Yazawa M., Yonamine Y., di Carlo D., Hosokawa Y., Uemura S., Sugimura T., Ozeki Y., and Goda K., “Raman image-activated cell sorting,” Nature Communications, vol. 11, no. 1, p. 3452, 2020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Verebes G. S., Melchiorre M., Garcia-Leis A., Ferreri C., Marzetti C., and Torreggiani A., “Hyperspectral enhanced dark field microscopy for imaging blood cells,” Journal of Biophotonics, vol. 6, no. 11-12, pp. 960-967, 2013 [DOI] [PubMed] [Google Scholar]

- 14.Ojaghi A., Carrazana G., Caruso C., Abbas A., Myers D. R., Lam W. A., and Robles F. E., “Label-free hematology analysis using deep-ultraviolet microscopy,” PNAS, vol. 117, no. 26, pp. 14779-14789, 2020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Kim Y., Shim H., Kim K., Park H. J., Jang S., and Park Y. K., “Profiling individual human red blood cells using common-path diffraction optical tomography,” Scientific Reports, vol. 4, article 6659, 2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Kastl L., Isbach M., Dirksen D., Schnekenburger J., and Kemper B., “Quantitative phase imaging for cell culture quality control,” Cytometry Part A, vol. 91, no. 5, pp. 470-481, 2017 [DOI] [PubMed] [Google Scholar]

- 17.Bettenworth D., Bokemeyer A., Poremba C., Ding N. S., Ketelhut S., and Kemper P. L. B., “Quantitative phase microscopy for evaluation of intestinal inflammation and wound healing utilizing label-free biophysical markers,” Histology and Histopathology, vol. 33, no. 5, pp. 417-432, 2018.

- 18.Park Y., Depeursinge C., and Popescu G., “Quantitative phase imaging in biomedicine,” Nature Photonics, vol. 12, no. 10, pp. 578-589, 2018.

- 19.Chhaniwal V., Singh A. S. G., Leitgeb R. A., Javidi B., and Anand A., “Quantitative phase-contrast imaging with compact digital holographic microscope employing Lloyd’s mirror,” Optics Letters, vol. 37, no. 24, pp. 5127-5129, 2012.

- 20.Lee S., Park H. J., Kim K., Sohn Y. H., Jang S., and Park Y. K., “Refractive index tomograms and dynamic membrane fluctuations of red blood cells from patients with diabetes mellitus,” Scientific Reports, vol. 7, no. 1, p. 1039, 2017.

- 21.Javidi B., Markman A., Rawat S., O’Connor T., Anand A., and Andemariam B., “Sickle cell disease diagnosis based on spatio-temporal cell dynamics analysis using 3D printed shearing digital holographic microscopy,” Optics Express, vol. 26, no. 10, pp. 13614-13627, 2018.

- 22.Jo Y., Cho H., Lee S. Y., Choi G., Kim G., Min H. S., and Park Y., “Quantitative phase imaging and artificial intelligence: a review,” IEEE Journal of Selected Topics in Quantum Electronics, vol. 25, no. 1, 2018.

- 23.Lee M., Lee Y. H., Song J., Kim G., Jo Y. J., Min H. S., Kim C. H., and Park Y. K., “Deep-learning-based three-dimensional label-free tracking and analysis of immunological synapses of CAR-T cells,” eLife, vol. 9, article e49023, 2020.

- 24.Lee J., Kim H., Cho H., Jo Y. J., Song Y., Ahn D., Lee K., Park Y., and Ye S. J., “Deep-learning-based label-free segmentation of cell nuclei in time-lapse refractive index tomograms,” IEEE Access, vol. 7, pp. 83449-83460, 2019.

- 25.Chen C. L., Mahjoubfar A., Tai L. C., Blaby I. K., Huang A., Niazi K. R., and Jalali B., “Deep learning in label-free cell classification,” Scientific Reports, vol. 6, no. 1, article 21471, 2016.

- 26.Ozaki Y., Yamada H., Kikuchi H., Hirotsu A., Murakami T., Matsumoto T., Kawabata T., Hiramatsu Y., Kamiya K., Yamauchi T., Goto K., Ueda Y., Okazaki S., Kitagawa M., Takeuchi H., and Konno H., “Label-free classification of cells based on supervised machine learning of subcellular structures,” PLoS One, vol. 14, no. 1, article e0211347, 2019.

- 27.Nissim N., Dudaie M., Barnea I., and Shaked N. T., “Real-time stain-free classification of cancer cells and blood cells using interferometric phase microscopy and machine learning,” Cytometry Part A, vol. 99, no. 5, pp. 511-523, 2021.

- 28.Wang H. D., Ceylan Koydemir H., Qiu Y., Bai B., Zhang Y., Jin Y., Tok S., Yilmaz E. C., Gumustekin E., Rivenson Y., and Ozcan A., “Early detection and classification of live bacteria using time-lapse coherent imaging and deep learning,” Light: Science & Applications, vol. 9, no. 1, 2020.

- 29.Wu Y., Zhou Y., Huang C. J., Kobayashi H., Yan S., Ozeki Y., Wu Y., Sun C. W., Yasumoto A., Yatomi Y., Lei C., and Goda K., “Intelligent frequency-shifted optofluidic time-stretch quantitative phase imaging,” Optics Express, vol. 28, no. 1, pp. 519-532, 2020.

- 30.Zhang J. K., He Y. R., Sobh N., and Popescu G., “Label-free colorectal cancer screening using deep learning and spatial light interference microscopy (SLIM),” APL Photonics, vol. 5, no. 4, article 040805, 2020.

- 31.Zhou Y., Yasumoto A., Lei C., Huang C. J., Kobayashi H., Wu Y., Yan S., Sun C. W., Yatomi Y., and Goda K., “Intelligent classification of platelet aggregates by agonist type,” eLife, vol. 9, article e52938, 2020.

- 32.Jo Y., Park S., Jung J. H., Yoon J., Joo H., Kim M. H., Kang S. J., Choi M. C., Lee S. Y., and Park Y. K., “Holographic deep learning for rapid optical screening of anthrax spores,” Science Advances, vol. 3, no. 8, article e1700606, 2017.

- 33.Rivenson Y., Zhang Y., Günaydın H., Teng D., and Ozcan A., “Phase recovery and holographic image reconstruction using deep learning in neural networks,” Light: Science & Applications, vol. 7, no. 2, p. 17141, 2018.

- 34.Choi G., Ryu D. H., Jo Y. J., Kim Y. S., Park W., Min H. S., and Park Y. K., “Cycle-consistent deep learning approach to coherent noise reduction in optical diffraction tomography,” Optics Express, vol. 27, no. 4, pp. 4927-4943, 2019.

- 35.Pitkäaho T., Manninen A., and Naughton T. J., “Focus prediction in digital holographic microscopy using deep convolutional neural networks,” Applied Optics, vol. 58, no. 5, pp. A202-A208, 2019.

- 36.Dardikman-Yoffe G., Roitshtain D., Mirsky S. K., Turko N. A., Habaza M., and Shaked N. T., “PhUn-Net: ready-to-use neural network for unwrapping quantitative phase images of biological cells,” Biomedical Optics Express, vol. 11, no. 2, pp. 1107-1121, 2020.

- 37.Kandel M. E., Kim E., Lee Y. J., Tracy G., Chung H. J., and Popescu G., “Multiscale assay of unlabeled neurite dynamics using phase imaging with computational specificity (PICS),” 2020, https://arxiv.org/abs/2008.00626.

- 38.Nygate Y. N., Levi M., Mirsky S. K., Turko N. A., Rubin M., Barnea I., Dardikman-Yoffe G., Haifler M., Shalev A., and Shaked N. T., “Holographic virtual staining of individual biological cells,” Proceedings of the National Academy of Sciences of the United States of America, vol. 117, no. 17, pp. 9223-9231, 2020.

- 39.Jo Y., Cho H., Park W. S., Kim G., Ryu D., Kim Y. S., Lee M., Joo H., Jo H. H., Lee S., Min H.-s., Do Heo W., and Park Y. K., “Data-driven multiplexed microtomography of endogenous subcellular dynamics,” 2020, https://www.biorxiv.org/content/10.1101/2020.09.16.300392v1.full.

- 40.Go T., Kim J. H., Byeon H., and Lee S. J., “Machine learning-based in-line holographic sensing of unstained malaria-infected red blood cells,” Journal of Biophotonics, vol. 11, no. 9, article e201800101, 2018.

- 41.Nassar M., Doan M., Filby A., Wolkenhauer O., Fogg D. K., Piasecka J., Thornton C. A., Carpenter A. E., Summers H. D., Rees P., and Hennig H., “Label-free identification of white blood cells using machine learning,” Cytometry Part A, vol. 95, no. 8, pp. 836-842, 2019.

- 42.Singh V., Srivastava V., and Mehta D. S., “Machine learning-based screening of red blood cells using quantitative phase imaging with micro-spectrocolorimetry,” Optics and Laser Technology, vol. 124, article 105980, 2020.

- 43.Shu X., Sansare S., Jin D., Zeng X., Tong K. Y., Pandey R., and Zhou R., “Artificial intelligence enabled reagent-free imaging hematology analyzer,” 2020, https://arxiv.org/abs/2012.08518.

- 44.Ryu D., Jo Y. J., Yoo J., Chang T., Ahn D., Kim Y. S., Kim G., Min H. S., and Park Y. K., “Deep learning-based optical field screening for robust optical diffraction tomography,” Scientific Reports, vol. 9, no. 1, p. 15239, 2019.

- 45.Sun S., Pang J., Shi J., Yi S., and Ouyang W., “FishNet: A versatile backbone for image, region, and pixel level prediction,” 2018, https://arxiv.org/abs/1901.03495.

- 46.Kingma D. P., and Ba J., “Adam: a method for stochastic optimization,” 2014, https://arxiv.org/abs/1412.6980.

- 47.McInnes L., Healy J., and Melville J., “UMAP: uniform manifold approximation and projection for dimension reduction,” 2018, https://arxiv.org/abs/1802.03426.


Supplementary Materials

Figure S1: UMAP visualization of the binary classification. Figure S2: stability of our classifier depending on the size of training set. Figure S3: conventional approaches for the binary classification of lymphoids and myeloids. Figure S4: donor information. Figure S5: tomographic system and reconstruction.