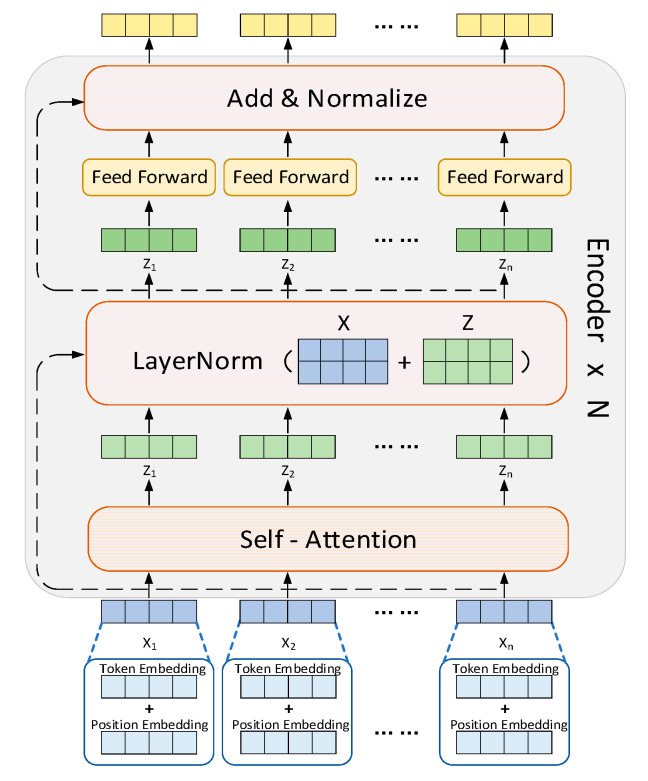

Figure 5.

Structure of the BERT module, which consists of three parts: an input embedding with multiple transformer encoder layers, a multi-head self-attention mechanism, and a feedforward neural network. The input vector Xn is first transformed into the vector Zn by multi-head self-attention, and the two vectors are then added through a residual connection. Layer normalization and a linear transformation are then applied to the sum, which enhances the model’s capacity to capture complex patterns.
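The data flow described in the caption can be illustrated with a minimal NumPy sketch of a single encoder layer. This is not the implementation used for the figure: the function names, the parameter dictionary, the ReLU activation (BERT itself uses GELU), the second residual connection around the feedforward block, and the omission of biases and learned normalization parameters are all simplifying assumptions taken from the standard transformer encoder design.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and unit variance
    # (learned scale and shift are omitted in this sketch).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    heads = weights @ v  # (num_heads, seq_len, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o

def encoder_layer(x, params, num_heads):
    # Xn -> Zn through multi-head self-attention.
    z = multi_head_self_attention(
        x, params["w_q"], params["w_k"], params["w_v"], params["w_o"], num_heads
    )
    # Residual connection (Xn + Zn) followed by layer normalization.
    h = layer_norm(x + z)
    # Feedforward network: two linear maps with a ReLU in between (assumed).
    ff = np.maximum(0.0, h @ params["w_1"]) @ params["w_2"]
    # Second residual connection and normalization (assumed, standard design).
    return layer_norm(h + ff)

def init_params(d_model, d_ff, seed=0):
    # Hypothetical helper: small random weights for demonstration only.
    rng = np.random.default_rng(seed)
    shapes = {
        "w_q": (d_model, d_model), "w_k": (d_model, d_model),
        "w_v": (d_model, d_model), "w_o": (d_model, d_model),
        "w_1": (d_model, d_ff), "w_2": (d_ff, d_model),
    }
    return {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}

if __name__ == "__main__":
    x = np.random.default_rng(1).normal(size=(4, 8))  # 4 tokens, d_model = 8
    out = encoder_layer(x, init_params(d_model=8, d_ff=16), num_heads=2)
    print(out.shape)
```

The output has the same shape as the input, which is what allows several such layers to be stacked, as in the multi-layer encoder the caption refers to.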