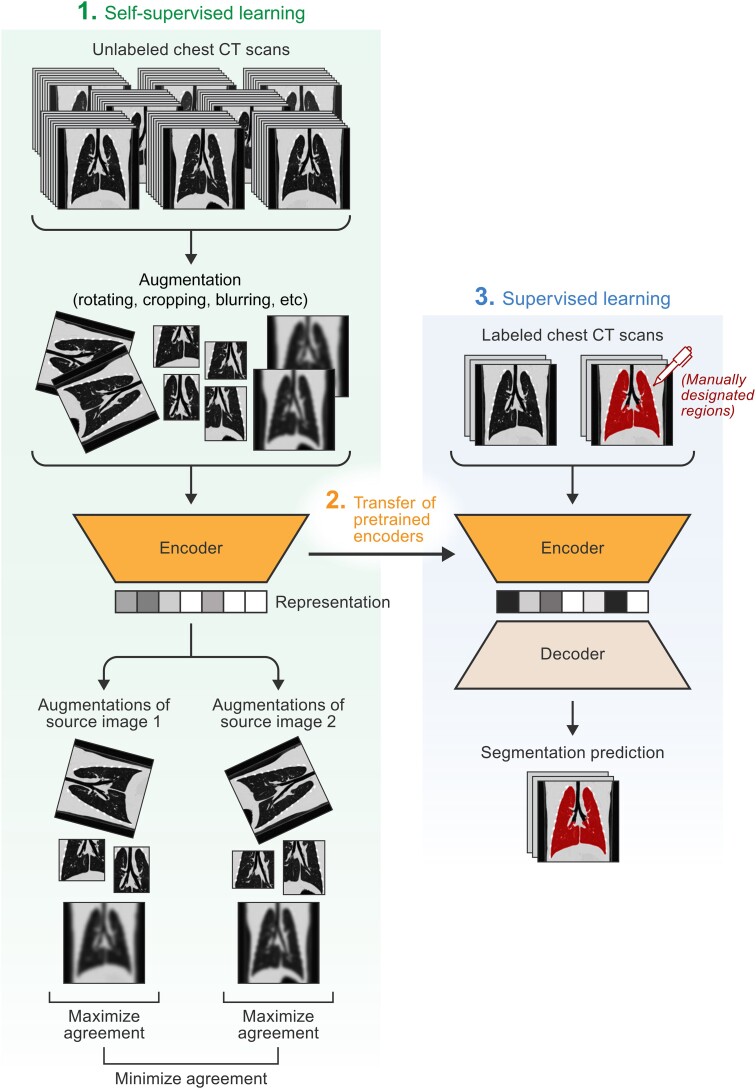

Figure 1.

Self-supervised learning for medical image segmentation. The diagram illustrates how unlabeled computed tomographic (CT) scans and self-supervised learning (specifically, contrastive learning) can be used to improve the performance of a supervised segmentation model. First, unlabeled scans are augmented with simple transformations such as cropping, rotation, and blurring. The augmented scans are input to the self-supervised model, which is trained to distinguish augmented images that originate from the same source image from augmented images that originate from different images (ie, the pretext task). After this pretraining, the encoders are transferred to a supervised learning model, which is trained on a small set of labeled scans to produce segmentation masks. Pretraining with a self-supervised task has been shown to improve the performance of supervised learning models.
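The caption does not name the loss used for the contrastive pretext task. One widely used choice is the normalized temperature-scaled cross-entropy (NT-Xent, or InfoNCE) loss popularized by SimCLR. The NumPy sketch below is illustrative only and assumes that the encoder has already mapped each augmented view to an embedding vector. The function name `nt_xent_loss`, the temperature value, and the noisy "views" in the usage section are hypothetical stand-ins, not the method depicted in the figure. Two views of the same scan form the positive pair. Every other view in the batch serves as a negative.

```python
import numpy as np


def nt_xent_loss(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.5) -> float:
    """NT-Xent (InfoNCE) contrastive loss for a batch of paired embeddings.

    Assumption: z1[i] and z2[i] are encoder embeddings of two augmented views
    of the same scan i; all other views in the batch act as negatives.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)              # (2N, d) all views
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # L2-normalize: dot product = cosine similarity
    sim = z @ z.T / temperature                       # (2N, 2N) similarity logits
    np.fill_diagonal(sim, -np.inf)                    # never compare a view with itself
    # Index of each view's positive partner: view i pairs with view i + N (and vice versa).
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable log-softmax over each row, evaluated at the positive entry.
    row_max = sim.max(axis=1, keepdims=True)
    log_den = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob_pos = sim[np.arange(2 * n), pos] - log_den
    return float(-log_prob_pos.mean())


# Usage: hypothetical embeddings in which two "views" are noisy copies of one scan embedding.
rng = np.random.default_rng(0)
scans = rng.normal(size=(8, 16))
view_a = scans + 0.05 * rng.normal(size=scans.shape)
view_b = scans + 0.05 * rng.normal(size=scans.shape)
aligned = nt_xent_loss(view_a, view_b)                        # positives truly match
shuffled = nt_xent_loss(view_a, rng.permutation(view_b))      # positives mostly mismatched
print(aligned < shuffled)
```

The loss is lower when each view's designated partner really comes from the same source image. Minimizing it therefore pushes the encoder toward the behavior the caption describes. In a full pipeline, the gradient of this loss would update the encoder, which is then reused for segmentation.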