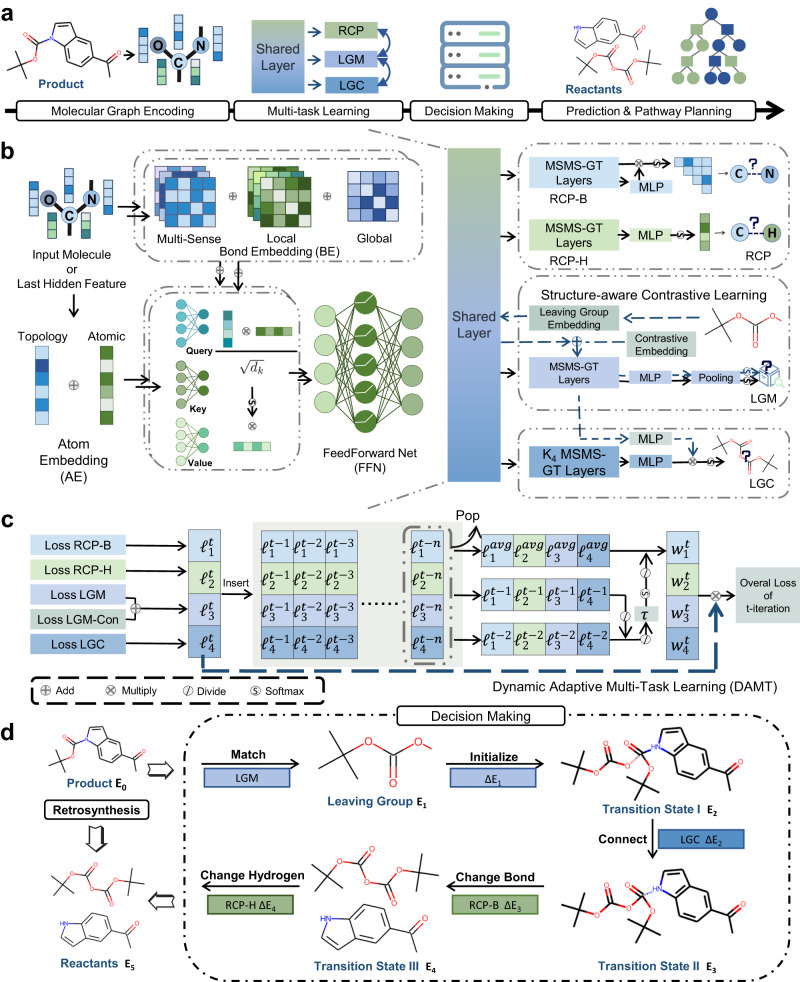

Fig. 1. Overview of RetroExplainer.

a The pipeline of RetroExplainer. We formulate the whole process as four distinct phases: (1) molecular graph encoding, (2) multi-task learning, (3) decision-making, and (4) prediction or multi-step pathway planning. b The architecture of the multi-sense and multi-scale Graph Transformer (MSMS-GT) encoder and the retrosynthetic scoring functions. Multi-sense bond embeddings with both local and global receptive fields are integrated and injected as attention biases during self-attention. From the resulting shared features, three modules evaluate the probabilities of five retrosynthetic events: the reaction center predictor (RCP), comprising a bond change predictor (RCP-B) and a hydrogen change evaluator (RCP-H); the leaving group matcher (LGM), enhanced with an additional contrastive learning strategy; and the leaving group connector (LGC). MLP, multi-layer perceptron. c The dynamic adaptive multi-task learning (DAMT) algorithm, which derives a set of loss weights from the descent rates and value ranges of the losses so that the five evaluators are optimized equally. The panel annotates the loss score of each kind at each iteration; the average of each type of loss over a loss queue of recent iterations, where the queue length sets how many past iterations are taken into consideration; the weight obtained for each kind of loss at each iteration; and a temperature coefficient. d The chemical-mechanism-like decision process. We designed a transparent decision process with six stages, assessed by the five evaluators to obtain energy scores; the panel also marks the gap between two of these energy scores.
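The DAMT step in panel c can be illustrated with a small sketch. The caption only states that the weights depend on the descent rates and value ranges of the losses, are computed over a fixed-length loss queue, and use a temperature coefficient; the exact formula is not given here. The code below therefore assumes a softmax over descent rates in the spirit of Dynamic Weight Average (DWA), divided by each loss's queue average so that losses of different magnitude contribute comparably. The class `DynamicLossWeighter` and its parameters (`queue_len`, `temperature`, `eps`) are hypothetical names, not RetroExplainer's API.

```python
import math
from collections import deque


class DynamicLossWeighter:
    """Hypothetical DAMT-style loss weighting (assumed DWA-like formula, not the published one)."""

    def __init__(self, num_tasks: int, queue_len: int = 5,
                 temperature: float = 2.0, eps: float = 1e-8) -> None:
        self.num_tasks = num_tasks
        self.temperature = temperature
        self.eps = eps
        # One bounded queue of recent loss values per task; the oldest values fall off.
        self.queues = [deque(maxlen=queue_len) for _ in range(num_tasks)]

    def update(self, losses: list[float]) -> None:
        """Record the latest scalar loss of every task."""
        if len(losses) != self.num_tasks:
            raise ValueError("expected one loss per task")
        for queue, value in zip(self.queues, losses):
            queue.append(float(value))

    def weights(self) -> list[float]:
        """Return one weight per task; the weights sum to num_tasks."""
        # Until each queue holds at least two values there is no trend yet: use uniform weights.
        if any(len(q) < 2 for q in self.queues):
            return [1.0] * self.num_tasks
        means, scores = [], []
        for queue in self.queues:
            mean = sum(queue) / len(queue) + self.eps  # assumed proxy for the loss's value range
            rate = queue[-1] / mean                    # descent rate: below 1 means still falling
            means.append(mean)
            scores.append(rate / self.temperature)     # temperature sharpens or flattens the softmax
        top = max(scores)  # shift for numerical stability; cancels after normalization
        raw = [math.exp(s - top) / m for s, m in zip(scores, means)]
        total = sum(raw)
        return [self.num_tasks * r / total for r in raw]

    def combined_loss(self, losses: list[float]) -> float:
        """Weighted sum of task losses using the current weights."""
        return sum(w * l for w, l in zip(self.weights(), losses))


if __name__ == "__main__":
    weighter = DynamicLossWeighter(num_tasks=2, queue_len=3, temperature=1.0)
    for step_losses in ([1.0, 10.0], [1.0, 10.0], [1.0, 10.0]):
        weighter.update(step_losses)
    print(weighter.weights())
```

In this sketch, a loss that is decreasing slowly relative to its recent average receives a larger weight, and dividing by the queue average means that two losses with the same trend but a tenfold difference in magnitude end up contributing equally to the weighted sum, which is one way to read "optimize the five evaluators equally".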