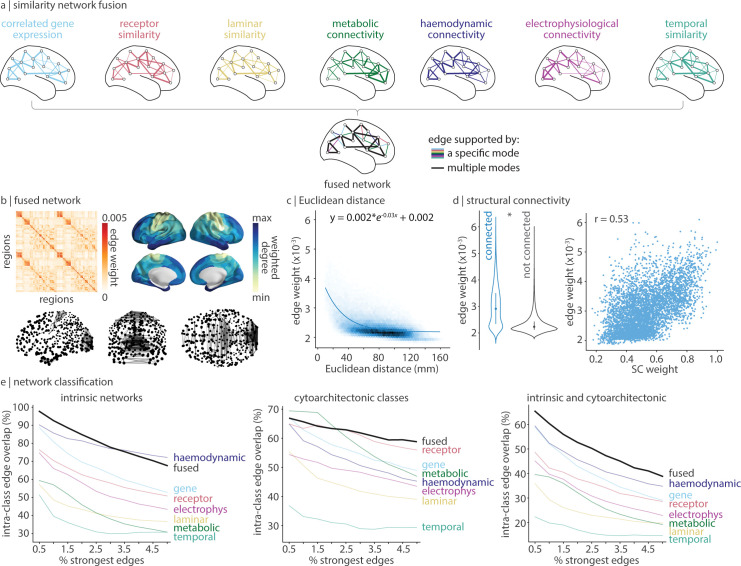

Fig 6. Network fusion.

SNF was applied to all 7 connectivity modes to construct a single integrated network [92,169]. (a) Toy example of SNF. SNF iteratively combines the 7 connectivity modes in a manner that gives more weight to edges between observations that are consistently high-strength across data types (black edges). (b) The fused network. We show the matrix representation of the network (left), the top 0.5% strongest edges of the network (bottom), and the weighted degree of each brain region (right). Note that the edge weights in the fused network are a byproduct of the iterative multiplication and normalization steps (see Methods for details) and can therefore become very small. Greater edge magnitude represents greater similarity (no negative edges exist, by design). (c) Edge weight decreases exponentially with Euclidean distance. (d) Edges with an underlying structural connection have greater edge weight than edges without one, against a degree- and edge-length-preserving null model [56] (left), and edge weight is correlated with structural connectivity (right). (e) For a varying threshold of strongest edges (0.5%–5% in 0.5% intervals), we calculate the proportion of edges that connect 2 regions within the same intrinsic network (left), cytoarchitectonic class (middle), and the union of intrinsic networks and cytoarchitectonic classes (right). The data underlying this figure can be found at https://github.com/netneurolab/hansen_many_networks. SNF, similarity network fusion.
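
The calculation in panel (e) can be illustrated with a minimal sketch. This is not the authors' code: the function name `within_class_proportion`, its arguments, and the choice to count each undirected edge once from the upper triangle are assumptions made for illustration. It keeps the strongest `pct` percent of edges in a symmetric weight matrix and reports the fraction of them whose 2 endpoints share a label (for example, an intrinsic network or cytoarchitectonic class assignment).

```python
import numpy as np

def within_class_proportion(W, labels, pct):
    """Fraction of the top `pct` percent strongest edges joining same-label regions.

    W      : (n, n) symmetric weight matrix (hypothetical fused network)
    labels : length-n array of class assignments (hypothetical partition)
    pct    : percentage of strongest edges to keep, e.g. 0.5 for 0.5%
    """
    W = np.asarray(W, dtype=float)
    labels = np.asarray(labels)
    # Count each undirected edge once by taking the strict upper triangle.
    rows, cols = np.triu_indices_from(W, k=1)
    weights = W[rows, cols]
    # Number of edges to keep; always keep at least one edge.
    n_keep = max(1, int(round(weights.size * pct / 100.0)))
    # Indices of the n_keep strongest edges.
    top = np.argsort(weights)[::-1][:n_keep]
    same = labels[rows[top]] == labels[cols[top]]
    return float(same.mean())

def sweep_thresholds(W, labels, start=0.5, stop=5.0, step=0.5):
    """Evaluate the proportion over thresholds start..stop (inclusive) in `step` increments."""
    pcts = np.arange(start, stop + step / 2, step)
    return pcts, np.array([within_class_proportion(W, labels, p) for p in pcts])
```

Calling `sweep_thresholds(W, labels)` with the default arguments evaluates the 10 thresholds from 0.5% to 5% described in the caption. Comparing the result against a null distribution is a separate step that this sketch does not cover.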