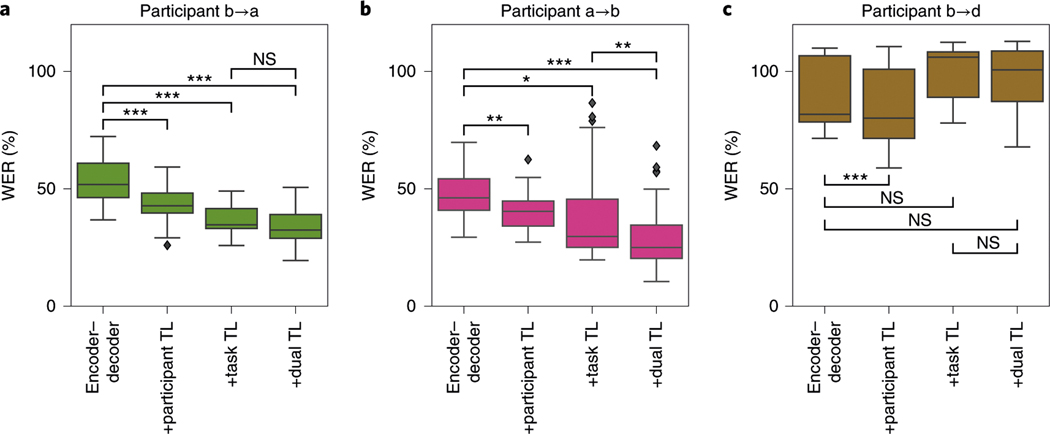

Fig. 3 | WER of the decoded MoCHA-1 sentences for encoder–decoder models trained with transfer learning.

Each panel corresponds to a participant (color code as in Fig. 2). The four boxes in each panel show WER without transfer learning (‘encoder–decoder’, as in the final points in Fig. 2b), with cross-participant transfer learning (+participant TL), with training on sentences outside the test set (+task TL) and with both forms of transfer learning (+dual TL). The boxes and whiskers show, respectively, the quartiles and the extent (excluding outliers, which are shown explicitly as black diamonds) of the distribution of WERs across networks trained independently from scratch and evaluated on randomly selected held-out blocks. Significance, indicated by asterisks (*P < 0.05; **P < 0.005; ***P < 0.0005; NS, not significant), was computed with a one-sided Wilcoxon signed-rank test and Holm–Bonferroni corrected for 14 comparisons: the 12 shown here plus two others noted in the text. Exact values appear in Supplementary Table 6. a, Participant a, with pretraining on participant b/pink (second and fourth bars). b, Participant b, with pretraining on participant a/green (second and fourth bars). c, Participant d, with pretraining on participant b/pink (second and fourth bars).
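The caption states that the P values were Holm–Bonferroni corrected over 14 comparisons and then mapped to asterisk levels. A minimal Python sketch of that step-down correction and the asterisk mapping follows. It assumes the raw one-sided Wilcoxon signed-rank P values have already been computed elsewhere (for example with `scipy.stats.wilcoxon` and its `alternative` argument). The function names `holm_bonferroni` and `significance_stars` are hypothetical and are not taken from the authors' code.

```python
from typing import List


def holm_bonferroni(p_values: List[float]) -> List[float]:
    """Return Holm-adjusted P values in the same order as the input (sketch)."""
    m = len(p_values)
    # Indices of the raw P values, sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest P value (k = rank + 1) is scaled by m - k + 1,
        # capped at 1.
        scaled = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: adjusted values never decrease with rank.
        running_max = max(running_max, scaled)
        adjusted[idx] = running_max
    return adjusted


def significance_stars(p: float) -> str:
    """Map a corrected P value to the caption's asterisk convention."""
    if p < 0.0005:
        return "***"
    if p < 0.005:
        return "**"
    if p < 0.05:
        return "*"
    return "NS"


if __name__ == "__main__":
    # Hypothetical raw P values for illustration only (not the paper's data).
    raw = [0.01, 0.04, 0.03]
    for p_raw, p_adj in zip(raw, holm_bonferroni(raw)):
        print(f"raw={p_raw:.3f} adjusted={p_adj:.3f} {significance_stars(p_adj)}")
```

Holm's method differs from the plain Bonferroni correction, which would multiply every P value by the number of comparisons m. Holm instead sorts the values and scales the k-th smallest by (m − k + 1), so it is uniformly at least as powerful while still controlling the family-wise error rate. The running maximum keeps the adjusted values monotone in rank.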