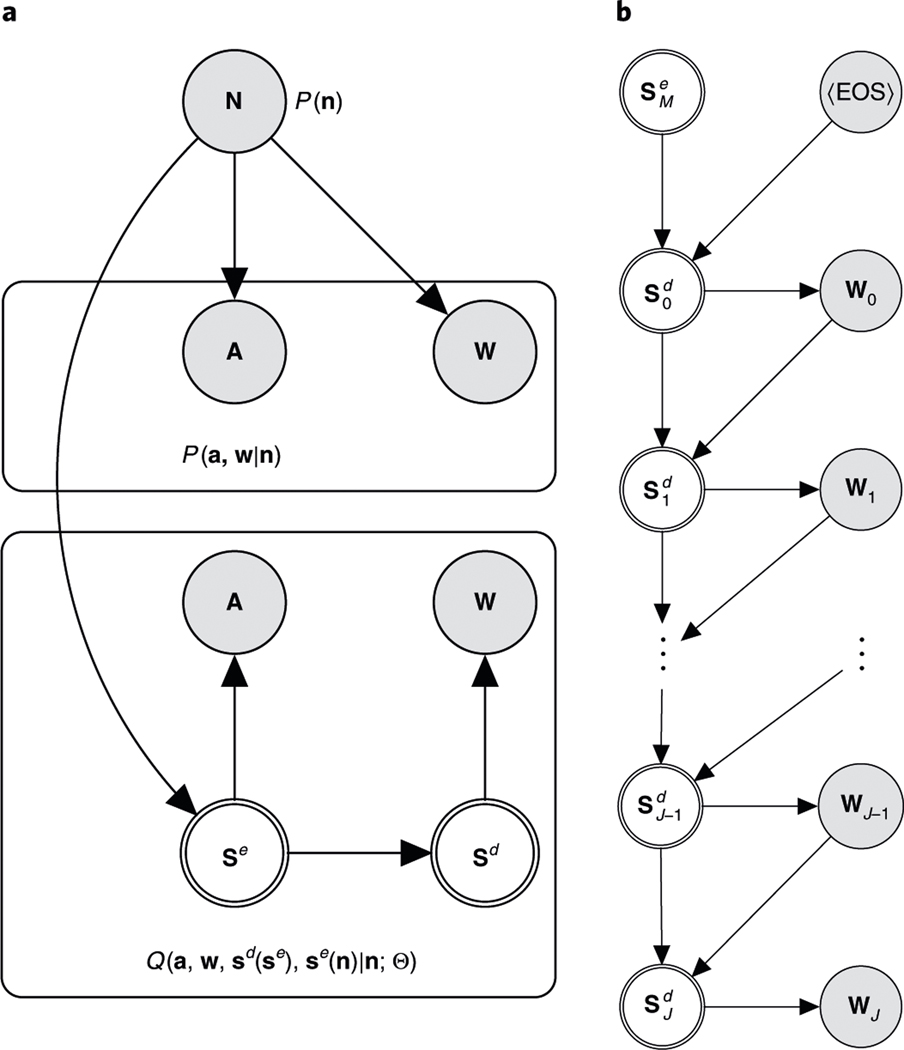

Fig. 6 | Graphical model for the decoding process.

Circles represent random variables; doubled circles are deterministic functions of their inputs. a, The true generative process (above) and the encoder–decoder model (below). The true relationship between neural activity ($N$), the speech-audio signal ($A$) and word sequences ($W$), denoted $P(A, W \mid N)$, is unknown (although we have drawn the graph to suggest that $W$ and $N$ are independent given $A$). However, we can observe samples from all three variables, which we use to fit the conditional model, $Q(A, W \mid N)$, which is implemented as a neural network. The model separates the encoder states, $H^e$, which directly generate the audio sequences, from the decoder states, $H^d$, which generate the word sequences. During training, model parameters are changed so as to make the model distribution, $Q$, over $A$ and $W$ look more similar to the true distribution, $P$. b, Detail of the graphical model for the decoder, unrolled vertically in sequence steps. Each decoder state is computed deterministically from its predecessor and the previously generated word or (in the case of the zeroth state) the final encoder state and an initialization token.
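
The unrolled decoder recursion described in panel b can be illustrated with a minimal sketch. It is a toy, not the network used in the paper: the function names, the `tanh` update, the weight layout and the `INIT_TOKEN` index are all hypothetical, and the most probable word is picked at each step (greedy decoding) in place of sampling from the word distribution.

```python
import math

INIT_TOKEN = 0  # hypothetical vocabulary index for the initialization token


def step(state, token, w_state, w_token):
    """Deterministic update: next state from the previous state and a token."""
    return [math.tanh(w_state * s + w_token[token][i]) for i, s in enumerate(state)]


def softmax(scores):
    """Turn raw scores into a probability distribution over words."""
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def word_probs(state, w_out):
    """Distribution over the vocabulary given the current decoder state."""
    return softmax([sum(w * s for w, s in zip(row, state)) for row in w_out])


def greedy_decode(final_encoder_state, w_state, w_token, w_out, max_steps):
    # Zeroth decoder state: computed from the final encoder state
    # and the initialization token.
    state = step(final_encoder_state, INIT_TOKEN, w_state, w_token)
    words = []
    for _ in range(max_steps):
        probs = word_probs(state, w_out)
        # Assumption: take the most probable word rather than sampling.
        word = max(range(len(probs)), key=probs.__getitem__)
        words.append(word)
        # Later states: computed from the predecessor state
        # and the word just generated.
        state = step(state, word, w_state, w_token)
    return words


# Toy parameters: state dimension 2, vocabulary of 3 words.
W_STATE = 0.5
W_TOKEN = [[0.1, -0.2], [0.3, 0.4], [-0.5, 0.2]]
W_OUT = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

decoded = greedy_decode([0.2, -0.1], W_STATE, W_TOKEN, W_OUT, max_steps=4)
```

Because every state is a deterministic function of its inputs, the only place randomness could enter is the choice of each word; fixing that choice, as done here, makes the whole unrolled sequence reproducible.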