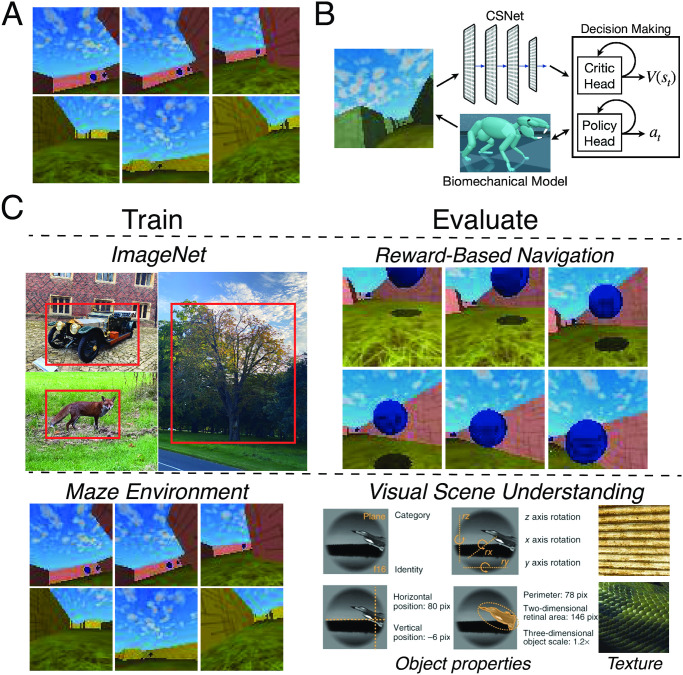

Fig 6. Evaluating the generality of learned visual representations.

A. Each row shows an example of an episode used to train the reinforcement learning (RL) policy in an offline fashion [56]. The episodes used to train the virtual rodent (adapted from Merel et al. [53]) were previously generated and are part of a larger suite of RL tasks for benchmarking algorithms [57]. In this task (“DM Locomotion Rodent”), the goal of the agent is to navigate the maze and collect as many rewards as possible (blue orbs shown in the first row). B. A schematic of the RL agent. The egocentric visual input is fed into the visual backbone of the model, which is fixed to be the first four convolutional layers of either the contrastive or the supervised variant of AlexNet. The output of the visual encoder is then concatenated with the virtual rodent’s proprioceptive inputs before being fed into the (recurrent) critic and policy heads. The parameters of the visual encoder are frozen, whereas the parameters of the critic and policy heads are trained. Virtual rodent schematic from Fig 1B of Merel et al. [53]. C. A schematic of the out-of-distribution generalization procedure. Visual encoders are trained in either a supervised or a self-supervised manner on ImageNet [19] or the Maze environment [57], and then evaluated either on reward-based navigation or on datasets consisting of object properties (category, pose, position, and size) from Hong et al. [55] and of different textures [58].
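The data flow in panel B can be illustrated with a minimal PyTorch sketch. It is not the authors' implementation: the class name `FrozenEncoderAgent`, the layer widths (taken from torchvision's AlexNet layout), the pooling of encoder features to a 256-dimensional vector, the use of separate LSTM cores for each head, and all dimensions are assumptions made for illustration. In practice the encoder would be loaded with pretrained contrastive or supervised weights rather than randomly initialized.

```python
import torch
import torch.nn as nn


class FrozenEncoderAgent(nn.Module):
    """Hypothetical sketch: frozen conv encoder + trainable recurrent heads."""

    def __init__(self, proprio_dim=32, hidden_dim=64, action_dim=8):
        super().__init__()
        # First four conv layers in an AlexNet-style layout (assumed widths).
        # Pretrained weights would be loaded here; omitted in this sketch.
        self.encoder = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2), nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, padding=2), nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, padding=1), nn.ReLU(),
            nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # assumed pooling to one vector per frame
            nn.Flatten(),
        )
        # The visual encoder is not trained.
        for p in self.encoder.parameters():
            p.requires_grad = False

        in_dim = 256 + proprio_dim
        # Recurrent policy and critic heads (trainable).
        self.policy_rnn = nn.LSTM(in_dim, hidden_dim, batch_first=True)
        self.policy_head = nn.Linear(hidden_dim, action_dim)
        self.critic_rnn = nn.LSTM(in_dim, hidden_dim, batch_first=True)
        self.critic_head = nn.Linear(hidden_dim, 1)

    def forward(self, frames, proprio):
        # frames: (batch, time, 3, H, W); proprio: (batch, time, proprio_dim)
        b, t = frames.shape[:2]
        with torch.no_grad():
            feats = self.encoder(frames.reshape(b * t, *frames.shape[2:]))
        feats = feats.reshape(b, t, -1)
        # Concatenate visual features with proprioceptive inputs.
        x = torch.cat([feats, proprio], dim=-1)
        policy_hidden, _ = self.policy_rnn(x)
        critic_hidden, _ = self.critic_rnn(x)
        return self.policy_head(policy_hidden), self.critic_head(critic_hidden)


agent = FrozenEncoderAgent()
frames = torch.zeros(2, 3, 3, 64, 64)   # 2 episodes, 3 timesteps each
proprio = torch.zeros(2, 3, 32)
actions, values = agent(frames, proprio)
print(actions.shape, values.shape)
```

Only the parameters of the two heads would be passed to the optimizer, for example via `[p for p in agent.parameters() if p.requires_grad]`, so the encoder stays identical across training conditions.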