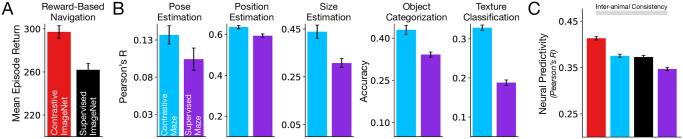

Fig 7. Self-supervised, contrastive visual representations better support transfer performance on downstream, out-of-distribution tasks.

Models trained with a contrastive objective (on either ImageNet or the egocentric maze inputs; red and blue, respectively) transfer better to out-of-distribution downstream tasks than models trained with supervision (i.e., supervised on labels or on rewards; black and purple, respectively). A. Models trained on ImageNet, tested on reward-based navigation. B. Models trained contrastively on egocentric maze inputs (“Contrastive Maze”, blue) or supervised on rewards (i.e., reward-based navigation; “Supervised Maze”, purple), tested on visual scene understanding tasks: pose, position, and size estimation, and object and texture classification. C. Median and s.e.m. of neural predictivity across units in the Neuropixels dataset.