Abstract

This paper presents Integrated Information Theory (IIT) 4.0. IIT aims to account for the properties of experience in physical (operational) terms. It identifies the essential properties of experience (axioms), infers the necessary and sufficient properties that its substrate must satisfy (postulates), and expresses them in mathematical terms. In principle, the postulates can be applied to any system of units in a state to determine whether it is conscious, to what degree, and in what way. IIT offers a parsimonious explanation of empirical evidence, makes testable predictions concerning both the presence and the quality of experience, and permits inferences and extrapolations. IIT 4.0 incorporates several developments of the past ten years, including a more accurate formulation of the axioms as postulates and mathematical expressions, the introduction of a unique measure of intrinsic information that is consistent with the postulates, and an explicit assessment of causal relations. By fully unfolding a system’s irreducible cause–effect power, the distinctions and relations specified by a substrate can account for the quality of experience.

Author summary

As a theory of consciousness, IIT aims to answer two questions: 1) Why is experience present vs. absent? and 2) Why do specific experiences feel the way they do? The theory’s starting point is the existence of experience. IIT then aims to account for phenomenal existence and its essential properties in physical terms. It concludes that a substrate (a set of interacting units) can support consciousness if it can take and make a difference for itself (intrinsicality), select a specific cause and effect as an irreducible whole with a definite border and grain, and specify a structure of causes and effects through subsets of its units. To that end, IIT provides a mathematical formalism that can be employed to “unfold” the substrate’s cause–effect structure. This allows IIT to answer the two questions above: 1) Experience is present for any substrate that fulfills the essential properties of existence, and 2) specific experiences feel the way they do because of the specific cause–effect structure specified by their substrates. The theory is consistent with neurological data, and some of its core principles have been successfully tested empirically.

Introduction

A scientific theory of consciousness should account for experience, which is subjective, in objective terms [1]. Being conscious—having an experience—is understood to mean that “there is something it is like to be” [2]: something it is like to see a blue sky, hear the ocean roar, dream of a friend’s face, imagine a melody flow, contemplate a choice, or reflect on the experience one is having.

IIT aims to account for phenomenal properties—the properties of experience—in physical terms. IIT’s starting point is experience itself rather than its behavioral, functional, or neural correlates [1]. Furthermore, in IIT “physical” is meant in a strictly operational sense—in terms of what can be observed and manipulated.

The starting point of IIT is the existence of an experience, which is immediate and irrefutable [3]. From this “zeroth” axiom, IIT sets out to identify the essential properties of consciousness—those that are immediate and irrefutably true of every conceivable experience. These are IIT’s five axioms of phenomenal existence: every experience is for the experiencer (intrinsicality), specific (information), unitary (integration), definite (exclusion), and structured (composition).

Unlike phenomenal existence, which is immediate and irrefutable (an axiom), physical existence is an explanatory construct (a postulate), and it is assessed operationally (from within consciousness): in physical terms, to be is to have cause–effect power. In other words, something can be said to exist physically if it can “take and make a difference”—bear a cause and produce an effect—as judged by a conscious observer/manipulator.

The next step of IIT is to formulate the essential phenomenal properties (the axioms) in terms of corresponding physical properties (the postulates). This formulation is an “inference to a good explanation” and rests on basic assumptions such as realism, physicalism, and atomism (see Box 1: Methodological guidelines of IIT). If IIT is correct, the substrate of consciousness (see (1) in S1 Notes), beyond having cause–effect power (existence), must satisfy all five essential phenomenal properties in physical terms: its cause–effect power must be for itself (intrinsicality), specific (information), unitary (integration), definite (exclusion), and structured (composition).

Box 1. Methodological guidelines of IIT

Inference to a good explanation

We should generally assume that an explanation is good if it can account for a broad set of facts (scope), does so in a unified manner (synthesis), can explain facts precisely (specificity), is internally coherent (self-consistency), is coherent with our overall understanding of things (system consistency), is simpler than alternatives (simplicity), and can make testable predictions (scientific validation). For example, IIT 4.0 aims at expressing the postulates of intrinsicality, information, integration, and exclusion in a self-consistent manner when applied to systems, causal distinctions, and relations (see formulas).

Realism

We should assume that something exists (and persists) independently of our own experience. This is a much better hypothesis than solipsism, which explains nothing and predicts nothing. Although IIT starts from our own phenomenology, it aims to account for the many regularities of experience in a way that is fully consistent with realism.

Operational physicalism

To assess what exists independently of our own experience, we should employ an operational criterion: we should systematically observe and manipulate a substrate’s units and determine that they can indeed take and make a difference in a way that is reliable. Doing so demonstrates a substrate’s cause–effect power—the signature of physical existence. Ideally, cause–effect power is fully captured by a substrate’s transition probability matrix (TPM) (1). This assumption is embedded in IIT’s zeroth postulate.

Operational reductionism (“atomism”)

Ideally, we should account for what exists physically in terms of the smallest units we can observe and manipulate, as captured by unit TPMs. Doing so would leave nothing unaccounted for. IIT assumes that, in principle, it should be possible to account for everything purely in terms of cause–effect power—cause–effect power “all the way down” to conditional probabilities between atomic units (see (5) in S1 Notes). Eventually, this would leave neither room nor need to assume intrinsic properties or laws.

Intrinsic perspective

When accounting for experience itself in physical terms, existence should be evaluated from the intrinsic perspective of an entity—what exists for the entity itself—not from the perspective of an external observer. This assumption is embedded in IIT’s postulate of intrinsicality and has several consequences. One is that, from the intrinsic perspective, the quality and quantity of existence must be observer-independent and cannot be arbitrary. For instance, information in IIT must be relative to the specific state the entity is in, rather than an average of states as assessed by an external observer. Similarly, it should be evaluated based on the uniform distribution of possible states, as captured by the entity’s TPM (1), rather than on an observed probability distribution. By the same token, units outside the entity should be treated as background conditions that do not contribute directly to what the system is. The intrinsic perspective also imposes a tension between expansion and dilution (see below and [12, 14]): from the intrinsic perspective of a system (or a mechanism within the system), having more units may increase its informativeness (cause–effect power measured as deviation from chance), while at the same time diluting its selectivity (ability to concentrate cause–effect power over a specific state).

On this basis, IIT proposes a fundamental explanatory identity: an experience is identical to the cause–effect structure unfolded from a maximal substrate (defined below). Accordingly, all the specific phenomenal properties of any experience must have a good explanation in terms of the specific physical properties of the corresponding cause–effect structure, with no additional ingredients.

Based again on “inferences to a good explanation” (see Box 1), IIT formulates the postulates in a mathematical framework that is in principle applicable to general models of interacting units (but see (2) in S1 Notes). A mathematical framework is needed (a) to evaluate whether the theory is self-consistent and compatible with our overall knowledge about the world, (b) to make specific predictions regarding the quality and quantity of our experiences and their substrate within the brain, and (c) to extrapolate from our own consciousness to infer the presence (or absence) and nature of consciousness in beings different from ourselves.

Ultimately, the theory should account for why our consciousness depends on certain portions of the world and their state, such as certain regions of the brain and not others, and for why it fades during dreamless sleep, even though the brain remains active. It should also account for why an experience feels the way it does—why the sky feels extended, why a melody feels flowing in time, and so on. Moreover, the theory makes several predictions concerning both the presence and the quality of experience, some of which have been and are being tested empirically [4].

While the main tenets of the theory have remained the same, its formal framework has been progressively refined and extended [5–8]. Compared to IIT 1.0 [5, 6], 2.0 [7, 9], and 3.0 [8], IIT 4.0 presents a more complete, self-consistent formulation and incorporates several recent advances [10–13]. Chief among them are a more accurate formulation of the axioms as postulates and mathematical expressions, the introduction of an Intrinsic Difference (ID) measure [12, 14] that is uniquely consistent with IIT’s postulates, and the explicit assessment of causal relations [11].

In what follows, after introducing IIT’s axioms and postulates, we provide its updated mathematical formalism. In the “Results and discussion” section, we apply the mathematical framework of IIT to representative examples and discuss some of their implications. The article is meant as a reference for the theory’s mathematical formalism, a concise demonstration of its internal consistency, and an illustration of how a substrate’s cause–effect structure is unfolded computationally. A discussion of the theory’s motivation, its axioms and postulates, and its assumptions and implications can be found in a forthcoming book (see (3) in S1 Notes) and wiki [15] as well as in several publications [1, 16–21]. A survey of the explanatory power and experimental predictions of IIT can be found in [4]. The way IIT’s analysis of cause–effect power can be applied to actual causation, or “what caused what,” is presented in [10].

From phenomenal axioms to physical postulates

Axioms of phenomenal existence

That experience exists—that “there is something it is like to be”—is immediate and irrefutable, as everybody can confirm, say, upon awakening from dreamless sleep. Phenomenal existence is immediate in the sense that my experience is simply there, directly rather than indirectly: I do not need to infer its existence from something else. It is irrefutable because the very doubting that my experience exists is itself an experience that exists—the experience of doubting [1, 3]. Thus, to claim that my experience does not exist is self-contradictory or absurd. The existence of experience is IIT’s zeroth axiom.

Existence. Experience exists: there is something.

Traditionally, an axiom is a statement that is assumed to be true, cannot be inferred from any other statement, and can serve as a starting point for inferences. The existence of experience is the ultimate axiom—the starting point for everything, including logic and physics.

On this basis, IIT proceeds by considering whether experience—phenomenal existence—has some axiomatic or essential properties, properties that are immediate and irrefutably true of every conceivable experience. Drawing on introspection and reason, IIT identifies the following five:

Intrinsicality. Experience is intrinsic: it exists for itself.

Information. Experience is specific: it is this one.

Integration. Experience is unitary: it is a whole, irreducible to separate experiences.

Exclusion. Experience is definite: it is this whole.

Composition. Experience is structured: it is composed of distinctions and the relations that bind them together, yielding a phenomenal structure that feels the way it feels.

To exemplify, if I awaken from dreamless sleep and experience the white wall of my room, my bed, and my body, the experience not only exists, immediately and irrefutably, but 1) it exists for me, not for something else, 2) it is specific (this one experience, not a generic one), 3) it is unitary (the left side is not experienced separately from the right side, and vice versa), 4) it is definite (it includes the visual scene in front of me—neither less, say, its left side only, nor more, say, the wall behind my head), 5) it is structured by distinctions (the wall, the bed, the body) and relations (the body is on the bed, the bed in the room), which make it feel the way it does and not some other way.

The axioms are not only immediately given, but they are irrefutably true of every conceivable experience. For example, once properly understood, the unity of experience cannot be refuted. Trying to conceive of an experience that were not unitary leads to conceiving of two separate experiences, each of which is unitary, which reaffirms the validity of the axiom. Even though each of the axioms spells out an essential property in its own right, the axioms must be considered together to properly characterize phenomenal existence.

IIT takes the above set of axioms to be complete: there are no further properties of experience that are essential. Other properties that might be considered as candidates for axiomatic status include space (experience typically takes place in some spatial frame), time (an experience usually feels like it flows from a past to a future), change (an experience usually transitions or flows into another), subject–object distinction (an experience seems to involve both a subject and an object), intentionality (experiences usually refer to something in the world, or at least to something other than the subject), a sense of self (many experiences include a reference to one’s body or even to one’s narrative self), figure–ground segregation (an experience usually includes some object and some background), situatedness (an experience is often bound to a time and a place), will (experience offers the opportunity for action), and affect (experience is often colored by some mood), among others. However, experiences lacking each of these candidate properties are conceivable—that is, conceiving of them does not lead to self-contradiction or absurdity. They are also achievable, as revealed by altered states of consciousness reached through dreaming, meditative practices, or drugs.

Postulates of physical existence

To account for the many regularities of experience (Box 1), it is a good inference to assume the existence of a world that persists independently of one’s experience (realism). From within consciousness, we can probe the physical existence of things outside of our experience operationally—through observations and manipulations. To be granted physical existence, something should have the power to “take a difference” (be affected) and “make a difference” (produce effects) in a reliable way (physicalism). IIT also assumes “operational reductionism,” which means that, ideally, to establish what exists in physical terms, one would start from the smallest units that can take and make a difference, so that nothing is left out (atomism).

By characterizing physical existence operationally as cause–effect power, IIT can proceed to formulate the axioms of phenomenal existence as postulates of physical existence. This establishes the requirements for the substrate of consciousness, where “substrate” is meant operationally as a set of units that can be observed and manipulated.

Existence. The substrate of consciousness can be characterized operationally by cause–effect power: its units must take and make a difference.

Building from this “zeroth” postulate, IIT formulates the five axioms in terms of postulates of physical existence that must be satisfied by the substrate of consciousness:

Intrinsicality. Its cause–effect power must be intrinsic: it must take and make a difference within itself.

Information. Its cause–effect power must be specific: it must be in this state and select this cause–effect state.

This state is the one with maximal intrinsic information (ii), a measure of the difference a system takes or makes over itself for a given cause state and effect state.

Integration. Its cause–effect power must be unitary: it must specify its cause–effect state as a whole set of units, irreducible to separate subsets of units.

Irreducibility is measured by integrated information (φ) over the substrate’s minimum partition.

Exclusion. Its cause–effect power must be definite: it must specify its cause–effect state as this whole set of units.

This is the set of units that is maximally irreducible, as measured by maximum φ (φ*). This set is called a maximal substrate, also known as a complex [8, 13].

Composition. Its cause–effect power must be structured: subsets of its units must specify cause–effect states over subsets of units (distinctions) that can overlap with one another (relations), yielding a cause–effect structure or Φ-structure (“Phi-structure”) that is the way it is.

Distinctions and relations, in turn, must also satisfy the postulates of physical existence: they must have cause–effect power, within the substrate of consciousness, in a specific, unitary, and definite way (they do not have components, being components themselves). They thus have an associated φ value. The Φ-structure unfolded from a complex corresponds to the quality of consciousness. The sum total of the φ values of the distinctions and relations that compose the Φ-structure measures its structure integrated information Φ (“big Phi,” “structure Phi”) and corresponds to the quantity of consciousness.

According to IIT, the physical properties characterized by the postulates are necessary and sufficient for an entity to be conscious. They are necessary because they are needed to account for the properties of experience that are essential, in the sense that it is inconceivable for an experience to lack any one of them. They are also sufficient because no additional property of experience is essential, in the sense that it is conceivable for an experience to lack that property. Thus, no additional physical property is a necessary requirement for being a substrate of consciousness.

The postulates of IIT have been and are being applied to account for the location of the substrate of consciousness in the brain [4] and for its loss and recovery in physiological and pathological conditions [22, 23].

The explanatory identity between experiences and Φ-structures

Having determined the necessary and sufficient conditions for a substrate to support consciousness, IIT proposes an explanatory identity: every property of an experience is accounted for in full by the physical properties of the Φ-structure unfolded from a maximal substrate (a complex) in its current state, with no further or “ad hoc” ingredients. That is, there must be a one-to-one correspondence between the way the experience feels and the way distinctions and relations are structured. Importantly, the identity is not meant as a correspondence between the properties of two separate things. Instead, the identity should be understood in an explanatory sense: the intrinsic (subjective) feeling of the experience can be explained extrinsically (objectively, i.e., operationally or physically) in terms of cause–effect power (see (4) in S1 Notes).

The explanatory identity has been applied to account for how space feels (spatial extendedness) and which neural substrates may account for it [11]. Ongoing work is applying the identity to provide a basic account of the feeling of temporal flow [24] and that of objects [25].

Overview of IIT’s framework

IIT 4.0 aims at providing a formal framework to characterize the cause–effect structure of a substrate in a given state by expressing IIT’s postulates in mathematical terms. In line with operational physicalism (Box 1), we characterize a substrate by the transition probability function of its constituting units.

On this basis, the IIT formalism first identifies sets of units that fulfill all required properties of a substrate of consciousness according to the postulates of physical existence. First, for a candidate system, we determine a maximal cause–effect state based on the intrinsic information (ii) that the system in its current state specifies over its possible cause states and effect states. We then determine the maximal substrate based on the integrated information (φs, “system phi”) of the maximal cause–effect state. To qualify as a substrate of consciousness, a candidate system must specify a maximum of integrated information (φs*) compared to all competing candidate systems with overlapping units.

The second part of the IIT formalism unfolds the cause–effect structure specified by a maximal substrate in its current state, its Φ-structure. To that end, we determine the distinctions and relations specified by the substrate’s subsets according to the postulates of physical existence. Distinctions are cause–effect states specified over subsets of substrate units (purviews) by subsets of substrate units (mechanisms). Relations are congruent overlaps among distinctions’ cause and/or effect states. Distinctions and relations are also characterized by their integrated information (φd, φr). The Φ-structure they compose corresponds to the quality of the experience specified by the substrate; the sum of their φd/r values corresponds to its quantity (Φ).
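As a rough illustration of the bookkeeping described above, the following sketch represents distinctions and relations as simple records and sums their φ values into Φ. It is a hypothetical data layout, not the paper's implementation: the class names, the field names, and all numerical values are assumptions made for illustration, and the φ values are supplied by hand rather than computed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Distinction:
    mechanism: frozenset  # subset of substrate units (the mechanism)
    cause: frozenset      # cause state over its purview, as (unit, state) pairs
    effect: frozenset     # effect state over its purview, as (unit, state) pairs
    phi: float            # phi_d, supplied by hand in this sketch

@dataclass(frozen=True)
class Relation:
    members: tuple        # the distinctions bound by this relation
    overlap: frozenset    # (unit, state) pairs shared by the members
    phi: float            # phi_r, supplied by hand in this sketch

def big_phi(distinctions, relations):
    """Sum of phi_d and phi_r over the whole structure."""
    return sum(d.phi for d in distinctions) + sum(r.phi for r in relations)

# Hypothetical values, chosen only to exercise the code.
d1 = Distinction(frozenset("A"), frozenset({("B", 1)}), frozenset({("B", 1)}), 0.2)
d2 = Distinction(frozenset("B"), frozenset({("A", -1)}), frozenset({("B", 1)}), 0.1)

# Intersecting (unit, state) pairs keeps only units that appear in the same state.
shared = d1.effect & d2.effect
r12 = Relation((d1, d2), shared, 0.05)

total = big_phi([d1, d2], [r12])
```

Storing purview states as sets of (unit, state) pairs means a plain set intersection only returns overlaps in which the shared units are in the same state, which is one simple way to read “congruent.”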

While IIT must still be considered as work in progress, having undergone successive refinements, IIT 4.0 is the first formulation of IIT that strives to characterize Φ-structures completely and to do so based on measures that satisfy the postulates uniquely. For a comparison of the updated framework with IIT 1.0, 2.0, and 3.0, see S2 Text.

Substrates, transition probabilities, and cause–effect power

IIT takes physical existence as synonymous with having cause–effect power, the ability to take and make a difference. Consequently, a substrate U with state space ΩU is operationally defined by its potential interactions, assessed in terms of conditional probabilities (physicalism, Box 1). We denote the complete transition probability function of a substrate U over a system update as

$$\mathcal{T}_U \equiv p(\bar{u} \mid u), \quad u, \bar{u} \in \Omega_U. \tag{1}$$

A substrate in IIT can be described as a stochastic system U = {U1, U2, …, Un} of n interacting units with state space ΩU and current state u ∈ ΩU. We define units in state u as a set of tuples, where each tuple contains the unit and the state of the unit, i.e., u = {(Ui, state(Ui)) : Ui ∈ U}. This allows us to define set operations over u that consider both the units and their states. ΩU is the set of all possible such tuple sets, corresponding to all the possible states of U. We assume that the system updates in discrete steps, that the state space ΩU is finite, and that the individual random variables Ui ∈ U are conditionally independent from each other given the preceding state of U:

$$p(\bar{u} \mid u) = \prod_{i=1}^{n} p(\bar{u}_i \mid u). \tag{2}$$

Finally, we assume a complete description of the substrate, which means that we can determine the conditional probabilities in (2) for every system state, with $p(\bar{u} \mid u) = p(\bar{u} \mid do(u))$ [10, 26–28], where the “do-operator” do(u) indicates that u is imposed by intervention. This implies that U must correspond to a causal network [10], and that the transition probability function $\mathcal{T}_U$ (1) is a transition probability matrix (TPM) of size |ΩU| (see (6) in S1 Notes).

The TPM $\mathcal{T}_U$, which forms the starting point of IIT’s analysis, serves as an overall description of a system’s causal evolution under all possible interventions: what is the probability that the system will transition into each of its possible states upon being initialized into every possible state (Fig 1)? (Notably, there is no additional role for intrinsic physical properties or laws of nature.) In practice, a causal model will be neither complete nor atomic (capturing the smallest units that can be observed and manipulated), but will capture the relevant features of what we are trying to explain and predict (see (7) in S1 Notes).
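The conditional-independence assumption in (2) means that a state-by-state TPM can be assembled from unit-wise conditional probabilities. The sketch below illustrates this for binary units labeled −1/+1 as in Fig 1. It is a minimal illustration under stated assumptions, not the authors’ implementation: the helper names `all_states` and `build_tpm` and the two toy unit functions are hypothetical.

```python
from itertools import product

STATES = (-1, 1)  # binary unit states, labeled as in Fig 1

def all_states(n):
    """Enumerate all joint states of n binary units."""
    return list(product(STATES, repeat=n))

def build_tpm(unit_probs, n):
    """Assemble p(next | do(current)) as a product of unit-wise probabilities.

    unit_probs[i](u) is a hypothetical function returning the probability
    that unit i is +1 at the next step, given the current joint state u.
    """
    tpm = {}
    for u in all_states(n):
        p_on = [f(u) for f in unit_probs]
        row = {}
        for nxt in all_states(n):
            p = 1.0
            for i, s in enumerate(nxt):
                p *= p_on[i] if s == 1 else 1.0 - p_on[i]
            row[nxt] = p
        tpm[u] = row
    return tpm

# Toy example (assumed): each unit noisily copies the other.
unit_probs = [
    lambda u: 0.9 if u[1] == 1 else 0.1,
    lambda u: 0.8 if u[0] == 1 else 0.2,
]
tpm = build_tpm(unit_probs, n=2)
```

Each row of `tpm` is a probability distribution over next states, so the table has |ΩU| rows and |ΩU| columns, here 4 by 4.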

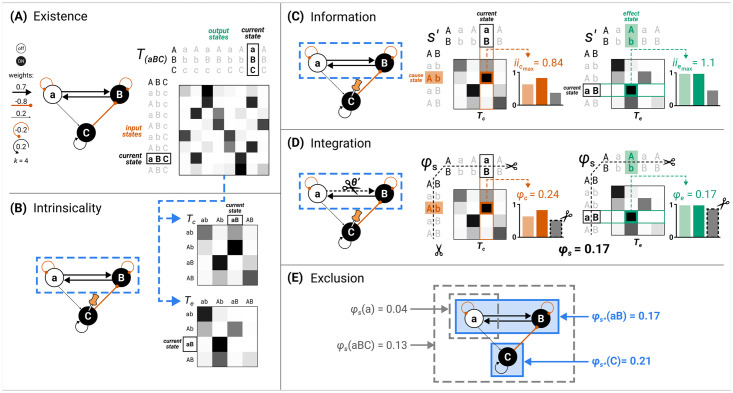

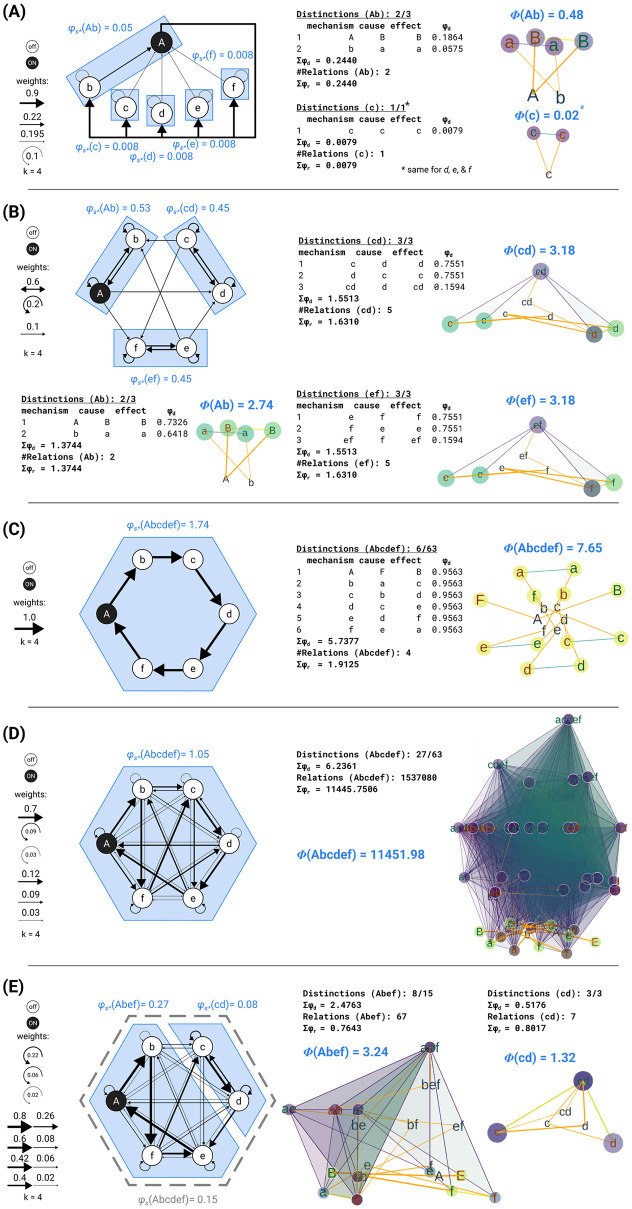

Fig 1. Identifying substrates of consciousness through the postulates of existence, intrinsicality, information, integration, and exclusion.

(A) The substrate S = aBC in state (−1, 1, 1) (lowercase letters for units indicate state “−1,” uppercase letters state “+1”) is the starting point for applying the postulates. The substrate updates its state according to the depicted transition probability matrix (TPM) (gray shading indicates probability value from white (p = 0) to black (p = 1); each unit follows a logistic equation (see “Results” for definition) with k = 4.0 and connection weights as indicated in the causal model). Existence requires that the substrate must have cause–effect power, meaning that the TPM among substrate states must differ from chance. (B) Intrinsicality requires that a candidate substrate, for example, units aB, has cause–effect power over itself. Units outside the candidate substrate (in this case, unit C) are treated as background conditions. The corresponding cause and effect TPMs (Tc and Te) of system aB are depicted on the right. (C) Information requires that the candidate substrate aB selects a specific cause–effect state (s′). This is the cause state (red) and effect state (green) for which intrinsic information (ii) is maximal. Bar plots on the right indicate the three probability terms relevant for computing iic (7) and iie (5): the selectivity (light colored bar), as well as the constrained (dark colored bar) and unconstrained (gray bar) effect probabilities in the informativeness term. (D) Integration requires that the substrate specifies its cause–effect state irreducibly (“as one”). This is established by identifying the minimum partition (MIP; θ′) and measuring the integrated information of the system (φs), defined as the minimum between cause integrated information (φc) and effect integrated information (φe). Here, gray bars represent the partitioned probability required for computing φc (20) and φe (19). (E) Exclusion requires that the substrate of consciousness is definite, including some units and excluding others. This is established by identifying the candidate substrate with the maximum value of system integrated information (φs*): the maximal substrate, or complex. In this case, aB is a complex since its system integrated information (φs = 0.17) is higher than that of all other overlapping systems (for example, subset a with φs = 0.04 and superset aBC with φs = 0.13).
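The exclusion step in panel (E) amounts to a comparison among overlapping candidates. The sketch below applies it to the three φs values quoted in the caption. It is only an illustration: the function names are hypothetical, and a full analysis would compare all candidate systems, not just the three listed here.

```python
# phi_s values quoted in the Fig 1 caption for overlapping candidate systems.
phi_s = {
    frozenset("a"): 0.04,
    frozenset("aB"): 0.17,
    frozenset("aBC"): 0.13,
}

def maximal_substrate(candidates):
    """Pick the candidate with the largest phi_s."""
    return max(candidates, key=candidates.get)

def excluded(candidates, winner):
    """Candidates that share at least one unit with the winner are excluded."""
    return [c for c in candidates if c != winner and c & winner]

complex_ = maximal_substrate(phi_s)
losers = excluded(phi_s, complex_)
```

Here `complex_` is the set {a, B}, and both the subset a and the superset aBC are excluded because they overlap with it and have lower φs.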

In the “Results and discussion” section, the IIT formalism will be applied to extremely simple, simulated networks, rather than causal models of actual substrates. The cause–effect structures derived from these simple networks only serve as convenient illustrations of how a hypothetical substrate’s cause–effect power can be unfolded.

Implementing the postulates

In what follows, our goal is to evaluate whether a hypothetical substrate (also called “system”) satisfies all the postulates of IIT. To that end, we must verify whether the system has cause–effect power that is intrinsic, specific, integrated, definite, and structured.

Existence

According to IIT, existence understood as cause–effect power requires the capacity to both take and make a difference (see Box 2, Principle of being). On the basis of a complete description of the system in terms of interventional conditional probabilities ($\mathcal{T}_U$) (1), cause–effect power can be quantified as causal informativeness. Cause informativeness measures how much a potential cause increases the probability of the current state, and effect informativeness how much the current state increases the probability of a potential effect (as compared to chance).
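One way to make “increases the probability as compared to chance” concrete is a log ratio between the constrained probability and an unconstrained probability obtained by averaging over a uniform distribution of prior states (see Box 1, Intrinsic perspective). The sketch below uses that form as an assumption; the exact expressions are given in the formalism sections, and the one-unit TPM and function names here are hypothetical.

```python
from math import log2

# Hypothetical one-unit TPM: tpm[current][next] = p(next | do(current)).
# All entries are nonzero, so the logarithms below are well defined.
tpm = {
    -1: {-1: 0.9, 1: 0.1},
    1: {-1: 0.2, 1: 0.8},
}

def unconstrained(tpm, nxt):
    """Chance level: p(next) averaged over a uniform distribution of prior states."""
    return sum(row[nxt] for row in tpm.values()) / len(tpm)

def effect_informativeness(tpm, current, effect):
    """How much the current state raises the probability of a potential effect."""
    return log2(tpm[current][effect] / unconstrained(tpm, effect))

def cause_informativeness(tpm, cause, current):
    """How much a potential cause raises the probability of the current state."""
    return log2(tpm[cause][current] / unconstrained(tpm, current))

ei = effect_informativeness(tpm, current=1, effect=1)
ci_likely = cause_informativeness(tpm, cause=1, current=1)
ci_unlikely = cause_informativeness(tpm, cause=-1, current=1)
```

A positive value means the probability is raised above chance; a negative value, as for the unlikely cause here, means it is lowered.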

Box 2. Ontological principles of IIT

Principle of being

The principle of being states that to be is to have cause–effect power. In other words, in physical, operational terms, to exist requires being able to take and make a difference. The principle is closely related to the so-called Eleatic principle, as found in Plato’s Sophist dialogue [30]: “I say that everything possessing any kind of power, either to do anything to something else, or to be affected to the smallest extent by the slightest cause, even on a single occasion, has real existence: for I claim that entities are nothing else but power.” A similar principle can be found in the work of the Buddhist philosopher Dharmakīrti: “Whatever has causal powers, that really exists.” [31] Note that the Eleatic principle is enunciated as a disjunction (either to do something… or to be affected…), whereas IIT’s principle of being is presented as a conjunction (take and make a difference).

Principle of maximal existence

The principle of maximal existence states that, when it comes to a requirement for existence, what exists is what exists the most. The principle is offered by IIT as a good explanation for why the system state specified by the complex and the cause–effect states specified by its mechanisms are what they are. It also provides a criterion for determining the set of units constituting a complex—the one with maximally irreducible cause–effect power—for determining the subsets of units constituting the distinctions and relations that compose its cause–effect structure, and for determining the units’ grain. To exemplify, consider a set of candidate complexes overlapping over the same substrate. By the postulates of integration and exclusion, a complex must be both unitary and definite. By the maximal existence principle, the complex should be the one that lays the greatest claim to existence as one entity, as measured by system integrated information (φs). For the same reason, candidate complexes that overlap over the same substrate but have a lower value of φs are excluded from existence. In other words, if having maximal φs is the reason for assigning existence as a unitary complex to a set of units, it is also the reason to exclude from existence any overlapping set not having maximal φs.

Principle of minimal existence

Another key principle of IIT is the principle of minimal existence, which complements that of maximal existence. The principle states that, when it comes to a requirement for existence, nothing exists more than the least it exists. The principle is offered by IIT as a good explanation for why, given that a system can only exist as one system if it is irreducible, its degree of irreducibility should be assessed over the partition across which it is least irreducible (the minimum partition). Similarly, a distinction within a system can only exist as one distinction to the extent that it is irreducible, and its degree of irreducibility should be assessed over the partition across which it is least irreducible. Moreover, a set of units can only exist as a system, or as a distinction within the system, if it specifies both an irreducible cause and an irreducible effect, so its degree of irreducibility should be the minimum between the irreducibility on the cause side and on the effect side (see (9) in S1 Notes).

Intrinsicality

Building upon the existence postulate, the intrinsicality postulate further requires that a system exerts cause–effect power within itself. In general, the systems we want to evaluate are open systems S ⊆ U that are part of a larger “universe” U. From the intrinsic perspective of a system S (see Box 1), the set of the remaining units W = U\S merely acts as background conditions that do not contribute directly to cause–effect power. To enforce this, we causally marginalize the background units, conditional on the current state of the universe, rendering them causally inert (see “Identifying substrates of consciousness” for details).

Information

The information postulate requires that a system’s cause–effect power be specific: the system in its current state must select a specific cause–effect state for its units. Based on the principle of maximal existence (Box 2), this is the state for which intrinsic information is maximal—the maximal cause–effect state. Intrinsic information (ii) measures the difference a system takes or makes over itself for a given cause and effect state as the product of informativeness and selectivity. As we have seen (existence), informativeness quantifies the causal power of a system in its current state as a reduction of uncertainty with respect to chance. Selectivity measures how much cause–effect power is concentrated over that specific cause or effect state. Selectivity is reduced by uncertainty in the cause or effect state with respect to other potential cause and effect states.

From the intrinsic perspective of the system, the product of informativeness and selectivity leads to a tension between expansion and dilution, whereby a system comprising more units may show increased deviation from chance but decreased concentration of cause–effect power over a specific state [12, 14].

Integration

By the integration postulate, it is not sufficient for a system to have cause–effect power within itself and select a specific cause–effect state: it must also specify its maximal cause–effect state in a way that is irreducible. This can be assessed by partitioning the set of units that constitute the system into separate parts. The system integrated information (φs) then quantifies how much the intrinsic information specified by the maximal state is reduced due to the partition (see (8) in S1 Notes). Integrated information is evaluated over the partition that makes the least difference, the minimum partition (MIP), in accordance with the principle of minimal existence (see Box 2).

Integrated information is highly sensitive to the presence of fault lines—partitions that separate parts of a system that interact weakly or directionally [13].

Exclusion

Many overlapping sets of units may have a positive value of integrated information (φs). However, the exclusion postulate requires that the substrate of consciousness must be constituted of a definite set of units, neither less nor more. Moreover, units, updates, and states must have a definite grain. Operationally, the exclusion postulate is enforced by selecting the set of units that maximizes integrated information over itself ($\varphi_s^*$), based again on the principle of maximal existence (see Box 2). That set of units is called a maximal substrate, or complex. Over a universal substrate, sets of units for which integrated information is maximal compared to all competing candidate systems with overlapping units can be assessed recursively (by identifying the first complex, then the second complex, and so on).

Composition

Once a complex has been identified, composition requires that we characterize its cause–effect structure by considering all its subsets and fully unfolding its cause–effect power.

Usually, causal models are conceived in holistic terms, as state transitions of the system as a whole (1), or in reductionist terms, as a description of the individual units of the system and their interactions (2) [29]. However, to account for the structure of experience, considering only the cause–effect power of the individual units or of the system as a whole would be insufficient [17, 29]. Instead, by the composition postulate, we have to evaluate the system’s cause–effect structure by considering the cause–effect power of its subsets as well as their causal relations.

To contribute to the cause–effect structure of a complex, a system subset must both take and make a difference (as required by existence) within the system (as required by intrinsicality). A subset M ⊆ S in state m ∈ ΩM is called a mechanism if it links a cause and effect state over subsets of units Zc/e ⊆ S, called purviews. A mechanism together with the cause and effect state it specifies is called a causal distinction. Distinctions are evaluated based on whether they satisfy all the postulates of IIT (except for composition). For every mechanism, the cause–effect state is the one having maximal intrinsic information (ii), and the cause and effect purviews are those yielding the maximum value of integrated information (φd) within the complex—that is, those that are maximally irreducible. By the information postulate, the cause–effect power of a complex must be specific, which means that it selects a specific cause–effect state at the system level. Consequently, the distinctions that exist for the complex are only those whose cause–effect state is congruent with the cause–effect state of the complex as a whole (incongruent distinctions are not components of the complex and its specific cause–effect power because they would violate the specificity postulate, according to which the experience can only be “this one”).

Distinctions whose cause or effect states overlap congruently within the system (over the same subset of units in the same state) are bound together by causal relations. Relations also have an associated value of integrated information (φr), corresponding to their irreducibility.

Together, these distinctions and relations compose the cause–effect structure of the complex in its current state. The cause–effect structure specified by a complex is called a Φ-structure. The sum of its distinction and relation integrated information amounts to the structure integrated information (Φ) of the complex.

In the following, we will provide a formal account of the IIT analysis. The first part demonstrates how to identify complexes. This requires that we (a) determine the cause–effect state of a system in its current state, (b) evaluate the system integrated information (φs) over that cause–effect state, and (c) search iteratively for maxima of integrated information ($\varphi_s^*$) within a universe. The second part describes how the postulates of IIT are applied to unfold the cause–effect structure of a complex. This requires that we identify the causal distinctions specified by subsets of units within the complex and the causal relations determined by the way distinctions overlap, yielding the system’s Φ-structure and its structure integrated information (Φ).

Identifying substrates of consciousness

Our starting point is a substrate U in current state u with TPM $\mathcal{T}_U$ (1). We consider any subset s ⊆ u as a possible complex and refer to a set of units S ⊆ U as a candidate system. (Note that s and u are sets of tuples containing both the units and their states.)

By the intrinsicality postulate, the units W = U\S are background conditions and do not contribute directly to the cause–effect power of the system. To discount the contribution of background units, they are causally marginalized, conditional on the current state of the universe. This means that the background units are marginalized based on a uniform marginal distribution, updated by conditioning on u. The process is repeated separately for each unit in the system, and the results are then combined using a product (in line with conditional independence), which eliminates any residual correlations due to the background units. Accordingly, we obtain two TPMs $\mathcal{T}_e$ and $\mathcal{T}_c$ (for evaluating effects and causes, respectively) for the candidate system S. For evaluating effects, the state of the background units is fully determined by the current state of the universe. The corresponding TPM, $\mathcal{T}_e$, is used to identify the effect of the current state:

$$\mathcal{T}_e \equiv p_e(\bar{s} \mid s) = \prod_{i=1}^{|S|} p(\bar{s}_i \mid s, w), \quad s, \bar{s} \in \Omega_S, \tag{3}$$

where w = u\s. For evaluating causes, knowledge of the current state is used to compute the probability distribution over potential prior states of the background units, which is not necessarily uniform or deterministic. The corresponding TPM, $\mathcal{T}_c$, is used to evaluate the cause of the current state:

$$\mathcal{T}_c \equiv p_c(\bar{s} \mid s) = \prod_{i=1}^{|S|} \sum_{\bar{w} \in \Omega_W} p(\bar{s}_i \mid s, \bar{w}) \left( \frac{\sum_{\hat{s} \in \Omega_S} p(u \mid \hat{s}, \bar{w})}{\sum_{\hat{u} \in \Omega_U} p(u \mid \hat{u})} \right), \quad s, \bar{s} \in \Omega_S. \tag{4}$$

In both TPMs, the background units W are rendered causally inert, so that causes and effects are evaluated from the intrinsic perspective of the system.
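The cause-side conditioning on the current state can be sketched in a few lines of Python. This is a minimal illustration, assuming binary units and a uniform prior over prior universe states; the representation `p_on` (one function per unit, returning the probability that the unit is ON at the next step) and all function names are hypothetical choices made for this example, not part of the formalism.

```python
from itertools import product

def transition_prob(p_on, prev, nxt):
    """p(nxt | prev) as a product over units (conditional independence)."""
    prob = 1.0
    for i, target in enumerate(nxt):
        q = p_on[i](prev)
        prob *= q if target == 1 else 1.0 - q
    return prob

def background_posterior(p_on, u, background):
    """Distribution over prior background states given the current universe state u.

    Assumes a uniform prior over prior universe states (Bayes' rule).
    `background` lists the indices of the background units W.
    """
    weights = {}
    for prev in product((0, 1), repeat=len(u)):
        w_prev = tuple(prev[k] for k in background)
        weights[w_prev] = weights.get(w_prev, 0.0) + transition_prob(p_on, prev, u)
    total = sum(weights.values())
    if total == 0.0:
        raise ValueError("current state u cannot be reached from any prior state")
    return {w: p / total for w, p in weights.items()}

# Hypothetical two-unit universe: unit 0 is the candidate system, unit 1 the
# background. Both units copy the previous state of unit 1 with some noise.
p_on = [lambda prev: 0.9 if prev[1] else 0.1,
        lambda prev: 0.9 if prev[1] else 0.1]
posterior = background_posterior(p_on, u=(1, 1), background=[1])
```

In this toy case the posterior concentrates on the background unit having been ON, which illustrates why the prior background distribution is "not necessarily uniform or deterministic". On the effect side no such inference is needed, because the background is simply fixed to its current state w.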

The intrinsic information iic/e is a measure of the intrinsic cause or effect power exerted by a system S in its current state s over itself by selecting a specific cause or effect state $\bar{s}$. The cause–effect state for which intrinsic information (iic and iie) is maximal is called the maximal cause–effect state $s' = \{s'_c, s'_e\}$. The integrated information φs is a measure of the irreducibility of a cause–effect state, compared to the directional system partition θ′ that affects the maximal cause–effect state the least (minimum partition, or MIP). Systems for which integrated information is maximal ($\varphi_s^*$) compared to any competing candidate system with overlapping units are called maximal substrates, or complexes.

The IIT 4.0 formalism to measure a system’s integrated information φs and to identify maximal substrates was first presented in [13]. An example of how to identify complexes in a simple system is given in Fig 1, while a comparison with prior accounts (IIT 1.0, IIT 2.0, and IIT 3.0) can be found in S2 Text. An outline of the IIT algorithm is included in S1 Fig.

Existence, intrinsicality, and information: Determining the maximal cause–effect state of a candidate system

Given a causal model with corresponding TPMs $\mathcal{T}_e$ (3) and $\mathcal{T}_c$ (4), we wish to identify the maximal cause–effect state specified by a system in its current state over itself and to quantify the causal power with which it does so. In this way, we quantify the cause–effect power of a system from its intrinsic perspective, rather than from the perspective of an outside observer (see Box 1).

System intrinsic information ii

Intrinsic information measures the causal power of a system S over itself, for its current state s, over a specific cause or effect state $\bar{s}$. Intrinsic information depends on interventional conditional probabilities and unconstrained probabilities of cause or effect states and is the product of selectivity and informativeness.

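On the effect side, intrinsic effect information iie of the current state s over a possible effect state $\bar{s}$ is defined as:

$$ii_e(s, \bar{s}) = p_e(\bar{s} \mid s) \, \log\left( \frac{p_e(\bar{s} \mid s)}{p_e(\bar{s})} \right), \tag{5}$$

where $p_e(\bar{s} \mid s)$ (3) is the interventional conditional probability that the current state s produces the effect state $\bar{s}$, as indicated by $\mathcal{T}_e$.

The interventional unconstrained probability

$$p_e(\bar{s}) = |\Omega_S|^{-1} \sum_{s \in \Omega_S} p_e(\bar{s} \mid s) \tag{6}$$

is defined as the marginal probability of $\bar{s}$, averaged across all possible current states of S with equal probability (where |ΩS| denotes the cardinality of the state space ΩS).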

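On the cause side, intrinsic cause information iic of the current state s over a possible cause state $\bar{s}$ is defined as:

$$ii_c(s, \bar{s}) = p_c^{\leftarrow}(\bar{s} \mid s) \, \log\left( \frac{p_c(s \mid \bar{s})}{p_c(s)} \right), \tag{7}$$

where $p_c(s \mid \bar{s})$ (4) is the interventional conditional probability that the cause state $\bar{s}$ produces the current state s, as indicated by $\mathcal{T}_c$, and the interventional unconstrained probability $p_c(s)$ is again defined as the marginal probability of s, averaged across all possible cause states of S with equal probability,

$$p_c(s) = |\Omega_S|^{-1} \sum_{\bar{s} \in \Omega_S} p_c(s \mid \bar{s}). \tag{8}$$

Moreover, $p_c^{\leftarrow}(\bar{s} \mid s)$ (4) is the interventional conditional probability that the current state s ∈ ΩS was produced by $\bar{s}$; it is derived from $p_c(s \mid \bar{s})$ using Bayes’ rule, where we again assign a uniform prior to the possible cause states $\bar{s}$,

$$p_c^{\leftarrow}(\bar{s} \mid s) = \frac{p_c(s \mid \bar{s}) \cdot |\Omega_S|^{-1}}{p_c(s)} = \frac{p_c(s \mid \bar{s})}{\sum_{\hat{s} \in \Omega_S} p_c(s \mid \hat{s})}. \tag{9}$$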
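The definitions of intrinsic effect and cause information can be sketched numerically as follows. This is a minimal illustration under simplifying assumptions: the system has no background units, so a single state-by-state matrix `tpm[current][next]` stands in for both the effect and the cause TPM, and states are indexed by integers. The function names and the example matrix are hypothetical.

```python
from math import log2

def effect_information(tpm, s, s_bar):
    """ii_e(s, s_bar): forward selectivity times informativeness, in ibits."""
    n = len(tpm)
    p = tpm[s][s_bar]                                   # p_e(s_bar | s)
    if p == 0.0:
        return 0.0                                      # convention: 0 * log(0) = 0
    chance = sum(tpm[t][s_bar] for t in range(n)) / n   # unconstrained p_e(s_bar)
    return p * log2(p / chance)

def cause_information(tpm, s, s_bar):
    """ii_c(s, s_bar): backward selectivity times forward informativeness."""
    n = len(tpm)
    forward = tpm[s_bar][s]                             # p_c(s | s_bar)
    if forward == 0.0:
        return 0.0
    column = sum(tpm[t][s] for t in range(n))
    chance = column / n                                 # unconstrained p_c(s)
    backward = forward / column                         # Bayes' rule, uniform prior
    return backward * log2(forward / chance)

# Hypothetical one-unit system with some noise
# (rows: current state, columns: next state).
tpm = [[0.9, 0.1],
       [0.2, 0.8]]
ii_e = effect_information(tpm, s=0, s_bar=0)
ii_c = cause_information(tpm, s=0, s_bar=0)
```

For a deterministic one-unit identity map both quantities equal 1 ibit, while for a fully degenerate map (every state leads to the same state) both are 0, since the current state then makes no difference relative to chance.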

Informativeness (over chance)

In (5) and (7), the logarithmic term (in base 2 throughout) is called informativeness. Note that informativeness is expressed in terms of ‘forward’ probabilities (probability of a subsequent state given the current state) for both iie (5) and iic (7). However, iie (5) evaluates the increase in probability of the effect state due to the current state based on $\mathcal{T}_e$, while iic (7) evaluates the increase in probability of the current state due to the cause state based on $\mathcal{T}_c$.

In line with the existence postulate, a system S in state s has cause–effect power (it takes and makes a difference) if it raises the probability of a possible effect state $\bar{s}$ compared to chance, which is to say compared to its unconstrained probability,

$$p_e(\bar{s} \mid s) > p_e(\bar{s}), \tag{10}$$

and if the probability of the current state is raised above chance by a possible cause state,

$$p_c(s \mid \bar{s}) > p_c(s). \tag{11}$$

Informativeness is additive over the number of units: if a system specifies a cause or effect state with probability p = 1, its causal power increases additively with the number of units whose states it fully specifies (expansion), given that the chance probability of all states decreases exponentially.

Selectivity (over states)

From the intrinsic perspective of a system, cause–effect power over a specific cause or effect state depends not only on the deviation from chance it produces, but also on how its probability is concentrated on that state, rather than being diluted over other states. This is measured by the selectivity term in front of the logarithmic term in (5) and (7), corresponding to the conditional probability $p_e(\bar{s} \mid s)$ or $p_c^{\leftarrow}(\bar{s} \mid s)$ of that specific cause or effect state. (Note that here, on the cause side, we use the ‘backward’ probability (probability of a prior state given the current state) obtained through Bayes’ rule, while we use the ‘forward’ probability of the effect state given s on the effect side.) Selectivity means that if p < 1, the system’s causal power becomes subadditive (dilution) (see [14] for details). For example, as shown in [12], if an unconstrained unit is added to a fully specified unit, intrinsic information does not just stay the same, but decreases exponentially. From the intrinsic perspective of the system, the informativeness of a specific cause or effect state is diluted because it is spread over multiple possible states, yet the system must select only one state.

Altogether, taking the product of informativeness and selectivity leads to a tension between expansion and dilution: a larger system will tend to have higher informativeness than a smaller system because it will deviate more from chance, but it will also tend to have lower selectivity because it will have a larger repertoire of states to select from.
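The tension between expansion and dilution can be made concrete with a short worked calculation. It is an illustrative sketch under stated assumptions: all units are binary, the unconstrained probability of every state is uniform, and an "unconstrained" unit is equally likely to be 0 or 1 regardless of the current state.

```latex
% Expansion: n units, all fully specified (p = 1), chance probability 2^{-n}
ii = 1 \cdot \log_2 \frac{1}{2^{-n}} = n \ \text{ibits}

% Dilution: one fully specified unit plus k unconstrained units,
% so the selected state has p = 2^{-k} and chance probability 2^{-(k+1)}
ii = 2^{-k} \cdot \log_2 \frac{2^{-k}}{2^{-(k+1)}} = 2^{-k} \cdot 1 = 2^{-k} \ \text{ibits}
```

Under these assumptions, each fully specified unit adds one ibit, whereas each unconstrained unit halves the intrinsic information: informativeness stays at one bit, but selectivity drops by a factor of two per added unit.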

Because of the selectivity term, intrinsic information is reduced by indeterminism and degeneracy. As shown in [13], indeterminism decreases the probability of the selected effect state because it implies that the same state can lead to multiple states. In turn, degeneracy decreases the probability of the selected cause state because it implies that multiple states can lead to the same state, even in a deterministic system.

The intrinsic information ii is quantified in units of intrinsic bits, or ibits, to distinguish it from standard information-theoretic measures (which are typically additive). Formally, the ibit corresponds to a point-wise information value (measured in bits) weighted by a probability.

The maximal cause–effect state

Taking the product of informativeness and selectivity on the system’s cause and effect sides captures the postulates of existence (taking and making a difference) and intrinsicality (taking and making a difference over itself) for each possible cause or effect state, as measured by intrinsic information. However, the information postulate further requires that the system selects a specific cause or effect state. The selection is determined by the principle of maximal existence (Box 2): the cause or effect specified by the system should be the one that maximizes intrinsic information. On the effect side (and similarly for the cause side, see S1 Fig),

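$$s'_e(\mathcal{T}_e, s) = \operatorname*{argmax}_{\bar{s} \in \Omega_S} \; ii_e(s, \bar{s}). \tag{12}$$

The system’s intrinsic effect information is the value of iie (5) for its maximal effect state:

$$ii_e(\mathcal{T}_e, s) := ii_e(s, s'_e) = \max_{\bar{s} \in \Omega_S} \; ii_e(s, \bar{s}). \tag{13}$$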

We have made the dependency of s′ and iie on $\mathcal{T}_e$ explicit in (12) and (13) to highlight that, for intrinsic information to properly assess cause–effect power, all probabilities must be derived from the system’s interventional transition probability function, while imposing a uniform prior distribution over all possible system states. If $ii_e(\mathcal{T}_e, s) = 0$, the system S in state s has no causal power. This is the case if and only if $p_e(\bar{s} \mid s) = p_e(\bar{s})$ for every $\bar{s} \in \Omega_S$ [14] (and likewise, it can be shown that $ii_c(\mathcal{T}_c, s) = 0$ if and only if $p_c(s \mid \bar{s}) = p_c(s)$ for every $\bar{s} \in \Omega_S$). It is worthwhile to mention that when $ii_e(\mathcal{T}_e, s) > 0$, the system state s always increases the probability of the intrinsic effect state $s'_e$ compared to chance. Similarly, when $ii_c(\mathcal{T}_c, s) > 0$, the intrinsic cause state $s'_c$ increases the probability of the system state, satisfying (11). Note also that a system’s intrinsic cause–effect state does not necessarily correspond to the actual cause and effect states (what actually happened before / will happen after) in the dynamical evolution of the system, which typically also depends on extrinsic influences. (For an account of actual causation according to the causal principles of IIT, see [10].)
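Selecting the maximal effect state amounts to an argmax over candidate effect states. The sketch below assumes a system without background units, represented by a hypothetical state-by-state matrix `tpm[current][next]`; the function names are illustrative only.

```python
from math import log2

def effect_information(tpm, s, s_bar):
    """ii_e(s, s_bar) for a state-by-state TPM with integer-indexed states."""
    p = tpm[s][s_bar]
    if p == 0.0:
        return 0.0
    chance = sum(row[s_bar] for row in tpm) / len(tpm)
    return p * log2(p / chance)

def maximal_effect_state(tpm, s):
    """Return (effect state with maximal ii_e, the corresponding ii_e value).

    Ties are resolved here by taking the first maximum; the text instead
    resolves ties by the integrated information of the tied states.
    """
    values = [effect_information(tpm, s, s_bar) for s_bar in range(len(tpm))]
    best = max(range(len(values)), key=values.__getitem__)
    return best, values[best]

# Hypothetical noisy one-unit system.
tpm = [[0.9, 0.1],
       [0.2, 0.8]]
state, value = maximal_effect_state(tpm, s=0)
```

For a TPM in which every row is identical, the returned value is 0: the conditional and unconstrained probabilities coincide for every effect state, which is the "no causal power" case described above.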

Intrinsic difference

Because consciousness is the way it is, the formulation of its properties in physical, operational terms should be unique and based on quantities that uniquely satisfy the postulates [12, 32]. Intrinsic information is formulated as a product of selectivity and informativeness based on the notion of intrinsic difference (ID) [14]. This is a measure of the difference between two probability distributions which uniquely satisfies three properties (causality, intrinsicality, and specificity) that align with the postulates of IIT (but also have independent justification):

causality (Existence): the measure is zero if and only if the system does not make a difference

intrinsicality (Intrinsicality): the measure increases if the system is expanded without noise (expansion) and decreases if the system is expanded without signal (dilution)

specificity (Information): the measure reflects the cause–effect power of a specific state over a specific cause and effect state.

The properties uniquely satisfied by the ID are described in a general mathematical context in [14], with some additional discussion in S2 Text.

Note that, on the effect side, iie is formally equivalent to the ID between the constrained effect repertoire $p_e(\bar{s} \mid s)$ and the unconstrained effect repertoire $p_e(\bar{s})$. On the cause side, the application of Bayes’ rule to compute $p_c^{\leftarrow}(\bar{s} \mid s)$ as the selectivity term means that iic is not strictly equivalent to the ID between two probability distributions. However, analogously to the effect formulation, it is defined as the product of selectivity and informativeness of causes.

Integration: Determining the irreducibility of a candidate system

Having identified the maximal cause–effect state of a candidate system S in its current state s, the next step is to evaluate whether the system specifies the cause–effect state of its units in a way that is irreducible, as required by the integration postulate: a candidate system can only be a substrate of consciousness if it is one system—that is, if it cannot be subdivided into subsets of units that exist separately from one another.

Directional system partitions

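To that end, we define a set of directional system partitions Θ(S) that divide S into k ≥ 2 parts $\{S^{(i)}\}_{i=1}^{k}$, such that

$$S^{(i)} \neq \emptyset, \qquad S^{(i)} \cap S^{(j)} = \emptyset \ \text{ for } i \neq j, \qquad \bigcup_{i=1}^{k} S^{(i)} = S. \tag{14}$$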

In words, each part S(i) must contain at least one unit, there must be no overlap between any two parts S(i) and S(j), and every unit of the system must appear in exactly one part. For each part S(i), the partition removes the causal connections of that part with the rest of the system in a directional manner: either the part’s inputs, outputs, or both are replaced by independent “noise” (they are “cut” by the partition in the sense that their causal powers are substituted by chance). Directional partitions are necessary because, from the intrinsic perspective of a system, a subset of units that cannot affect the rest of the system, or cannot be affected by it, cannot truly be a part of the system. In other words, to be a part of a system, a subset of units must be able to interact with the rest of the system in both directions (cause and effect).

A partition θ ∈ Θ(S) thus has the form

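$$\theta = \left\{ (S^{(1)}, \delta_1), (S^{(2)}, \delta_2), \ldots, (S^{(k)}, \delta_k) \right\}, \tag{15}$$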

where δi ∈ {←, →, ↔} indicates whether the inputs (←), outputs (→), or both (↔) are cut for a given part. For each part S(i), we can then identify a set of units X(i) ⊆ S whose inputs to S(i) have been cut by the partition, and the complementary set Y(i) = S\X(i) whose inputs to S(i) are left intact. Specifically,

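$$X^{(i)} = \begin{cases} S \setminus S^{(i)} & \text{if } \delta_i \in \{\leftarrow, \leftrightarrow\}, \\ \bigcup_{j \neq i,\ \delta_j \in \{\rightarrow, \leftrightarrow\}} S^{(j)} & \text{if } \delta_i \in \{\rightarrow\}. \end{cases} \tag{16}$$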

In the first case, if δi ∈ {←, ↔}, all inputs to S(i) from S\S(i) are cut. In the second case, if δi ∈ {→}, there may still be inputs to S(i) that are cut, which correspond to the outputs of all S(j) with δj ∈ {→, ↔}.
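The structure of directional partitions can be illustrated by enumerating them for a small system. The sketch below is an assumption-laden illustration: it lists every set partition into at least two parts with every assignment of directions, without removing tuples that happen to cut the same connections, and the string labels `"<-"`, `"->"`, `"<->"` and all function names are hypothetical.

```python
from itertools import product

DIRECTIONS = ("<-", "->", "<->")  # inputs cut, outputs cut, both cut

def set_partitions(units):
    """Yield all partitions of a list of units into non-empty, disjoint parts."""
    if not units:
        yield []
        return
    first, rest = units[0], units[1:]
    for smaller in set_partitions(rest):
        # Place `first` into each existing part in turn ...
        for i in range(len(smaller)):
            yield smaller[:i] + [[first] + smaller[i]] + smaller[i + 1:]
        # ... or into a new part of its own.
        yield [[first]] + smaller

def directional_partitions(units):
    """Yield partitions as lists of (part, direction) with at least two parts."""
    for parts in set_partitions(list(units)):
        if len(parts) < 2:
            continue
        for deltas in product(DIRECTIONS, repeat=len(parts)):
            yield list(zip(parts, deltas))

def cut_inputs(theta, units):
    """For each part i, return the set X(i) of units whose inputs to it are cut."""
    result = []
    for i, (part, delta) in enumerate(theta):
        if delta in ("<-", "<->"):
            x = set(units) - set(part)          # all inputs from outside the part
        else:
            x = set()                           # only outputs cut by other parts
            for j, (other, delta_j) in enumerate(theta):
                if j != i and delta_j in ("->", "<->"):
                    x |= set(other)
        result.append(x)
    return result

theta = [(["A"], "->"), (["B"], "<-")]
x_sets = cut_inputs(theta, "AB")   # A keeps its inputs; B loses its input from A
```

With this naive enumeration, a two-unit system has 9 directional partitions and a three-unit system has 54, so the search space grows quickly with system size.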

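Given a partition θ ∈ Θ(S), we define partitioned transition probability matrices $\mathcal{T}_e^{\theta}$ and $\mathcal{T}_c^{\theta}$ in which all connections affected by the partition are “noised.” This is done by combining the independent contributions of each unit Sj ∈ S in line with the conditional independence assumption (2). For the effect TPM (and analogously for the cause TPM),

$$\mathcal{T}_e^{\theta} \equiv p_e^{\theta}(\bar{s} \mid s) = \prod_{j=1}^{|S|} p_e^{\theta}(\bar{s}_j \mid s), \quad s, \bar{s} \in \Omega_S, \tag{17}$$

where the partitioned probability of a unit Sj ∈ S(i) is defined as

$$p_e^{\theta}(\bar{s}_j \mid s) = |\Omega_{X^{(i)}}|^{-1} \sum_{\hat{x}^{(i)} \in \Omega_{X^{(i)}}} p_e(\bar{s}_j \mid \hat{x}^{(i)}, y^{(i)}), \tag{18}$$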

and y(i) = s\x(i). This means that all connections to unit Sj that are affected by the partition are causally marginalized (replaced by independent noise).
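The "noising" of cut connections can be sketched as a uniform average over the states of the cut inputs. This minimal example assumes binary units, with each unit described by a hypothetical function `p_on[j](state)` giving the probability that unit j is ON at the next step; `part_of` and `cut` are illustrative names for the part index of each unit and the set X(i) of each part.

```python
from itertools import product

def partitioned_unit_prob(p_on, j, state, cut_units):
    """P(unit j is ON next | state), with inputs from `cut_units` replaced by noise."""
    cut = sorted(cut_units)
    total = 0.0
    for values in product((0, 1), repeat=len(cut)):
        noised = list(state)
        for idx, v in zip(cut, values):
            noised[idx] = v                     # average uniformly over cut inputs
        total += p_on[j](tuple(noised))
    return total / 2 ** len(cut)

def partitioned_effect_prob(p_on, state, next_state, part_of, cut):
    """Product over units of their partitioned probabilities."""
    prob = 1.0
    for j, target in enumerate(next_state):
        q = partitioned_unit_prob(p_on, j, state, cut[part_of[j]])
        prob *= q if target == 1 else 1.0 - q
    return prob

# Hypothetical system: unit 0 copies unit 1 and unit 1 copies unit 0.
p_on = [lambda s: float(s[1]), lambda s: float(s[0])]
intact = partitioned_effect_prob(p_on, (1, 1), (1, 1), [0, 1], [set(), set()])
noised = partitioned_effect_prob(p_on, (1, 1), (1, 1), [0, 1], [{1}, {0}])
```

In this toy system the transition from (1, 1) to (1, 1) is certain when nothing is cut, and its probability falls to 0.25 when each unit's input from the other is replaced by noise.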

System integrated information φs

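The integrated effect information φe measures how much the partition θ ∈ Θ(S) reduces the probability with which a system S in state s ∈ ΩS specifies its effect state $s'_e$ (12),

$$\varphi_e(\mathcal{T}_e, s, \theta) = p_e(s'_e \mid s) \left| \log\left( \frac{p_e(s'_e \mid s)}{p_e^{\theta}(s'_e \mid s)} \right) \right|_+. \tag{19}$$

Note that φe has the same form as the intrinsic information (5), with the partitioned effect probability taking the place of the unconstrained (marginal) probability. Here, |.|+ represents the positive part operator, which sets the negative values to 0. This ensures that the system as a whole raises the probability of the effect state compared to the partitioned probability. Likewise, the integrated cause information φc is defined as

$$\varphi_c(\mathcal{T}_c, s, \theta) = p_c^{\leftarrow}(s'_c \mid s) \left| \log\left( \frac{p_c(s \mid s'_c)}{p_c^{\theta}(s \mid s'_c)} \right) \right|_+. \tag{20}$$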

(By the principle of maximal existence, if two or more cause–effect states are tied for maximal intrinsic information, the system specifies the one that maximizes φc/e.)

By the zeroth postulate, existence requires cause and effect power, and the integration postulate requires that its cause–effect power be irreducible. By the principle of minimal existence (Box 2), then, system integrated information for a given partition is the minimum of its irreducibility on the cause and effect sides:

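$$\varphi_s(\mathcal{T}_e, \mathcal{T}_c, s, \theta) = \min\left\{ \varphi_c(\mathcal{T}_c, s, \theta), \; \varphi_e(\mathcal{T}_e, s, \theta) \right\}. \tag{21}$$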

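Moreover, again by the principle of minimal existence, the integrated information of a system is given by its irreducibility over its minimum partition (MIP) θ′ ∈ Θ(S), such that

$$\varphi_s(\mathcal{T}_e, \mathcal{T}_c, s) := \varphi_s(\mathcal{T}_e, \mathcal{T}_c, s, \theta'). \tag{22}$$

The MIP is defined as the partition θ ∈ Θ(S) that minimizes the system’s integrated information, relative to the maximum possible value it could take for arbitrary TPMs over the units of system S:

$$\theta' = \operatorname*{argmin}_{\theta \in \Theta(S)} \; \frac{\varphi_s(\mathcal{T}_e, \mathcal{T}_c, s, \theta)}{\max_{\mathcal{T}'_e, \mathcal{T}'_c} \varphi_s(\mathcal{T}'_e, \mathcal{T}'_c, s, \theta)}. \tag{23}$$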

Accordingly, the system is reducible if at least one partition θ ∈ Θ(S) makes no difference to the cause or effect probability. The normalization term in the denominator of (23) ensures that $\varphi_s$ is evaluated fairly over a system’s fault lines by assessing integration relative to its maximum possible value over a given partition. Using the relative integrated information quantifies the strength of the interactions between parts in a way that does not depend on the number of parts and their size. As proven in [13], the maximal value of $\varphi_s(\mathcal{T}_e, \mathcal{T}_c, s, \theta)$ for a given partition θ is the normalization factor $\sum_{i=1}^{k} |S^{(i)}|\,|X^{(i)}|$, which corresponds to the maximal possible number of “connections” (pairwise interactions) affected by θ. For example, as shown in [13], the MIP will correctly identify the fault line dividing a system into two large subsets of units linked through a few interconnected units (a “bridge”), rather than defaulting to partitions between individual units and the rest of the system. Once the minimum partition has been identified, the integrated information across it is an absolute quantity, quantifying the loss of intrinsic information due to cutting the minimum partition of the system. (If two or more partitions θ ∈ Θ(S) minimize Eq (23), we select the partition with the largest unnormalized φs value as θ′, applying the principle of maximal existence.) Defining θ′ as in (23), moreover, ensures that $\varphi_s = 0$ if the system is not strongly connected in graph-theoretic terms (see (10) in S1 Notes).
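The steps from partitioned probabilities to the minimum partition can be sketched as follows. This is a minimal illustration, not a full implementation: the probabilities passed to the functions are assumed to have been computed elsewhere, the candidate list uses made-up illustrative numbers, and all names are hypothetical.

```python
from math import log2

def phi_effect(p, p_cut):
    """Integrated effect information: p is the intact probability of the maximal
    effect state, p_cut the probability of the same transition after the cut."""
    if p == 0.0:
        return 0.0
    return p * max(0.0, log2(p / p_cut))        # positive part of the log ratio

def phi_cause(p_backward, p_forward, p_forward_cut):
    """Integrated cause information: backward selectivity, forward log ratio."""
    if p_forward == 0.0:
        return 0.0
    return p_backward * max(0.0, log2(p_forward / p_forward_cut))

def phi_system(phi_c, phi_e):
    """Minimum of cause and effect irreducibility for a given partition."""
    return min(phi_c, phi_e)

def normalization(parts, cut):
    """Sum over parts of |S(i)| * |X(i)|, the number of connections affected."""
    return sum(len(part) * len(x) for part, x in zip(parts, cut))

def minimum_partition(candidates):
    """candidates: list of (label, phi_s, normalization).

    Selects the smallest normalized value; ties go to the largest raw phi_s.
    """
    return min(candidates, key=lambda c: (c[1] / c[2], -c[1]))

# Two units that copy each other, every connection cut: probability 1 -> 0.25.
phi = phi_system(phi_cause(1.0, 1.0, 0.25), phi_effect(1.0, 0.25))
norm = normalization([["A"], ["B"]], [{"B"}, {"A"}])

# Made-up numbers: the bridge cut has the larger raw phi_s but affects more
# connections, so its normalized value is smaller and it is selected.
candidates = [("single unit vs rest", 0.3, 3), ("bridge", 0.4, 8)]
mip = minimum_partition(candidates)
```

The design point is that normalization is used only to choose the partition; the value reported for the system is the unnormalized φs across the chosen partition.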

In summary, the system integrated information (φs, also called ‘small phi’) quantifies the extent to which system S in state s has cause–effect power over itself as one system (i.e., irreducibly). φs is thus a quantifier of irreducible existence.

Exclusion: Determining maximal substrates (complexes)

In general, multiple candidate systems with overlapping units may have positive values of φs. By the exclusion postulate, the substrate of consciousness must be definite; that is, it must comprise a definite set of units. But which one? Once again, we employ the principle of maximal existence (Box 2): among candidate systems competing over the same substrate with respect to an essential requirement for existence, in this case irreducibility, the one that exists is the one that exists the most. Accordingly, the maximal substrate, or complex, is the candidate substrate with the maximum value of system integrated information ($\varphi_s^*$), and overlapping substrates with lower φs are thus excluded from existence.

Determining maximal substrates recursively

Within a universal substrate U0 in state u0, subsets of units that specify maxima of irreducible cause–effect power (complexes) can be identified iteratively: the substrate with maximum φs is identified as a complex, the corresponding units are excluded from further consideration, and the remaining units are searched for the next maximal substrate. Formally, an iterative search is performed to find a sequence of systems $S^*_k \subseteq U_k$ with

$$\varphi_s^*(\mathcal{T}_e, \mathcal{T}_c, s^*_k) = \max_{S \subseteq U_k} \varphi_s(\mathcal{T}_e, \mathcal{T}_c, s) \tag{24}$$

such that

$$S^*_k = \operatorname*{argmax}_{S \subseteq U_k} \varphi_s(\mathcal{T}_e, \mathcal{T}_c, s) \tag{25}$$

and $U_{k+1} = U_k \setminus S^*_k$, until Uk+1 = ∅ or Uk+1 = Uk (the units in U0\Uk+1 still serve as background conditions, for details see [13]). If the maximal substrate is not unique, and all tied systems overlap, the next best system that is unique is chosen instead (see S1 Text).
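The recursive search can be sketched as a simple loop. In this illustration the evaluation of φs is hidden behind a hypothetical callable `phi_s(candidate)`, which in a real analysis would have to account for the current state and for background conditions; the toy values in the lookup table are made up, and ties are not resolved but reported as an error.

```python
from itertools import combinations

def nonempty_subsets(units):
    """Yield every non-empty subset of `units` as a frozenset."""
    ordered = sorted(units)
    for size in range(1, len(ordered) + 1):
        for combo in combinations(ordered, size):
            yield frozenset(combo)

def find_complexes(universe, phi_s):
    """Repeatedly pick the candidate with maximal phi_s and remove its units."""
    remaining = frozenset(universe)
    complexes = []
    while remaining:
        scored = [(phi_s(c), c) for c in nonempty_subsets(remaining)]
        best = max(value for value, _ in scored)
        if best <= 0.0:
            break                                # no further complex: U_{k+1} = U_k
        winners = [c for value, c in scored if value == best]
        if len(winners) > 1:
            raise ValueError("tied maxima are not handled in this sketch")
        complexes.append((winners[0], best))
        remaining -= winners[0]                  # exclude the units of the complex
    return complexes

# Made-up phi_s values for a four-unit universe; unlisted candidates score 0.
table = {frozenset("AB"): 1.0, frozenset("ABC"): 0.5, frozenset("CD"): 0.3}
complexes = find_complexes("ABCD", lambda c: table.get(c, 0.0))
```

With these toy values the search first selects {A, B}, which excludes the overlapping candidate {A, B, C}, and then finds {C, D} among the remaining units. Exhaustive enumeration of subsets is exponential in the number of units, so this form is only practical for very small universes.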

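For any complex S* in its corresponding state s* ∈ ΩS*, overlapping substrates that specify less integrated information ($\varphi_s < \varphi_s^*$) are excluded. Consequently, specifying a maximum of integrated information compared to all overlapping systems

$$\varphi_s(\mathcal{T}_e, \mathcal{T}_c, s) > \varphi_s(\mathcal{T}_e, \mathcal{T}_c, s') \quad \forall \, S' \subseteq U, \ S' \neq S, \ S' \cap S \neq \emptyset \tag{26}$$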

is a sufficient requirement for a system S ⊆ U to be a complex.

As described in [13], this recursive search for maximal substrates “condenses” the universe U0 in state $u_0$ into a disjoint (non-overlapping) and exhaustive set of complexes: the first complex, second complex, and so on.

Determining maximal unit grains

Above, we presented how to determine the borders of a complex within a larger system U, assuming a particular grain for the units Ui ∈ U. In principle, however, all possible grains should be considered [33, 34]. In the brain, for example, the grain of units could be brain regions, groups of neurons, individual neurons, sub-cellular structures, molecules, atoms, quarks, or anything finer, down to hypothetical atomic units of cause–effect power [3, 4]. For any unit grain—neurons, for example—the grain of updates could be minutes, seconds, milliseconds, micro-seconds, and so on. However, by the exclusion postulate, the units that constitute a system S must also be definite, in the sense of having a definite grain.

Once again, the grain is defined by the principle of maximal existence: across the possible micro- and macroscopic levels, the “winning” grain is the one that ensures maximally irreducible existence ($\varphi_s^*$) for the entity to which the units belong [33, 34].

To evaluate integrated information across grains requires a mathematical framework for defining coarser (macro) units from finer (micro) units. Such a framework has been developed in previous work [33–35], and is updated here to fully align with the postulates.

Supposing that U = u is a universe of micro units in a state, a macro unit J = j is a combination of a set of micro units $U^J \subseteq U$, and a mapping g from the state of $U^J$ to the state of J,

$$J = (U^J, g),$$

where

$$g : \Omega_{U^J} \rightarrow \Omega_J.$$

As constituents of a complex upon which its cause–effect power rests, the units themselves should comply with the postulates of IIT. Otherwise it would be possible to “make something out of nothing.” Accordingly, units themselves must also be maximally irreducible, as measured by the integrated information of the units when they are treated as candidate systems (φs); otherwise, they would not be units but “disintegrate” into their constituents. However, in contrast to systems, units only need to be maximally irreducible within, because they do not exist as complexes in their own right: a unit J with substrate $U^J$ qualifies as a candidate unit of a larger system S if its integrated information when treated as a candidate system (φs) is higher than that of any system of units (including potential macro units) that can be defined using a subset of $U^J$. Out of all possible sets of such candidate units, the set of (macro) units that define a complex is the one that maximizes the existence of the complex to which the units belong, rather than their own existence.

In practice, the search for the maximal grain should be an iterative process, starting from micro units: identify potential substrates for macro units ($U^J \subseteq U$) that are maximally irreducible within, identify mappings g that maximize the integrated information of systems of macro units, then consider additional potential substrates for macro units, and so on iteratively, until a global maximum is found. The iterative approach is necessary for establishing that a substrate is maximally irreducible within, as this criterion requires consideration not only of micro units, but also of all finer grains (potential meso units defined from subsets of $U^J$).

Here we outlined an overall framework for identifying macro units consistent with the postulates. Additional details about the nature of the mapping g, and how to derive the transition probabilities for a system of macro units are also informed by the postulates (see (11) in S1 Notes).

Unfolding the cause–effect structure of a complex

Once a maximal substrate and the associated maximal cause–effect state have been identified, we must unfold its cause–effect power to reveal its cause–effect structure of distinctions and relations, in line with the composition postulate. As components of the cause–effect structure, distinctions and relations must also satisfy the postulates of IIT (save for composition).

Composition and causal distinctions

Causal distinctions capture how the cause–effect power of a substrate is structured by subsets of units that specify irreducible causes and effects over subsets of its units. A candidate distinction d(m) consists of (1) a mechanism M ⊆ S in state m ∈ ΩM inherited from the system state s ∈ ΩS; (2) a maximal cause–effect state $z^* = \{z^*_c, z^*_e\}$ over the cause and effect purviews (Zc, Ze ⊆ S) linked by the mechanism; and (3) an associated value of irreducibility (φd > 0). A distinction d(m) is thus represented by the tuple

$$d(m) = (m, z^*, \varphi_d). \tag{27}$$

For a given mechanism m, our goal is to identify its maximal cause $Z^*_c$ in state $z^*_c$ and its maximal effect $Z^*_e$ in state $z^*_e$ within the system, where $Z^*_c, Z^*_e \subseteq S$.

As above, in line with existence, intrinsicality, and information, we determine the maximal cause or effect state specified by the mechanism over a candidate purview within the system based on the value of intrinsic information ii(m, z). Next, in line with integration, we determine the value of integrated information φd(m, Z, θ) over the minimum partition θ′. In line with exclusion, we determine the maximal cause–effect purviews for that mechanism over all possible purviews Z ⊆ S based on the associated value of irreducibility φd(m, Z, θ′). Finally, we determine whether the maximal cause–effect state specified by the mechanism is congruent with the system’s overall cause–effect state ($z^*_c \subseteq s'_c$, $z^*_e \subseteq s'_e$), in which case we conclude that it contributes a distinction to the overall cause–effect structure.

The updated formalism to identify causal distinctions within a system S in state s was first presented in [12]. Here we provide a summary with minor adjustments on selecting $z_c^*$ and $z_e^*$, the cause integrated information φc(m, Z), and the requirement that causal distinctions must be congruent with the system’s maximal cause–effect state (see S2 Text).

Existence, intrinsicality, and information: Determining the cause and effect state specified by a mechanism over candidate purviews

Like the system as a whole, its subsets must comply with existence, intrinsicality, and information. As for the system, we begin by quantifying, in probabilistic terms, the difference a subset of units M ⊆ S in its current state m ⊆ s takes and makes from and to subsets of units Z ⊆ S (cause and effect purview). As above, we start by establishing the interventional conditional probabilities and unconstrained probabilities from the TPMs $\mathcal{T}_e$ and $\mathcal{T}_c$.

When dealing with a mechanism constituted by a subset of system units, it is important to capture the constraints on a purview state z that are exclusively due to the mechanism in its state (m), removing any potential contribution from other system units. This is done by causally marginalizing all variables in X = S\M, which corresponds to imposing a uniform distribution as p(X) [8, 10, 12] (see (12) in S1 Notes). The effect probability of a single unit Zi ∈ Z conditioned on the current state m is thus defined as

$$p_e(z_i \mid m) = |\Omega_X|^{-1} \sum_{x \in \Omega_X} p_e(z_i \mid m, x). \tag{28}$$

In addition, product probabilities π(z∣m) are used instead of conditional probabilities pe(z∣m) to discount correlations from units in X = S\M with divergent outputs to multiple units in Z ⊆ S [8, 10, 36]. Otherwise, X might introduce correlations in Z that would be wrongly considered as effects of M. Based on the appropriate TPM, the probability over a set Z of |Z| units is thus defined as the product of the probabilities over individual units

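$$\pi_e(z \mid m) = \prod_{i=1}^{|Z|} p_e(z_i \mid m) \tag{29}$$

and

$$\pi_c(m \mid z) = \prod_{i=1}^{|M|} p_c(m_i \mid z). \tag{30}$$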

Note that for a single unit purview πe(z∣m) = pe(z∣m), and for a single unit mechanism πc(m∣z) = pc(m∣z). By using product probabilities, causal marginalization maintains the conditional independence between units (2) because independent noise is applied to individual connections. The assumption of conditional independence distinguishes IIT’s causal powers analysis from standard information-theoretic analyses of information flow [10, 27] and corresponds to an assumption that variables are “physical” units in the sense that they are irreducible within and can be observed and manipulated independently.
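As an illustration, the causal marginalization and product probabilities described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the three-unit binary system, its noisy logic gates, and the helper names `make_tpm`, `unit_effect_prob`, and `product_effect_prob` are all hypothetical, and unit states are coded 0/1 purely for convenience.

```python
import itertools
import math

def make_tpm():
    """Hypothetical TPM for three binary units (A, B, C).

    tpm[(a, b, c)][i] = P(unit i is ON at t+1 | state (a, b, c) at t).
    Units are conditionally independent given the previous state.
    """
    tpm = {}
    for a, b, c in itertools.product((0, 1), repeat=3):
        tpm[(a, b, c)] = (
            0.9 if (b or c) else 0.1,   # A: noisy OR of B and C
            0.9 if (a and c) else 0.1,  # B: noisy AND of A and C
            0.9 if (a != b) else 0.1,   # C: noisy XOR of A and B
        )
    return tpm

def unit_effect_prob(tpm, unit, z_i, mech, m):
    """p_e(z_i | m): average over all states x of X = S \\ M (uniform p(X))."""
    n = len(next(iter(tpm)))
    others = [u for u in range(n) if u not in mech]
    total = 0.0
    for x in itertools.product((0, 1), repeat=len(others)):
        state = [0] * n
        for u, v in zip(mech, m):
            state[u] = v
        for u, v in zip(others, x):
            state[u] = v
        p_on = tpm[tuple(state)][unit]
        total += p_on if z_i == 1 else 1.0 - p_on
    return total / 2 ** len(others)

def product_effect_prob(tpm, purview, z, mech, m):
    """pi_e(z | m): product of the single-unit probabilities over the purview."""
    return math.prod(
        unit_effect_prob(tpm, u, z_i, mech, m) for u, z_i in zip(purview, z)
    )

tpm = make_tpm()
# Mechanism {A, C} in state (1, 1); purview {A, B} in state (1, 1).
print(product_effect_prob(tpm, (0, 1), (1, 1), (0, 2), (1, 1)))
```

Because each purview unit is marginalized separately before the product is taken, any correlation that a unit outside the mechanism would induce between two purview units is discarded, which is the purpose of using π rather than p.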

From Eqs (29) and (30) we can also define unconstrained probabilities

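$$\pi_e(z; M) = |\Omega_M|^{-1} \sum_{m \in \Omega_M} \pi_e(z \mid m) \tag{31}$$

and

$$\pi_c(m; Z) = |\Omega_Z|^{-1} \sum_{z \in \Omega_Z} \pi_c(m \mid z). \tag{32}$$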

Given the set Y = S\Z, the backward cause probability (selectivity) for a mechanism m with |M| units is computed using Bayes’ rule over the product distributions

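$$\pi_c^{\leftarrow}(z \mid m) = \frac{\prod_{i=1}^{|M|} p_c(m_i \mid z)}{\sum_{\hat{z} \in \Omega_Z} \prod_{i=1}^{|M|} p_c(m_i \mid \hat{z})}, \tag{33}$$

where $p_c(m_i \mid z) = |\Omega_Y|^{-1} \sum_{y \in \Omega_Y} p_c(m_i \mid z, y)$, in line with (28).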

To correctly quantify intrinsic causal constraints, the marginal probability of possible cause states (for computing $\pi_c^{\leftarrow}(z \mid m)$ or $\pi_c(m; Z)$) is again set to the uniform distribution. As above, all probabilities are obtained from the TPMs (3) and (4) and thus correspond to interventional probabilities throughout.
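With a uniform marginal over cause states, the prior cancels in Bayes' rule, so the backward cause probability is the product likelihood normalized over all purview states. The following minimal sketch illustrates this reading. The function name and the input dictionary of likelihoods πc(m∣z) are hypothetical.

```python
def backward_cause_probability(likelihood):
    """Bayes' rule with a uniform prior over cause states z.

    likelihood: dict mapping each purview state z to pi_c(m | z).
    Returns a dict mapping z to the backward probability of z given m.
    """
    total = sum(likelihood.values())
    if total == 0:
        raise ValueError("mechanism state has zero probability under every cause state")
    return {z: p / total for z, p in likelihood.items()}

# Hypothetical likelihoods over a two-unit cause purview.
likelihood = {(0, 0): 0.2, (0, 1): 0.2, (1, 0): 0.2, (1, 1): 0.6}
posterior = backward_cause_probability(likelihood)
print(posterior[(1, 1)])
```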

Having defined cause and effect probabilities, we can now evaluate the intrinsic information of a mechanism m over a purview state z ∈ ΩZ analogously to the system intrinsic information (5) and (7). The intrinsic effect information that a mechanism in a state m specifies about a purview state z is

$$ii_e(m, z) = \pi_e(z \mid m) \, \log\left(\frac{\pi_e(z \mid m)}{\pi_e(z; M)}\right). \tag{34}$$

The intrinsic cause information that a mechanism in a state m specifies about a purview state z is

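$$ii_c(m, z) = \pi_c^{\leftarrow}(z \mid m) \, \log\left(\frac{\pi_c(m \mid z)}{\pi_c(m; Z)}\right). \tag{35}$$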

As with system intrinsic information, the logarithmic term is the informativeness, which captures how much causal power is exerted by the mechanism m on its potential effect z (how much it increases the probability of that state above chance), or by the potential cause z on the mechanism m. The term in front of the logarithm corresponds to the mechanism’s selectivity, which captures how much the causal power of the mechanism m is concentrated on a specific state of its purview (as opposed to other states). In the following we will again focus on the effect side, but an equivalent procedure applies on the cause side (see S1 Fig).

Based on the principle of maximal existence, the maximal effect state of m within the purview Z is defined as

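$$z'_e(m, Z) = \underset{z \in \Omega_Z}{\operatorname{argmax}} \; ii_e(m, z), \tag{36}$$

which corresponds to the specific effect of m on Z. Note that $z'_e$ is not always unique (see S1 Text). The maximal intrinsic information of mechanism m over a purview Z is then

$$ii_e(m, Z) := ii_e(m, z'_e) = \max_{z \in \Omega_Z} ii_e(m, z). \tag{37}$$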

Note that, by this definition, if iie(m, Z) ≠ 0, mechanism m always raises the probability of its maximal effect state compared to the unconstrained probability. This is because there is at least one state z ∈ ΩZ such that πe(z∣m) > πe(z; M).
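The selection of the maximal effect state can be illustrated with a short sketch. It assumes a base-2 logarithm and takes the constrained and unconstrained product probabilities as plain dictionaries. The function names and the numerical values are hypothetical, chosen only to show the selectivity-times-informativeness form.

```python
import math

def intrinsic_effect_information(pi_cond, pi_unc):
    """Return {z: ii_e(m, z)} as selectivity * informativeness (log base 2 assumed).

    pi_cond: dict z -> pi_e(z | m); pi_unc: dict z -> pi_e(z; M).
    """
    return {
        z: (p * math.log2(p / pi_unc[z]) if p > 0 else 0.0)
        for z, p in pi_cond.items()
    }

def maximal_effect_state(pi_cond, pi_unc):
    """Return (list of maximizing states, maximal ii); ties are all reported."""
    ii = intrinsic_effect_information(pi_cond, pi_unc)
    best = max(ii.values())
    ties = [z for z, v in ii.items() if math.isclose(v, best)]
    return ties, best

# Hypothetical two-unit purview: the mechanism favors state (1, 1).
pi_cond = {(0, 0): 0.01, (0, 1): 0.09, (1, 0): 0.09, (1, 1): 0.81}
pi_unc = {z: 0.25 for z in pi_cond}
states, ii_max = maximal_effect_state(pi_cond, pi_unc)
print(states, ii_max)
```

States whose probability is lowered by the mechanism receive negative values and therefore can never be selected when at least one state is raised above its unconstrained probability. Returning all tied states reflects that the maximal state is not always unique.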

The intrinsic information of a candidate distinction, like that of the system as a whole, is sensitive to indeterminism (the same state leading to multiple states) and degeneracy (multiple states leading to the same state) because both factors decrease the probability of the selected state. Moreover, the product of selectivity and informativeness leads to a tension between expansion and dilution: larger purviews tend to increase informativeness because conditional probabilities will deviate more from chance, but they also tend to decrease selectivity because of the larger repertoire of states.
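The tension between expansion and dilution can be made concrete with a toy calculation. The sketch assumes, purely for illustration, that every purview unit is independently driven into its selected state with the same probability p, that the unconstrained probability of each unit state is 0.5, and that logarithms are base 2. None of these values come from the analysis above.

```python
import math

def toy_intrinsic_information(n_units, p=0.85, chance=0.5):
    """ii for a purview of n independent units, each in its selected state with prob. p."""
    selectivity = p ** n_units                           # shrinks with purview size
    informativeness = n_units * math.log2(p / chance)    # grows with purview size
    return selectivity * informativeness

values = {n: toy_intrinsic_information(n) for n in range(1, 13)}
best_n = max(values, key=values.get)
print(best_n, values[best_n])
```

Under these assumptions the product first increases with purview size and then decreases, so an intermediate purview size maximizes intrinsic information.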

Integration: Determining the irreducibility of a candidate distinction

To comply with integration, we must next ask whether the specific effect of m on Z is irreducible. As for the system, we do so by evaluating the integrated information φe(m, Z). To that end, we define a set of “disintegrating” partitions Θ(M, Z) as

$$\Theta(M, Z) = \Big\{ \{(M^{(i)}, Z^{(i)})\}_{i=1}^{k} : k \in \{2, 3, 4, \ldots\},\; M^{(i)} \in \mathbb{P}(M),\; Z^{(i)} \in \mathbb{P}(Z),\; \bigcup_i M^{(i)} = M,\; \bigcup_i Z^{(i)} = Z,\; Z^{(i)} \cap Z^{(j)} = M^{(i)} \cap M^{(j)} = \emptyset \;\; \forall\, i \neq j,\; M^{(i)} = M \implies Z^{(i)} = \emptyset \Big\}, \tag{38}$$

where {M(i)} is a partition of M and {Z(i)} is a partition of Z, but the empty set may also be used as a part ($\mathbb{P}$ denotes the power set). As introduced in [10, 12], a disintegrating partition θ ∈ Θ(M, Z) either “cuts” the mechanism into at least two independent parts if |M| > 1, or it severs all connections between M and Z, which is always the case if |M| = 1 (we refer to [10, 12] for details). Note that disintegrating partitions differ from system partitions (23), which divide the system into two or more parts in a directed manner to evaluate whether and to what extent the system is integrated in terms of its cause–effect power. Instead, disintegrating partitions apply to mechanism–purview pairs within the system, which are already directed, to evaluate the cause or effect power specified by the mechanism over its purview.

Given a partition θ ∈ Θ(M, Z), we can define the partitioned effect probability

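$$\pi_e^{\theta}(z \mid m) = \prod_{i=1}^{k} \pi_e(z^{(i)} \mid m^{(i)}), \tag{39}$$

with $\pi_e(\emptyset \mid m^{(i)}) = \pi_e(\emptyset) = 1$. In the case of $m^{(i)} = \emptyset$, $\pi_e(z^{(i)} \mid \emptyset)$ corresponds to the fully partitioned effect probability

$$\pi_e(z \mid \emptyset) = \prod_{i=1}^{|Z|} \sum_{s \in \Omega_S} p_e(z_i \mid s) \, |\Omega_S|^{-1}. \tag{40}$$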

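The integrated effect information of mechanism m over a purview Z ⊆ S with effect state $z'_e = z'_e(m, Z)$ for a particular partition θ ∈ Θ(M, Z) is then defined as

$$\varphi_e(m, Z, \theta) = \pi_e(z'_e \mid m) \left| \log\left(\frac{\pi_e(z'_e \mid m)}{\pi_e^{\theta}(z'_e \mid m)}\right) \right|_+. \tag{41}$$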

The effect of m on $z'_e$ is reducible if at least one partition θ ∈ Θ(M, Z) makes no difference to the effect probability or increases it compared to the unpartitioned probability. In line with the principle of minimal existence, the total integrated effect information φe(m, Z) again has to be evaluated over θ′, the minimum partition (MIP)

$$\varphi_e(m, Z) := \varphi_e(m, Z, \theta'), \tag{42}$$

which requires a search over all possible partitions θ ∈ Θ(M, Z):

$$\theta' = \underset{\theta \in \Theta(M, Z)}{\operatorname{argmin}} \; \frac{\varphi_e(m, Z, \theta)}{\max_{\mathcal{T}'_S} \varphi_e(m, Z, \theta)}. \tag{43}$$

As in (23), the minimum partition is evaluated against its maximum possible value across all possible system TPMs $\mathcal{T}'_S$, which again corresponds to the number of possible pairwise interactions affected by the partition.
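The search for the minimum partition can be sketched as follows. The sketch assumes a base-2 logarithm and takes, for each candidate partition, the partitioned probability of the selected effect state together with the number of pairwise mechanism-to-purview connections the partition affects, which serves as the normalization. The function names, partition labels, and numbers are hypothetical, and the enumeration of Θ(M, Z) itself is left out.

```python
import math

def integrated_effect_information(p_whole, p_partitioned):
    """phi_e(m, Z, theta): p * positive part of log2(p / p_theta) for the selected state."""
    if p_whole <= 0.0:
        return 0.0
    if p_partitioned == 0.0:
        return math.inf
    return p_whole * max(0.0, math.log2(p_whole / p_partitioned))

def minimum_partition(p_whole, candidates):
    """Pick the partition with the smallest normalized phi; report its unnormalized phi.

    candidates: dict label -> (partitioned probability, number of affected connections).
    """
    def normalized(label):
        p_part, n_cut = candidates[label]
        return integrated_effect_information(p_whole, p_part) / n_cut

    mip = min(candidates, key=normalized)
    return mip, integrated_effect_information(p_whole, candidates[mip][0])

# Hypothetical two-unit mechanism over a two-unit purview.
candidates = {
    "cut one unit from the other's purview": (0.5, 2),
    "cut mechanism from purview entirely": (0.25, 4),
}
print(minimum_partition(0.81, candidates))
```

The normalization only decides which partition is selected; the value reported for φe(m, Z) is the unnormalized integrated information over that partition. A partition that leaves the probability unchanged or raises it yields zero, so the candidate distinction is reducible.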

The integrated cause information is defined analogously, as

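$$\varphi_c(m, Z) = \pi_c^{\leftarrow}(z'_c \mid m) \left| \log\left(\frac{\pi_c(m \mid z'_c)}{\pi_c^{\theta'}(m \mid z'_c)}\right) \right|_+, \tag{44}$$

where the partitioned probability $\pi_c^{\theta'}(m \mid z'_c)$ is again a product distribution over the parts in the partition, as in (39).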

Taken together, the intrinsic information (37) determines what cause or effect state the mechanism m specifies. Its integrated information quantifies to what extent m specifies its cause or effect in an irreducible manner. Again, φ(m, Z) is a quantifier of irreducible existence.

Exclusion: Determining causal distinctions

Finally, to comply with exclusion, a mechanism must select a definite effect purview, as well as a cause purview, out of a set of candidate purviews. Resorting again to the principle of maximal existence, the mechanism’s effect purview and associated effect is the one having the maximum value of integrated information across all possible purviews Z ⊆ S in state $z'_e(m, Z)$ (36)

$$z_e^*(m) = \underset{\{z'_e \,\mid\, Z \subseteq S\}}{\operatorname{argmax}} \; \varphi_e(m, Z = z'_e). \tag{45}$$

The integrated effect information of a mechanism m within S is then

$$\varphi_e(m) := \max_{Z \subseteq S} \varphi_e(m, Z). \tag{46}$$

The integrated cause information φc(m) and the maximally irreducible cause are defined in the same way (see S1 Fig). Based again on the principle of minimal existence, the irreducibility of the distinction specified by a mechanism is given by the minimum of its integrated cause and effect information

$$\varphi_d(m) = \min\big(\varphi_c(m), \varphi_e(m)\big). \tag{47}$$
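The two selection steps, a maximum over purviews followed by a minimum over the cause and effect sides, can be sketched as below. The function names are hypothetical, and the φ values per purview are assumed to have been computed already over each purview's minimum partition.

```python
def select_purview(phi_by_purview):
    """Exclusion: keep the purview with maximal integrated information.

    phi_by_purview: dict purview -> phi(m, Z). Ties resolve to the first entry.
    """
    purview = max(phi_by_purview, key=phi_by_purview.get)
    return purview, phi_by_purview[purview]

def distinction_phi(phi_c_by_purview, phi_e_by_purview):
    """Return (cause purview, effect purview, phi_d), with phi_d = min(phi_c, phi_e)."""
    cause_purview, phi_c = select_purview(phi_c_by_purview)
    effect_purview, phi_e = select_purview(phi_e_by_purview)
    return cause_purview, effect_purview, min(phi_c, phi_e)

# Hypothetical values for a single mechanism.
phi_c = {("A",): 0.2, ("A", "B"): 0.5}
phi_e = {("B",): 0.3, ("A", "B"): 0.1}
print(distinction_phi(phi_c, phi_e))
```

Taking the minimum means that a mechanism with a strong cause but no irreducible effect (or vice versa) has φd = 0 and does not qualify as a candidate distinction.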

Determining the set of causal distinctions that are congruent with the system cause–effect state

As required by composition, unfolding the full cause–effect structure of the system S in state s requires assessing the irreducible cause–effect power of every subset of units within S (Fig 2). Any m ⊆ s with φd > 0 specifies a candidate distinction d(m) = (m, z*, φd) (27) within the system S in state s. However, in order to contribute to the cause–effect structure of a system, distinctions must also comply with intrinsicality and information at the system level. Thus, the fact that the system must select a specific cause–effect state implies that the cause–effect state they specify over subsets of the system ($z^* = \{z_c^*, z_e^*\}$) must be congruent with the cause–effect state specified over itself by the system as a whole, s′.

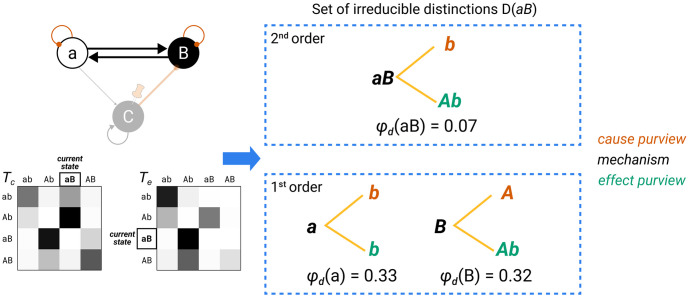

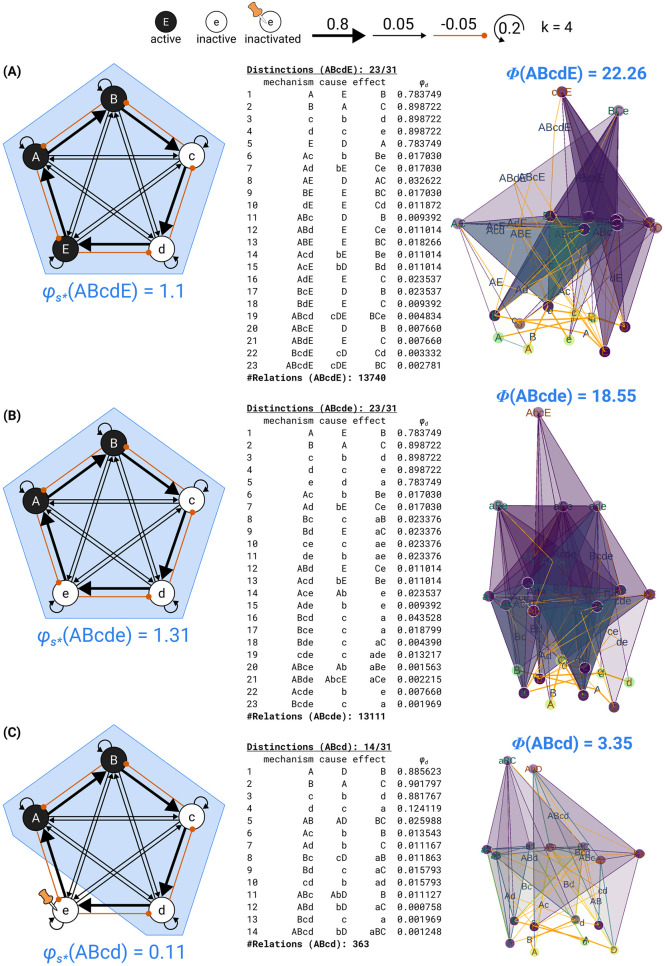

Fig 2. Composition and causal distinctions.

Identifying the irreducible causal distinctions specified by a substrate in a state requires evaluating the specific causes and effects of every system subset. The candidate substrate is constituted of two interacting units S = aB (see Fig 1) with TPMs $\mathcal{T}_e$ and $\mathcal{T}_c$ as shown. In addition to the two first-order mechanisms a and B, the second-order mechanism aB specifies its own irreducible cause and effect, as indicated by φd > 0.

We thus define the set of all causal distinctions within S in state s as

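$$D(s) = \big\{ d(m) : m \subseteq s,\; \varphi_d(m) > 0,\; z_c^*(m) \subseteq s'_c,\; z_e^*(m) \subseteq s'_e \big\}. \tag{48}$$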
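The congruence requirement amounts to a filter over candidate distinctions. The sketch below represents every state as a set of (unit, state) pairs so that congruence becomes a subset test. The `Candidate` type, the `state` helper, and all values are hypothetical, and the candidates are assumed to have been built from mechanisms m ⊆ s.

```python
from typing import FrozenSet, NamedTuple, Tuple

State = FrozenSet[Tuple[str, int]]

class Candidate(NamedTuple):
    mechanism: State  # m, a subset of the system state s
    cause: State      # maximal cause state of the mechanism
    effect: State     # maximal effect state of the mechanism
    phi_d: float

def state(**units):
    """Build a state such as state(A=-1, B=1) as a frozenset of (unit, value) pairs."""
    return frozenset(units.items())

def congruent_distinctions(candidates, s_cause, s_effect):
    """Keep irreducible candidates whose cause and effect agree with the system's."""
    return [
        d for d in candidates
        if d.phi_d > 0 and d.cause <= s_cause and d.effect <= s_effect
    ]

# Hypothetical two-unit system whose maximal cause and effect state is aB.
s_c = s_e = state(A=-1, B=1)
candidates = [
    Candidate(state(A=-1), state(B=1), state(A=-1), 0.3),       # congruent
    Candidate(state(B=1), state(A=-1), state(A=1), 0.2),        # incongruent effect
    Candidate(state(A=-1, B=1), state(A=-1), state(B=1), 0.0),  # reducible
]
print(len(congruent_distinctions(candidates, s_c, s_e)))
```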

Altogether, distinctions can be thought of as irreducible “handles” through which the system can take and make a difference to itself by linking an intrinsic cause to an intrinsic effect over subsets of itself. As components within the system, causal distinctions have no inherent structure themselves. Whatever structure there may be between the units that make up a distinction is not a property of the distinction but due to the structure of the system, and thus captured already by its compositional set of distinctions. Similarly, from an extrinsic perspective, one may uncover additional causes and effects, both within the system and across its borders, at either macro or micro grains. However, from the intrinsic perspective of the system, causes and effects that are excluded from its cause–effect structure do not exist [17, 29].

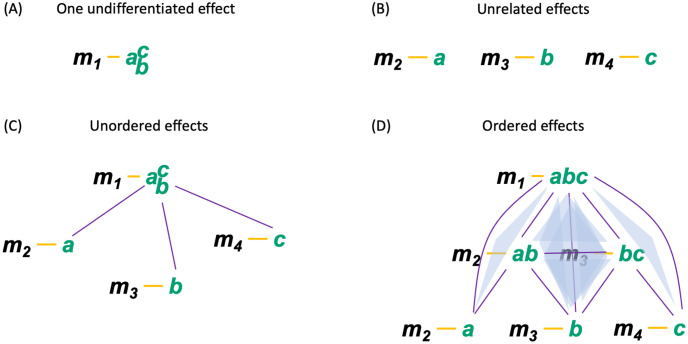

For example, as shown in Fig 3(A), a system may have a mechanism through which it specifies, in a maximally irreducible manner, the effect state of a triplet of units (e.g., abc, a third-order purview; again lowercase letters for units indicate state “−1,” uppercase letters state “+1”). However, if the system lacks a mechanism through which it can specify the effect state of single units, each taken individually (say, unit a, a first-order effect purview), then, from its intrinsic perspective, that unit does not exist as a single unit. By the same token, if the system can specify individually the state of units a, b, and c, but lacks a way to specify irreducibly the state of abc together, then, from its intrinsic perspective, the triplet abc does not exist as a triplet (see Fig 3(B)). Finally, even if the system can distinguish the single units a, b, and c, as well as the triplet abc, if it lacks handles to distinguish pairs of units such as ab and bc, it cannot order units in a sequence.

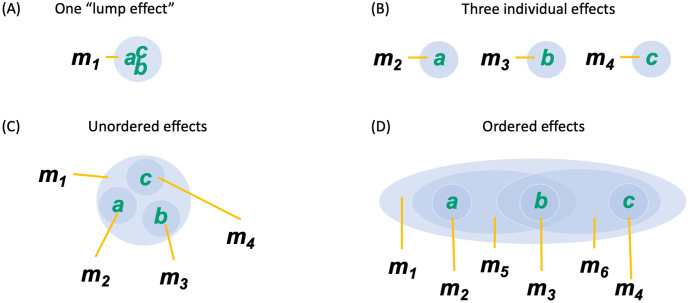

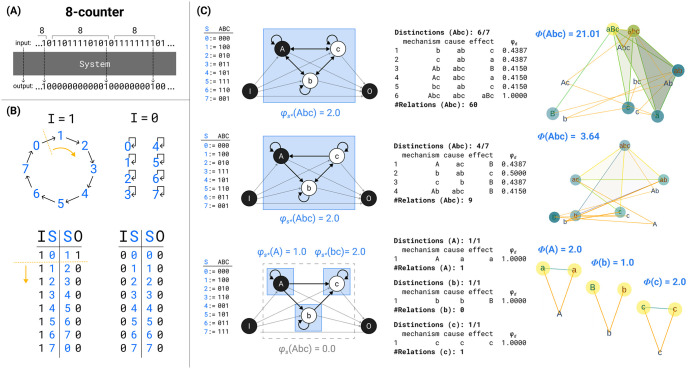

Fig 3. Composition of intrinsic effects.

From the intrinsic perspective of the system, a specific cause or effect is only available to the system if it is selected by a causal distinction d ∈ D(s). In (A), only the top-order effect is specified. From the intrinsic perspective, the system cannot distinguish the individual units. In (B), only first-order effects are specified. The system has no “handle” to select all three units together. (C) If both first- and third-order effects are specified, but no second-order effects, the system can distinguish individual units and select them together, but has no way of ordering them sequentially. (D) The system can distinguish individual units, select them all together, as well as order them sequentially, in the sense that it has a handle for ab and bc, but not ac. The ordering becomes apparent once the relations among the distinctions are considered (see below, Fig 5).

Composition and causal relations

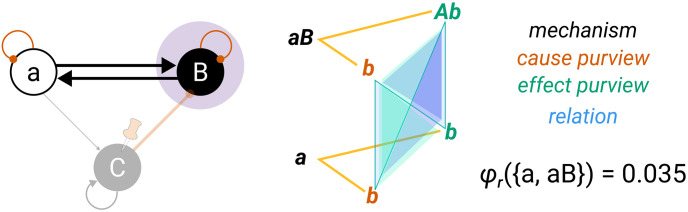

Causal relations capture how the causes and/or effects of a set of distinctions within a complex overlap with each other. Just as a distinction specifies which units/states constitute a cause purview and the linked effect purview, a relation specifies which units/states correspond to which units/states among the purviews of a set of distinctions. Relations thus reflect how the cause–effect power of its distinctions is “bound together” within a complex. The irreducibility due to this binding of cause–effect power is measured by the relations’ irreducibility (φr > 0). Relations between distinctions were first described in [11] (for differences with the initial presentation see S2 Text).

A set of distinctions d ⊆ D(s) is related if the cause–effect state of each distinction d ∈ d overlaps congruently over a set of shared units, which may be part of the cause, the effect, or both the cause and the effect of each distinction. Below we will denote the cause of a distinction d as $z_c^*(d)$ and its effect as $z_e^*(d)$. For a given set of distinctions d ⊆ D(s), there are potentially many “relating” sets of causes and/or effects z such that

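$$\bigcap_{z \in \boldsymbol{z}} z \neq \emptyset, \qquad \big| \boldsymbol{z} \cap \{ z_c^*(d), z_e^*(d) \} \big| \geq 1 \quad \forall\, d \in \boldsymbol{d}, \tag{49}$$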

with maximal overlap

$$o^*(\boldsymbol{z}) = \bigcap_{z \in \boldsymbol{z}} z. \tag{50}$$

Since $z_c^*(d)$ and $z_e^*(d)$ are sets of tuples containing both the units and their states, the intersection operation considers both the units and the state of the units.
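A minimal sketch of this state-sensitive intersection, with causes and effects represented as sets of (unit, state) pairs, is given below. The function name and the example purview states are hypothetical.

```python
from functools import reduce

def overlap(purview_states):
    """Intersect a non-empty list of causes/effects given as sets of (unit, state) pairs."""
    return reduce(frozenset.intersection, purview_states)

# Hypothetical causes/effects of three distinctions.
z1 = frozenset({("a", -1), ("b", +1)})
z2 = frozenset({("b", +1), ("c", +1)})
z3 = frozenset({("b", -1), ("c", +1)})

print(overlap([z1, z2]))  # shares unit b in the same state
print(overlap([z1, z3]))  # shares unit b, but in different states
```

In the second case the purviews share unit b, yet the overlap is empty because the states disagree, so the two distinctions would not be related over that unit.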