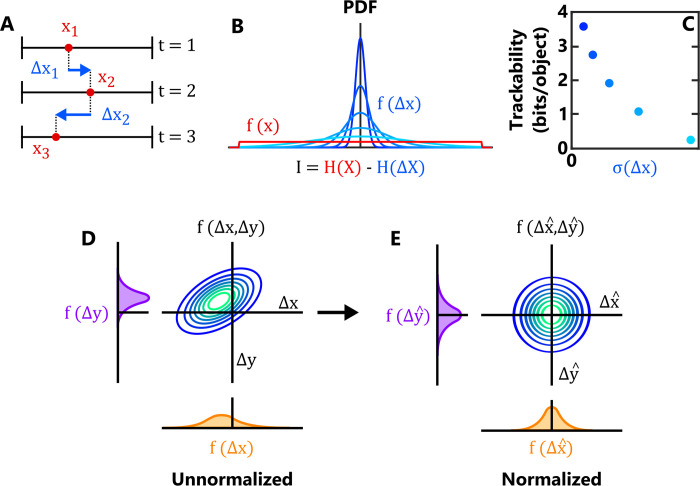

Fig 2. An information-theoretic framework for automated object tracking.

(A) For illustrative purposes, we consider here a theoretical dataset in which an object is characterised using a single feature, its position along the x-axis. The object’s position at three successive timepoints is denoted x1, x2, x3 (red circles), while the displacements between successive timepoints are denoted Δx1, Δx2 (blue arrows). (B) We assume that feature displacements are drawn from a Normal distribution f(Δx), while the instantaneous object position (independent of knowledge of other timepoints) is drawn from a separate Uniform distribution f(x). The information content I of the feature is then calculated as the difference between the entropies of the two distributions, H(X) and H(ΔX), and represents our increase in certainty about the position of the object at time t+1 given knowledge of its position at time t. The trackability quantifies the total amount of information measured for each object, and increases when f(Δx) exhibits less variability relative to f(x). (C) Trackability decreases when f(Δx) is broader (i.e. the feature becomes more ‘noisy’). Here the different colours correspond to the different distributions f(Δx) shown in panel B. (D,E) Illustration of the feature normalisation process for two features. In both D and E, the central plot indicates the joint distribution of a pair of feature displacements, while the left and bottom plots indicate the corresponding marginal distributions. In D, the random variables representing the unnormalised frame-to-frame displacements of the two features, ΔX and ΔY, are correlated and displaced from the origin. Using the joint distribution’s covariance matrix Σ(Δx) and mean vector μ(Δx), the feature space is transformed such that the resulting joint distribution of feature displacements is zero-centred and isotropic (E), ensuring that each feature exhibits an equivalent amount of stochastic variation between frames.
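The quantities in panels B and D,E can be sketched numerically. The following is a minimal illustration, not the authors' implementation. It assumes closed-form differential entropies in nats for the Uniform and Normal distributions, and it uses Cholesky whitening as one possible transform that produces a zero-centred, isotropic joint distribution. The function names `information_content` and `whiten_2d` and their parameters are hypothetical.

```python
import math


def information_content(width, sigma):
    """Sketch of I = H(X) - H(dX), in nats (assumed units).

    width: range of the Uniform distribution f(x) (hypothetical parameter).
    sigma: standard deviation of the Normal distribution f(dx).
    """
    h_x = math.log(width)  # differential entropy of a Uniform distribution
    h_dx = 0.5 * math.log(2.0 * math.pi * math.e * sigma ** 2)  # Normal
    return h_x - h_dx


def whiten_2d(dx, dy):
    """Zero-centre and decorrelate two lists of feature displacements.

    Uses the Cholesky factor L of the sample covariance matrix and solves
    L z = (d - mu) for each sample, so that cov(z) is the identity.
    Assumes the covariance matrix is positive definite.
    """
    n = len(dx)
    mean_x, mean_y = sum(dx) / n, sum(dy) / n
    cx = [v - mean_x for v in dx]
    cy = [v - mean_y for v in dy]

    # Sample covariance matrix entries (n - 1 denominator).
    sxx = sum(a * a for a in cx) / (n - 1)
    syy = sum(b * b for b in cy) / (n - 1)
    sxy = sum(a * b for a, b in zip(cx, cy)) / (n - 1)

    # Cholesky factor L = [[l11, 0], [l21, l22]] of the covariance matrix.
    l11 = math.sqrt(sxx)
    l21 = sxy / l11
    l22 = math.sqrt(syy - l21 ** 2)

    # Forward substitution: solve L z = centred displacement.
    zx = [a / l11 for a in cx]
    zy = [(b - l21 * za) / l22 for b, za in zip(cy, zx)]
    return zx, zy


if __name__ == "__main__":
    # A broader f(dx) carries less information, as described for panel C.
    for sigma in (1.0, 2.0, 5.0):
        print(sigma, information_content(width=100.0, sigma=sigma))
```

In this sketch the information content falls as `sigma` grows for a fixed `width`, which mirrors the trend described for panel C. Other whitening transforms (for example, one based on an eigendecomposition of the covariance matrix) would also yield an isotropic distribution; the caption does not specify which is used.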