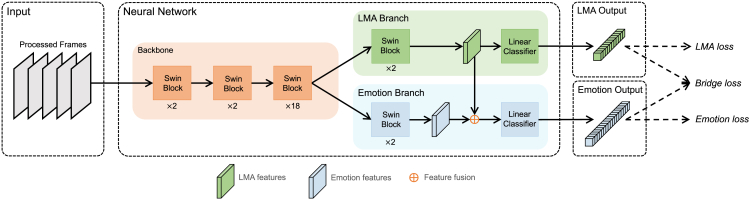

Figure 7. The framework of the proposed MANet.

MANet takes processed frames from video clips as input. The network consists of a shared backbone and two branches: the LMA branch and the emotion branch. Both branches take the backbone output as input, extract branch-specific features, and produce separate LMA and emotion outputs. Notably, the emotion branch includes a fusion step that integrates the emotion features with the LMA features. The network is trained with three loss functions: an LMA loss, a bridge loss, and an emotion loss.
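To make the data flow in Figure 7 concrete, the following is a minimal pure-Python sketch of a two-branch network with a shared backbone, feature fusion inside the emotion branch, and a three-term training loss. It is not the authors' implementation. Every concrete choice here is an assumption: the single dense layer per stage, the layer sizes, temporal average pooling over frames, fusion by concatenation, binary cross-entropy for the LMA and emotion losses, and the loss weights `w_bridge` and `w_emo`. The bridge loss in particular is a hypothetical placeholder (a mean-squared feature-alignment term), because this section does not define it. `MANetSketch` and `total_loss` are illustrative names.

```python
# Hypothetical sketch of a MANet-style two-branch network; NOT the reference
# implementation. Layer shapes, pooling, fusion, and all losses are assumptions.
import math
import random


def init_layer(n_in, n_out, rng):
    """Random dense layer: weight rows (n_out x n_in) and zero biases."""
    w = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return w, [0.0] * n_out


def linear(x, layer):
    """Compute W x + b for a layer stored as (rows, biases)."""
    w, b = layer
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def relu(v):
    return [max(0.0, a) for a in v]


class MANetSketch:
    """Shared backbone + LMA branch + emotion branch (toy sizes)."""

    def __init__(self, in_dim, feat_dim, n_lma, n_emotion, seed=0):
        rng = random.Random(seed)
        self.backbone = init_layer(in_dim, feat_dim, rng)
        self.lma_feat = init_layer(feat_dim, feat_dim, rng)
        self.lma_head = init_layer(feat_dim, n_lma, rng)
        self.emo_feat = init_layer(feat_dim, feat_dim, rng)
        # Emotion head reads [emotion features ; LMA features] (concat fusion assumed).
        self.emo_head = init_layer(2 * feat_dim, n_emotion, rng)

    def forward(self, frames):
        # Backbone applied to each frame, then temporal average pooling (assumed).
        per_frame = [relu(linear(f, self.backbone)) for f in frames]
        shared = [sum(col) / len(per_frame) for col in zip(*per_frame)]
        # Both branches ingest the same backbone output.
        f_lma = relu(linear(shared, self.lma_feat))
        f_emo = relu(linear(shared, self.emo_feat))
        lma_logits = linear(f_lma, self.lma_head)
        # Fusion in the emotion branch: Python list concatenation, not addition.
        emo_logits = linear(f_emo + f_lma, self.emo_head)
        return lma_logits, emo_logits, f_lma, f_emo


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy for multi-label targets in {0, 1}."""
    total = 0.0
    for z, t in zip(logits, targets):
        # Numerically stable form: max(z, 0) - z*t + log(1 + exp(-|z|)).
        total += max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
    return total / len(logits)


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def total_loss(outputs, lma_targets, emo_targets, w_bridge=0.1, w_emo=1.0):
    """LMA loss + weighted bridge loss + weighted emotion loss (weights hypothetical)."""
    lma_logits, emo_logits, f_lma, f_emo = outputs
    loss_lma = bce_with_logits(lma_logits, lma_targets)
    loss_emo = bce_with_logits(emo_logits, emo_targets)
    # Placeholder bridge loss: pull LMA and emotion features toward each other.
    loss_bridge = mse(f_lma, f_emo)
    loss = loss_lma + w_bridge * loss_bridge + w_emo * loss_emo
    return loss, (loss_lma, loss_bridge, loss_emo)


if __name__ == "__main__":
    model = MANetSketch(in_dim=8, feat_dim=16, n_lma=4, n_emotion=3)
    rng = random.Random(1)
    clip = [[rng.random() for _ in range(8)] for _ in range(5)]  # 5 toy frames
    loss, parts = total_loss(model.forward(clip), [1, 0, 1, 0], [0, 1, 0])
    print(loss, parts)
```

The sketch only runs a forward pass and evaluates the combined loss; training would additionally require gradients, which a framework such as PyTorch would supply. Concatenation is just one simple way to fuse the two feature streams, chosen here because it keeps both branches' information visible to the emotion head without any extra parameters.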