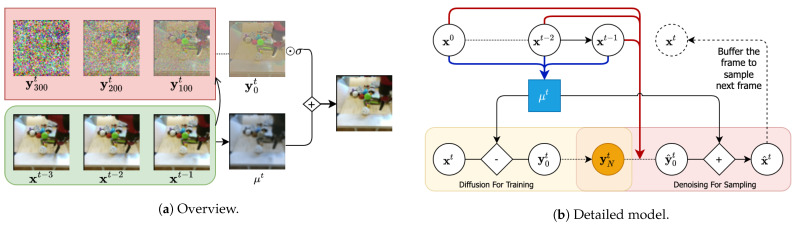

Figure 1.

Overview: Our approach predicts the next frame of a video autoregressively, along with an additive correction generated by a denoising process. Detailed model: Two convolutional RNNs (blue and red arrows) operate on a frame sequence to predict the most likely next frame $\mu^t$ (blue box) and a context vector for a denoising diffusion model. The diffusion model is trained to model the scaled residual $y_0^t = (x^t - \mu^t)/\sigma$ between the observed frame $x^t$ and the estimate $\mu^t$, conditioned on the temporal context. At generation time, the generated residual is scaled and added to the next-frame estimate, producing the next frame as $x^t = \mu^t + \sigma y_0^t$.
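The residual parameterization in the caption can be illustrated with a minimal pure-Python sketch. It assumes frames are flat lists of pixel values and treats $\sigma$ as a fixed scalar. `predict_mean` and `sample_residual` are hypothetical stand-ins: the first for the deterministic next-frame RNN, the second for the context-conditioned diffusion sampler. In this sketch the sampler receives the raw frame history rather than a learned context vector. The sketch covers only the scaling and recombination arithmetic plus the autoregressive loop, not the networks themselves.

```python
import random
from typing import Callable, List, Sequence

Frame = List[float]  # toy stand-in for a video frame: a flat list of pixel values


def scaled_residual(x_t: Sequence[float], mu_t: Sequence[float], sigma: float) -> Frame:
    """Diffusion training target: y0 = (x_t - mu_t) / sigma, elementwise."""
    return [(x - m) / sigma for x, m in zip(x_t, mu_t)]


def compose_frame(mu_t: Sequence[float], y0: Sequence[float], sigma: float) -> Frame:
    """Generation step: x_t = mu_t + sigma * y0, elementwise."""
    return [m + sigma * y for m, y in zip(mu_t, y0)]


def generate(
    context: List[Frame],
    n_frames: int,
    predict_mean: Callable[[List[Frame]], Frame],
    sample_residual: Callable[[List[Frame], Frame], Frame],
    sigma: float,
) -> List[Frame]:
    """Autoregressively roll out n_frames new frames after `context`."""
    frames = [list(f) for f in context]
    for _ in range(n_frames):
        mu_t = predict_mean(frames)         # hypothetical deterministic next-frame predictor
        y0 = sample_residual(frames, mu_t)  # hypothetical context-conditioned residual sampler
        frames.append(compose_frame(mu_t, y0, sigma))  # generated frame feeds the next step
    return frames[len(context):]


if __name__ == "__main__":
    # Toy stand-ins: "predict" by copying the last frame, and draw the residual
    # as i.i.d. Gaussian noise instead of running a trained diffusion model.
    copy_last = lambda history: list(history[-1])
    gaussian_residual = lambda history, mu: [random.gauss(0.0, 1.0) for _ in mu]

    out = generate([[0.5, 0.5, 0.5]], n_frames=3, predict_mean=copy_last,
                   sample_residual=gaussian_residual, sigma=0.1)
    print(len(out), len(out[0]))  # -> 3 3
```

Because `scaled_residual` and `compose_frame` are exact inverses for a fixed $\sigma$, training on $y_0^t$ and generating via $x^t = \mu^t + \sigma y_0^t$ are consistent. Each generated frame is appended to the history, so both the mean prediction and the residual at the next step are conditioned on it.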