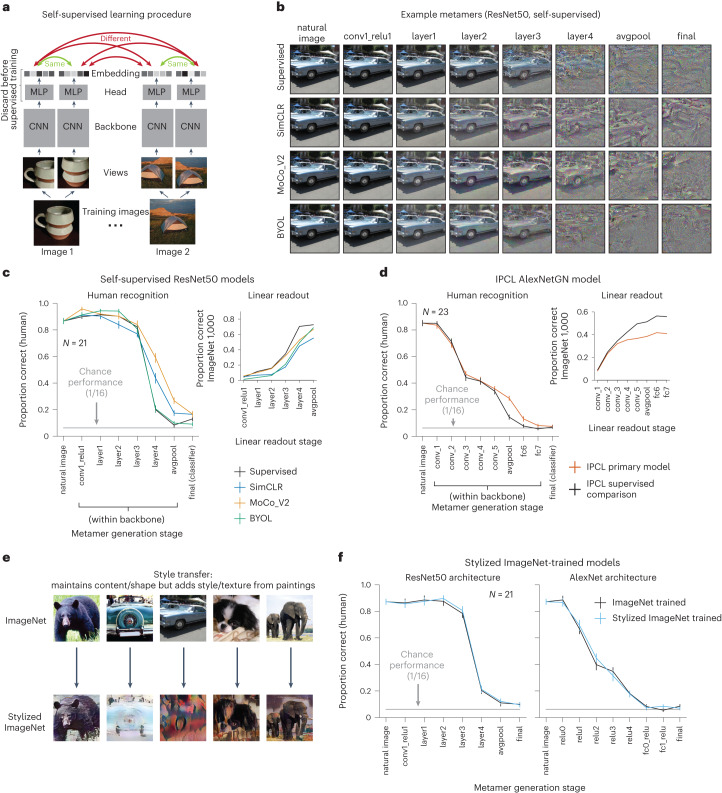

Fig. 3. Model metamers are unrecognizable to humans even with alternative training procedures.

a, Overview of self-supervised learning, inspired by Chen et al. (ref. 38). Each input was passed through a learnable convolutional neural network (CNN) backbone and a multi-layer perceptron (MLP) to generate an embedding vector. Models were trained to map multiple views of the same image to nearby points in the embedding space. Three of the self-supervised models (SimCLR, MoCo_V2 and BYOL) used a ResNet50 backbone. The other self-supervised model (IPCL) had an AlexNet architecture modified to use group normalization. In both cases, we tested supervised comparison models with the same architecture. The SimCLR, MoCo_V2 and IPCL models also had an additional training objective that explicitly pushed apart embeddings from different images. b, Example metamers from selected stages of ResNet50 supervised and self-supervised models. In all models, late-stage metamers were mostly unrecognizable. c, Human recognition of metamers from supervised and self-supervised models (left; N = 21) along with classification performance of a linear readout trained on the ImageNet1K task at each stage of the models (right). Readout classifiers were trained without changing any of the model weights. For self-supervised models, model metamers from the ‘final’ stage were generated from a linear classifier at the avgpool stage. Model recognition curves of model metamers were close to ceiling, as in Fig. 2, and are omitted here and in later figures for brevity. Here and in d, error bars plot s.e.m. across participants (left) or across three random seeds of model evaluations (right). d, Same as c but for the IPCL self-supervised model and supervised comparison with the same dataset augmentations (N = 23). e, Examples of natural and stylized images using the Stylized ImageNet augmentation. Training models on Stylized ImageNet was previously shown to reduce a model’s dependence on texture cues for classification (ref. 43).
f, Human recognition of model metamers for ResNet50 and AlexNet architectures trained with Stylized ImageNet (N = 21). Removing the texture bias of models by training on Stylized ImageNet did not yield model metamers that were more recognizable than those of the standard model. Error bars plot s.e.m. across participants.
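
The two training pressures described in a (pulling together views of the same image, and pushing apart embeddings of different images) can be illustrated with a minimal sketch of a SimCLR-style normalized-temperature cross-entropy loss. This is an illustration under stated assumptions, not the training code of the models in this figure: the function names, the toy two-dimensional embeddings and the temperature of 0.5 are all hypothetical, and the MoCo_V2, BYOL and IPCL objectives differ in their details (BYOL in particular has no explicit term pushing apart different images).

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_loss(views_a, views_b, temperature=0.5):
    """SimCLR-style contrastive loss (hypothetical helper for illustration).

    views_a[i] and views_b[i] are embeddings of two views of image i.
    Each embedding is attracted to its partner view (numerator) and
    repelled from every other embedding in the batch (denominator).
    """
    n = len(views_a)
    embeddings = list(views_a) + list(views_b)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same image
        pos_logit = cosine(embeddings[i], embeddings[positive]) / temperature
        # Denominator runs over all embeddings except the anchor itself.
        logits = [
            cosine(embeddings[i], embeddings[k]) / temperature
            for k in range(2 * n)
            if k != i
        ]
        log_denominator = math.log(sum(math.exp(x) for x in logits))
        total += log_denominator - pos_logit
    return total / (2 * n)


# Toy embeddings (assumed values): two images, two views each.
views_a = [[1.0, 0.0], [0.0, 1.0]]
views_b = [[1.0, 0.1], [0.1, 1.0]]

aligned = nt_xent_loss(views_a, views_b)           # partner views are nearby
mismatched = nt_xent_loss(views_a, views_b[::-1])  # partner views are far apart
print(aligned < mismatched)
```

In this sketch the loss is lower when the two views of each image map to nearby points than when the pairing is scrambled, which is the behaviour the training objective rewards.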