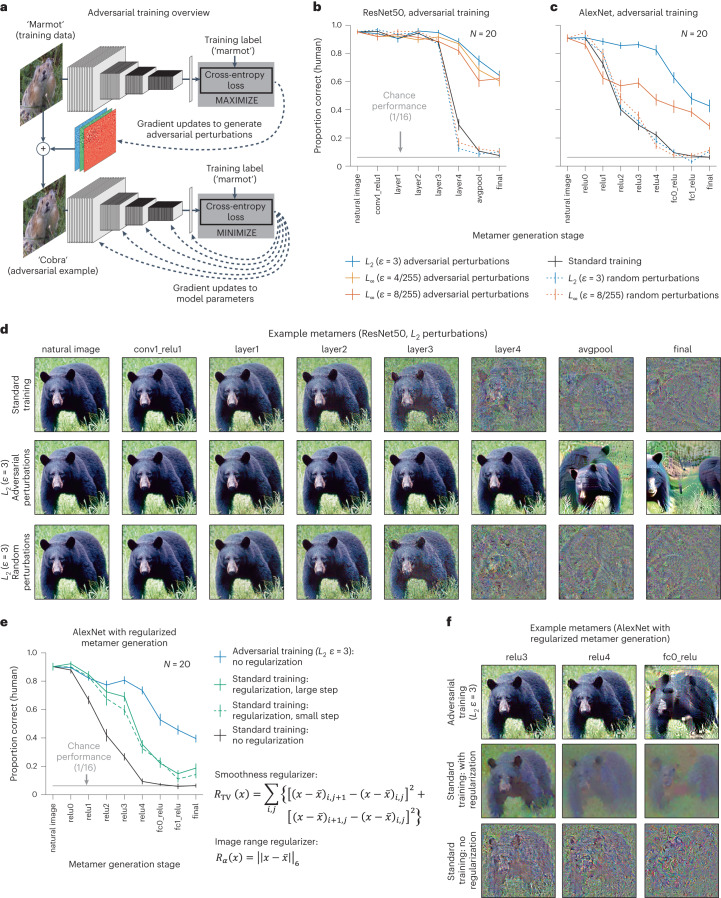

Fig. 4. Adversarial training increases human recognizability of visual model metamers.

a, Adversarial examples are derived at each training step by finding an additive perturbation to the input that moves the classification label away from the training label class (top). These derived adversarial examples are provided to the model as training examples and used to update the model parameters (bottom). The resulting model is subsequently more robust to adversarial perturbations than a model trained with standard training. As a control experiment, we also trained models with random perturbations to the input. b, Human recognition of metamers from ResNet50 models (N = 20) trained with and without adversarial or random perturbations. Here and in c and e, error bars plot s.e.m. across participants. c, Same as b but for AlexNet models (N = 20). In both architectures, adversarial training led to more recognizable metamers at deep model stages (repeated measures analysis of variance (ANOVA) tests comparing human recognition of standard and each adversarial model; significant main effects in each case, $F_{1,19}$ > 104.61, P < 0.0001), although in both cases the metamers remained less than fully recognizable. Random perturbations did not produce the same effect (repeated measures ANOVAs comparing random to adversarial; significant main effect of random versus adversarial for each perturbation of the same type and size, $F_{1,19}$ > 121.38, P < 0.0001). d, Example visual metamers for models trained with and without adversarial or random perturbations. e, Recognizability of model metamers from standard-trained models with and without regularization compared to that for an adversarially trained model (N = 20). Two regularization terms were included in the optimization: a total variation regularizer to promote smoothness and a constraint to stay within the image range (ref. 51). Two optimization step sizes were evaluated. Smoothness priors increased recognizability for the standard model (repeated measures ANOVAs comparing human recognition of metamers with and without regularization; significant main effects for each step size, $F_{1,19}$ > 131.8246, P < 0.0001). However, regularized metamers remained less recognizable than those from the adversarially trained model (repeated measures ANOVAs comparing standard model metamers with regularization to metamers from adversarially trained models; significant main effects for each step size, $F_{1,19}$ > 80.8186, P < 0.0001). f, Example metamers for adversarially trained and standard models with and without regularization.
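
The adversarial training loop described in panel a can be illustrated with a small sketch. This is not the authors' implementation: it assumes a toy linear softmax classifier in NumPy instead of ResNet50 or AlexNet, an L-infinity bound on the perturbation, and a signed-gradient ascent search. The function names (`loss_and_input_grad`, `adversarial_perturbation`, `train_step`) and all hyperparameter values are hypothetical and chosen only for illustration.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D vector of logits."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def loss_and_input_grad(W, b, x, label):
    """Cross-entropy loss of a linear softmax classifier and its gradient w.r.t. the input x."""
    p = softmax(W @ x + b)
    loss = -np.log(p[label])
    onehot = np.zeros_like(p)
    onehot[label] = 1.0
    grad_x = W.T @ (p - onehot)
    return loss, grad_x


def adversarial_perturbation(W, b, x, label, eps=0.5, step=0.1, n_steps=10):
    """Search for an additive perturbation (assumed L-infinity bounded by eps)
    that increases the loss on the training label, i.e. pushes the prediction
    away from that label (panel a, top)."""
    delta = np.zeros_like(x)
    for _ in range(n_steps):
        _, g = loss_and_input_grad(W, b, x + delta, label)
        # Signed-gradient ascent step, then projection back into the eps-ball.
        delta = np.clip(delta + step * np.sign(g), -eps, eps)
    return delta


def train_step(W, b, x, label, lr=0.1, **attack_kwargs):
    """One adversarial training step: derive the adversarial example, then
    update the parameters on it by gradient descent (panel a, bottom)."""
    delta = adversarial_perturbation(W, b, x, label, **attack_kwargs)
    x_adv = x + delta
    p = softmax(W @ x_adv + b)
    onehot = np.zeros_like(p)
    onehot[label] = 1.0
    err = p - onehot  # gradient of the loss w.r.t. the logits
    W_new = W - lr * np.outer(err, x_adv)
    b_new = b - lr * err
    return W_new, b_new, delta
```

Under the same assumptions, the random-perturbation control would replace the search in `adversarial_perturbation` with a perturbation drawn at random at the same size, leaving the parameter update unchanged.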