Figure 5.

Reinforcement learning models of mouse escape behavior

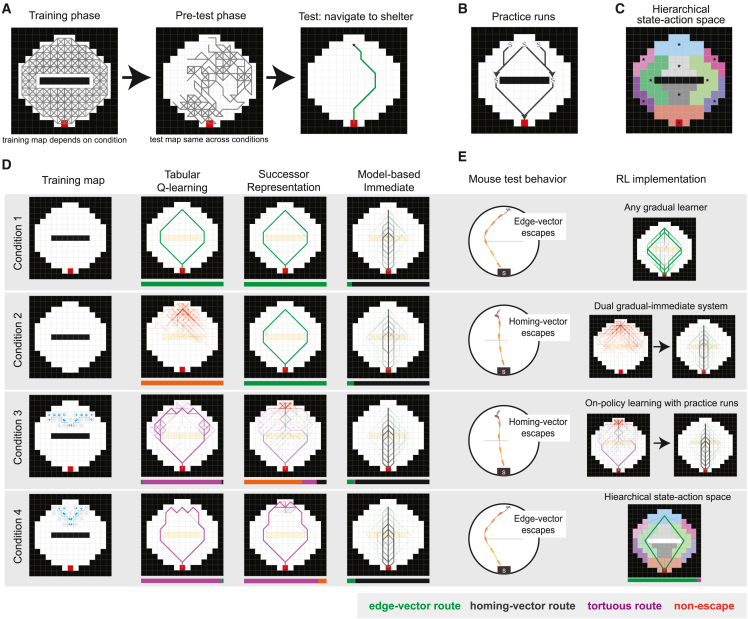

(A) Schematic illustrating the training, pre-test, and testing phases. Gray traces represent paths taken by the RL agents during exploration. Accessible states are white, blocked states are black, and accessible rewarded states are red. Left: the training phase (the map shown is the one used in condition 1), in which agents explore enough for all 100 random seeds to learn a path from the threat zone to the shelter. Middle: a representative exploration trace from the pre-test phase. Right: an example “escape” trajectory from the threat zone (asterisk) to the shelter (red square).

(B) Illustration of the practice runs included in the training phase. Each “S” represents a start point for the hard-coded action sequence, and each arrowhead shows the terminal state. The sequences were triggered with probability p = 0.2 upon entering each start state.
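The triggering rule in (B) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `maybe_practice_run`, the grid coordinates, and the action labels are all hypothetical; only the probability p = 0.2 comes from the legend.

```python
import random

P_TRIGGER = 0.2  # trigger probability stated in the legend


def maybe_practice_run(state, practice_runs, rng):
    """Return the hard-coded action sequence for `state` with
    probability P_TRIGGER; return None if `state` is not a start
    state or the sequence is not triggered on this entry."""
    actions = practice_runs.get(state)
    if actions is not None and rng.random() < P_TRIGGER:
        return actions
    return None


# Hypothetical start state ("S") mapped to a hypothetical action sequence.
practice_runs = {(3, 5): ["up", "up", "right"]}

rng = random.Random(0)
n_entries = 10_000
n_triggered = sum(
    maybe_practice_run((3, 5), practice_runs, rng) is not None
    for _ in range(n_entries)
)
trigger_rate = n_triggered / n_entries  # should be close to 0.2
```

Over many entries into a start state, the empirical trigger rate should approach 0.2, while states that are not start points never trigger a sequence.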

(C) Segmented arena used for the hierarchical state-space agent. Each colored region represents a distinct state. After selecting a neighboring high-level region to move to, the agent moves from its current location to that region's central location, indicated by an asterisk.
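One way to picture the high-level move in (C) is the sketch below. It rests on assumptions that are not in the legend: the region names, adjacency, and center coordinates are hypothetical, and the low-level movement is taken to be a greedy 8-connected grid walk that ignores obstacles. The legend does not specify how the agent actually travels to the central location.

```python
def path_to_center(pos, center):
    """Greedy 8-connected grid walk from `pos` to `center`.
    Assumption for illustration only: obstacles are ignored."""
    x, y = pos
    cx, cy = center
    path = [(x, y)]
    while (x, y) != (cx, cy):
        x += (cx > x) - (cx < x)  # step -1, 0, or +1 toward the center
        y += (cy > y) - (cy < y)
        path.append((x, y))
    return path


# Hypothetical region adjacency and central locations (the asterisks).
neighbors = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
centers = {"A": (1, 1), "B": (4, 1), "C": (4, 5)}


def high_level_step(pos, region, target):
    """Move from `pos` in `region` to the center of a neighboring
    region `target`; return the low-level path and the new region."""
    if target not in neighbors[region]:
        raise ValueError(f"{target} is not a neighbor of {region}")
    return path_to_center(pos, centers[target]), target


path, region = high_level_step((0, 0), "A", "B")
```

The high-level choice is restricted to neighboring regions, so a request to jump from "A" directly to "C" raises an error in this sketch.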

(D) Escape runs from all seeds in all four conditions for the Q-learning, successor representation (SR), and model-based (immediate learner) agents. All trials are superimposed. The bar chart below each plot shows the proportion of each escape type. Edge-vector routes go directly to the obstacle edge; homing-vector routes go directly toward the shelter; tortuous routes go around both the obstacle and the trip wire; non-escapes do not arrive at the shelter. In the training map of conditions 3 and 4, the one-way trip wire is represented by the blue line, and the blue arrows indicate the blocked transitions.

(E) Qualitative mouse behavior for each condition (left) and illustration of the type of RL agent that matches this behavior (right). Condition 1: gradual model-based shown; condition 2: Q-learning and immediate model-based shown; condition 3: SR and immediate model-based shown; condition 4: hierarchical-state-space Q-learning shown.