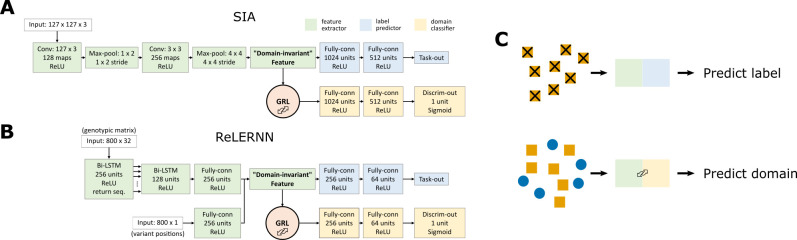

Fig 2. Neural network architecture for domain adaptation.

The model architectures incorporating gradient reversal layers (GRLs) for A) SIA and B) ReLERNN. The feature extractor of SIA contains 1.49 × 10⁵ trainable parameters, whereas the label predictor and domain classifier contain 1.22 × 10⁸ each. The feature extractor of ReLERNN contains 1.52 × 10⁶ trainable parameters, whereas the label predictor and domain classifier contain 1.49 × 10⁵ each. Note that the total number of trainable parameters includes those in batch normalization layers. C) When training the networks, each minibatch of training data consists of two components: (1) labeled data from the source domain, fed through the feature extractor and the label predictor; and (2) a mixture of unlabeled data from both the source and target domains, fed through the feature extractor and the domain classifier. The first component trains the model to perform its designated task. For the second component, however, the GRL reverses the gradient of the loss during backpropagation, discouraging the feature extractor from differentiating the two domains and leading to the extraction of “domain-invariant” features.
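
The effect of a GRL on the second minibatch component can be illustrated with a minimal sketch in plain Python. It assumes a hypothetical scalar toy model (one weight `w` for the feature extractor, one weight `v` for the domain classifier) and is not the SIA or ReLERNN architecture; the function names and the scaling factor `lam` are illustrative assumptions. The layer acts as the identity in the forward pass and flips the sign of the gradient in the backward pass, so the domain classifier is trained normally while the feature extractor is pushed in the opposite direction.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def grl_forward(features):
    """Forward pass of the GRL: the identity function."""
    return features


def grl_backward(grad_output, lam=1.0):
    """Backward pass of the GRL: flip the sign of the incoming gradient.

    lam is an assumed scaling factor for the reversed gradient.
    """
    return -lam * grad_output


def domain_step(w, v, x, domain_label, lam=1.0):
    """Gradients of the domain-classification loss for a scalar toy model.

    Feature extractor:  f = w * x
    Domain classifier:  p = sigmoid(v * GRL(f))
    Loss:               binary cross-entropy between p and domain_label
    Returns (loss, grad_w, grad_v).
    """
    f = w * x
    h = grl_forward(f)
    p = sigmoid(v * h)
    loss = -(domain_label * math.log(p)
             + (1 - domain_label) * math.log(1 - p))

    dloss_dz = p - domain_label          # d(loss)/d(logit), logit z = v * h
    grad_v = dloss_dz * h                # classifier: ordinary gradient
    grad_h = dloss_dz * v                # gradient arriving at the GRL output
    grad_f = grl_backward(grad_h, lam)   # reversed before the extractor
    grad_w = grad_f * x                  # extractor: reversed gradient
    return loss, grad_w, grad_v
```

With `w=0.5`, `v=2.0`, `x=1.0`, and `domain_label=1`, a gradient-descent step on `v` improves the domain classifier, whereas the same step on `w` uses a gradient of the opposite sign to the one it would receive without the GRL, making the extracted feature less informative about the domain. Setting `lam=0` switches off the domain signal to the feature extractor entirely.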