Abstract

The convolution operation plays a vital role in a wide range of critical algorithms across various domains, such as digital image processing, convolutional neural networks, and quantum machine learning. In existing implementations, particularly in quantum neural networks, convolution operations are usually approximated by the application of filters with data strides that are equal to the filter window sizes. One challenge with these implementations is preserving the spatial and temporal localities of the input features, specifically for data with higher dimensions. In addition, the deep circuits required to perform quantum convolution with a unity stride, especially for multidimensional data, increase the risk of violating decoherence constraints. In this work, we propose depth-optimized circuits for performing generalized multidimensional quantum convolution operations with unity stride targeting applications that process data with high dimensions, such as hyperspectral imagery and remote sensing. We experimentally evaluate and demonstrate the applicability of the proposed techniques by using real-world, high-resolution, multidimensional image data on a state-of-the-art quantum simulator from IBM Quantum.

Keywords: convolution, quantum algorithms, quantum image processing, quantum computing

1. Introduction

Convolution is a common operation that is leveraged in a wide variety of practical applications, such as signal processing [1], image processing [2], and most recently, convolutional neural networks [3]. However, leveraging the widespread utility of convolution operations in quantum algorithms is limited by the lack of a systematic, generalized implementation of quantum convolution. Specifically, contemporary quantum circuits for performing quantum convolution with a given filter are designed on a case-by-case basis [4,5,6,7,8]. In other words, implementing a novel convolution filter on a quantum computer is arduous and time-consuming, requiring substantial human effort. Such a workflow is impractical for applications such as quantum convolutional neural networks, which require a generalized, parameterized quantum circuit to iteratively test thousands of unique filters per training cycle.

In this work, we propose a generalizable algorithm for quantum convolution compatible with amplitude-encoded multidimensional data that is able to implement arbitrary multidimensional filters. Furthermore, our proposed technique implements unity stride, which is essential for capturing the totality of local features in input data. We experimentally verify our technique by applying multiple filters on high-resolution, multidimensional images and report the fidelity of the quantum results against the classically computed expectations. The quantum circuits are implemented on a state-of-the-art quantum simulator from IBM Quantum [9] in both noise-free (as a statevector) and noisy environments. Compared to classical CPU- and GPU-based implementations of convolution, we achieve an exponential improvement in time complexity with respect to data size. Additionally, when compared to existing quantum implementations, we achieve improved circuit depth complexity when factoring in the data encoding.

The work is structured as follows. In Section 2, we cover important background information and review the related work. In Section 3, we introduce the proposed quantum convolution circuits and provide analyses of the corresponding circuit depth. In Section 4, we present the experimental setup and results, while in Section 5, we provide discussions of the results and comparisons to related work. Finally, in Section 6, we present our conclusions and potential avenues for future explorations and extensions.

2. Background

In this section, we discuss related work pertinent to quantum convolution. We also present quantum operations that are relevant to convolution, such as quantum data encoding and the quantum shift operation.

2.1. Related Work

Classically, the convolution operation is implemented either directly or by leveraging the fast Fourier transform (FFT). On CPUs, the direct implementation has a time complexity of $O(N^2)$ [10], where N is the data size, while the FFT-based implementation has a time complexity of $O(N \log N)$ [10]. On GPUs, the FFT-based implementation has a similar complexity [11]. It is also common to take advantage of the parallelism offered by GPUs to implement convolution using general matrix multiplications (GEMMs) with $O(N_F N)$ FLOPS [12,13], where $N_F$ is the filter size.

Techniques for performing quantum convolution have previously been reported [4,5,6,7,8]. However, these techniques only use fixed sizes of filter windows for specific filters, e.g., edge detection [4,5,6,7,8]. We will denote such methods as fixed-filter quantum convolution. Reportedly, these methods possess a quadratic circuit depth complexity of in terms of the number of qubits , where N is the size of the input data [4,5,6,7,8]. Because the shortest execution time of classical convolution is in the order of or [12,13] with respect to data size N, authors of the quantum counterparts often claim a quantum advantage [4]. However, the reported depth complexity of fixed-filter quantum convolution does not include the unavoidable overhead of data encoding. Furthermore, there does not exist, to the best of our knowledge, a method for performing generalized, multidimensional quantum convolution.

In reported related work [4,5,6,7,8], data encoding is performed with either the flexible representation of quantum images (FRQI) [14] or novel enhanced quantum representation (NEQR) [15] methods. In these encoding techniques, positional information is stored in the basis quantum states of n qubits, while color information is stored via angle encoding and basis encoding for FRQI and NEQR, respectively. FRQI and NEQR require a total of $n + 1$ and $n + q$ qubits, respectively, where q is the number of qubits used to represent color values, e.g., $q = 8$ for standard grayscale pixel representation. The reported circuit depth complexities of FRQI and NEQR are $O(4^n)$ and $O(qn2^n)$, respectively. When factoring in the depth complexities of either data-encoding technique, it is evident that the referenced fixed-filter quantum convolution techniques should be expected to perform worse than classical implementations.

In [16], the authors propose a method of edge detection based on amplitude encoding and the quantum wavelet transform (QWT), which they denote as quantum Hadamard edge detection (QHED). Although the work utilizes grayscale two-dimensional images, the QHED technique is highly customized for those data and does not easily scale or generalize to data of higher dimensions, such as colored and/or multispectral images. For example, the quantum discriminator operation in their technique is applied over all qubits in the circuit, without distinguishing between qubits representing each dimension, i.e., image rows or columns. Such a procedure not only forgoes parallelism and increases circuit depth but inhibits the algorithm’s ability to be generalized beyond capturing one-dimensional features.

In our proposed work, we achieve an exponential improvement in time complexity compared to classical implementations of convolution with respect to data size. Additionally, when compared to existing quantum convolution implementations, we achieve improved circuit depth complexity when factoring in the data encoding. The contribution of our work is analyzed, experimentally verified, and discussed in detail in Section 5.

2.2. Classical to Quantum (C2Q)

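Our method of quantum convolution leverages amplitude encoding, which encodes N data values directly in the complex probability amplitudes $c_i$ of the positional basis states $\lvert i\rangle$ for an n-qubit state $\lvert\psi\rangle$, where $n = \lceil \log_2 N \rceil$ and $0 \le i < 2^n$, see (1):

| $\lvert\psi\rangle = \sum_{i=0}^{2^n-1} c_i \lvert i\rangle, \quad \sum_{i=0}^{2^n-1} \lvert c_i\rvert^2 = 1$ | (1) |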

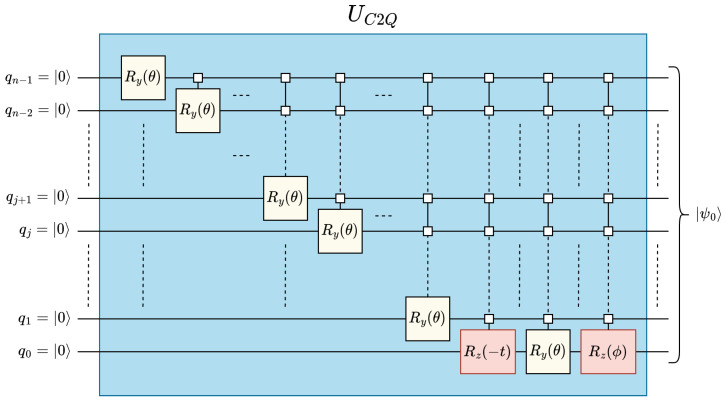

We use the classical-to-quantum (C2Q) [17] data-encoding technique to prepare the amplitude-encoded state $\lvert\psi\rangle$ from the ground state $\lvert 0\rangle^{\otimes n}$, see Figure 1 and (2). The C2Q operation has a circuit depth complexity of $O(2^n)$, a quadratic and linear improvement over FRQI and NEQR, respectively:

| $\lvert\psi\rangle = U_{C2Q} \lvert 0\rangle^{\otimes n}$ | (2) |

Figure 1. Quantum circuit for classical-to-quantum (C2Q) arbitrary state synthesis [17].
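As a minimal numerical sketch of amplitude encoding (not of the C2Q circuit itself), the following NumPy snippet flattens a data array, zero-pads it to the next power of two, and normalizes it so that the squared magnitudes sum to one. The function name `amplitude_encode` and the choice to return the normalization factor are illustrative assumptions and are not taken from [17].

```python
import numpy as np

def amplitude_encode(data):
    """Return (state, n_qubits, norm) for a classical data array.

    Hypothetical helper: zero-pads to a power of two and L2-normalizes,
    so the result is a valid statevector of n_qubits qubits.
    """
    x = np.asarray(data, dtype=complex).ravel()
    if x.size == 0:
        raise ValueError("cannot encode an empty array")
    # Number of qubits needed to index every data value.
    n_qubits = max(1, int(np.ceil(np.log2(x.size))))
    padded = np.zeros(2 ** n_qubits, dtype=complex)
    padded[: x.size] = x
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("cannot encode an all-zero array")
    return padded / norm, n_qubits, norm

state, n_qubits, norm = amplitude_encode([3, 1, 4, 1, 5])
print(n_qubits, np.sum(np.abs(state) ** 2))
```

Keeping the normalization factor allows the original data scale to be restored after the quantum result is read out.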

2.3. Quantum Shift Operation

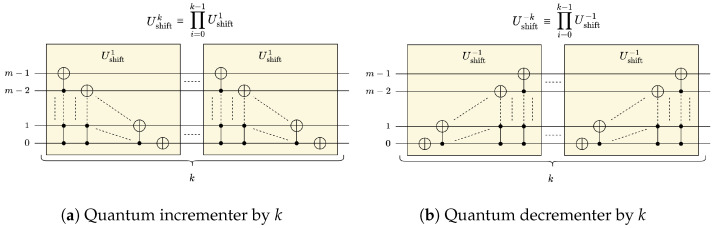

A fundamental operation for quantum convolution is the quantum shift operation, denoted in this work as $U_{\text{shift}}^{k}$, which shifts the basis states of the state vector by k positions when applied to an m-qubit state $\lvert\psi\rangle$, see (3). The quantum shift operation is critical for performing the cyclic rotations needed to prepare strided windows when performing convolution. It is also common for the operation to be described as a quantum incrementer when $k = 1$, see Figure 2a, and a quantum decrementer when $k = -1$ [16,18], see Figure 2b:

| $U_{\text{shift}}^{k} \lvert j\rangle = \lvert (j + k) \bmod 2^m\rangle, \quad 0 \le j < 2^m$ | (3) |

Figure 2. Quantum shift operation using quantum incrementers/decrementers.
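To make the cyclic behavior of the shift operation concrete, the sketch below builds it as a permutation matrix in NumPy. It assumes the convention that a shift by k maps basis state j to (j + k) mod 2^m, so that k = 1 acts as an incrementer and k = -1 as a decrementer; the function name `shift_matrix` is hypothetical. This is a matrix-level illustration only and says nothing about the gate-level construction in Figure 2.

```python
import numpy as np

def shift_matrix(m, k):
    """Permutation matrix sending basis state j to (j + k) mod 2**m."""
    dim = 2 ** m
    u = np.zeros((dim, dim))
    for j in range(dim):
        u[(j + k) % dim, j] = 1.0
    return u

psi = np.arange(8, dtype=float)      # un-normalized amplitudes, for readability
incrementer = shift_matrix(3, 1)
decrementer = shift_matrix(3, -1)

# Under this convention, the amplitude vector is cyclically rotated by k.
print(incrementer @ psi)
print(decrementer @ psi)
```

Because the matrix is a permutation, it is unitary, and the decrementer is the inverse of the incrementer.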

3. Materials and Methods

In general, a convolution operation can be performed using a sequence of shift and multiply-and-accumulate operations. In our proposed methods, we implement the generalized convolution operations as follows:

Shift: Auxiliary filter qubits and controlled quantum decrementers are used to create shifted (unity-strided) replicas of input data.

Multiply-and-accumulate: Arbitrary state synthesis and classical-to-quantum (C2Q) encoding are applied to create generic multidimensional filters.

Data rearrangement: Quantum permutation operations are applied to restructure the fragmented data into one contiguous output datum.

In Section 3.1, we present our quantum convolution technique in detail for one-dimensional data. In the following sections, we illustrate optimizations to improve circuit depth and generalize our method for multidimensional data. For evaluating our proposed methods, we used real-world, high-resolution, black-and-white (B/W) and RGB images, ranging in a resolution from pixels to pixels and pixels to pixels, respectively. We also performed experiments on 1-D real-world audio data and 3-D real-world hyperspectral data to demonstrate our method’s applicability to data and filters of any dimensionality. Further details about our experimental setup and dataset can be found in Section 4.

3.1. Quantum Convolution for One-Dimensional Data

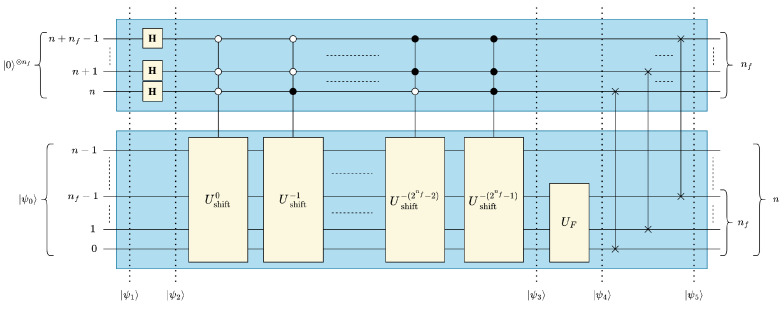

The proposed structure of quantum convolution for one-dimensional (1-D) data is shown in Figure 3. The following sections show the details of the five steps of the convolution operation procedure to transform the initial encoded data to the final state , see Figure 3.

Figure 3. The 1-D quantum convolution circuit.

3.1.1. Shift Operation

To perform convolution with unity stride with a filter of size terms, replicas of the input data must be made, strided for . To store these replicas, we add auxiliary qubits, which we denote as “filter qubits”, to the most significant positions of the initial quantum state , see (4) and Figure 3:

| (4) |

Placing the filter qubits in superposition using Hadamard (H) gates creates identical replicas of the initial data , as shown in (5):

| (5) |

Finally, multiplexed quantum shift operations can be used to generate the strided replicas, see (6):

| (6) |

3.1.2. Multiply-and-Accumulate Operation

For the traditional convolution operation, applying a filter to an array of data produces a scalar output , which can be expressed as . In the quantum domain, we can represent an array of data as the partial state and the normalized filter as . Accordingly, the output state can be expressed as shown in (7):

| (7) |

To calculate from , it is necessary to embed 〈F| into a unitary operation as shown in (8). Since 〈F| is a normalized row vector, we can define as a matrix such that its first row is 〈F| and the remaining rows are arbitrarily determined to preserve the unitariness of such that . From (2), we can realize using an inverse C2Q operation, see (8):

| (8) |
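The shift and multiply-and-accumulate steps can be checked numerically at the statevector level. The sketch below is an illustration under stated assumptions, not the circuit of Figure 3: it uses real-valued filters, builds a unitary whose first row is the normalized filter by QR decomposition (instead of an inverse C2Q circuit), and treats the filter register as the leading axis of a 2-D array. In this layout the desired outputs already sit in one contiguous block, so the SWAP-based rearrangement is not modeled. The names `filter_unitary`, `quantum_conv1d`, and `classical_conv1d` are hypothetical.

```python
import numpy as np

def filter_unitary(filt):
    """Orthogonal matrix whose first row is the L2-normalized real filter."""
    f = np.asarray(filt, dtype=float)
    f = f / np.linalg.norm(f)
    basis = np.eye(f.size)
    basis[:, 0] = f
    q, r = np.linalg.qr(basis)       # first column of q is +/- f
    q = q * np.sign(r[0, 0])         # fix the sign so that it is +f
    return q.T

def quantum_conv1d(data, filt):
    """Statevector-level emulation of unity-stride convolution with wrapping."""
    x = np.asarray(data, dtype=float)
    f = np.asarray(filt, dtype=float)
    x_norm, f_norm = np.linalg.norm(x), np.linalg.norm(f)
    psi = x / x_norm
    # Hadamards on the filter register followed by controlled decrementers:
    # row k holds the data cyclically shifted by -k, scaled by 1/sqrt(N_F).
    replicas = np.stack([np.roll(psi, -k) for k in range(f.size)])
    replicas /= np.sqrt(f.size)
    # Multiply-and-accumulate: the unitary acts on the filter register (rows).
    out = filter_unitary(f) @ replicas
    # Row 0 holds the result; undo the normalizations to recover the scale.
    return out[0] * x_norm * f_norm * np.sqrt(f.size)

def classical_conv1d(data, filt):
    """Reference: y[i] = sum_k filt[k] * data[(i + k) mod N]."""
    x, f = np.asarray(data, float), np.asarray(filt, float)
    return np.array([sum(f[k] * x[(i + k) % x.size] for k in range(f.size))
                     for i in range(x.size)])

data = np.arange(1.0, 9.0)
filt = [1.0, 2.0, 0.0, -1.0]
print(quantum_conv1d(data, filt))
print(classical_conv1d(data, filt))
```

Note that the emulated operation is the sliding inner product (cross-correlation) with wrap-around boundaries, which is the form of convolution commonly used in image filtering.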

3.1.3. Data Rearrangement

As of , the desired values of are fragmented among undesired/extraneous values, which we denote using the symbol “×”. We apply SWAP permutations to rearrange and coalesce our desired values to be contiguous in the final statevector , see (9) and Figure 3:

| (9) |

3.1.4. Circuit Depth Analysis of 1-D Quantum Convolution

When considering the circuit depth complexity of the proposed 1-D quantum convolution technique, it is evident from Figure 3 that the operations described by (5) and (9) are performed using parallel Hadamard and SWAP operations, respectively, and thus are of constant depth complexity, i.e., . In contrast, the and operations incur the largest circuit depth, as they are both serial operations that scale with the data size and/or filter size, see Figure 3.

For the implementation of in Figure 3, there are a total of controlled quantum shift operations, where the i-th shift operation is a quantum decrementer . Since all the operations are performed in series, the circuit depth of depends on the total number of unity quantum decrementers, , see (10):

| (10) |

As shown in Figure 2b, each quantum decrementer acting on m qubits can be realized using m multi-controlled CNOT (MCX) gates, where the i-th MCX gate is controlled by i qubits and . Accordingly, for each quantum decrementer that is controlled by c qubits, its i-th MCX gate is controlled by a total of qubits. Therefore, the depth of the quantum decrementer circuits can be expressed in terms of the MCX gate count as shown in (11):

| (11) |

The depth of an MCX gate with a total of m qubits can be expressed with a linear function in terms of fundamental single-qubit rotation gates and CNOT gates [19] as shown in (12), where represents the first-order coefficient and represents the constant bias term. Thus, the depth complexity of can be expressed as shown in (13):

| (12) |

| (13) |

To derive the circuit depth complexity of , we leverage the definitions of and as shown in (14), where and :

| (14) |

As discussed in Section 3.1.2, we implement the filter operation by leveraging the C2Q arbitrary synthesis operation [17]. Although C2Q incurs a circuit depth of exponential complexity in terms of fundamental quantum gates, as shown in (15), is only applied to qubits, a small subset of the total number of qubits, which somewhat mitigates the circuit depth. Furthermore, in most practical scenarios, the dimensions of the filter are typically much smaller than the dimensions of the input data, i.e., . As a result, should not incur overly large circuit depth relative to other circuit components, e.g., . Altogether, the overall circuit depth complexity of the 1-D quantum convolution operation can be expressed according to (16):

| (15) |

| (16) |

3.2. Depth-Optimized 1-D Quantum Convolution

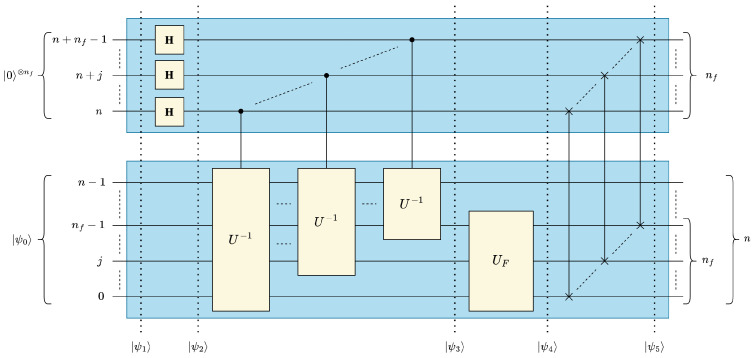

In Figure 4, we present an optimized implementation of that greatly reduces the circuit depth.

Figure 4. Depth-optimized 1-D quantum convolution circuit.

In Section 3.1, we implemented with controlled quantum decrementers , where . We can represent each k in binary notation, as shown in (17), to express as a sequence of controlled shift operations by powers of 2. As shown in (18), we can denote such operations with the notation , where , and reflects that the shift operation is applied to an n-qubit state.

| (17) |

| (18) |

The binary decomposition of the uniformly controlled operations is conducive to several circuit depth optimizations. As shown in (19), the value of is dependent only on the state of the j-th filter qubit . In other words, each can only be controlled by one qubit , independently from the other control qubits. Accordingly, it is possible to coalesce the multiplexed operations across the k control indices. The resultant implementation of , therefore, becomes a sequence of single-controlled operations, where , which comparatively has a smaller circuit depth by a factor of . Furthermore, each operation can be implemented using a single operation in lieu of sequential operations, see (20) and Figure 4, further reducing the depth by a factor of per operation:

| (19) |

| (20) |
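The equivalence behind this optimization can be verified numerically. The sketch below compares the multiplexed form, in which the replica indexed by k is shifted by -k, with the binary-decomposed form, in which each filter qubit j independently controls a shift by a power of two. It is a statevector-level check under the assumption that replicas are stored as rows of an array; the function names are hypothetical.

```python
import numpy as np

def multiplexed_shift(psi, n_filter_qubits):
    """Row k holds psi cyclically shifted by -k (one shift per control value)."""
    return np.stack([np.roll(psi, -k) for k in range(2 ** n_filter_qubits)])

def binary_shift(psi, n_filter_qubits):
    """Same result using one singly controlled shift by 2**j per filter qubit."""
    rows = np.tile(psi, (2 ** n_filter_qubits, 1))
    for j in range(n_filter_qubits):
        for k in range(2 ** n_filter_qubits):
            if (k >> j) & 1:                 # filter qubit j is in state |1>
                rows[k] = np.roll(rows[k], -(2 ** j))
    return rows

psi = np.arange(16, dtype=float)
print(np.array_equal(multiplexed_shift(psi, 3), binary_shift(psi, 3)))
```

The inner loop over k only emulates the effect of a single control qubit on all replicas at once; in a circuit, that corresponds to one controlled operation per filter qubit.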

Circuit Depth Analysis of Optimized 1-D Quantum Convolution

With the aforementioned optimizations, the depth of the updated operation can be expressed in terms of as described by (21), where and for all . In comparison with the depth complexity of the unoptimized , see (14), the dominant term remains quadratic, i.e., , in terms of the data qubits n. However, its coefficient is improved exponentially, from to , see (14) and (21). Note that a cubic term in terms of the number of filter qubits is introduced in the optimized implementation, see (21). However, when considering the total depth for the optimized 1-D quantum convolution circuit , the term becomes negligible because of , whose complexity is exponential in terms of the number of filter qubits, see (15), (21), and (22):

| (21) |

| (22) |

3.3. Generalized Quantum Convolution for Multidimensional Data and Filters

In this section, we present the quantum circuit of our proposed quantum convolution technique generalized for multidimensional data and filters. Although quantum statevectors are one-dimensional, it is possible to map multidimensional data to a 1-D vector in either row- or column-major order. In this work, we represent multidimensional input data and convolutional filters in a quantum circuit in column-major order. In other words, for d-dimensional data of size data values, the positional information of the i-th dimension is encoded in the to qubits, where . Using this representation, the optimized 1-D quantum convolution circuit shown in Figure 4 can be generalized for d dimensions by “stacking” d 1-D circuits as shown in Figure 5.

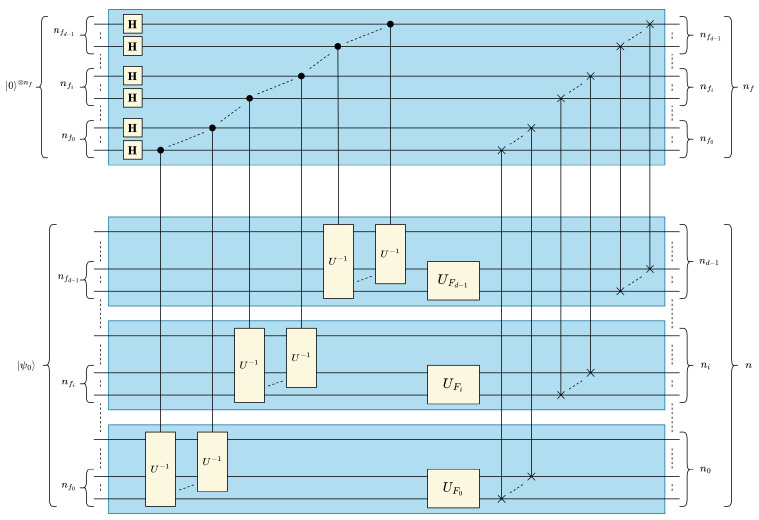

Figure 5. Multidimensional quantum convolution circuit with distributed/stacked 1-D filters.
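The column-major mapping can be illustrated with a small NumPy example. For a 2-D array whose dimensions are powers of two, the flattened index is the concatenation of the per-dimension indices, with the first dimension in the least significant bits, so a cyclic shift along one dimension only touches the bits of that dimension. The array sizes and variable names below are illustrative assumptions.

```python
import numpy as np

rows, cols = 4, 8                     # 2 row qubits, 3 column qubits
n_row_qubits = 2
img = np.arange(rows * cols).reshape(rows, cols)

# Column-major flattening: the row index varies fastest.
flat = img.ravel(order="F")

# The flat index is the column index shifted left by the row-qubit count,
# combined with the row index in the least significant bits.
r, c = 3, 5
idx = (c << n_row_qubits) | r
print(flat[idx] == img[r, c])

# Shifting only along the column dimension is a cyclic shift of the flat
# vector by a multiple of the row count, which leaves the row bits unchanged.
shifted_cols = np.roll(img, -1, axis=1).ravel(order="F")
print(np.array_equal(shifted_cols, np.roll(flat, -rows)))
```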

The “stacked” quantum circuit in Figure 5 is based on the assumption that the overall (lumped) d-dimensional filter operator is separable and decomposable into d one-dimensional filters for . However, it would be more practically useful to generalize our multidimensional quantum convolution technique independently from the separability/decomposability of . For this purpose, the identity in (23), which could be easily derived from either Figure 3 or Figure 4 for 1-D convolution, can be leveraged and generalized for multidimensional convolution circuits, see (24). The identity in (24) allows us to reverse the order of multiply-and-accumulate and data rearrangement steps and, therefore, generate one generic lumped that acts on the contiguous filter qubits, where is the number of qubits representing the filter dimension i for , see Figure 6. can be derived based on the given arbitrary multidimensional filter F using the method discussed in Section 3.1.2 when F is represented as a normalized 1-D vector in a column major ordering:

| (23) |

| (24) |

Figure 6. Generalized multidimensional quantum convolution circuit.
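A lumped, possibly non-separable filter can be emulated in the same statevector-level style for two dimensions. The sketch below is an assumption-laden illustration rather than the circuit of Figure 6: it flattens both the data and the filter in column-major order, forms one shifted replica per filter element, and applies only the first row of the filter unitary (the normalized filter), since that row alone determines the desired outputs. The function name `quantum_conv2d` is hypothetical.

```python
import numpy as np

def quantum_conv2d(data, filt):
    """Emulate unity-stride 2-D convolution with wrapping and a lumped filter."""
    x = np.asarray(data, dtype=float)
    f2d = np.asarray(filt, dtype=float)
    f = f2d.ravel(order="F")                 # column-major filter vector
    x_norm, f_norm = np.linalg.norm(x), np.linalg.norm(f)
    k0_size, k1_size = f2d.shape
    # One replica per filter element, ordered so that k0 varies fastest,
    # matching the column-major ordering of the flattened filter.
    replicas = np.stack([
        np.roll(x, (-k0, -k1), axis=(0, 1)).ravel(order="F")
        for k1 in range(k1_size) for k0 in range(k0_size)
    ]) / (x_norm * np.sqrt(f.size))
    # First row of the filter unitary is the normalized filter.
    out = (f / f_norm) @ replicas
    out = out * x_norm * f_norm * np.sqrt(f.size)
    return out.reshape(x.shape, order="F")

data = np.arange(16, dtype=float).reshape(4, 4) + 1.0
filt = np.array([[1.0, 0.0], [2.0, -1.0]])   # not separable (rank 2)
print(quantum_conv2d(data, filt))
```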

Circuit Depth Analysis of Generalized Multidimensional Quantum Convolution

As a result of the “stacked” structure, the data of all d dimensions can be processed in parallel. Consequently, the circuit depth of the multidimensional quantum circuit becomes dependent on the largest data dimension , where , in lieu of the total data size N. The circuit component of the optimized 1-D circuit with the greatest depth contribution is performed in parallel on each dimension in the generic d-D circuit. Specifically, scales with the number of qubits used to represent the largest data dimension . Note that the parallelization across dimensions applies to the Hadamard and SWAP operations from (5) and (9), see Figure 6, and therefore these operations are of constant depth complexity, i.e., $O(1)$. The depth complexity of the multidimensional operation is determined by the total number of elements in the filter , and therefore the C2Q-based implementation of does not benefit from multidimensional stacking. Accordingly, the circuit depth of the generalized multidimensional quantum convolution operation can be derived from (22) and expressed as in (25), where is the number of qubits representing the maximum filter dimension . It is worth mentioning that the generic multidimensional formula in (25) reduces to the 1-D formula in (22) when and :

| (25) |

4. Experimental Setup and Results

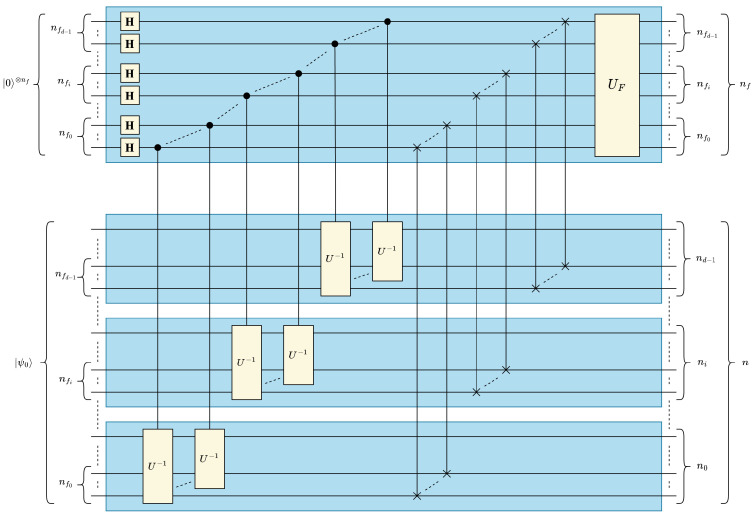

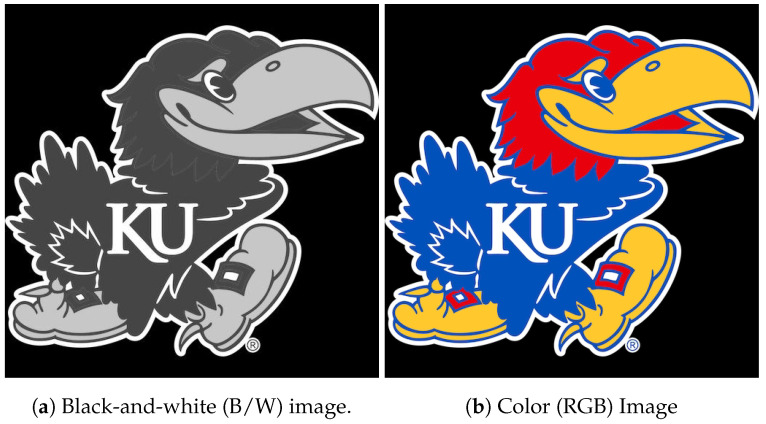

We experimentally demonstrate our proposed technique for generalized, multidimensional quantum convolution with unity stride on real-world, high-resolution, multidimensional image data, see Figure 7. By leveraging the Qiskit SDK (v0.39.4) from IBM Quantum [9], we simulate our quantum circuits in the following formats: (1) classically (to present the ideal/theoretical expectation), (2) noise-free (using statevector simulation), and (3) noisy (using 1,000,000 “shots” or circuit samples). Moreover, we present a quantitative comparison of the obtained results using fidelity [20] as a similarity metric between compared results and , see (26). Experiments were performed on a 16-core AMD EPYC 7302P CPU with frequencies up to 3.3 GHz, 128 MB of L3 cache, and access to 256 GB of DDR4 RAM. In our analysis, we evaluated the correctness of the proposed techniques by comparing classical results with noise-free results. We also evaluated the scalability of the proposed techniques for higher-resolution images by comparing the classical results with both the noise-free and noisy results. Finally, we plotted the circuit depth of our techniques with respect to the data size and filter size as shown in Figure 8.

| (26) |
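For readers who wish to reproduce the comparison, a fidelity-style similarity metric between two result vectors can be sketched as follows. The definition used here, the squared magnitude of the normalized inner product, is one common choice and is an assumption; it is not necessarily identical to the expression in (26). The function name `fidelity` is hypothetical.

```python
import numpy as np

def fidelity(a, b):
    """Squared normalized overlap between two vectors (1.0 means identical
    up to a global scale or phase, 0.0 means orthogonal)."""
    a = np.asarray(a, dtype=complex).ravel()
    b = np.asarray(b, dtype=complex).ravel()
    overlap = np.abs(np.vdot(a, b)) ** 2
    return float(overlap / (np.vdot(a, a).real * np.vdot(b, b).real))

reference = np.array([1.0, 2.0, 3.0, 4.0])
print(fidelity(reference, 3.0 * reference))        # same direction
print(fidelity([1.0, 0.0], [0.0, 1.0]))            # orthogonal
```

Because the metric is normalized, it compares the shape of two results and is insensitive to an overall scale factor.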

Figure 7. Real-world, high-resolution, multidimensional images used in experimental trials.

Figure 8. Circuit depth of quantum convolution with respect to data and filter qubits.

In our experiments, we evaluated our techniques using well-known and filters, i.e., Averaging , Sobel edge-detection /, Gaussian blur , and Laplacian of Gaussian blur (Laplacian) , see (27)–(30). We applied zero padding to maintain the size of the filter dimensions at a power of two for quantum implementation. In addition, we used wrapping to resolve the boundary conditions, and we restricted the magnitude of the output between to mitigate quantization errors in the classical domain:

| (27) |

| (28) |

| (29) |

| (30) |
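The zero-padding step can be sketched as follows. The snippet pads each filter dimension up to the next power of two and reports the number of filter qubits per dimension. Placing the original filter in the leading corner of the padded array and the example 3×3 averaging kernel (all entries equal to 1/9) are assumptions made for illustration; the function name `pad_filter_pow2` is hypothetical.

```python
import numpy as np

def pad_filter_pow2(filt):
    """Zero-pad every dimension of a filter to the next power of two.

    Returns the padded filter and the number of qubits per dimension.
    """
    f = np.asarray(filt, dtype=float)
    qubits = [int(np.ceil(np.log2(s))) if s > 1 else 0 for s in f.shape]
    padded = np.zeros(tuple(2 ** q for q in qubits))
    # Copy the original filter into the leading corner of the padded array.
    padded[tuple(slice(0, s) for s in f.shape)] = f
    return padded, qubits

averaging_3x3 = np.full((3, 3), 1.0 / 9.0)
padded, qubits = pad_filter_pow2(averaging_3x3)
print(padded.shape, qubits)
```

The padded entries are zero, so they do not change the multiply-and-accumulate result; they only round the filter register up to a whole number of qubits.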

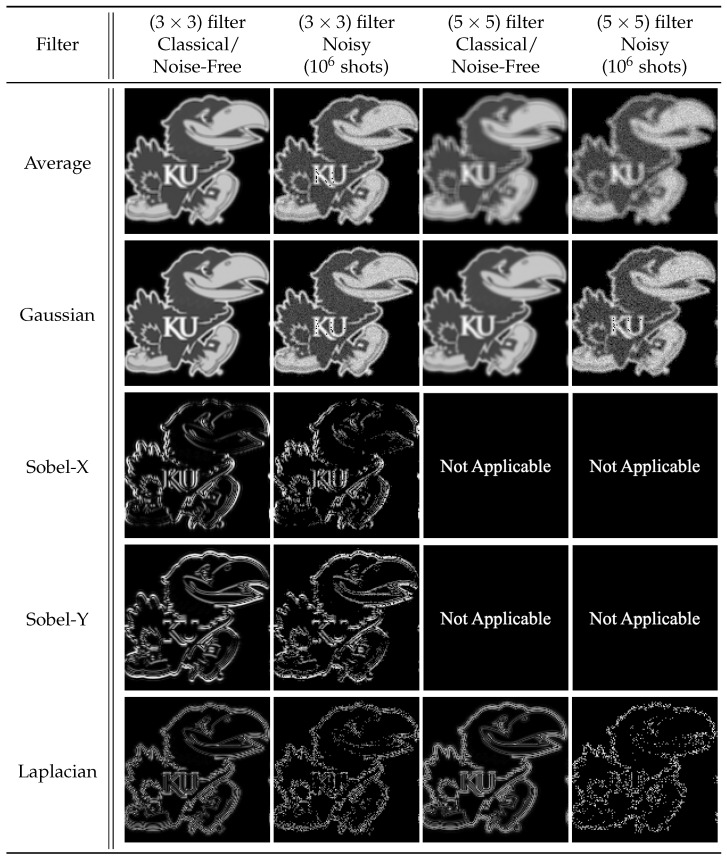

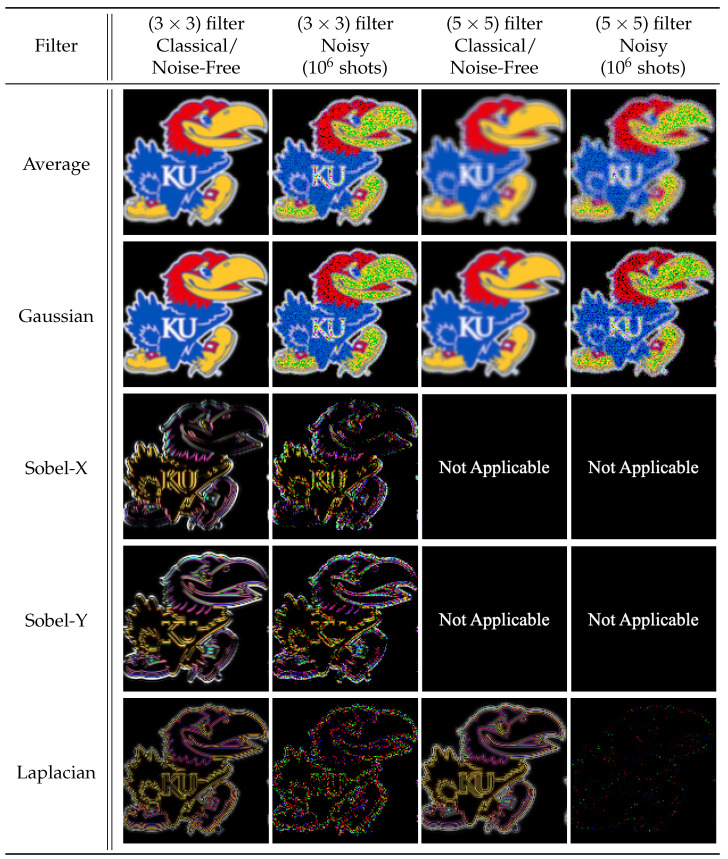

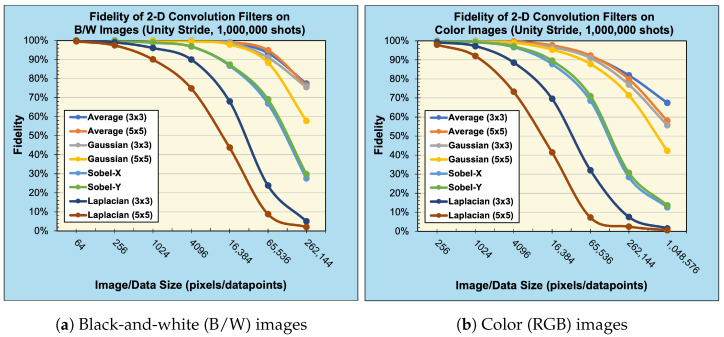

We applied 2-D convolution filters to black-and-white (B/W) and RGB images, see Figure 7, ranging in resolution from pixels to pixels and pixels to pixels, respectively. The number of filter qubits can be obtained by the size of filter dimensions, i.e., qubits for () filters and qubits for () filters. Therefore, our simulated quantum circuits ranged in size () from 10 qubits to 26 qubits. Figure 9 and Figure 10 present the reconstructed output images for classical, noise-free, and noisy experiments using and -pixel input images, respectively.

Figure 9. The 2-D convolution filters applied to a () B/W image.

Figure 10. The 2-D convolution filters applied to a () RGB image.

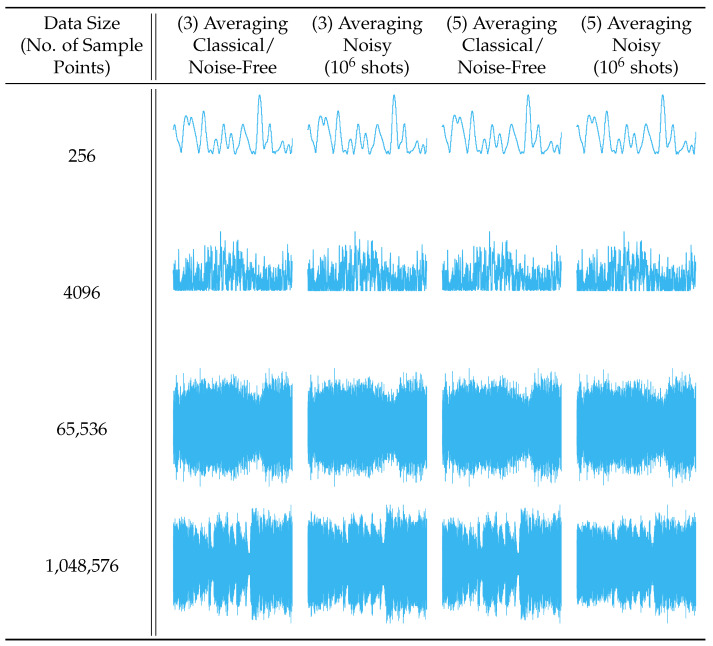

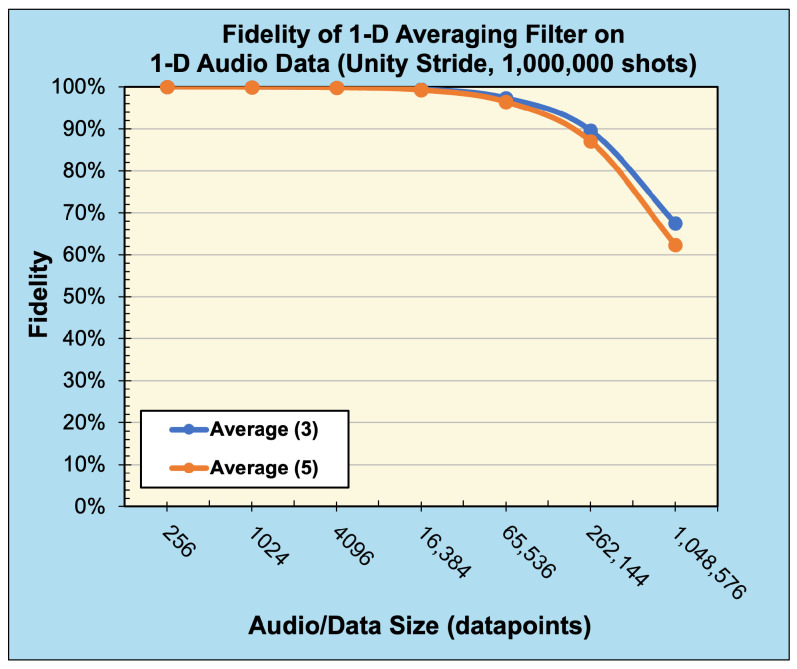

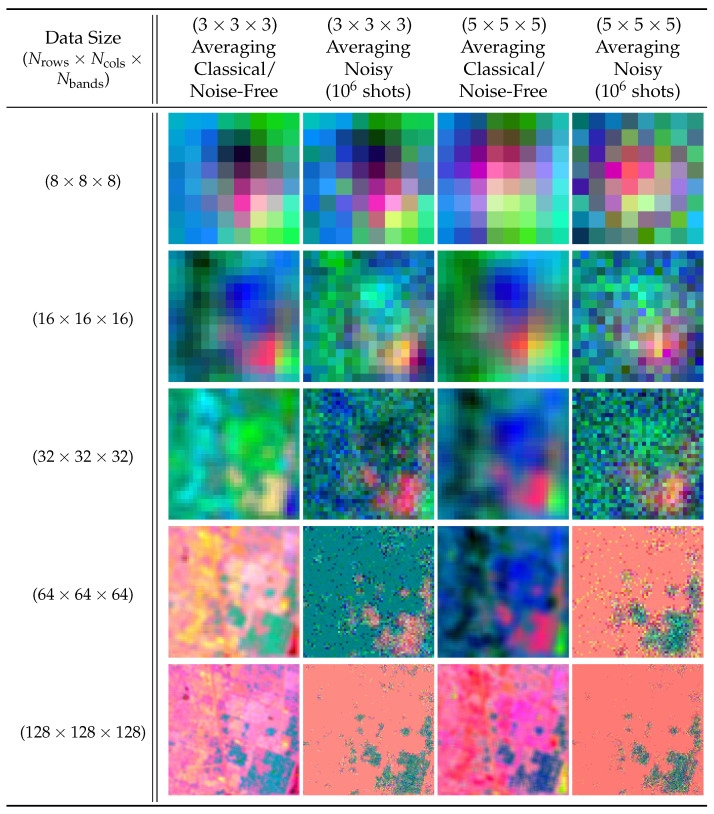

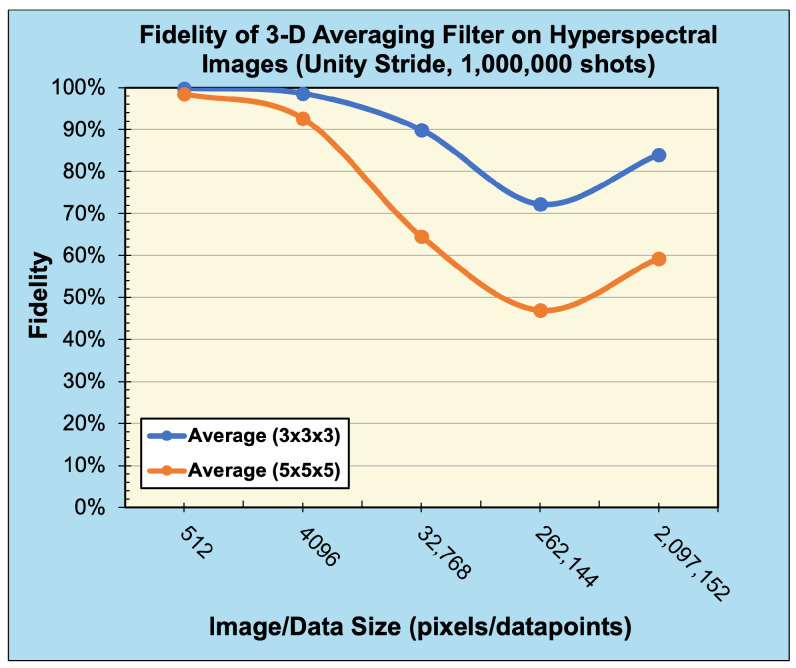

To demonstrate our method’s applicability to data and filters of any dimensionality, we also performed experiments applying the 1-D and 3-D averaging filter to 1-D real-world audio data and 3-D real-world hyperspectral data, respectively. The audio files were sourced from the publicly available sound quality assessment material published by the European Broadcasting Union and modified to be single channel with data sizes ranging from values to values when sampled at 44.1 kHz [21]. Figure 11 and Figure 12 present the reconstructed output images and fidelity, respectively, from applying (3) and (5) averaging filters. The hyperspectral images were sourced from the Kennedy Space Center (KSC) dataset [22] and resized to range from () pixels to () pixels. Figure 13 and Figure 14 present the reconstructed output images and fidelity, respectively, from applying () and () averaging filters.

Figure 11. The 1-D convolution (averaging) filters applied to 1-D audio samples.

Figure 12. Fidelity of 1-D convolution (averaging) filters with unity stride on 1-D audio data (sampled with 1,000,000 shots).

Figure 13. The 3-D convolution (averaging) filters applied to 3-D hyperspectral images (bands 0, 1, and 2).

Figure 14. Fidelity of 3-D convolution (averaging) filters with unity stride on 3-D hyperspectral data (sampled with 1,000,000 shots).

Comparison of the noise-free quantum results against the ideal classical results demonstrates a fidelity across all trials. Thus, in a noise-free (statevector) environment, our proposed quantum convolution technique correctly performs an identical operation to classical convolution given the same input parameters and boundary conditions.

When considering the behavior of noisy (sampled) environments, Figure 12, Figure 14 and Figure 15 plot the fidelity of the noisy quantum results against the ideal classical results. We observe a monotonic decrease in fidelity as the data size (image resolution) increases, consistent with previously reported behavior [23]. This behavior arises because the number of shots required to properly characterize a quantum state, and thus to reduce the effects of statistical noise, increases with the corresponding number of qubits. Notably, the fidelity varies dramatically depending on the filter category and size, where the largest discrepancy occurs between the () Averaging and () Laplacian filters for a data size of values. Specifically, for a B/W image of () pixels, the Averaging filter had a fidelity of 94.84%, while the Laplacian filter had a fidelity of 8.82%, a difference of 86.02 percentage points, see Figure 15a. In general, we observed that the averaging and blur filters perform better than the edge-detection filters (Sobel/Laplacian). Since the output data are reconstructed from only a portion of the final state , it is likely that the outputs of sparse filters, which appear mostly black in Figure 9 and Figure 10, are significantly less likely to be recorded during sampled measurement, resulting in reduced fidelity. For practical applications, dimension reduction techniques, such as pooling, can be used to mitigate information loss [23].

Figure 15. Fidelity of 2-D convolution with unity stride (sampled with 1,000,000 shots).
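The dependence of sampled fidelity on the number of qubits can be explored with a simple shot-noise model. The sketch below is not the simulator configuration used in our experiments: it draws measurement counts from a multinomial distribution, estimates amplitude magnitudes from the relative frequencies, and compares them with the ideal state for randomly generated nonnegative data. The shot count, seed, and the function name `sampled_fidelity` are illustrative assumptions.

```python
import numpy as np

def sampled_fidelity(n_qubits, shots, rng):
    """Fidelity between an ideal nonnegative state and its shot-based estimate."""
    ideal = rng.random(2 ** n_qubits)
    ideal /= np.linalg.norm(ideal)
    # Measurement outcomes follow the squared amplitudes.
    probabilities = ideal ** 2
    probabilities /= probabilities.sum()
    counts = rng.multinomial(shots, probabilities)
    # Estimated magnitudes; basis states never observed are estimated as zero.
    estimate = np.sqrt(counts / shots)
    # Both vectors have unit norm, so the squared overlap is the fidelity.
    return float(np.dot(ideal, estimate) ** 2)

rng = np.random.default_rng(0)
shots = 10_000
results = {n: sampled_fidelity(n, shots, rng) for n in (4, 8, 12, 14)}
for n, value in results.items():
    print(f"{n:2d} qubits: fidelity = {value:.4f}")
```

With a fixed shot budget, larger states leave more basis states unobserved or poorly estimated, which is the statistical effect described above.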

5. Discussion

In the following section, we compare our proposed method of quantum convolution to the related work discussed in Section 2.1 in terms of filter generalization and circuit depth.

5.1. Arbitrary Multidimensional Filtering

Our generalizable and parameterized technique of quantum convolution with unity stride offers distinct workflow advantages over existing fixed-filter quantum convolution techniques in variational applications, such as quantum machine learning. Our technique does not require extensive development for each new filter design. For instance, current quantum convolutional filters are primarily two dimensional, only focusing on image processing. However, the development of even similar filters targeting audio and video processing, for example, would require extensive development and redesign. Our method offers a systematic and straightforward approach for generating practical quantum circuits given fundamental input variables.

5.2. Circuit Depth

Figure 8a,b show the circuit depth for our proposed technique of generalized quantum convolution with respect to the total number of data qubits and the total number of filter qubits , respectively. The results were gathered using the QuantumCircuit.depth() method built into Qiskit for a QuantumCircuit transpiled to fundamental single-qubit and CNOT quantum gates. Figure 8a illustrates quadratic circuit depth complexity with respect to the data qubits n for a fixed filter size , aligning with our theoretical expectation in (25). Note that and for d-dimensional data. Similarly, Figure 8b (plotted on a log-scale) illustrates exponential circuit depth complexity with respect to for a fixed data size , which also aligns with our theoretical expectation in (25).

Table 1 compares the time complexity of our proposed quantum convolution technique against related work. Compared to classical direct implementations on CPUs, our proposed technique for generalized quantum convolution demonstrates an exponential improvement with respect to data size , i.e., vs. , see (25) and Table 1c. As discussed in Section 2.1, the fastest classical GEMM implementation of convolution on GPUs (excluding data I/O overhead) [12,13] has a complexity of , see Table 1c. Even when including quantum data encoding, which is equivalent to data I/O overhead, our method still demonstrates a linear improvement with respect to data size N, by a factor of the filter size , over classical GEMM implementations on GPUs, see (31):

| (31) |

Table 1. Comparison of depth/time complexity of proposed generalized quantum convolution technique against related work.

| a Depth complexity of quantum data encoding (I/O) techniques | |||

| FRQI [14] | NEQR [15] | C2Q [17] | |

| b Depth complexity of quantum convolution algorithms for a fixed filter | |||

| Proposed | Related Work [4,5,6,7,8] | ||

| c Complexity of proposed technique compared to classical convolution | |||

| Proposed | Direct (CPU) [10] | FFT (CPU/GPU) [10,11] | GEMM (GPU) [12,13] |

Compared to fixed-filter quantum convolution techniques [4,5,6,7,8], our proposed arbitrary filter quantum convolution technique (for unity stride) demonstrates improved circuit depth complexity with respect to data size when factoring in the circuit depth contribution from data encoding. For a fixed filter, i.e., is constant, the depth of the proposed method scales quadratically with the largest data dimension , see (25) and (31). As described in Section 2.1, fixed-filter quantum convolution techniques similarly show quadratic depth scaling with respect to the number of qubits n, see Table 1b. For data encoding, the fixed-filter techniques use either the FRQI [14] or NEQR [15] algorithms, which have circuit depth complexities of or , respectively. In contrast, our proposed technique uses C2Q data encoding [17], which has a depth complexity of —a quadratic and linear improvement over FRQI and NEQR, respectively, see Table 1a.

6. Conclusions

In this work, we proposed and evaluated a method for generalizing the convolution operation with arbitrary filtering and unity stride for multidimensional data in the quantum domain. We presented the corresponding circuits and their performance analyses along with experimental results that were collected using the IBM Qiskit development environment. In our experimental work, we validated the correctness of our method by comparing classical results to noise-free quantum results. We also demonstrated the practicality of our method for various convolution filters by evaluating the noisy quantum results. Furthermore, we presented experimentally verified analyses that highlight our technique’s advantages in terms of time complexity and/or circuit depth complexity compared to existing classical and quantum methods, respectively. Future work will focus on adapting our proposed technique for arbitrary strides. In addition, we will investigate multidimensional quantum machine learning as a potential application of our proposed technique.

Acknowledgments

This research used resources of the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725.

Abbreviations

The following abbreviations are used in this manuscript:

| 1-D | One Dimensional |
| C2Q | Classical to Quantum |
| FFT | Fast Fourier Transform |
| FRQI | Flexible Representation of Quantum Images |
| GEMM | General Matrix Multiplication |
| NEQR | Novel Enhanced Quantum Representation |
| QHED | Quantum Hadamard Edge Detection |
| QWT | Quantum Wavelet Transform |

Author Contributions

Conceptualization: M.J., V.J., D.K. and E.E.-A.; Methodology: M.J., V.J., D.K. and E.E.-A.; Software: M.J., D.L., D.K. and E.E.-A.; Validation: M.J., V.J., D.L., D.K. and E.E.-A.; Formal analysis: M.J., V.J., D.L., D.K. and E.E.-A.; Investigation: M.J., A.N., V.J., D.L., D.K., M.C., I.I., M.M.R. and E.E.-A.; Resources: M.J., A.N., V.J., D.L., D.K., M.C., I.I., M.M.R. and E.E.-A.; Data curation: M.J., A.N., V.J., D.L., D.K., M.C., I.I., M.M.R. and E.E.-A.; Writing—original draft preparation: M.J., A.N., V.J., D.L., D.K., M.C., I.I. and E.E.-A.; Writing—review and editing: M.J., A.N., V.J., D.L., D.K., M.C., I.I., M.M.R. and E.E.-A.; Visualization: M.J., A.N., V.J., D.L., D.K., M.C., I.I., M.M.R. and E.E.-A.; Supervision: E.E.-A.; Project administration: E.E.-A.; Funding acquisition: E.E.-A. All authors have read and agreed to the published version of the manuscript.

Data Availability Statement

The audio samples used in this work are publicly available from the European Broadcasting Union at https://tech.ebu.ch/publications/sqamcd (accessed on 19 October 2023) as file 64.flac [21]. The hyperspectral data used in this work are publicly available from the Grupo de Inteligencia Computacional (GIC) at https://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes#Kennedy_Space_Center_(KSC) (accessed on 19 October 2023) under the heading Kennedy Space Center (KSC) [22].

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

References

1. Rhodes W. Acousto-optic signal processing: Convolution and correlation. Proc. IEEE. 1981;69:65–79. doi: 10.1109/PROC.1981.11921.
2. Keys R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981;29:1153–1160. doi: 10.1109/TASSP.1981.1163711.
3. LeCun Y., Kavukcuoglu K., Farabet C. Convolutional networks and applications in vision; Proceedings of the 2010 IEEE International Symposium on Circuits and Systems; Paris, France. 30 May–2 June 2010; pp. 253–256.
4. Fan P., Zhou R.G., Hu W., Jing N. Quantum image edge extraction based on classical Sobel operator for NEQR. Quantum Inf. Process. 2018;18:24. doi: 10.1007/s11128-018-2131-3.
5. Ma Y., Ma H., Chu P. Demonstration of Quantum Image Edge Extration Enhancement Through Improved Sobel Operator. IEEE Access. 2020;8:210277–210285. doi: 10.1109/ACCESS.2020.3038891.
6. Zhang Y., Lu K., Gao Y. QSobel: A novel quantum image edge extraction algorithm. Sci. China Inf. Sci. 2015;58:1–13. doi: 10.1007/s11432-014-5158-9.
7. Zhou R.G., Yu H., Cheng Y., Li F.X. Quantum image edge extraction based on improved Prewitt operator. Quantum Inf. Process. 2019;18:261. doi: 10.1007/s11128-019-2376-5.
8. Li P. Quantum implementation of the classical Canny edge detector. Multimed. Tools Appl. 2022;81:11665–11694. doi: 10.1007/s11042-022-12337-w.
9. IBM Quantum Qiskit: An Open-source Framework for Quantum Computing. 2021. Available online: https://zenodo.org/records/7416349 (accessed on 19 October 2023).
10. Burrus C.S., Parks T.W. DFT/FFT and Convolution Algorithms: Theory and Implementation. 1st ed. John Wiley & Sons, Inc.; Hoboken, NJ, USA: 1991.
11. Podlozhnyuk V. FFT-based 2D convolution. NVIDIA. 2007;32. Available online: https://developer.download.nvidia.com/compute/cuda/1.1-Beta/x86_64_website/projects/convolutionFFT2D/doc/convolutionFFT2D.pdf (accessed on 19 October 2023).
12. NVIDIA Convolution Algorithms. 2023. Available online: https://docs.nvidia.com/deeplearning/performance/dl-performance-convolutional/index.html#conv-algo (accessed on 19 October 2023).
13. NVIDIA CUTLASS Convolution. 2023. Available online: https://github.com/NVIDIA/cutlass/blob/main/media/docs/implicit_gemm_convolution.md (accessed on 19 October 2023).
14. Le P.Q., Dong F., Hirota K. A flexible representation of quantum images for polynomial preparation, image compression, and processing operations. Quantum Inf. Process. 2011;10:63–84. doi: 10.1007/s11128-010-0177-y.
15. Zhang Y., Lu K., Gao Y., Wang M. NEQR: A novel enhanced quantum representation of digital images. Quantum Inf. Process. 2013;12:2833–2860. doi: 10.1007/s11128-013-0567-z.
16. Yao X.W., Wang H., Liao Z., Chen M.C., Pan J., Li J., Zhang K., Lin X., Wang Z., Luo Z., et al. Quantum Image Processing and Its Application to Edge Detection: Theory and Experiment. Phys. Rev. X. 2017;7:031041. doi: 10.1103/PhysRevX.7.031041.
17. El-Araby E., Mahmud N., Jeng M.J., MacGillivray A., Chaudhary M., Nobel M.A.I., Islam S.I.U., Levy D., Kneidel D., Watson M.R., et al. Towards Complete and Scalable Emulation of Quantum Algorithms on High-Performance Reconfigurable Computers. IEEE Trans. Comput. 2023;72:2350–2364. doi: 10.1109/TC.2023.3248276.
18. Li X., Yang G., Torres C.M., Zheng D., Wang K.L. A Class of Efficient Quantum Incrementer Gates for Quantum Circuit Synthesis. Int. J. Mod. Phys. B. 2014;28:1350191. doi: 10.1142/S0217979213501919.
19. Balauca S., Arusoaie A. Efficient Constructions for Simulating Multi Controlled Quantum Gates. In: Groen D., de Mulatier C., Paszynski M., Krzhizhanovskaya V.V., Dongarra J.J., Sloot P.M.A., editors. Proceedings of the Computational Science—ICCS 2022; London, UK. 21–23 June 2022; Cham, Switzerland: Springer; 2022. pp. 179–194.
20. Nielsen M.A., Chuang I.L. Quantum Computation and Quantum Information: 10th Anniversary Edition. Cambridge University Press; Cambridge, UK: 2010. p. 409.
21. Geneva S. Sound Quality Assessment Material: Recordings for Subjective Tests. 1988. Available online: https://tech.ebu.ch/publications/sqamcd (accessed on 19 October 2023).
22. Graña M., Veganzons M.A., Ayerdi B. Hyperspectral Remote Sensing Scenes. Available online: https://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes#Kennedy_Space_Center_(KSC) (accessed on 19 October 2023).
23. Jeng M., Islam S.I.U., Levy D., Riachi A., Chaudhary M., Nobel M.A.I., Kneidel D., Jha V., Bauer J., Maurya A., et al. Improving quantum-to-classical data decoding using optimized quantum wavelet transform. J. Supercomput. 2023;79:20532–20561. doi: 10.1007/s11227-023-05433-7.
