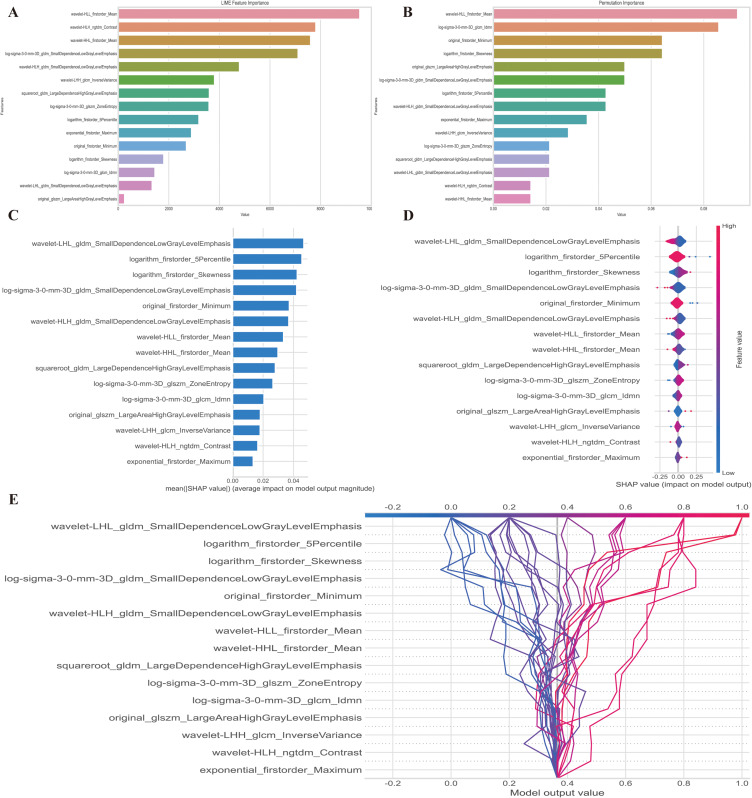

Figure 7.

Model interpretation using the LIME and SHAP frameworks. (A) Feature importance ranked using the LIME technique; (B) Feature importance ranked using permutation feature importance (PFI); (C) Feature relevance ranked by SHAP values computed on the test set. A feature is deemed more important if its average SHAP value is higher; (D) A summary plot illustrating the decision-making process of the radiomics model and the interactions among radiomics features. Each point on the plot represents one patient’s prediction. Positive SHAP values push the prediction toward PS, and larger values correspond to a higher predicted risk of PS; (E) A decision plot showing how the radiomics model arrives at a PS prediction. Starting from the model’s base value, the plot accumulates the SHAP value of each feature from bottom to top, showing the impact of each feature on the overall PS prediction. The discrete dots on the right represent individual feature values, with color indicating their magnitude (red for high, blue for low). The X-axis represents the SHAP value. In binary classification, the SHAP value can be read as the amount by which a feature value shifts the model’s predicted probability, that is, the extent to which each feature value influences the likelihood of a patient having PS. A positive SHAP value indicates an increased probability of PS, while a negative SHAP value indicates a decreased probability of PS (and therefore a higher likelihood of BS).

Abbreviations: LIME, local interpretable model-agnostic explanations; PFI, permutation feature importance; SHAP, SHapley Additive exPlanations.
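
The additive reading of panel (E), in which the base value plus the per-feature SHAP values gives the patient's prediction, can be illustrated with a minimal sketch. This is not the radiomics model or the code used in the study: the logistic `model`, its coefficients, the `background` samples, and the `patient` vector are all hypothetical, and the Shapley values are computed exactly by enumerating feature subsets (feasible only for a handful of features) rather than with the SHAP library. It also assumes the explanation is carried out in probability space.

```python
from itertools import combinations
from math import exp, factorial

def model(x):
    """Hypothetical logistic model returning the probability of the positive class."""
    z = -0.5 + 1.2 * x[0] - 0.8 * x[1] + 0.5 * x[2]
    return 1.0 / (1.0 + exp(-z))

# Hypothetical background samples used to average out "absent" features.
background = [
    (0.0, 1.0, 0.5),
    (1.0, 0.0, 0.2),
    (0.5, 0.5, 1.0),
    (0.2, 0.8, 0.0),
]

def value(x, subset):
    """Mean model output when only the features in `subset` take the patient's values."""
    total = 0.0
    for b in background:
        mixed = tuple(x[i] if i in subset else b[i] for i in range(len(x)))
        total += model(mixed)
    return total / len(background)

def shapley_values(x):
    """Exact Shapley values by enumerating all subsets of the other features."""
    n = len(x)
    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contrib = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for s in combinations(others, k):
                with_i = value(x, set(s) | {i})
                without_i = value(x, set(s))
                contrib += weight * (with_i - without_i)
        phi.append(contrib)
    return phi

patient = (0.9, 0.1, 0.7)            # hypothetical feature vector
base_value = value(patient, set())   # mean prediction over the background
phi = shapley_values(patient)        # one contribution per feature
prediction = model(patient)

# Additivity: base value + sum of contributions recovers the prediction.
print(base_value, phi, prediction)
```

Under these assumptions, a positive entry in `phi` raises the predicted probability relative to `base_value` and a negative entry lowers it, which matches the sign convention described in the caption.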