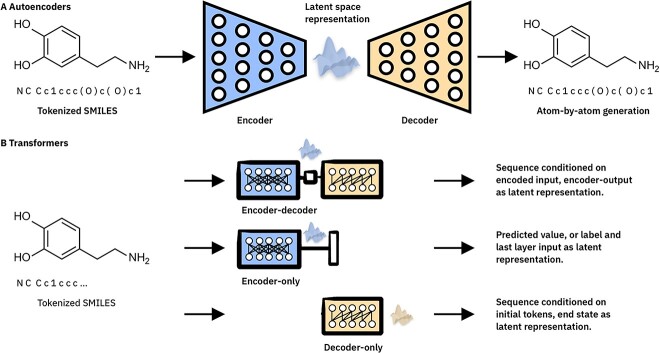

Figure 2.

High-level comparison of machine learning architectures used for unsupervised learning of molecular representations. (A) Autoencoder architectures consist of an encoder, a decoder and a bottleneck layer; the learned embedding representation is taken from the bottleneck layer. (B) Transformer architectures, all of which consist of preprocessing and positional embedding steps followed by multiple sequential encoder and/or decoder blocks. Top: Original sequence-to-sequence or BART-style Transformer, where the encoder output can be aggregated into a learned embedding representation. Middle: Encoder-only BERT-style Transformer, typically fine-tuned on molecular classification/regression tasks, where the output of the encoder module can be used as a learned embedding representation. Bottom: Decoder-only GPT-style Transformer, where the end state can be used as a learned embedding representation.
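
The caption does not specify how encoder outputs are aggregated or how the decoder end state is read out. The minimal sketch below illustrates two common choices under stated assumptions: mean pooling over non-padding tokens for encoder outputs, and selecting the hidden state of the last non-padding token for a decoder-only model. The function names `mean_pool` and `last_token`, and the list-based data layout, are hypothetical and chosen only for illustration.

```python
from typing import List

Vector = List[float]


def mean_pool(hidden_states: List[Vector], mask: List[int]) -> Vector:
    """Average the per-token hidden states where mask == 1 (assumed aggregation)."""
    kept = [h for h, m in zip(hidden_states, mask) if m]
    if not kept:
        raise ValueError("mask selects no tokens")
    dim = len(kept[0])
    return [sum(h[i] for h in kept) / len(kept) for i in range(dim)]


def last_token(hidden_states: List[Vector], mask: List[int]) -> Vector:
    """Return the hidden state of the last non-padding token (assumed end state)."""
    for h, m in zip(reversed(hidden_states), reversed(mask)):
        if m:
            return list(h)
    raise ValueError("mask selects no tokens")


# Toy example: three token positions, two hidden dimensions, last position is padding.
hidden = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]

encoder_embedding = mean_pool(hidden, mask)   # encoder-style aggregation
decoder_embedding = last_token(hidden, mask)  # decoder-style end state
```

Either readout yields one fixed-length vector per molecule regardless of sequence length, which is what allows the embedding to be passed to a downstream classifier or regressor.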