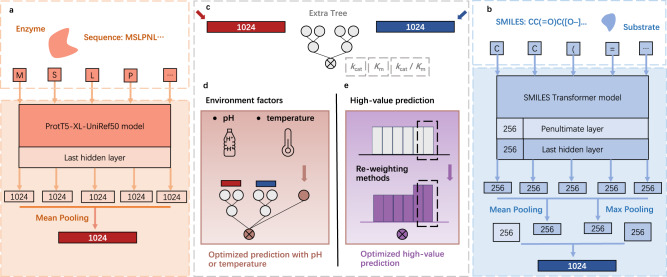

Fig. 1. The overview of UniKP.

a Enzyme sequence representation module: Enzyme information was encoded using a pretrained language model, ProtT5-XL-UniRef50. Each amino acid was converted into a 1024-dimensional vector taken from the last hidden layer, and these per-residue vectors were averaged by mean pooling to give a single 1024-dimensional vector representing the enzyme. b Substrate structure representation module: Substrate information was encoded using a pretrained language model, the SMILES Transformer. The substrate structure was converted into a simplified molecular-input line-entry system (SMILES) string and passed to the pretrained SMILES Transformer to generate a 1024-dimensional vector. This vector was formed by concatenating the mean and max pooling of the last layer with the first outputs of the last and penultimate layers. c Machine learning module: An explainable Extra Trees model took the concatenated enzyme and substrate representation vectors as input and predicted a kcat, Km or kcat/Km value. d EF-UniKP: A framework that takes environmental factors into account to generate an optimized prediction. It was validated on two representative datasets, one for pH and one for temperature. e Various re-weighting methods were used to adjust the sample weight distribution and generate an optimized prediction for the high-value prediction task.
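The pooling and concatenation steps described in panels a to c can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the function names (`mean_pool`, `max_pool`, `enzyme_vector`, `substrate_vector`, `joint_features`) are hypothetical, and the tiny 2-dimensional hidden states are toy stand-ins for the real model outputs. In the actual setting the enzyme vector has 1024 dimensions; a substrate vector of 1024 dimensions would follow from four concatenated pieces if the SMILES Transformer hidden size were 256, which is an assumption here.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]  # one row per token (amino acid or SMILES symbol)


def mean_pool(hidden: Matrix) -> Vector:
    """Average token vectors column-wise into one fixed-length vector."""
    n = len(hidden)
    return [sum(col) / n for col in zip(*hidden)]


def max_pool(hidden: Matrix) -> Vector:
    """Take the column-wise maximum over all token vectors."""
    return [max(col) for col in zip(*hidden)]


def enzyme_vector(last_layer: Matrix) -> Vector:
    """Panel a: mean pooling over per-residue vectors of the last layer."""
    return mean_pool(last_layer)


def substrate_vector(last_layer: Matrix, penultimate_layer: Matrix) -> Vector:
    """Panel b: concatenate mean pool, max pool, and the first outputs
    of the last and penultimate layers (four pieces in total)."""
    return (mean_pool(last_layer) + max_pool(last_layer)
            + last_layer[0] + penultimate_layer[0])


def joint_features(enzyme: Vector, substrate: Vector) -> Vector:
    """Panel c: concatenated input for the downstream regressor."""
    return enzyme + substrate


# Toy hidden states (hypothetical values, hidden size 2 instead of 1024/256).
enzyme_states = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # 3 residues
smiles_last = [[0.0, 1.0], [2.0, 3.0]]                # 2 SMILES tokens
smiles_prev = [[9.0, 8.0], [7.0, 6.0]]                # penultimate layer

enz = enzyme_vector(enzyme_states)
sub = substrate_vector(smiles_last, smiles_prev)
features = joint_features(enz, sub)
print(len(enz), len(sub), len(features))
```

The resulting feature vector would then be passed to an Extra Trees regressor (for example scikit-learn's `ExtraTreesRegressor`), which is omitted here to keep the sketch free of external dependencies.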