Abstract

Background

The emergence of Chat-Generative Pre-trained Transformer (ChatGPT) by OpenAI has revolutionized AI technology, demonstrating significant potential in healthcare and pharmaceutical education, yet its real-world applicability in clinical training warrants further investigation.

Methods

A cross-sectional study was conducted between April and May 2023 to assess PharmD students’ perceptions, concerns, and experiences regarding the integration of ChatGPT into clinical pharmacy education. The study used convenience sampling through online platforms and involved a questionnaire with sections on demographics, perceived benefits, concerns, and experience with ChatGPT. Statistical analysis was performed using SPSS, including descriptive and inferential analyses.

Results

The findings of the study involving 211 PharmD students revealed that the majority of participants were male (77.3%), and had prior experience with artificial intelligence (68.2%). Over two-thirds were aware of ChatGPT. Most students (n= 139, 65.9%) perceived potential benefits in using ChatGPT for various clinical tasks, with concerns including over-reliance, accuracy, and ethical considerations. Adoption of ChatGPT in clinical training varied, with some students not using it at all, while others utilized it for tasks like evaluating drug-drug interactions and developing care plans. Previous users tended to have higher perceived benefits and lower concerns, but the differences were not statistically significant.

Conclusion

Utilizing ChatGPT in clinical training offers opportunities, but students’ lack of trust in it for clinical decisions highlights the need for collaborative human-ChatGPT decision-making. It should complement healthcare professionals’ expertise and be used strategically to compensate for human limitations. Further research is essential to optimize ChatGPT’s effective integration.

Keywords: ChatGPT, perception, clinical training, Pharm-D

Introduction

The emergence of artificial intelligence (AI) has revolutionized various fields, including healthcare and education. Among the latest innovations, the introduction of the Chat-Generative Pre-trained Transformer (ChatGPT) by OpenAI in November 2022 marks a significant stride in AI technology’s evolution. This model has harnessed the power of AI and natural language processing to simulate human-like conversation, offering both transformative potential and challenges across scientific domains, particularly in healthcare.1 While ChatGPT has shown promising capabilities in various medical applications, including some positive outcomes in medical licensing examinations, recent studies, such as the one conducted on the pharmacist licensing examination in Taiwan, highlight the need for careful consideration of its limitations in specific assessment contexts.2,3

Within healthcare, ChatGPT has showcased its ability to generate formal discharge summaries swiftly in response to brief patient profiles. It has also found applications in enhancing radiological decision-making, risk prediction, and even drug discovery processes.4,5 Beyond the technological dimension, ChatGPT extends medical information access to patients, promising personalized healthcare enhancements across diverse clinical disciplines, such as cardiology, endocrinology, and gynecology.6–8

In the sphere of pharmacy, AI-powered systems have captured attention, with potential applications encompassing prescription review, drug counseling, adverse drug reaction (ADR) monitoring, and patient medication education.1 This resonates profoundly with clinical pharmacists, who play a pivotal role in optimizing medication therapy, ensuring patient safety, and offering patient-centered care. The integration of AI-powered tools into clinical pharmacy practices could bolster efficiency, decision-making, and patient outcomes.9 However, despite the promise, the accuracy, reliability, and practical viability of ChatGPT in real-world clinical pharmacy settings have yet to be comprehensively explored.1,10 Previous studies have shown promising results for ChatGPT, but its reliability and accuracy need to be thoroughly validated in real-world healthcare settings. Some studies have highlighted the importance of evaluating ChatGPT’s performance across diverse clinical scenarios to ensure its practical viability.11–13 These investigations assessed ChatGPT’s accuracy using diverse methodologies. Notably, one of them used a set of 20 pharmacotherapy cases to examine both the accuracy and consistency of ChatGPT and found that accuracy rates varied across different time intervals.1,14

Recent research has shed light on ChatGPT’s potential in medical education, ranging from assisting students’ understanding of complex topics to providing tutorials and homework aid.2 Yet, the integration of ChatGPT into pharmacy practice training remains to be investigated. This study aimed to assess Pharm-D students’ perceptions, concerns, and practices of integrating ChatGPT into pharmacy practice training.

Method

Study Design and Setting

This cross-sectional study was conducted between April and May 2023 to assess students’ perceptions, concerns, and practices related to the integration of ChatGPT into clinical pharmacy education. The study included PharmD students studying at the two public pharmacy schools in Jordan. A convenience sampling method was used to select the participants by distributing the study questionnaire through online platforms (Facebook and WhatsApp). This sampling technique was chosen for its broad accessibility among pharmacy students and alignment with their digital communication habits, facilitating efficient data collection. Additionally, because the final-year students were involved in ward rotations, it would not have been feasible to gather all of them in one place to distribute the questionnaires. Those who expressed interest in participating were provided with a link to view the study’s instructions and to provide their electronic consent before proceeding to the survey.

Survey Development

The initial study questionnaire was generated and evaluated for content and face validity by two survey experts. Minor modifications were made based on the notes and feedback provided. The final version of the questionnaire consisted of four parts. The first part comprised demographic characteristics of the study population. The second part included statements to assess students’ perceived benefits of ChatGPT incorporation into their clinical training. The third part included statements to evaluate students’ concerns related to ChatGPT incorporation into their pharmacy training. The last part evaluated students’ experience with the use of ChatGPT in clinical training. A 5-point Likert scale (1: strongly disagree, 2: disagree, 3: neutral, 4: agree, and 5: strongly agree) was used to evaluate the second and third parts. Benefit and concern scores were calculated for each student. Accordingly, the overall score for perceived benefits (16 items) ranged from 16 to 80, and the overall score for perceived concerns (9 items) ranged from 9 to 45.
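The score ranges follow directly from the number of items and the 1 to 5 coding. The following is a minimal Python sketch of that arithmetic, assuming each overall score is a simple sum of item responses (the text does not state the aggregation explicitly) and using the 16 benefit statements in Table 2 and the nine concern statements in Table 3. The names `LIKERT`, `total_score`, and `score_range` are illustrative and are not part of the study materials.

```python
# Likert coding as described in the questionnaire section.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def total_score(responses):
    """Sum the coded responses of one student (assumed aggregation)."""
    return sum(LIKERT[r.lower()] for r in responses)


def score_range(n_items, low=1, high=5):
    """Smallest and largest possible summed score for n_items statements."""
    return n_items * low, n_items * high


benefit_range = score_range(16)  # 16 benefit statements (Table 2)
concern_range = score_range(9)   # 9 concern statements (Table 3)
print(benefit_range, concern_range)
```

Under this assumption, a student who answers "agree" to every benefit statement would receive a benefit score of 64.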

Sample Size Determination

The sample size was estimated using the Raosoft® sample size calculator for online surveys, based on the following formula: $n = P \times (1-P) \times z^2/d^2$. With a margin of error (d) of 10%, a confidence level of 95%, a population size of 800 PharmD students, and a response distribution (P) of 50%, the minimum recommended sample size was 86 participants.
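As a rough check on this figure, the calculation can be sketched in Python. The sketch assumes z = 1.96 for a 95% confidence level and assumes that the calculator applies the standard finite population correction for the 800 students; the correction step is not stated in the text, and the function name `sample_size` is hypothetical.

```python
import math


def sample_size(population, margin, p=0.5, z=1.96):
    """Return (unadjusted n, finite-population-adjusted n rounded up)."""
    # Formula from the text: n = P * (1 - P) * z^2 / d^2
    n0 = p * (1 - p) * z ** 2 / margin ** 2
    # Assumption: standard finite population correction for a known N
    n_adj = n0 / (1 + (n0 - 1) / population)
    return n0, math.ceil(n_adj)


n0, n = sample_size(population=800, margin=0.10)
print(round(n0, 2), n)  # 96.04 86
```

Without the correction the formula alone gives about 96 participants; with it, the result matches the reported minimum of 86.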

Ethical Considerations

Ethical approval for the study was granted by the Institutional Review Board at the Jordan University Hospital (Approval number: 10/2023/16,283). The current study was conducted following the Declaration of Helsinki of the World Medical Association.

Statistical Analysis

The data analysis was conducted using SPSS software, version 25 (IBM Corp., Armonk, NY, USA). Frequencies and percentages were computed for categorical variables. Data normality was examined using the Kolmogorov–Smirnov test and histogram visualizations; the test yielded a significant result (p < 0.05), indicating a non-normal distribution. The Mann–Whitney U-test was used to assess the differences in perceived benefit and concern median scores between ChatGPT users and non-users, and the Kruskal–Wallis test was used for comparisons across more than two groups. A p-value of less than 0.05 was considered statistically significant.
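To illustrate what the Mann–Whitney U-test measures, the following Python sketch computes the U statistic by direct pairwise comparison. It is not the SPSS procedure used in the study, it does not compute a p-value, and the scores in the example are made up for illustration only; they are not study data. The function name `mann_whitney_u` is hypothetical.

```python
def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) using pairwise comparison; ties count as 0.5."""
    u_a = 0.0
    for a in group_a:
        for b in group_b:
            if a > b:
                u_a += 1.0   # a outranks b
            elif a == b:
                u_a += 0.5   # tie shared between the groups
    # The two statistics always sum to len(group_a) * len(group_b)
    u_b = len(group_a) * len(group_b) - u_a
    return u_a, u_b


# Hypothetical benefit scores (NOT taken from the study)
users = [3.1, 3.4, 3.3, 3.8]
non_users = [3.0, 3.2, 3.3]
print(mann_whitney_u(users, non_users))
```

The smaller of the two U values is usually compared against a reference distribution; statistical software derives the p-value from it, which this sketch leaves out.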

Results

Sociodemographic Characteristics

Table 1 provides an overview of the sociodemographic characteristics of the 211 students recruited in this study. The median age of participants was 23 years (IQR= 1.0), and the majority were males (n= 163, 77.3%). In terms of academic level, over half of the respondents were sixth-year students (n= 120, 56.9%). The sample was nearly evenly split, with 50.2% (n= 106) from the University of Jordan and 49.8% (n= 105) from the Jordan University of Science and Technology. Regarding technological familiarity, over two-thirds of participants (n= 144, 68.2%) had prior experience with artificial intelligence. Proficiency in computer and digital technology usage varied, with the majority (n= 180, 85.3%) reporting good to excellent proficiency. Notably, over two-thirds of the participants (n= 145, 68.7%) were aware of ChatGPT.

Table 1.

Sociodemographic Characteristics of the Study Sample (n=211)

| Parameter | Median (IQR) | Frequency (%) |
|---|---|---|
| Age | 23.0 (1.0) | |
| Gender | | |
| ○ Male | | 48 (22.7) |
| ○ Female | | 163 (77.3) |
| Current qualification | | |
| ○ Fifth year student | | 91 (43.1) |
| ○ Sixth year student | | 120 (56.9) |
| University | | |
| ○ The University of Jordan | | 106 (50.2) |
| ○ Jordan University of Science and Technology | | 105 (49.8) |
| Any prior experience with artificial intelligence | | |
| ○ Yes | | 144 (68.2) |
| ○ No | | 67 (31.8) |
| Proficiency in using a computer and digital technologies | | |
| ○ Poor | | 10 (4.7) |
| ○ Fair | | 21 (10.0) |
| ○ Good | | 79 (37.4) |
| ○ Very good | | 61 (28.9) |
| ○ Excellent | | 40 (19.0) |
| Have you heard of ChatGPT? | | |
| ○ Yes | | 145 (68.7) |
| ○ No | | 43 (20.4) |
| ○ Not sure | | 23 (10.9) |

Students’ Perceived Benefits of Incorporating ChatGPT into Clinical Training

Table 2 describes the students’ perceived benefits of incorporating ChatGPT into clinical training. A significant percentage of participants (n= 139, 65.9%) believed that PharmD students could benefit from using ChatGPT. However, when it comes to ChatGPT’s ability to provide accurate clinical practice information, only 39.8% (n= 84) believed so. Over half of the respondents believed ChatGPT could expedite the process of identifying and managing treatment-related problems (n= 121, 57.3%). Moreover, 54.5% (n= 115) perceived ChatGPT as a tool that could readily identify and manage drug-drug interactions. Additionally, half of the participants (n= 106, 50.2%) trusted ChatGPT’s ability to identify and manage adverse drug reactions.

Table 2.

Students’ Perceived Benefits of ChatGPT Integration in Clinical Training (N= 211)

| Statement | Strongly Agreed/Agreed (%) | Neutral (%) | Strongly Disagreed/Disagreed (%) |
|---|---|---|---|
| PharmD students can benefit from using ChatGPT | 139 (65.9) | 49 (23.2) | 23 (10.9) |
| ChatGPT can provide accurate information related to clinical practice | 84 (39.8) | 80 (37.9) | 47 (22.3) |
| ChatGPT can assist in making treatment decisions for patients | 82 (38.9) | 66 (31.3) | 63 (29.9) |
| ChatGPT can save time in the process of identifying and managing treatment-related problems | 121 (57.3) | 51 (24.2) | 39 (18.5) |
| Drug-drug interactions can be easily identified and managed with the help of ChatGPT. | 115 (54.5) | 60 (28.4) | 36 (17.1) |
| ChatGPT can assist in patient education by providing accurate information about medications. | 99 (46.9) | 71 (33.6) | 41 (19.4) |
| ChatGPT can be used for medication reconciliation to ensure accuracy of patient medication lists. | 94 (44.5) | 68 (32.2) | 49 (23.2) |
| ChatGPT can help in determining appropriate dosage regimens for patients. | 69 (32.7) | 73 (34.6) | 69 (32.7) |
| ChatGPT can assist in identifying and managing adverse drug reactions. | 106 (50.2) | 65 (30.8) | 40 (19.0) |
| ChatGPT can assist in identifying wrong medications prescribed to the patient. | 82 (38.9) | 73 (34.6) | 56 (26.5) |
| ChatGPT can help in identifying not needed medications prescribed to the patient. | 77 (36.5) | 74 (35.1) | 60 (28.4) |
| ChatGPT can aid in identifying further medications needed for the patient’s treatment. | 75 (35.5) | 85 (40.3) | 51 (24.2) |
| ChatGPT can aid in developing pharmacological pharmaceutical care plans. | 92 (43.6) | 68 (32.2) | 51 (24.2) |
| ChatGPT can aid in developing non-pharmacological pharmaceutical care plans. | 102 (48.3) | 70 (33.2) | 39 (18.5) |
| ChatGPT can assist in therapeutic drug monitoring. | 97 (46.0) | 69 (32.7) | 45 (21.3) |
| ChatGPT can assist in clinical pharmacokinetic calculations. | 98 (46.4) | 64 (30.3) | 49 (23.2) |

When it came to patient education, 46.9% (n= 99) believed that ChatGPT could assist in that task. The platform’s potential was also recognized in medication reconciliation, with over two-fifths (n= 94, 44.5%) believing in its utility for such purposes. On the other hand, under a third of participants (n= 69, 32.7%) agreed or strongly agreed that ChatGPT can be of assistance in determining appropriate dosage regimens for patients. Regarding medication prescription, 38.9% (n= 82) believed ChatGPT could identify wrongly prescribed medications, 36.5% (n= 77) agreed/strongly agreed it could recognize unnecessary medications, and 35.5% (n= 75) believed it could pinpoint additional medications required for patients.

Regarding the development of pharmaceutical care plans, 43.6% (n= 92) and 48.3% (n= 102) of participants believed ChatGPT could aid in crafting pharmacological and non-pharmacological plans, respectively. Less than half of students (n= 97, 46%) agreed on ChatGPT’s potential in therapeutic drug monitoring, and a similar percentage (n= 98, 46.4%) believed in its efficacy in clinical pharmacokinetic calculations. Overall, while a significant proportion of students see the potential benefits of ChatGPT in clinical training, a noteworthy percentage remains neutral or skeptical about its capabilities.

Students’ Concerns About the Utilization of ChatGPT in Clinical Training

Table 3 illustrates the students’ concerns regarding the application of ChatGPT in clinical training. Nine areas of concern were identified. First and foremost, 61.1% of the respondents (n= 129) shared concerns on each of three aspects: the potential for over-reliance causing a deficiency in essential skills such as critical thinking, ChatGPT’s accuracy and reliability, and the potential pitfalls in ChatGPT’s contextual understanding, which could lead to inadequate responses. The digital divide’s implications for learning also stood out, with 58.8% (n= 124) apprehensive that disparities in technological accessibility might jeopardize equal learning opportunities.

Table 3.

Students’ Concerns for the Utilization of ChatGPT in Clinical Training (N= 211)

| Statement | Strongly agreed/Agreed (%) | Neutral (%) | Strongly disagreed/Disagreed (%) |
|---|---|---|---|
| Lack of personal interaction: ChatGPT may not be able to provide the same level of personal interaction and feedback that students may require in their training | 123 (58.3) | 52 (24.6) | 36 (17.1) |
| Dependence on technology: Students may become overly reliant on ChatGPT and may not develop the necessary critical thinking and problem-solving skills that are essential for the practice of pharmacy | 129 (61.1) | 48 (22.7) | 34 (16.1) |
| Accuracy and reliability: Although ChatGPT has advanced natural language processing capabilities, there is still a possibility of errors and inaccuracies in its responses | 129 (61.1) | 57 (27.0) | 25 (11.8) |
| Ethical concerns: The use of ChatGPT in pharmacy training may raise ethical concerns, such as issues related to patients’ privacy and confidentiality. | 108 (51.2) | 53 (25.1) | 50 (23.7) |
| Accessibility: Some students may not have access to the necessary technology or resources to effectively use ChatGPT in their training, which could create disparities in learning opportunities. | 124 (58.8) | 54 (25.6) | 33 (15.6) |
| Lack of contextual understanding: ChatGPT may not be able to understand the context of a particular situation or question, which could result in incorrect or inadequate responses. | 129 (61.1) | 49 (23.2) | 33 (15.6) |
| Limited scope: ChatGPT may not be able to cover all the necessary topics and information required for pharmacy practice, and may not be able to provide comprehensive training to students. | 117 (55.5) | 56 (26.5) | 38 (18.0) |
| Over-reliance on ChatGPT: There is a risk that students may rely too heavily on ChatGPT, and may neglect other important sources of learning and training. | 127 (60.2) | 50 (23.7) | 34 (16.1) |
| Bias in data: There is a possibility that ChatGPT may have bias in its training data, which could result in biased responses or recommendations. | 106 (50.2) | 64 (30.3) | 41 (19.4) |

Additionally, there was apprehension about a potential compromise in personal interactions, as a significant percentage of students (n= 123, 58.3%) felt that ChatGPT might not provide the degree of personal interaction and feedback crucial for their training. Over half of the students (n= 117, 55.5%) felt that ChatGPT might have a limited scope, potentially not covering all essential topics for pharmacy training. Ethical concerns also surfaced, with 51.2% of the students (n= 108) worried about potential breaches in patients’ privacy and confidentiality. Lastly, the integrity of ChatGPT’s training data was questioned, with 50.2% (n= 106) believing that bias in these data could lead to biased responses.

Students’ Adoption of ChatGPT in Clinical Training

Table 4 shows the students’ adoption of ChatGPT in clinical training. Almost half of the students (n= 98, 46.4%) did not use ChatGPT in their clinical training, with only a minority (n= 27, 12.8%) having used it frequently. When participants were asked about the likelihood of incorporating ChatGPT into their future clinical practice, over half (n= 112, 53.1%) indicated that they were somewhat likely or very likely to do so. In terms of recommending ChatGPT’s use for pharmacy students and preceptors, opinions varied: 63% (n= 133) would recommend it, 25.6% (n= 54) remained undecided, whereas 11.4% (n= 24) would not recommend it.

Table 4.

Students’ Adoption of ChatGPT in Clinical Training (n=211)

| Parameter | Frequency (%) |
|---|---|
| Current frequency of using ChatGPT in clinical training | |
| a) Never | 98 (46.4) |
| b) Rarely (once a month or less) | 47 (22.3) |
| c) Occasionally (2–3 times a month) | 39 (18.5) |
| d) Frequently (once a week or more) | 27 (12.8) |
| How likely are you to use ChatGPT in your future clinical practice? | |
| a) Not at all Likely | 15 (7.1) |
| b) Slightly Unlikely | 20 (9.5) |
| c) Neutral | 64 (30.3) |
| d) Somewhat Likely | 82 (38.9) |
| e) Very Likely | 30 (14.2) |
| Would you recommend the use of ChatGPT to pharmacy students and preceptors for clinical practice? | |
| a) Yes, definitely | 39 (18.5) |
| b) Yes, probably | 94 (44.5) |
| c) Undecided | 54 (25.6) |
| d) No, probably not | 20 (9.5) |
| e) No, definitely not | 4 (1.9) |

Table 5 provides insights into the students’ utilization of ChatGPT in clinical training. Among those who had ever used ChatGPT (n= 113), engagement with ChatGPT for various clinical tasks was noteworthy. Nearly two-thirds of these participants (n= 72/113, 64.6%) acknowledged employing ChatGPT to evaluate drug-drug interactions. A higher percentage (n= 82/113, 72.6%) reported employing ChatGPT to acquire information about medications and diseases, and a similar share to the first (n= 74/113, 65.5%) used it to aid in the identification and management of adverse drug reactions.

Table 5.

Students’ Experience with the Use of ChatGPT in Clinical Training (n=113)

| Have you ever used ChatGPT | Participants agreed, n (%) |
|---|---|
| To check for Drug-drug interactions? | 72 (64.6) |
| To obtain drug and disease information (for example: ask ChatGPT questions about particular conditions, treatments, medications, or lifestyle changes) | 82 (72.6) |
| For medication reconciliation | 66 (58.4) |
| To determine appropriate dosage regimens for patients | 67 (59.3) |
| To identify or manage adverse drug reactions | 74 (65.5) |
| To aid you in developing a pharmacological pharmaceutical care plan | 63 (55.8) |
| To aid you in developing a non-pharmacological pharmaceutical care plan (diet, lifestyle modifications)? | 80 (70.8) |
| To aid you in therapeutic drug monitoring | 66 (58.4) |
| To aid you in clinical pharmacokinetic calculations? | 64 (56.6) |

Furthermore, the utilization of ChatGPT extended to other critical clinical functions. Over half of the participants reported employing ChatGPT for medication reconciliation (n= 66/113, 58.4%) and for determining appropriate dosage regimens (n= 67/113, 59.3%). Moreover, 55.8% of the participants (n= 63/113) used ChatGPT to aid in the development of pharmacological pharmaceutical care plans. Notably, a higher proportion of respondents (n= 80/113, 70.8%) had used ChatGPT for the development of non-pharmacological pharmaceutical care plans. ChatGPT was also used to aid students in therapeutic drug monitoring (n= 66/113, 58.4%) and clinical pharmacokinetic calculations (n= 64/113, 56.6%), highlighting its potential in supporting complex clinical decision-making processes.

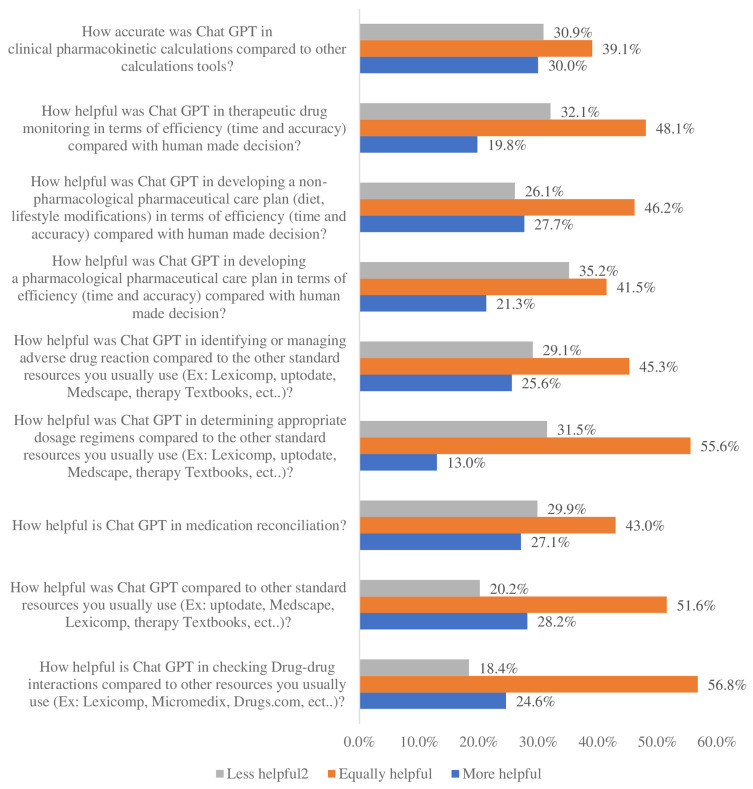

Figure 1 shows the results of Pharm-D students’ perceptions regarding their experience with ChatGPT across various facets of pharmacy training, while considering the efficiency and accuracy compared to established methods. In terms of developing pharmaceutical care plans, students indicated a higher level of perceived usefulness for non-pharmacological care plans, with 46.2% finding ChatGPT equally helpful and 27.7% finding it more helpful compared to human-made decisions. This contrasts with pharmacological care plans, where 41.5% reported equal helpfulness and 21.3% reported more helpfulness.

Figure 1.

Students’ perception about how they found ChatGPT when they used it in various pharmacy training aspects.

Notes: Percentages were calculated out of those who had used it as presented in Table 5.

For clinical pharmacokinetic calculations, approximately 39.1% of participants found ChatGPT to be “equally helpful”, while 30.9% considered it “more helpful” compared to other calculation tools. In contrast, the perceived usefulness of ChatGPT in determining appropriate dosage regimens presents a different trend. Only 13% of participants reported finding ChatGPT “more helpful”, while a larger percentage (55.6%) indicated that it was “equally helpful” compared to other standard resources. When considering therapeutic drug monitoring, a notable 48.1% of participants found ChatGPT to be “equally helpful”, 32.1% reported finding it “less helpful”, while 19.8% found it “more helpful”.

The results of Figure 1 also underscore the remarkable perceived usefulness of ChatGPT in the domain of checking Drug-Drug Interactions (DDI). This aspect stands out as one of the most positively received functionalities, with a substantial majority of participants expressing high levels of perceived helpfulness. Remarkably, over 80% of respondents indicated that ChatGPT’s utility in this domain was either “equally helpful” or “more helpful” compared to other resources typically utilized for assessing drug interactions.

When comparing previous experience in using ChatGPT with perceived benefit and concern scores (Table 6), previous users showed higher perceived benefit scores and lower perceived concern scores than non-users, but these differences were not statistically significant (p > 0.05 for both). However, both perceived benefit and concern scores differed significantly across levels of intended future use (p = 0.002 and p = 0.038, respectively). Additionally, those most likely to recommend ChatGPT (“Yes, definitely”) had the highest perceived benefit scores (median 3.8, p < 0.001), whereas those who would definitely not recommend it had the highest concern scores (median 4.9, p = 0.003). These associations suggest a link between users’ attitudes towards ChatGPT, their perceived benefits, and concerns, potentially influencing their inclination to use or recommend it in clinical practice.

Table 6.

Correlation Analysis of Participants’ Concerns and Benefits Scores with Prior Usage, Future Intent, and Recommendation for ChatGPT Implementation in Clinical Practice

| Category | Subcategory | Perceived Benefits Score** | P value | Perceived Concerns Score** | P value |
|---|---|---|---|---|---|
| Use of ChatGPT | Users | 3.3 (0.9) | 0.061# | 3.4 (1.1) | 0.367# |
| | Non-users | 3.2 (0.8) | | 3.6 (0.9) | |
| How likely are you to use ChatGPT in your future clinical practice? | Not at all likely | 3 (1.7) | 0.002* | 3 (1.6) | 0.038* |
| | Slightly unlikely | 3 (1.3) | | 4 (1.9) | |
| | Neutral | 3.2 (0.7) | | 3.7 (1.0) | |
| | Somewhat likely | 3.5 (0.9) | | 3.9 (1.1) | |
| | Very likely | 3.5 (1.8) | | 3.7 (1.0) | |
| Would you recommend the use of ChatGPT to pharmacy students and preceptors for clinical practice? | Yes, definitely | 3.8 (1.3) | < 0.001* | 3.7 (1.2) | 0.003* |
| | Yes, probably | 3.4 (0.9) | | 3.8 (1.0) | |
| | Undecided | 3.0 (0.7) | | 3.7 (1.1) | |
| | No, probably not | 2.9 (1.0) | | 4.2 (1.1) | |
| | No, definitely not | 2.7 (1.4) | | 4.9 (0.6) | |

Notes: #Using Mann–Whitney U-test, *Using Kruskal Wallis test. **Median (interquartile range). Note, Users are those who have used ChatGPT in their clinical training, while non-users are those who have never used ChatGPT in their clinical training.

Discussion

The implementation and application of the large language model ChatGPT is increasing rapidly in clinical training and education. It offers capabilities that, in some respects, exceed the limitations of humans, but in others it is surpassed by humans’ versatility, emotional intelligence, and ability to comprehend complex intersecting concepts.15

Results revealed that two-thirds of the participants believed that ChatGPT offers them benefits, especially in the identification of treatment-related problems, drug-drug interactions, and adverse drug reactions. Many other applications of ChatGPT have been studied, such as differential diagnosis,16 clinical decision-making,17 and clinical decision support.16 The perceived benefits of incorporating ChatGPT in education were echoed in a mixed-method study conducted in Ghana. Bonsu and Koduah investigated students’ perceptions and their willingness to use ChatGPT in education. Most participants expressed being comfortable utilizing ChatGPT, which guarantees a satisfying experience as they take it up in their academic careers. The students also agreed that the answers generated by artificial intelligence were accurate. The researchers recommended adopting these innovative technologies in higher education accompanied by regulatory measures concerning ethical challenges such as creativity, misuse, and copyright issues.17

The accuracy of the data provided by ChatGPT was questioned, and less than half of the participants believed it could produce accurate information. This reflects the concerns regarding the accuracy of this AI model, which is not surprising since ChatGPT is trained on certain databases and follows instructions rather than engaging in interactions and investigations. The reported “hallucinations”, which are content generated by the model that is not based on reality, can restrict its usefulness in aspects where accuracy is required.18

These concerns were also reflected among medical students in Saudi Arabia. The students acknowledged many limitations of ChatGPT, despite its numerous benefits, such as lack of trust in the produced results, obscurity of references, and inability to conduct analysis that requires critical thinking. The participants emphasized the need for policies and regulations to detect and manage any academic misconduct.19 Another study, conducted in Korea, investigated the perceptions of ChatGPT as a feedback tool in medical school. The majority of students agreed that it was useful in providing answers and summarizing information, but it did not provide correct evidence.20 In Saudi Arabia, a large survey was conducted among 1057 healthcare workers to assess their perceptions of the advantages of ChatGPT and intentions for future utilization. Two-thirds of the participants believed that it could positively contribute to healthcare provision, but that concerns regarding accuracy and legal issues hinder its current use and that its use should be accompanied by human supervision.21

Kusunose et al examined the ability of ChatGPT to accurately answer questions related to the management of hypertension. ChatGPT exhibited an overall accuracy rate of 64.5%, with higher accuracy for clinical questions than for limited evidence-based questions (80% and 36%, respectively).22 Another study, conducted in the US, revealed that ChatGPT responded appropriately to 21 of 25 questions (84%) related to cardiovascular disease prevention recommendations.23 A recent study by Johnson et al explored the accuracy and reliability of AI-generated responses to 284 medical questions developed by thirty-three physicians across 17 specialties. Accuracy was rated on a 6-point Likert scale (from 1, completely incorrect, to 6, completely correct). The median accuracy score for all questions was 5.5, between almost completely and completely correct.24

Many participants agreed that ChatGPT can be helpful in providing patient education. Communication with patients seems to be an area where ChatGPT can be very helpful.25 However, the comprehension of online information by the patients may be an impediment and could create disparities among patients with different education levels.26

The students’ views on the utilization of ChatGPT in their clinical training, and on the threat it may pose to equal opportunities, are consistent with the ongoing debate on its role in education and the learning process. Many educators consider it a threat to the acquisition of knowledge and problem-solving skills and a factor that could increase inequality in education.27

Ethical doubts that the participants expressed regarding the patient’s privacy are a legitimate concern. These echo a major limitation of ChatGPT, which is vulnerability to cyberattacks and the spread of information.28,29 If ChatGPT is to be used in clinical practice, it should guarantee the main ethical principles such as beneficence, justice, nonmaleficence, privacy, and responsibility. Maintaining these principles, especially privacy, is challenging, and the protection of patient data is essential to prevent misuse of information.30

The tasks that ChatGPT can be engaged in are diverse. The participants used ChatGPT in many clinical aspects, but the extent of use varied from one domain to another. Only about one-third thought ChatGPT could help with more sophisticated tasks, such as identifying wrongly prescribed or unnecessary medications and determining dosage regimens. Variation in the performance of this AI model has been reported: it was inferior in questions related to differential diagnosis and clinical management compared to general medical knowledge.31

Most participants agreed on the usefulness of ChatGPT for non-pharmacological care plans. This is probably due to the comprehensive information that ChatGPT can provide on different aspects, especially if given sufficient parameters. Galido et al investigated the applications of ChatGPT in the management of schizophrenia. The findings of the study showed that the AI model provided comprehensive nonpharmacologic treatment approaches, vocational and social skills training, and supportive services.32

Among the participants who used ChatGPT, the most positively received functionality, compared with other sources typically used for the same purpose, was the assessment of drug-drug interactions. However, Morath et al demonstrated that in real-world scenarios, ChatGPT gave wrong or partly wrong answers to most of the 50 drug-related questions.33 Another study, by Hsu et al, evaluated ChatGPT’s performance on drug-herb interactions in traditional Chinese medicine. They concluded that the responses were inappropriate and lacked the information needed to provide patients with clear recommendations.34

The students’ positive attitude toward using ChatGPT implies that it will play a major role, with its pros and cons, in their academic activities. Universities and institutions should therefore design strategies, policies, and specialized centers to regulate the use of this source of information and to familiarize students with its limitations and the ethical dilemmas they might encounter. On the other hand, AI sources should be developed, continuously improved, and tested for their use in the clinical field. We should bear in mind that different AI chatbots may differ in the type of response and information provided. Consequently, large language models should be improved to provide better accuracy and relevance. This can be achieved by iterative refinement, using examples to mimic human-like responses, and expanding the training data to suit medical purposes and scenarios.

This study is the first in Jordan to evaluate the perceptions and attitudes of PharmD students towards the incorporation of ChatGPT in their clinical practice. However, one limitation of the study is that it did not examine the effect of ChatGPT utilization by students on the accuracy of their clinical decisions or patient outcomes. Also, data collection relied on convenience and snowball sampling techniques, which may introduce selection bias. Moreover, utilizing social media for participant recruitment prevents the calculation of a survey response rate.

Conclusion

Our study examining Pharm-D students’ perceptions, concerns, and practices regarding ChatGPT integration in pharmacy practice training revealed diverse perspectives. Participants acknowledged potential benefits in expediting treatment-related problem identification and drug interactions but expressed skepticism about its accuracy in clinical information provision. Significant concerns included over-reliance, digital divide implications, compromised personal interaction, and ethical considerations. While nearly half had not used ChatGPT, many expressed future intent. Recommendations varied, with prior users indicating higher benefits and lower concerns, suggesting potential links to adoption and recommendations. The study emphasizes nuanced perspectives and highlights the need for careful consideration in effectively integrating ChatGPT into pharmacy practice.

Acknowledgments

The authors extend their appreciation to the Deanship of Scientific Research at Northern Border University, Arar, KSA for funding this research work through the project number “NBU-FFR-2023-1069”.

Author Contributions

All authors made a significant contribution to the work reported, whether that is in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; gave final approval of the version to be published; have agreed on the journal to which the article has been submitted; and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

- 1. Huang X, Estau D, Liu X, Yu Y, Qin J, Li Z. Evaluating the performance of ChatGPT in clinical pharmacy: a comparative study of ChatGPT and clinical pharmacists. Br J Clin Pharmacol. 2023;2023:1.

- 2. Wang Y-M, Shen H-W, Chen T-J. Performance of ChatGPT on the Pharmacist Licensing Examination in Taiwan. J Chin Med Assoc. 2023;10:1097.

- 3. Zong H, Li J, Wu E, Wu R, Lu J, Shen B. Performance of ChatGPT on Chinese national medical licensing examinations: a five-year examination evaluation study for physicians, pharmacists and nurses. medRxiv. 2023;2023:23292415.

- 4. Blanco-Gonzalez A, Cabezon A, Seco-Gonzalez A, et al. The role of AI in drug discovery: challenges, opportunities, and strategies. Pharmaceuticals. 2023;16(6):891. doi: 10.3390/ph16060891

- 5. Lecler A, Duron L, Soyer P. Revolutionizing radiology with GPT-based models: current applications, future possibilities and limitations of ChatGPT. Diagn Interventional Imaging. 2023;104(6):269–274. doi: 10.1016/j.diii.2023.02.003

- 6. Gala D, Makaryus AN. The utility of language models in cardiology: a narrative review of the benefits and concerns of ChatGPT-4. Int J Environ Res Public Health. 2023;20(15):6438. doi: 10.3390/ijerph20156438

- 7. Meo SA, Al-Khlaiwi T, AbuKhalaf AA, Meo AS, Klonoff DC. The scientific knowledge of Bard and ChatGPT in endocrinology, diabetes, and diabetes technology: multiple-choice questions examination-based performance. J Diabetes Sci Technol. 2023;19322968231203987. doi: 10.1177/19322968231203987

- 8. Grünebaum A, Chervenak J, Pollet SL, Katz A, Chervenak FA. The exciting potential for ChatGPT in obstetrics and gynecology. Am J Clin Exp Obstet Gynecol. 2023;228(6):696–705. doi: 10.1016/j.ajog.2023.03.009

- 9. Liu S, Wright AP, Patterson BL, et al. Using AI-generated suggestions from ChatGPT to optimize clinical decision support. J Am Med Inform Assoc. 2023;30(7):1237–1245. doi: 10.1093/jamia/ocad072

- 10. Zhu Y, Han D, Chen S, Zeng F, Wang C. How can ChatGPT benefit pharmacy: a case report on review writing; 2023.

- 11. Al-Ashwal FY, Zawiah M, Gharaibeh L, Abu-Farha R, Bitar AN. Evaluating the sensitivity, specificity, and accuracy of ChatGPT-3.5, ChatGPT-4, Bing AI, and Bard against conventional drug-drug interactions clinical tools. Drug Healthc Patient Saf. 2023;15:137–147. doi: 10.2147/DHPS.S425858

- 12. Hammour KA, Alhamad H, Al-Ashwal FY, Halboup A, Farha RA, Hammour AA. ChatGPT in pharmacy practice: a cross-sectional exploration of Jordanian pharmacists’ perception, practice, and concerns. J Pharm Policy Pract. 2023;16.

- 13. Abu-Farha R, Fino L, Al-Ashwal FY, et al. Evaluation of community pharmacists’ perceptions and willingness to integrate ChatGPT into their pharmacy practice: a study from Jordan. J Am Pharm Assoc. 2023;63:1761–1767.e2. doi: 10.1016/j.japh.2023.08.020

- 14. Al-Dujaili Z, Omari S, Pillai J, Al Faraj A. Assessing the accuracy and consistency of ChatGPT in clinical pharmacy management: a preliminary analysis with clinical pharmacy experts worldwide. Res Social Administrative Pharm. 2023;19(12):1590–1594. doi: 10.1016/j.sapharm.2023.08.012

- 15. Korteling JEH, van de Boer-Visschedijk GC, Blankendaal RAM, Boonekamp RC, Eikelboom AR. Human- versus Artificial Intelligence. Front Artif Intell. 2021;4:622364. doi: 10.3389/frai.2021.622364

- 16. Liu S, Wright AP, Patterson BL, et al. Assessing the value of ChatGPT for clinical decision support optimization. medRxiv. 2023;2023:1.

- 17. Bonsu EM, Baffour-Koduah D. From the consumers’ side: determining students’ perception and intention to use ChatGPT in Ghanaian higher education. J Educ Soc Multicult. 2023;4(1):1–29. doi: 10.2478/jesm-2023-0001

- 18. Shen Y, Heacock L, Elias J, et al. ChatGPT and Other Large Language Models Are Double-edged Swords. Radiology. 2023;307(2):e230163. doi: 10.1148/radiol.230163

- 19. Abouammoh N, Alhasan K, Raina R, et al. Exploring perceptions and experiences of ChatGPT in medical education: a qualitative study among medical College faculty and students in Saudi Arabia. medRxiv. 2023;2023:23292624.

- 20. Park J. Medical students’ patterns of using ChatGPT as a feedback tool and perceptions of ChatGPT in a Leadership and Communication course in Korea: a cross-sectional study. J Educ Evaluation Health Prof. 2023;20:29. doi: 10.3352/jeehp.2023.20.29

- 21. Temsah M-H, Aljamaan F, Malki KH, et al. ChatGPT and the future of digital health: a study on healthcare workers’ perceptions and expectations. Healthcare. 2023;11(13):1812. doi: 10.3390/healthcare11131812

- 22. Kusunose K, Kashima S, Sata M. Evaluation of the accuracy of ChatGPT in answering clinical questions on the Japanese society of hypertension guidelines. Circ J. 2023;87(7):1030–1033. doi: 10.1253/circj.CJ-23-0308

- 23. Sarraju A, Bruemmer D, Van Iterson E, Cho L, Rodriguez F, Laffin L. Appropriateness of cardiovascular disease prevention recommendations obtained from a popular online chat-based artificial intelligence model. JAMA. 2023;329(10):842–844. doi: 10.1001/jama.2023.1044

- 24. Johnson D, Goodman R, Patrinely J, et al. Assessing the Accuracy and Reliability of AI-generated medical responses: an evaluation of the Chat-GPT model. Res Sq. 2023;2023:1.

- 25. King MR. The future of AI in medicine: a perspective from a Chatbot. Ann Biomed Eng. 2023;51(2):291–295. doi: 10.1007/s10439-022-03121-w

- 26. Rodriguez F, Ngo S, Baird G, Balla S, Miles R, Garg M. Readability of online patient educational materials for coronary artery calcium scans and implications for health disparities. J Am Heart Assoc. 2020;9(18):e017372. doi: 10.1161/JAHA.120.017372

- 27. Gill SS, Xu M, Patros P, et al. Transformative effects of ChatGPT on modern education: emerging Era of AI Chatbots. Inter Things Cyber-Phys Sys. 2024;4:19–23. doi: 10.1016/j.iotcps.2023.06.002

- 28. Deng J, Lin Y. The benefits and challenges of ChatGPT: an overview. Front Compu Intell Sys. 2023;2(2):81–83. doi: 10.54097/fcis.v2i2.4465

- 29. Sallam M, Salim NA, Al-Tammemi AB, et al. ChatGPT output regarding compulsory vaccination and COVID-19 vaccine conspiracy: a descriptive study at the outset of a paradigm shift in online search for information. Cureus. 2023;15:2.

- 30. Cascella M, Montomoli J, Bellini V, Bignami E. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J Med Syst. 2023;47(1):33. doi: 10.1007/s10916-023-01925-4

- 31. Rao A, Pang M, Kim J, et al. Assessing the utility of ChatGPT throughout the entire clinical workflow: development and usability study. J Med Internet Res. 2023;25:e48659. doi: 10.2196/48659

- 32. Galido PV, Butala S, Chakerian M, Agustines D. A case study demonstrating applications of ChatGPT in the clinical management of treatment-resistant schizophrenia. Cureus. 2023;15(4):e38166. doi: 10.7759/cureus.38166

- 33. Morath B, Chiriac U, Jaszkowski E, et al. Performance and risks of ChatGPT used in drug information: an exploratory real-world analysis. Eur J Hosp Pharm;2023. 3750. doi: 10.1136/ejhpharm-2023-003750

- 34. Hsu H-Y, Hsu K-C, Hou S-Y, Wu C-L, Hsieh Y-W, Cheng Y-D. Examining real-world medication consultations and drug-herb interactions: ChatGPT performance evaluation. JMIR Med Educ. 2023;9:e48433. doi: 10.2196/48433