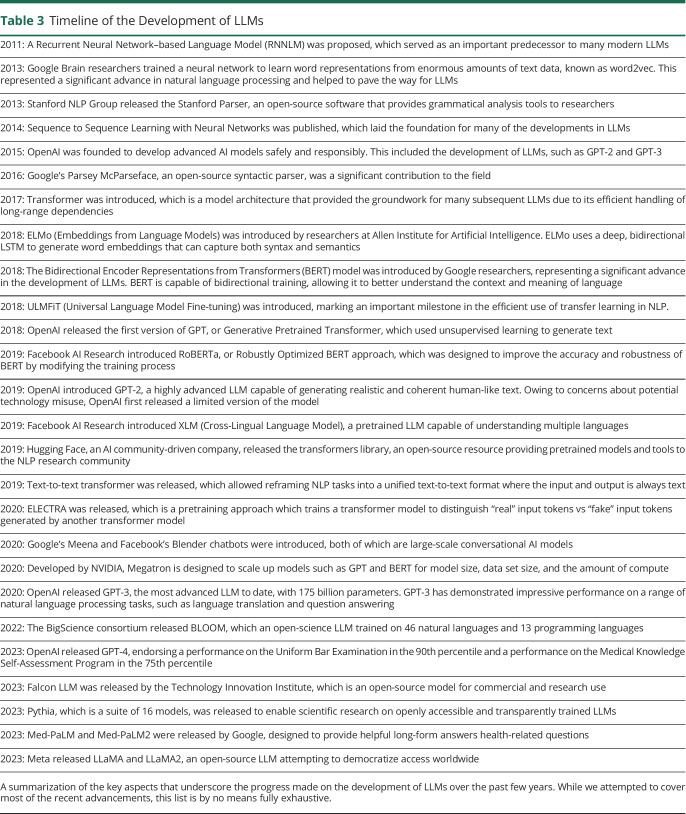

Table 3. Timeline of the Development of LLMs

| Year | Milestone |
| --- | --- |
| 2011 | A recurrent neural network-based language model (RNNLM) was proposed. It served as an important predecessor to many modern LLMs. |
| 2013 | Google Brain researchers introduced word2vec, a neural network approach for learning word representations from enormous amounts of text data. This was a significant advance in natural language processing (NLP) and helped pave the way for LLMs. |
| 2013 | The Stanford NLP Group released the Stanford Parser, open-source software that provides grammatical analysis tools to researchers. |
| 2014 | *Sequence to Sequence Learning with Neural Networks* was published, laying the foundation for many later developments in LLMs. |
| 2015 | OpenAI was founded with the aim of developing advanced AI models safely and responsibly. Its later work included LLMs such as GPT-2 and GPT-3. |
| 2016 | Google released Parsey McParseface, an open-source syntactic parser that was a significant contribution to the field. |
| 2017 | The Transformer, a model architecture built on self-attention, was introduced. Because it handles long-range dependencies efficiently, it provided the groundwork for many subsequent LLMs. |
| 2018 | Researchers at the Allen Institute for Artificial Intelligence introduced ELMo (Embeddings from Language Models). ELMo uses a deep, bidirectional LSTM to generate word embeddings that capture both syntax and semantics. |
| 2018 | Google researchers introduced Bidirectional Encoder Representations from Transformers (BERT), a significant advance in the development of LLMs. BERT is trained bidirectionally, which allows it to better understand the context and meaning of language. |
| 2018 | ULMFiT (Universal Language Model Fine-tuning) was introduced, marking an important milestone in the efficient use of transfer learning in NLP. |
| 2018 | OpenAI released the first version of GPT (Generative Pretrained Transformer). It used unsupervised pretraining on unlabeled text followed by task-specific fine-tuning. |
| 2019 | Facebook AI Research introduced RoBERTa (Robustly Optimized BERT Pretraining Approach). It modified BERT's training process to improve the model's accuracy and robustness. |
| 2019 | OpenAI introduced GPT-2, an LLM capable of generating realistic, coherent, human-like text. Owing to concerns about potential misuse, OpenAI initially released only a limited version of the model. |
| 2019 | Facebook AI Research introduced XLM (Cross-lingual Language Model), a pretrained language model capable of understanding multiple languages. |
| 2019 | Hugging Face, a community-driven AI company, released the Transformers library. It is an open-source resource that provides pretrained models and tools to the NLP research community. |
| 2019 | Google researchers introduced the Text-to-Text Transfer Transformer (T5). It reframes NLP tasks into a unified text-to-text format in which both the input and the output are always text. |
| 2020 | ELECTRA was released. This pretraining approach trains a transformer model to distinguish “real” input tokens from “fake” replacement tokens generated by another transformer model. |
| 2020 | Google's Meena and Facebook's Blender were introduced; both are large-scale conversational AI models (chatbots). |
| 2020 | NVIDIA developed Megatron. It is designed to scale models such as GPT and BERT in model size, data set size, and amount of compute. |
| 2020 | OpenAI released GPT-3, which has 175 billion parameters and was the most advanced LLM at the time. GPT-3 demonstrated impressive performance on a range of NLP tasks, such as language translation and question answering. |
| 2022 | The BigScience consortium released BLOOM, an open-science LLM trained on 46 natural languages and 13 programming languages. |
| 2023 | OpenAI released GPT-4 and reported performance in the 90th percentile on the Uniform Bar Examination. It also reported a score of 75% on the Medical Knowledge Self-Assessment Program. |
| 2023 | The Technology Innovation Institute released Falcon LLM, an open-source model for commercial and research use. |
| 2023 | Pythia was released to enable scientific research on openly accessible and transparently trained LLMs. It is a suite of 16 models. |
| 2023 | Google released Med-PaLM and Med-PaLM 2, which are designed to provide helpful long-form answers to health-related questions. |
| 2023 | Meta released LLaMA and Llama 2, open-source LLMs intended to democratize access to LLMs worldwide. |

This table summarizes key milestones that underscore the progress made in developing LLMs over the past few years. We attempted to cover most recent advancements, but the list is not exhaustive.