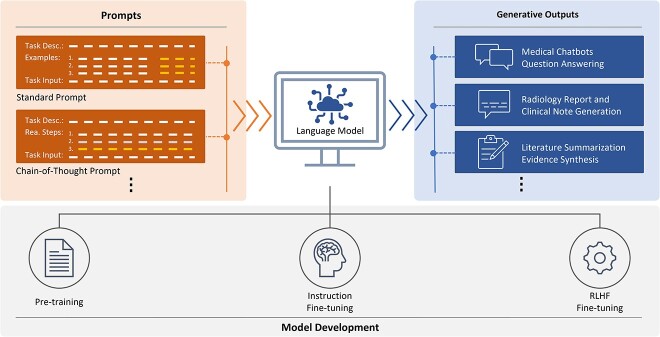

Figure 1.

The paradigm of LLMs. Pre-training: LLMs are trained on a large-scale corpus with an autoregressive language modeling objective. Instruction Fine-tuning: the pre-trained LLMs are fine-tuned with supervised learning on a dataset of human-written demonstrations of the desired output behavior for given prompts. RLHF Fine-tuning: a reward model is trained on collected comparison data, and the supervised model is then further fine-tuned against the reward model using a reinforcement learning algorithm. Prompts: the instructions and/or example text added to guide LLMs toward the expected outputs. Generative outputs: the outputs produced by the LLMs in response to the users’ prompts and inputs.
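As a rough illustration of the reward-model step, the sketch below computes a pairwise comparison loss for one pair of responses. The caption does not state which loss is used; the logistic (Bradley-Terry style) form here is an assumption commonly seen in RLHF work, and the function name `pairwise_reward_loss` and its scalar inputs are hypothetical.

```python
import math


def pairwise_reward_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Loss for one human comparison: -log(sigmoid(r_chosen - r_rejected)).

    Assumption: the reward model outputs a scalar score per response, and
    annotators marked one response as preferred ("chosen") over the other.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals softplus(-m); this form avoids overflow
    # for large positive or negative margins.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


if __name__ == "__main__":
    # The loss is small when the preferred response scores higher,
    # and large when the ranking is inverted.
    print(pairwise_reward_loss(2.0, -1.0))
    print(pairwise_reward_loss(-1.0, 2.0))
```

Minimizing this quantity over the comparison data pushes the reward model to score preferred responses above rejected ones; the reinforcement learning stage then optimizes the supervised model against those scores.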