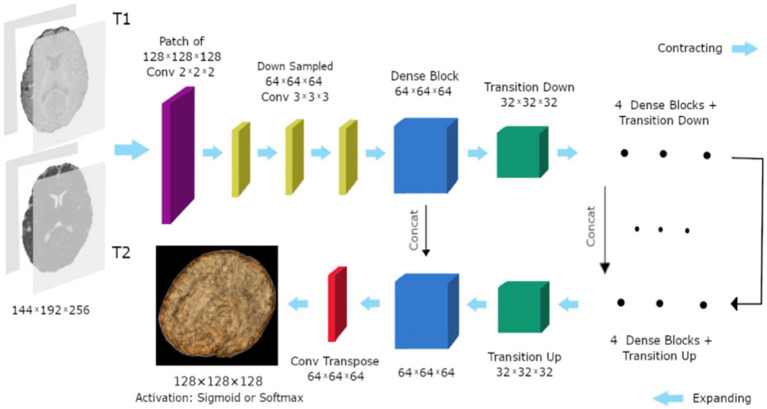

Figure 9.

The study’s 3D FC-DenseNet architecture uses a 2 × 2 × 2 convolution with stride 2 (purple) in the first layer to downsample the input patch from 128 × 128 × 128 to 64 × 64 × 64. Before the activation layer, a 2 × 2 × 2 transposed convolution with stride 2 (red) upsamples the patch from 64 × 64 × 64 back to 128 × 128 × 128. This deep architecture allowed us to overcome memory limitations imposed by large input patches, retain a wide field of view, and add five skip connections that improve the flow of local and global feature information. Reprinted with permission from (34), licensed under CC BY 4.0.
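The resampling in the caption follows from the standard output-size formulas for strided and transposed convolutions. The sketch below is illustrative only and is not the authors' code. It assumes zero padding, dilation 1, and no output padding, which the caption does not state. The helper names `conv_out` and `conv_transpose_out` are hypothetical. It checks that a 2 × 2 × 2 kernel with stride 2 maps 128 to 64 and back to 128 along each axis, and that this cuts the voxel count by a factor of 8.

```python
def conv_out(n, kernel=2, stride=2, padding=0):
    """Output length along one axis after a strided convolution (dilation 1 assumed)."""
    return (n + 2 * padding - kernel) // stride + 1


def conv_transpose_out(n, kernel=2, stride=2, padding=0, output_padding=0):
    """Output length along one axis after a transposed convolution (dilation 1 assumed)."""
    return (n - 1) * stride - 2 * padding + kernel + output_padding


def voxels(shape):
    """Total number of voxels in a 3D patch."""
    x, y, z = shape
    return x * y * z


# Input patch size taken from the caption; padding and dilation are assumptions.
patch = (128, 128, 128)
down = tuple(conv_out(d) for d in patch)            # first-layer downsampling
up = tuple(conv_transpose_out(d) for d in down)     # upsampling before the activation layer
reduction = voxels(patch) // voxels(down)           # how many times fewer voxels after downsampling

print(down, up, reduction)
```

Under these assumptions the script prints `(64, 64, 64) (128, 128, 128) 8`. The eightfold reduction in voxels inside the network body is one way to read the caption's point about working around memory limits with large input patches.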