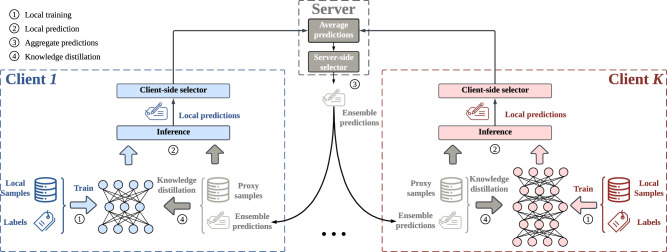

Fig. 1. The overall framework of Selective-FD.

Federated distillation involves four iterative steps. First, each client trains a personalized model on its local private data. Second, each client uses its local model to predict labels for the proxy samples. Third, the server aggregates these local predictions and returns the ensemble predictions to the clients. Fourth, each client updates its local model by knowledge distillation on the ensemble predictions. Throughout training, the client-side selectors and the server-side selector aim to filter out misleading and ambiguous knowledge from the local predictions. Some icons in this figure are from icons8.com.
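
The prediction, aggregation, and selection steps can be illustrated with a minimal sketch. The selection rules used here are assumptions made for illustration only: the caption does not specify how the selectors work, so a confidence threshold stands in for the client-side selector and an entropy threshold for the server-side selector. All function names, thresholds, and sample values are hypothetical. Local training is omitted, and the fourth step is reduced to the loss a client would minimize.

```python
import math

def client_select(probs, min_confidence=0.6):
    """Hypothetical client-side selector: upload a prediction only if the
    local model is confident about it (assumed rule, not from the source)."""
    return probs if max(probs) >= min_confidence else None

def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def server_aggregate(uploaded, max_entropy=0.5):
    """Average the uploaded predictions for one proxy sample, then apply a
    hypothetical server-side selector that drops ambiguous ensembles."""
    kept = [p for p in uploaded if p is not None]
    if not kept:
        return None  # no client uploaded a prediction for this sample
    n_classes = len(kept[0])
    ensemble = [sum(p[c] for p in kept) / len(kept) for c in range(n_classes)]
    return ensemble if entropy(ensemble) <= max_entropy else None

def distillation_loss(ensemble, local_probs, eps=1e-12):
    """Cross-entropy between the ensemble soft label and a local prediction,
    i.e. the quantity a client would minimize in the fourth step."""
    return -sum(t * math.log(max(q, eps))
                for t, q in zip(ensemble, local_probs) if t > 0)

# Step 2: soft predictions of three clients on two proxy samples (made-up values).
local_predictions = {
    "sample_a": [[0.9, 0.1], [0.8, 0.2], [0.5, 0.5]],
    "sample_b": [[0.9, 0.1], [0.1, 0.9], [0.55, 0.45]],
}

# Step 3: client-side filtering, then server-side aggregation and filtering.
ensemble_predictions = {}
for sample_id, preds in local_predictions.items():
    uploaded = [client_select(p) for p in preds]
    ensemble_predictions[sample_id] = server_aggregate(uploaded)
```

With these made-up values, the third client withholds both of its low-confidence predictions. The remaining two clients agree on `sample_a`, so its ensemble prediction is kept, while their conflicting predictions on `sample_b` average to a uniform distribution that the server-side rule discards as ambiguous.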