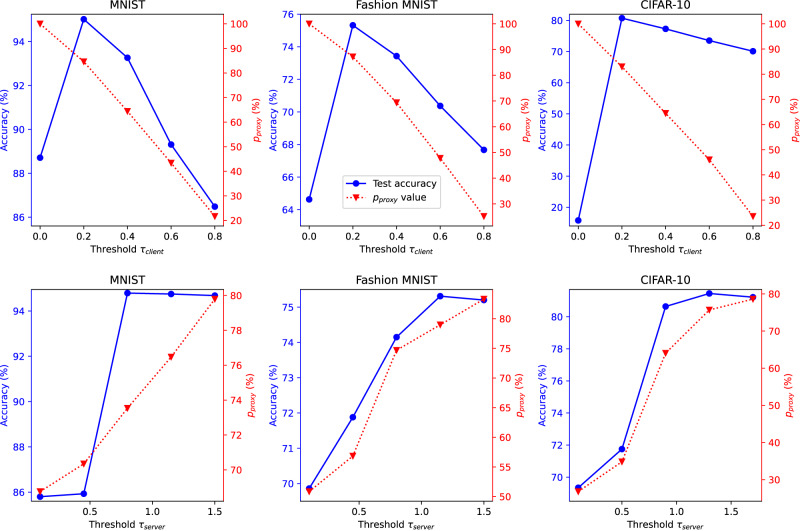

Fig. 6. Test accuracy and percentage $p_{\text{proxy}}$ under (top) different values of $\tau_{\text{client}}$ and (bottom) different values of $\tau_{\text{server}}$.

We denote the percentage of proxy samples selected for knowledge distillation as $p_{\text{proxy}}$. When $\tau_{\text{client}}$ is too large or $\tau_{\text{server}}$ is too small, the selectors filter out most of the proxy samples, which leads to a small batch size and increased training variance. Conversely, when $\tau_{\text{client}}$ is too small, the local outputs may contain many incorrect predictions, which reduces the effectiveness of knowledge distillation. Similarly, when $\tau_{\text{server}}$ is too large, the ensemble predictions may exhibit high entropy, indicating ambiguous knowledge that could degrade local model training. These empirical results align with the analysis in Remark 1.
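The interplay between the two thresholds and $p_{\text{proxy}}$ can be illustrated with a minimal sketch. It is not the implementation used in the experiments. It assumes that the client-side selector assigns each proxy sample a scalar score and keeps samples whose score is at least $\tau_{\text{client}}$, and that the server-side selector keeps samples whose ensemble prediction has Shannon entropy of at most $\tau_{\text{server}}$. The function names, the form of the client score, and the toy data are all hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a single probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_proxy_samples(client_scores, ensemble_probs, tau_client, tau_server):
    """Return the indices of proxy samples that pass both selectors.

    client_scores[i]  : hypothetical client-side score for sample i
                        (assumption: higher means more reliable)
    ensemble_probs[i] : ensemble prediction for sample i (probability vector)
    """
    kept = []
    for i, (score, probs) in enumerate(zip(client_scores, ensemble_probs)):
        passes_client = score >= tau_client            # assumed client-side rule
        passes_server = entropy(probs) <= tau_server   # assumed server-side rule
        if passes_client and passes_server:
            kept.append(i)
    return kept


def p_proxy(kept, num_samples):
    """Percentage of proxy samples retained for knowledge distillation."""
    return 100.0 * len(kept) / num_samples


# Toy data (hypothetical): four proxy samples, three classes.
client_scores = [0.95, 0.60, 0.85, 0.30]
ensemble_probs = [
    [0.90, 0.05, 0.05],  # confident ensemble prediction, low entropy
    [0.40, 0.30, 0.30],  # ambiguous
    [0.34, 0.33, 0.33],  # close to uniform, entropy near ln(3)
    [0.80, 0.10, 0.10],  # fairly confident
]

moderate = select_proxy_samples(client_scores, ensemble_probs, 0.5, 0.7)
strict = select_proxy_samples(client_scores, ensemble_probs, 0.99, 0.7)
loose = select_proxy_samples(client_scores, ensemble_probs, 0.2, 1.2)
```

In this toy setting, the strict configuration (very large $\tau_{\text{client}}$) retains no samples, while the loose configuration (small $\tau_{\text{client}}$, large $\tau_{\text{server}}$) retains all of them, including the near-uniform ensemble predictions that carry little usable knowledge.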