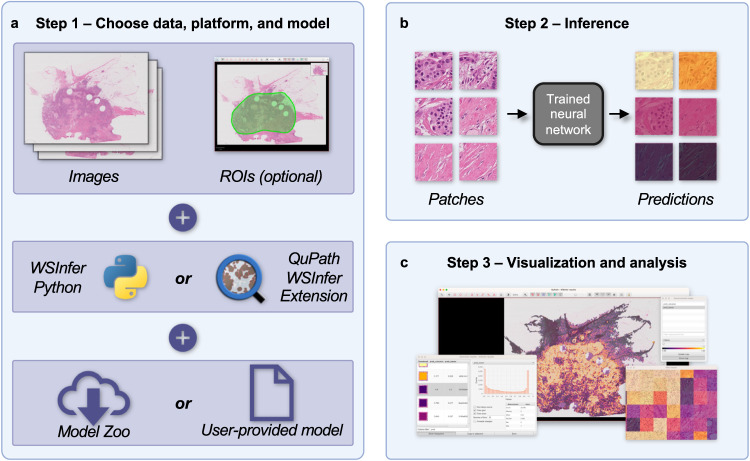

Fig. 1. WSInfer workflow.

The WSInfer ecosystem streamlines the deployment of trained deep neural networks on whole slide images (WSIs) in three steps. a In Step 1, users select their WSIs, the platform for model inference, and a pretrained model. When using the WSInfer Python Runtime, the input dataset is a directory containing WSI files. When using the WSInfer QuPath extension, the input is the image currently open in QuPath, and users can optionally designate a region of interest for model inference. The pretrained model can be selected from the WSInfer Model Zoo, or users can provide their own model in TorchScript format. b In Step 2, WSInfer performs a series of processing steps, including the computation of patch coordinates at the patch size and spacing prescribed by the model. Image patches are read directly from the WSI and passed to the patch classification model. The model outputs are aggregated and saved as CSV and GeoJSON files. c In Step 3, model outputs can be visualized and analyzed in QuPath or other software. This example shows breast tumor patch classification on a slide from The Cancer Genome Atlas (TCGA).
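To make Step 2 more concrete, the sketch below shows one way to turn a model's prescribed patch size and spacing into level-0 patch coordinates, and how per-patch outputs could be written as CSV rows and GeoJSON polygons. It is a minimal illustration under stated assumptions, not WSInfer's implementation: the function names `compute_patch_grid`, `to_csv`, and `to_geojson`, the `prob_<class>` column names, the non-overlapping grid, the dropping of partial patches at the slide edge, and the absence of any tissue detection are all hypothetical choices made for this example. Spacing is expressed in micrometers per pixel (MPP).

```python
import csv
import io
from typing import Dict, List, Sequence, Tuple

# Hypothetical patch record: (minx, miny, width, height) in level-0 pixels.
Patch = Tuple[int, int, int, int]


def compute_patch_grid(slide_width: int, slide_height: int, slide_mpp: float,
                       patch_size_px: int, patch_mpp: float) -> List[Patch]:
    """Tile a slide into non-overlapping patches at the model's spacing.

    A patch of `patch_size_px` pixels at `patch_mpp` um/px spans
    patch_size_px * patch_mpp micrometers, which equals this many
    level-0 pixels on a slide scanned at `slide_mpp` um/px.
    """
    size0 = round(patch_size_px * patch_mpp / slide_mpp)
    if size0 <= 0:
        raise ValueError("patch size at level 0 must be positive")
    patches: List[Patch] = []
    # Assumption: keep only patches that lie entirely inside the slide.
    for y in range(0, slide_height - size0 + 1, size0):
        for x in range(0, slide_width - size0 + 1, size0):
            patches.append((x, y, size0, size0))
    return patches


def to_csv(patches: Sequence[Patch], probs: Sequence[Sequence[float]],
           class_names: Sequence[str]) -> str:
    """Write one CSV row per patch: coordinates followed by class probabilities."""
    if len(patches) != len(probs):
        raise ValueError("need exactly one probability vector per patch")
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["minx", "miny", "width", "height",
                     *[f"prob_{c}" for c in class_names]])
    for (x, y, w, h), p in zip(patches, probs):
        writer.writerow([x, y, w, h, *p])
    return buf.getvalue()


def to_geojson(patches: Sequence[Patch], probs: Sequence[Sequence[float]],
               class_names: Sequence[str]) -> Dict:
    """Build a GeoJSON FeatureCollection with one square polygon per patch."""
    if len(patches) != len(probs):
        raise ValueError("need exactly one probability vector per patch")
    features = []
    for (x, y, w, h), p in zip(patches, probs):
        # GeoJSON polygon rings are closed: the first point is repeated last.
        ring = [[x, y], [x + w, y], [x + w, y + h], [x, y + h], [x, y]]
        features.append({
            "type": "Feature",
            "geometry": {"type": "Polygon", "coordinates": [ring]},
            "properties": {f"prob_{c}": float(v) for c, v in zip(class_names, p)},
        })
    return {"type": "FeatureCollection", "features": features}
```

For example, a 2000 × 1500 pixel slide scanned at 0.25 MPP and a model that expects 350 pixel patches at 0.5 MPP give a level-0 patch size of 700 pixels, so `compute_patch_grid(2000, 1500, 0.25, 350, 0.5)` returns four patches. The dictionary returned by `to_geojson` can be serialized with `json.dump`. Computing coordinates in level-0 pixels keeps the output independent of which pyramid level is later used to read the patches.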