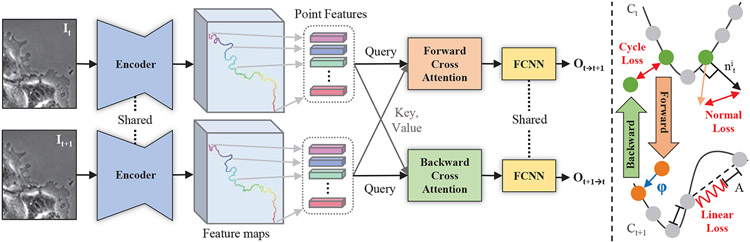

Figure 2. Our architecture (left) and the unsupervised learning losses (right).

A shared encoder composed of VGG16 and an FPN encodes the first and second images. Point features are sampled at the locations of the ordered contour points, indicated by rainbow colors from red to purple. The point features are fed to the cross-attention modules either as the query or as the key and value. Lastly, a shared FCNN takes the fused features and regresses the forward or backward offsets. The cycle consistency, mechanical-normal, and mechanical-linear losses are shown in red.
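The data flow in the caption can be illustrated with a minimal NumPy sketch. The caption does not specify the attention layout, the fusion step, the FCNN depth, or the loss formulas, so the following are assumptions: random arrays stand in for the VGG16+FPN feature maps, point features are sampled by bilinear interpolation, cross-attention is single-head scaled dot-product attention, fusion is concatenation, the "FCNN" is a two-layer MLP, and the cycle consistency loss is a simplified form in which backward offsets applied to the forward-tracked points should return to the starting points. All function and parameter names are hypothetical. The mechanical-normal and mechanical-linear losses are not sketched because the caption does not define them.

```python
import numpy as np

def bilinear_sample(feature_map, points):
    """Sample a (C, H, W) feature map at float (x, y) pixel points; returns (N, C)."""
    C, H, W = feature_map.shape
    x = np.clip(points[:, 0], 0, W - 1)
    y = np.clip(points[:, 1], 0, H - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, W - 1), np.minimum(y0 + 1, H - 1)
    wx, wy = x - x0, y - y0
    # Each gather has shape (C, N); interpolate along x, then along y.
    top = feature_map[:, y0, x0] * (1 - wx) + feature_map[:, y0, x1] * wx
    bottom = feature_map[:, y1, x0] * (1 - wx) + feature_map[:, y1, x1] * wx
    return (top * (1 - wy) + bottom * wy).T

def cross_attention(query_feats, kv_feats, w_q, w_k, w_v):
    """Single-head scaled dot-product attention (assumed layout)."""
    q, k, v = query_feats @ w_q, kv_feats @ w_k, kv_feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    return attn @ v

def predict_offsets(src_feats, dst_feats, p):
    """Shared head: attend src -> dst, fuse by concatenation, regress (N, 2) offsets."""
    attended = cross_attention(src_feats, dst_feats, p["w_q"], p["w_k"], p["w_v"])
    fused = np.concatenate([src_feats, attended], axis=1)
    hidden = np.maximum(fused @ p["w1"] + p["b1"], 0.0)  # ReLU
    return hidden @ p["w2"] + p["b2"]

def cycle_consistency_loss(points, forward_offsets, backward_offsets):
    """Simplified cycle loss: forward then backward should land back on the start."""
    returned = points + forward_offsets + backward_offsets
    return np.mean(np.linalg.norm(returned - points, axis=1))

# Hypothetical sizes; random feature maps replace the VGG16+FPN encoder output.
rng = np.random.default_rng(0)
C, H, W, D, N = 8, 32, 32, 16, 20
feat1 = rng.standard_normal((C, H, W))
feat2 = rng.standard_normal((C, H, W))
theta = np.linspace(0, 2 * np.pi, N, endpoint=False)
points1 = np.stack([16 + 8 * np.cos(theta), 16 + 8 * np.sin(theta)], axis=1)
points2 = points1 + np.array([1.5, -0.5])  # a shifted contour in the second image
params = {
    "w_q": 0.1 * rng.standard_normal((C, D)),
    "w_k": 0.1 * rng.standard_normal((C, D)),
    "w_v": 0.1 * rng.standard_normal((C, D)),
    "w1": 0.1 * rng.standard_normal((C + D, D)),
    "b1": np.zeros(D),
    "w2": 0.1 * rng.standard_normal((D, 2)),
    "b2": np.zeros(2),
}

# Forward: first-image points query second-image points.
fwd = predict_offsets(bilinear_sample(feat1, points1), bilinear_sample(feat2, points2), params)
tracked = points1 + fwd
# Backward: the same (shared) head, with the roles of the two images swapped.
bwd = predict_offsets(bilinear_sample(feat2, tracked), bilinear_sample(feat1, points1), params)
loss = cycle_consistency_loss(points1, fwd, bwd)
print(fwd.shape, bwd.shape, loss)
```

Sharing one `params` dictionary between the forward and backward calls mirrors the caption's "shared FCNN"; in a trained model the loss would be minimized with respect to these weights rather than just evaluated.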