Abstract

Artificial intelligence (AI) has emerged as a transformative force in various sectors, including medicine and healthcare. Large language models such as ChatGPT showcase AI’s potential by generating human-like text from prompts. ChatGPT’s adaptability holds promise for reshaping medical practices, improving patient care, and enhancing interactions among healthcare professionals, patients, and data. In pandemic management, ChatGPT can rapidly disseminate vital information. It can also serve as a virtual assistant in surgical consultations, support dental practices, simplify medical education, and aid in disease diagnosis. A systematic literature review using the PRISMA approach explored AI’s transformative potential in healthcare, highlighting ChatGPT’s versatile applications, limitations, motivations, and challenges. A total of 82 papers were categorised into eight major areas: G1: treatment and medicine, G2: buildings and equipment, G3: parts of the human body and areas of the disease, G4: patients, G5: citizens, G6: cellular imaging, radiology, pulse and medical images, G7: doctors and nurses, and G8: tools, devices and administration. Balancing AI’s role with human judgement remains a challenge. In conclusion, ChatGPT’s diverse medical applications demonstrate its potential for innovation, and this study serves as a guide for students, academics, and researchers in medicine and healthcare.

Keywords: ChatGPT, cellular imaging, medicine, healthcare, image, dental, disease, radiology and sonar, pharmaceutical

1. Introduction

Artificial intelligence (AI) has emerged as a powerful tool with transformative potential across various sectors, and the field of medicine and healthcare is no exception. One remarkable application of AI in this realm is the development of large language models, such as ChatGPT, which have gained significant attention for their ability to generate human-like text based on prompts. ChatGPT’s versatile capabilities hold promise for reshaping medical practices, enhancing patient care, and revolutionising the way healthcare professionals interact with both patients and data [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32].

OpenAI launched the Chat Generative Pre-trained Transformer (ChatGPT) in November 2022, changing how people interact with AI models across many fields [4]. It has since been applied in a wide range of areas: healthcare and management [5,6,7,8,9], cosmetic orthognathic surgery consultation [10], dental practices [8,11,12], medical scientific articles and education [6,9,13,14,15,16,17,18,19,20,21,22,23], support in disease diagnosis [24], radiology, sonar imaging, and writing assessment [13,25,26,27,28,29,30,31,32,33], pharmaceutical research and treatment [11,24,34,35,36,37,38], and navigating limitations and ethical considerations [18,19,31,35,38,39,40,41]. Several open issues remain for AI applications such as ChatGPT, particularly in medical use. The first is model interpretability: the AI’s decision making must be transparent, especially in complex medical scenarios. Equally critical is combatting data bias in order to prevent healthcare disparities. There is also a pressing need for mechanisms that allow continuous learning, so that ChatGPT can stay abreast of the latest medical research and guidelines. Moreover, ChatGPT must be integrated with existing healthcare IT ecosystems in a way that guarantees seamless operation. Lastly, it is essential to adhere strictly to ethical and legal standards, maintaining trust and compliance in healthcare applications through patient confidentiality and informed consent.

The innovative aspects of ChatGPT’s deployment in medicine include a variety of unique applications, such as assisting in the identification of rare diseases and providing support for men’s health, which demonstrate the model’s versatility. Advanced methodologies are at the heart of its training and effectiveness evaluation, which are tailored specifically for healthcare contexts to ensure relevance and dependability. The work is fundamentally interdisciplinary, as it merges AI with expert medical insights in order to tackle significant healthcare challenges. The aim of this synergy is to deliver tangible benefits, including enhanced patient outcomes and streamlined healthcare processes, and thereby a substantial real-world impact on the medical field. The main areas of ChatGPT’s application in medicine and healthcare are as follows.

1.1. Aiding Cosmetic Orthognathic Surgery Consultations

Within the realm of surgical procedures, such as cosmetic orthognathic surgery, ChatGPT can function as a virtual assistant, offering patients crucial preoperative information. Potential candidates who are considering cosmetic orthognathic surgery may be seeking information regarding the procedure, recovery, risks, and benefits. ChatGPT can offer standardised and accurate responses, which prepare patients for their consultations and help them make informed decisions [5,6,7,8,9,10,42,43].

1.2. Enhancing Medical Education

Medical education can benefit significantly from AI-driven tools like ChatGPT. Medical students and professionals can engage with ChatGPT to access quick references, clarify doubts, and explore complex medical concepts. Its ability to explain intricate medical terminology in a comprehensible manner aids in knowledge acquisition, fostering continuous learning and improving medical literacy [6,9,13,14,15,16,17,18,19,20,21,22,23].

1.3. Support in Disease Diagnosis

The potential of ChatGPT as a diagnostic tool holds promise in the early detection of diseases. ChatGPT can generate potential differential diagnoses by analysing patient-reported symptoms and medical history, thereby aiding healthcare providers in narrowing down diagnostic possibilities. However, caution must be exercised as diagnosis requires domain-specific expertise [24].

1.4. Cellular Imaging, Revolutionizing Radiology, and Sonar Imaging

Cellular imaging is the use of diverse techniques and technologies to visualise and study cells at the microscopic level. It allows scientists and researchers to examine the structure, function, and behaviour of individual cells or cell populations. Cellular imaging techniques include light microscopy, fluorescence microscopy, confocal microscopy, electron microscopy, and various other advanced imaging methods. These techniques are widely used in fields such as biology, medicine, and biotechnology to better understand cellular processes, cell interactions, and disease mechanisms.

The interpretation of medical images, such as radiology and sonar scans, requires precision and accuracy. The potential of ChatGPT lies in its ability to analyse and generate descriptions for medical images, which can enhance the workflow of radiologists. It has the ability to provide initial observations, which can highlight regions of interest and assist radiologists in their analyses [13,25,26,27,28,29,30,31,32,33].

1.5. Pharmaceutical Research and Treatment

In the realm of pharmaceutical research, ChatGPT can contribute by sifting through vast volumes of scientific literature, identifying potential drug candidates, and suggesting innovative research directions. It can assist in drug discovery, research proposal writing, and summarising complex medical research, expediting the research process [11,24,34,35,36,37,38].

1.6. Navigating Limitations and Ethical Considerations

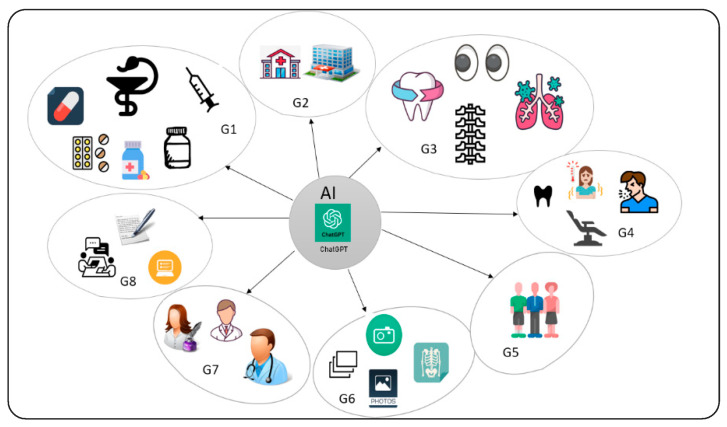

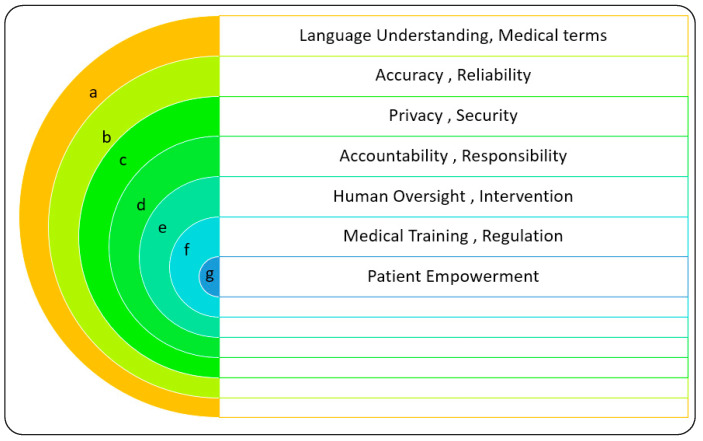

While ChatGPT offers numerous opportunities, it also poses certain limitations. The responses are based on the data on which it was trained, potentially resulting in biased or inaccurate information. Ethical concerns arise when content generated by AI is mistaken for the expertise of a human. The challenge of balancing the role of AI with the need for human judgement and expertise remains [18,19,27,31,35,38,39,40,41], as depicted in Figure 1.

Figure 1.

Categories and groups covered by all studies of the review. G1: treatment and medicine, G2: buildings and equipment, G3: parts of the human body and areas of the disease, G4: patients, G5: citizens, G6: cellular imaging, radiology, pulse, and medical images, G7: doctors and nurses, and G8: tools, devices and administration.

Johnson, D et al. [5] evaluated the accuracy and completeness of ChatGPT in answering medical queries by academic physician specialists. The results generally show accurate information with limitations, which suggests a need for further research and model development. Mohammad H et al. [13] conducted a hybrid panel discussion that focused on the integration of ChatGPT, a large language model, in the fields of education, research, and healthcare. The event gathered responses from attendees, both in-person and online, using an audience interaction platform. According to the study, approximately 40% of the participants had used ChatGPT, and more trainees than faculty members had experimented with it. Those who had used ChatGPT showed greater interest in its potential application across a range of contexts. Uncertainty was observed regarding its use in education, and pros and cons were discussed for its integration in education, research, and healthcare. Perspectives varied according to role (trainee, faculty, and staff), highlighting the need for further discussion and exploration of the implications and optimal uses of ChatGPT in these sectors. The study emphasises the importance of a deliberate and measured approach to adoption in order to mitigate potential risks and challenges. Sallam et al. [44] assessed the advantages and disadvantages of ChatGPT in healthcare education. While it is beneficial for learning, it lacks emotional interaction and poses plagiarism risks.

In conclusion, the integration of ChatGPT into medicine and healthcare holds significant potential to transform various aspects of patient care, education, diagnosis, and research. As technology continues its advancement, it becomes crucial to responsibly harness the capabilities of ChatGPT, while also addressing its limitations and ethical considerations. The exploration of ChatGPT’s applications across diverse medical domains emphasises its role as a catalyst for innovation and improvement in the medical landscape.

The main objectives of the study can be succinctly summarised as follows:

To investigate the various applications of ChatGPT in healthcare, such as pandemic management, surgical consultations, dental practices, medical education, disease diagnosis, cellular imaging, sonar imaging, radiology, and pharmaceutical research.

To investigate the potential benefits and risks of integrating ChatGPT in healthcare, assessing its impact on patient care, medical processes, and ethical considerations.

To investigate the ethical issues and the need to balance AI’s role in healthcare settings with human judgement.

To offer perspectives and recommendations for the responsible adoption and use of ChatGPT in medicine in general, and cellular imaging in particular, while addressing limitations and ethical concerns.

2. Materials and Methods

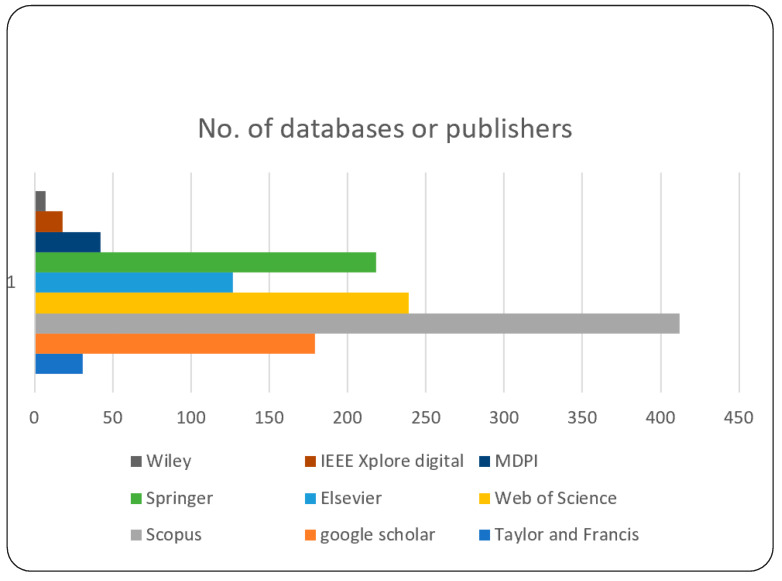

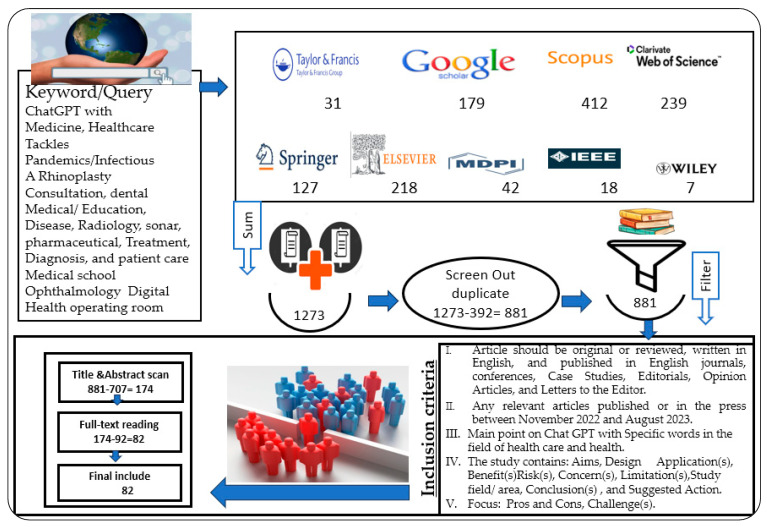

The search was built around the following query words (keywords): ChatGPT combined with “Medicine”, “Healthcare”, “Tackles”, “Pandemics/Infectious”, “A Cosmetic orthognathic surgery Consultation”, “dental Medical/Education”, “Disease, Radiology”, “sonar”, “pharmaceutical”, “Treatment”, “Diagnosis” and “patient care”, “Medical school”, “Ophthalmology”, “Digital Health”, and “operating room”. Our study is restricted to English-language studies. The following digital databases and publishers were searched for target papers, with the number of articles retrieved from each given in parentheses: Taylor and Francis (31), Google Scholar (179), Scopus (412), Web of Science (239), Elsevier (127), Springer (218), MDPI (41), IEEE Xplore (18), and Wiley (7), as shown in Figure 2.

Figure 2.

Number of articles per database/publisher.
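The pairing of "ChatGPT" with each query word can be sketched as a small query builder. This is only an illustration: the Boolean syntax (`AND`, quoted phrases) and the function name `build_queries` are assumptions, since the exact search strings submitted to each database are not stated, and each database has its own query syntax.

```python
# A subset of the query words listed in the search description;
# the remaining keywords would be added in the same way.
KEYWORDS = [
    "Medicine",
    "Healthcare",
    "Radiology",
    "Pharmaceutical",
    "Diagnosis",
    "Patient care",
    "Digital Health",
]


def build_queries(anchor: str, keywords: list[str]) -> list[str]:
    """Pair the anchor term with each keyword as a quoted AND query.

    The AND/quote syntax is an assumption, not the documented search string.
    """
    return [f'"{anchor}" AND "{kw}"' for kw in keywords]


queries = build_queries("ChatGPT", KEYWORDS)
for query in queries:
    print(query)
```

Generating one query per keyword keeps each search result set attributable to a single term, which can help when reporting per-keyword hit counts.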

The studies were chosen by conducting literature searches, followed by three rounds of screening and filtering. In the first round, duplicate articles were eliminated using Mendeley software (1.19.4-win32), and only publications from the last eight months were retained.
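The duplicate-removal step can be illustrated with a minimal sketch. It does not reproduce Mendeley's matching logic, which is not described here; it assumes that two records are duplicates when their titles match after lower-casing and stripping punctuation. The record fields and sample titles are invented for illustration.

```python
import re


def normalise_title(title: str) -> str:
    """Lower-case a title and collapse punctuation/whitespace runs to one space."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def drop_duplicates(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each normalised title."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        key = normalise_title(rec["title"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Invented records for illustration only.
sample = [
    {"title": "ChatGPT in Radiology: A Review", "source": "Scopus"},
    {"title": "ChatGPT in radiology - a review", "source": "Web of Science"},
    {"title": "ChatGPT and Dental Education", "source": "MDPI"},
]
unique = drop_duplicates(sample)
print(len(unique))
```

Matching on titles alone can merge distinct papers with identical titles; matching on DOI where available would be a stricter alternative.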

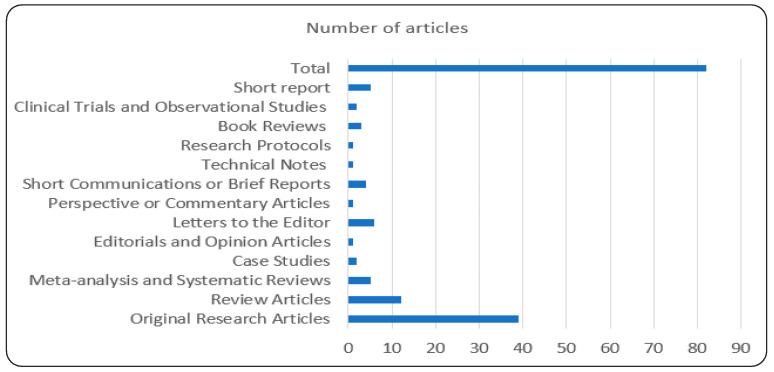

As part of this investigation, a systematic literature review was conducted using the PRISMA methodology (Supplementary Materials) [40,45,46,47,48,49,50]. We included all types of articles, with the number of each type given in parentheses: original research articles (39), review articles (12), meta-analyses and systematic reviews (5), case studies (2), editorials and opinion articles (1), letters to the editor (6), perspective or commentary articles (1), short communications or brief reports (4), technical notes (1), research protocols (1), book reviews (3), clinical trials and observational studies (2), and short reports (5), as shown in Figure 3.

Figure 3.

Distribution of articles by type.
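As a quick consistency check, the per-type counts reported for Figure 3 can be tallied. The sketch below uses only the numbers given in the text; the dictionary name is illustrative.

```python
# Counts per article type, in the order reported in the text.
ARTICLE_TYPES = {
    "Original research articles": 39,
    "Review articles": 12,
    "Meta-analyses and systematic reviews": 5,
    "Case studies": 2,
    "Editorials and opinion articles": 1,
    "Letters to the editor": 6,
    "Perspective or commentary articles": 1,
    "Short communications or brief reports": 4,
    "Technical notes": 1,
    "Research protocols": 1,
    "Book reviews": 3,
    "Clinical trials and observational studies": 2,
    "Short reports": 5,
}

total = sum(ARTICLE_TYPES.values())
print(total)  # 82, matching the final set of included papers
```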

2.1. Inclusion Criteria

The article should be an original or review article, written in English, and published as a journal or conference paper, case study, editorial, opinion article, or letter to the editor.

The study contains the specified keywords.

The article was published or in press between November 2022 and August 2023.

The main focus is on ChatGPT, combined with the specified keywords, in the field of healthcare and health.

The article reports the study’s objectives, design, applications, benefits, risks, concerns, limitations, study field/area, conclusions, and recommended actions.

The article addresses pros and cons and challenges. The selection process and all the inclusion criteria are shown in Figure 4.

Figure 4.

Flowchart for selecting studies with specific query and eligibility criteria.
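The machine-checkable inclusion criteria (language, date window, and keyword match) can be expressed as a simple filter, sketched below. The `Record` fields, the title-only keyword matching, and the exact day boundaries of the window are assumptions; the criteria state months only, and the remaining criteria require reading the full text.

```python
from dataclasses import dataclass
from datetime import date

# Window from the inclusion criteria; day boundaries are assumed.
WINDOW_START = date(2022, 11, 1)
WINDOW_END = date(2023, 8, 31)


@dataclass
class Record:
    """Hypothetical bibliographic record used for screening."""
    title: str
    language: str
    published: date


def is_eligible(rec: Record, keywords: list[str]) -> bool:
    """Apply the language, date-window, and keyword criteria to one record."""
    in_window = WINDOW_START <= rec.published <= WINDOW_END
    is_english = rec.language.lower() == "english"
    title = rec.title.lower()
    mentions_chatgpt = "chatgpt" in title
    has_keyword = any(kw.lower() in title for kw in keywords)
    return in_window and is_english and mentions_chatgpt and has_keyword


keywords = ["radiology", "diagnosis", "dental"]
in_scope = Record("ChatGPT in radiology reporting", "English", date(2023, 3, 15))
too_early = Record("ChatGPT and diagnosis", "English", date(2022, 10, 30))
print(is_eligible(in_scope, keywords), is_eligible(too_early, keywords))
```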

2.2. Data Gathering Procedure

All included articles were reviewed, examined, and summarised in accordance with their basic classifications, then saved as Microsoft Word and Excel files to simplify the filtering process. The authors read the full text of every article. We developed the proposed taxonomy from highlights and comments on the surveyed works, together with a running classification of all the articles. The remarks were recorded on paper or in electronic form, depending on each author’s preference. After this, a further step was carried out to characterise, describe, tabulate, and draw conclusions about the key findings.

2.3. Research Questions

The selection of research questions is a critical step in shaping the purpose of the study and the anticipated results. Consequently, we have formulated the following research questions to align with the primary objective of our systematic literature review:

RQ1: How does ChatGPT contribute to pandemic management and what specific advantages does it offer in disseminating critical information during health crises?

RQ2: How is ChatGPT utilised in the field of dental practices and how does it enhance the overall patient experience in this context?

RQ3: What challenges and ethical considerations are associated with the integration of ChatGPT into medical practices and healthcare settings?

RQ4: What are the key components associated with work related to ChatGPT in medicine and healthcare and their contributions in ChatGPT applications in the field?

3. Results

The initial query search yielded 1273 articles published between November 2022 and August 2023: 31 from Taylor and Francis, 179 from Google Scholar, 412 from Scopus, 239 from Web of Science, 127 from Elsevier, 218 from Springer, 41 from MDPI, 18 from IEEE Xplore, and 7 from Wiley.
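The per-source counts can be tallied in a few lines. The sketch below uses only the figures listed above. Summing the nine counts as listed gives 1272, one fewer than the stated total of 1273; the text does not indicate where the difference arises.

```python
# Articles retrieved per database/publisher, as listed in the text.
DATABASE_COUNTS = {
    "Taylor and Francis": 31,
    "Google Scholar": 179,
    "Scopus": 412,
    "Web of Science": 239,
    "Elsevier": 127,
    "Springer": 218,
    "MDPI": 41,
    "IEEE Xplore": 18,
    "Wiley": 7,
}
REPORTED_TOTAL = 1273  # total stated for the initial query search

listed_total = sum(DATABASE_COUNTS.values())
difference = REPORTED_TOTAL - listed_total
print(listed_total, difference)
```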

The papers were filtered according to the sequence adopted in this research and were divided into two categories: 1273 articles were published in the last eight months (November 2022 to August 2023) and 392 papers appeared in all nine databases or publishers, resulting in a total of 1547 papers. Following a comprehensive scan of the titles and abstracts of the papers, an additional 1273 papers were excluded. After the final full-text reading, 92 papers were excluded. The final set consisted of 82 papers, which were divided into eight major categories/groups: G1: treatment and medicine; G2: buildings and equipment; G3: parts of the human body and areas of the disease; G4: patients; G5: citizens; G6: cellular imaging, radiology, pulse, and medical images; G7: doctors and nurses; and G8: tools, devices, and administration.

The first category (G1) comprised 27 articles (32.93%) focused on treatment and medicine. The second category (G2) comprised two articles (2.44%) focused on buildings and equipment. The third category (G3) comprised 14 articles (17.07%) focused on parts of the human body and areas affected by disease. The fourth category (G4) comprised eight articles (9.76%) focused on patients. The fifth category (G5) comprised three articles (3.66%) focused on citizens. The sixth category (G6) comprised four articles (4.88%) on cellular imaging, radiology, pulse, and medical images. The seventh category (G7) comprised 17 articles (20.73%) focused on doctors and nurses. The eighth category (G8) comprised seven articles (8.54%) focused on tools, devices, and administration.
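The category shares follow directly from the counts: each percentage is the category count divided by the 82 included papers. A minimal sketch of that calculation, using only the counts reported above (the variable names are illustrative):

```python
# Number of included papers per category, as reported in the text.
CATEGORY_COUNTS = {
    "G1": 27,
    "G2": 2,
    "G3": 14,
    "G4": 8,
    "G5": 3,
    "G6": 4,
    "G7": 17,
    "G8": 7,
}

total = sum(CATEGORY_COUNTS.values())

# Share of each category as a percentage, rounded to two decimals.
shares = {group: round(100 * n / total, 2) for group, n in CATEGORY_COUNTS.items()}

for group, share in shares.items():
    print(f"{group}: {CATEGORY_COUNTS[group]} articles ({share:.2f}%)")
```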

3.1. RQ1: How Does ChatGPT Contribute to Pandemic Management and What Specific Advantages Does It Offer in Disseminating Critical Information during Health Crises?

ChatGPT plays a crucial role in pandemic management by aiding in the swift and accurate dissemination of critical information. Its natural language processing capabilities enable it to generate coherent responses, making it a valuable tool for healthcare organisations. Specific advantages include its ability to provide rapid updates, preventive measures, and medical guidelines to the public. ChatGPT assists in addressing queries and concerns, ensuring accurate information flow and timely interventions during health crises [5,6,7,8,9,42,43].

3.2. RQ2: How Is ChatGPT Utilised in the Field of Dental Practices, and How Does It Enhance the Overall Patient Experience in This Context?

ChatGPT is integrated into dental practices in order to empower dental assistants and improve the overall patient experience. It assists in the handling of patient inquiries, the provision of oral health tips, and the guidance of patients through postoperative care instructions. ChatGPT ensures standardised and accurate responses, thereby assisting patients in making informed decisions regarding their dental care. This AI integration revolutionises patient communication, appointment scheduling, and postoperative support in dental practices [8,11,12].

3.3. RQ3: What Challenges and Ethical Considerations Are Associated with the Integration of ChatGPT into Medical Practices and Healthcare Settings?

The integration of ChatGPT into medical practices and healthcare settings presents challenges and ethical considerations. One challenge is that the responses of ChatGPT are based on the data on which it was trained, which may result in biased or inaccurate information. Ethical concerns arise when content generated by AI is mistaken for the expertise of a human. The challenge lies in striking a balance between the capabilities of AI and the necessity for human judgement and expertise. Ensuring that AI does not replace human healthcare professionals entirely while leveraging its advantages is a significant ethical consideration [18,19,27,31,35,38,39,40,41]. Careful thought and responsible adoption are essential to mitigate potential risks and challenges.

3.4. RQ4: What Are the Key Components Associated with Work Related to ChatGPT in Medicine and Healthcare and Their Contributions in ChatGPT Applications in the Field?

The findings of the reviewed studies are summarised in Table 1, which reports for each article the aims and design, the applications and benefits, the risks, concerns, and limitations, the study field/area and category (G), and the conclusions and suggested actions. Table 2 summarises the pros and cons or challenges of some of the reviewed papers.

Table 1.

The summary of related works includes the study’s goal, design, application(s), benefit(s), risk(s), concern(s), limitation(s), study field/area, conclusion(s), and suggested action for ChatGPT with medicine and healthcare.

| No. | References | Aims, Design | Application(s), Benefit(s) | Risk(s), Concern(s), Limitation(s) | Study Field/Area and Categories (G) | Conclusion(s), Suggested Action |

|---|---|---|---|---|---|---|

| 1 | [51] | Evaluate ChatGPT’s medical applications via systematic review of articles. | Streamline tasks, improve care, decision making, communication in medicine. |

|

Medicine, G4. | ChatGPT’s medical potential is promising but faces challenges. Ethical, safety considerations vital for transforming healthcare with AI. |

| 2 | [13] | Study examines ChatGPT’s integration in education, research, and healthcare perspectives. | ChatGPT’s applications: text generation, literature analysis, image learning in education, healthcare, and research. |

|

Education, healthcare, research, G1. |

Responsible AI use demands transparency, equity, reliability, and non-harm principles. |

| 3 | [44] | Examine ChatGPT’s utility in healthcare education, weigh pros and cons. | Enhances personalised learning, clinical reasoning, and skills development. |

|

Medical, dental, pharmacy, and public health, G1. |

ChatGPT’s integration in healthcare education offers potential advantages. Further research required to address ethical, bias, and accuracy concerns. |

| 4 | [5] | Assess ChatGPT’s medical query accuracy and completeness for physicians. | ChatGPT as medical information source with substantial accuracy and potential. |

|

Medical, G4, G5. | ChatGPT shows potential as medical resource but requires validation and improvement. |

| 5 | [15] | Evaluate ChatGPT’s performance in medical education, content generation, and deception. | Medical education, skill practice, patient interaction simulation, content generation. |

|

Medical, G1, G4, G5. | ChatGPT’s potential in education is transformative yet raises ethical concerns. |

| 6 | [6] | Evaluate ChatGPT’s utility in healthcare education, research, and practice. Systematic Review. |

Improved writing, efficient research, personalised learning, streamlined practice. |

|

Healthcare Education, Research, Practice, G5, G7 | Widespread LLM use is inevitable; ethical guidelines crucial. ChatGPT’s potential in healthcare must be carefully weighed against risks. Careful implementation with human expertise essential to avoid misuse and harm. |

| 7 | [6] | Assess ChatGPT’s utility and limitations in healthcare education, research, practice. Systematic Review. |

Efficient research, personalised learning, streamlined practice, enhanced writing. |

|

Healthcare Education, Research, Practice, G 5, G8. | Imminent LLM adoption, guided by guidelines, balances potential and risks. |

| 8 | [16] | Explore ChatGPT’s use in medical education perceptions and experiences. | ChatGPT can aid information collection, saving time and effort. |

|

Medical Education, G3, G4, G7. | Study offers insights: ChatGPT’s pros acknowledged, concerns raised, need further research for successful integration in medical education. |

| 9 | [7] | Investigate ChatGPT’s potential in simplifying medical reports for radiology, Radiology Reports (Case Study). |

ChatGPT simplifies radiology reports; improves patient-centred care. |

|

Medical/Radiology Reports, G6. | Positive potential of LLMs for radiology report simplification; need for technical improvements and further research. |

| 10 | [52] | Analyse differences between human-written medical texts and ChatGPT-generated texts, develop detection methods. | Enhance trustworthy medical text generation, improve detection accuracy. |

|

Medical Texts, G5. |

ChatGPT-generated medical texts differ from human-written ones, detection methods effective. Trustworthy application of large language models in medicine promoted. |

| 11 | [9] | Summarise ChatGPT’s role in medical education and healthcare literature, Hybrid Literature Review. | Insight into ChatGPT’s impact on medical education, research, writing. |

|

Presence G5, G6. | Review highlights ChatGPT’s medical role, urges criteria for co-authorship. |

| 12 | [8] | Review the use of ChatGPT in medical and dental research. | ChatGPT assists in academic paper search, summarisation, translation, and scientific writing. |

|

Medical/Dental Research, G7. | ChatGPT aids research but ethical concerns and limitations require examination. |

| 13 | [53] | Investigate ChatGPT’s healthcare applications via an interview. | Rapid, informative response generation; written content quality. |

|

Health Care, G1 G5, G6. | Not available. |

| 14 | [54] | Analyse ChatGPT’s use in healthcare, emphasising its status and potential. Taxonomy/Systematic Review. | Provides insights into ChatGPT’s medical applications, informs healthcare professionals. |

|

Healthcare G4, G5, G7, G8. |

This study evaluates ChatGPT’s medical applications, emphasising insights and limitations. Clinical deployment remains unfeasible due to current performance. |

| 15 | [39] | Analyse ChatGPT’s AI applications, benefits, limitations, ethics in healthcare. | Medical research, diagnosis aid, education, patient assistance, updates. |

|

Medicine, G4. | ChatGPT has healthcare applications, but ethical concerns and limitations need addressing. |

| 16 | [55] | Evaluate ChatGPT’s medical utility and accuracy in healthcare discourse. | Rapid response for medical queries, user-friendly interaction. |

|

Healthcare/research, G2, G5, G6. | ChatGPT offers limited utility in healthcare, requiring fact-checking and awareness. |

| 17 | [56] | Discuss opportunities and risks of ChatGPT’s implementation in various fields. | Easy communication, potential for improving content quality. |

|

Medicine, science, academic publishing, G1, G4, G5, G6 |

Embrace AI, but thoughtfully, considering benefits and risks. |

| 18 | [57] | Evaluate ChatGPT’s potential as a medical chatbot, addressing concerns. | Enhancing healthcare access with technology, while raising ethical concerns. |

|

Medical/ chatbots, G1, G5, G6, G8. |

Balancing ChatGPT’s healthcare potential and concerns requires careful consideration, safeguards, and ongoing improvement. |

| 19 | [17] | Evaluate ChatGPT’s performance in medical question answering. | Assessing ChatGPT’s accuracy in medical exam questions. |

|

Medical/ Licensing Exams, G1, G7. |

ChatGPT shows promise as a medical education tool for answering questions with reasoning and context. |

| 20 | [40] | Evaluate ChatGPT’s role in medical research. | Enhances drug development, literature review, report improvement, personalised medicine, and more. |

|

Medical/ Research, G4, G5. |

ChatGPT offers transformative potential but requires addressing accuracy, integrity, and ethical considerations for clinical application. |

| 21 | [58] | Investigate the use of ChatGPT in expediting literature review articles creation, focusing on Digital Twin applications in healthcare. Literature Review. | Utilising ChatGPT for literature review accelerates knowledge compilation, easing academic efforts and focusing on research. |

|

Healthcare/ Digital Twin, G1, G3, G4. |

ChatGPT generated Digital Twin in healthcare articles. Low plagiarism in author-written text, high similarity in abstract paraphrases. AI accelerates knowledge expression, academic validity monitored through citations. |

| 22 | [59] | Explore ChatGPT’s healthcare applications, discuss limitations, and benefits. | Medical data analysis, chatbots, virtual assistants, language processing. |

|

Healthcare, G6, G8. | ChatGPT has versatile healthcare applications but faces ethical, privacy, and accuracy concerns. |

| 23 | [60] | Examine AI impact on medical publishing ethics and guidelines. | AI-generated content, democratisation of knowledge dissemination, multi-language support. |

|

Medical/ publishing, G3, G5, G6. |

AI like ChatGPT can democratise knowledge, but ethical, accuracy, and access challenges need comprehensive consideration and guidelines. |

| 24 | [25] | Evaluate AI impact on academic writing integrity and learning enhancement. | Academic writing, learning enhancement, objective evaluation of AI-generated content. |

|

Medical/ imaging, G6. |

Conclusion: ChatGPT shows potential for learning enhancement but risks academic integrity and lacks depth for advanced subjects. |

| 25 | [61] | Explore ChatGPT’s capabilities for medical education and practice. | ChatGPT aids medical education, generates patient simulations, quizzes, research summaries, and promotes AI learning. |

|

Medical education, G1, G4, G5, G8. | ChatGPT shows potential for medical education, research, but faces limitations and challenges. JMIR Medical Education is launching a theme issue on AI. |

| 26 | [26] | To explore the potential applications of ChatGPT in assisting researchers with tasks such as literature review, data analysis, hypothesis creation, and text generation. | Literature review, data analysis, hypothesis creation, text generation. |

|

Medical research, G6, G8. | Scientific caution is needed in using ChatGPT due to plagiarism risks, misleading outcomes, lack of context, and AI limitations. |

| 27 | [62] | To examine the potential impact of large language models (LLM), specifically “ChatGPT”, on the nuclear medicine community and its reliability in generating nuclear medicine and molecular imaging-related content. | Text generation, collaborative tool |

|

Medicine/ nuclear, G6. |

ChatGPT’s multiple-choice answering accuracy was 34%, surpassing random guessing. Improving training and learning capabilities is crucial. |

| 28 | [63] | To explore applications and limitations of the language model ChatGPT in healthcare. | Investigating ChatGPT’s potential in clinical practice, scientific production, reasoning, and education. |

|

Healthcare, Clinical, Research Scenarios, G5, G7 |

ChatGPT’s utilisation in healthcare requires cautiousness, considering its capabilities and ethical concerns. |

| 29 | [64] | To comprehensively review ChatGPT’s performance, applications, challenges, and future prospects. | Explore ChatGPT’s potential across various domains, anticipate future advancements, and guide research and development. |

|

Mult, medical, G6. | Review identifies ChatGPT’s potential, applications, limitations, and suggests future improvements. |

| 30 | [65] | This study aims to examine the potential and limitations of ChatGPT in medical research and education, focusing on its applications and ethical considerations. | ChatGPT can support researchers in literature review, data analysis, hypothesis generation, and medical education. Its AI capabilities enable efficient information extraction and text generation, enhancing research and learning processes. | Clinical, translational medicine, G3, G6. | ChatGPT’s applications in scientific research must be approached cautiously, considering evolving limitations and human input, with focus on research ethics and integrity. |

| 31 | [28] | To explore the potential of Large Language Models (LLMs) like OpenAI’s ChatGPT in medical imaging, investigating their impact on radiology and healthcare. | LLMs enhance radiologists’ interpretation skills, facilitate patient–doctor communication, and optimise clinical workflows, potentially improving medical diagnosis and treatment planning. | Medical/imaging, G6. | Large Language Models (LLMs) in medical imaging promise revolutionary impact with research and ethics. |

| 32 | [66] | Investigate ChatGPT’s role in medical education, exploring its applications, benefits, limitations, and challenges; Scoping Review. | ChatGPT aids automated scoring, personalised learning, case generation, research, content creation, and translation in medical education. | Medical/Education, G1, G5. | ChatGPT enhances medical education with personalised learning, yet its limitations, biases, and challenges warrant cautious implementation and evaluation. |

| 33 | [67] | This study examines if ChatGPT-4 can provide accurate and safe medical information to patients considering blepharoplasty. | ChatGPT-4 aids patient education, offers evidence-based information, and improves communication between medical professionals and patients. | Medical/Blepharoplasties, G1, G5, G7. | ChatGPT-4 shows potential in patient education for cosmetic surgery, offering accurate and clear information, but its limitations require consideration. |

| 34 | [12] | To explore the capabilities and applications of ChatGPT, a large language model developed by OpenAI. | Chatbots, language translation, text completion, question answering. | The Use of Cybersecurity to Protect Medical Information, G1, G4, G5. | Not available. |

| 35 | [10] | Explore AI language model ChatGPT’s viability as a clinical assistant. Evaluate ChatGPT’s ability to provide informative and accurate responses during initial consultations about cosmetic orthognathic surgery. | ChatGPT can assist patients with medical queries. | A cosmetic orthognathic surgery consultation, G3, G4. | ChatGPT demonstrates potential in offering valuable medical information to patients, particularly when access to professionals is restricted. However, its limitations and scope should be further investigated for safe and effective use in healthcare. |

| 36 | [34] | To examine the impact of artificial intelligence (AI) on dental practice, particularly in CBCT data management, and explore the potential benefits and limitations. | AI-driven cone-beam computed tomography (CBCT) data management can revolutionise dental practice workflow by improving efficiency and accuracy. Segmentation automation aids treatment planning and patient communication, enhancing overall care. | Standard Medical Diagnostic/dental, G3. | AI integration in dental practice, particularly CBCT data management, shows promise in enhancing efficiency, accuracy, and patient communication while facing bias and reliability challenges. |

| 37 | [35] | To explore the potential applications of ChatGPT in managing and controlling infectious diseases, focusing on information dissemination, diagnosis, treatment, and research. | ChatGPT can enhance infectious disease management by providing accurate information, aiding diagnosis, suggesting treatment options, and supporting research efforts, ultimately improving patient care and public health. | Tackles Pandemics/Infectious Disease, G4. | ChatGPT exhibits transformative potential in infectious disease management, though data reliance, medical accuracy, ethical concerns, and misuse risks require careful consideration. |

| 38 | [68] | To explore the integration of AI and algorithms to enhance physician workflow, maintain patient–physician rapport, and streamline administrative tasks. | AI can seamlessly assist doctors in clinical note generation, order selection, coding, history gathering, inbox filtering, and billing processes, improving efficiency and accuracy. | Medicine/De-Tether the Physician, G2. | Not available. |

| 39 | [69] | To explore the implications of large language models (LLMs), particularly ChatGPT, for the field of academic paper authorship and authority, and to address the ethical concerns and challenges they introduce in health professions education (HPE). | Authorship and authority assessment, scholarly communication enhancement, technological advancement reflection. | Artificial scholarship: LLMs in health professions education research, G5, G7, G8. | Not available. |

| 40 | [70] | To assess ChatGPT’s accuracy and reproducibility in responding to patient queries about bariatric surgery. | ChatGPT serves as an information source for patient inquiries about bariatric surgery, aiding patient education and enhancing their understanding of the procedure. | Bariatric Surgery, G5, G4. | ChatGPT offers accurate responses for bariatric surgery inquiries. It is a valuable adjunct to patient education alongside healthcare professionals, fostering better outcomes and quality of life through technology integration. |

| 41 | [71] | Investigate ChatGPT’s behaviour, geolocation impact, and grammatical tuning, and address performance concerns. | Inform ChatGPT’s reliable use in education and medical assessments. | Medical Licensing/Certification Examinations. | Not available. |

| 42 | [18] | Explore ethical considerations in integrating AI applications into medical education, identifying concerns and proposing recommendations. | Integration in medical education for interactive learning. Benefits include personalised instruction and improved understanding. | Medical/Education Biomedical Ethical Aspects, G3. | Integration of AI in medical education offers enhanced learning but demands a robust ethical framework, iteratively updated for evolving advancements and user input. |

| 43 | [72] | To investigate whether the use of ChatGPT technology can enhance communication in healthcare settings, leading to improved patient care and outcomes. | Explores the potential application of ChatGPT technology to address communication challenges in hospitals. By generating clear and understandable medical information, ChatGPT can bridge the communication gap between healthcare providers and patients. This could result in improved patient understanding, reduced miscommunication, and enhanced patient care quality. | Communication in hospitals, G4, G7. | ChatGPT aids hospitals in enhancing patient care and communication efficiency. |

| 44 | [24] | Evaluate ChatGPT’s reliability in diagnosing diseases and treating patients. | Preliminary medical assessments; quick information for users seeking advice. | Diagnosis and patient care, G3, G4, G7. | ChatGPT’s case responses need improvement; users require expertise for interpretation. |

| 45 | [73] | To assess ChatGPT’s abilities in generating ophthalmic discharge summaries and operative notes. | ChatGPT can potentially aid in creating accurate and rapid ophthalmic discharge summaries. | Ophthalmology, G3. | ChatGPT’s performance in ophthalmic notes is promising, rapid, and impactful with focused training and human verification. |

| 46 | [36] | To assess healthcare workers’ knowledge, attitudes, and intended practices towards ChatGPT in Saudi Arabia. | ChatGPT can be used to support medical decision making, patient support, literature appraisal, research assistance, and enhance healthcare systems. | Digital Health, G4, G5. | This study highlights ChatGPT’s potential benefits in healthcare, but concerns about accuracy and reliability persist. Trustworthy implementation requires addressing these issues. |

| 47 | [74] | To evaluate ChatGPT’s performance in comprehending complex surgical clinical data and its implications for surgical education. | Assessing ChatGPT (GPT-3.5 and GPT-4) in understanding surgical data for improved education and training. | Operating room, G1, G3. | ChatGPT, especially GPT-4, excels in comprehending surgical data. Achieving 76.4% accuracy on the board exam, its potential must be combined with human expertise. |

| 48 | [19] | To assess ChatGPT’s factual medical knowledge by comparing its performance with medical students in a progress test. | ChatGPT’s AI offers easy medical knowledge access. It aids medical education and testing. | Medical school, G3, G4, G7, G8. | ChatGPT accurately answered most MCQs in Progress Test Medicine, surpassing students’ performance in years 1–3 and performing comparably to later-stage students. |

| 49 | [20] | To explore the utility and accuracy of using ChatGPT-3, an AI language model, in understanding and discussing complex psychiatric diagnoses such as catatonia. | It investigates the potential of ChatGPT-3 as a tool for medical professionals to assist in understanding and discussing complex psychiatric diagnoses. It demonstrates the feasibility of using AI in medical research and education. | Medical Education, G1, G7. | Not available. |

| 50 | [75] | To compare ChatGPT’s performance in answering medical questions with medical students’ performance in a progress test. | ChatGPT can aid medical education by providing factual knowledge. It offers quick access to information and can complement teaching materials. | Medical education, clinical management, G1. | ChatGPT is a valuable aid in medical education, research, and clinical management but not a human replacement. Despite limitations, AI’s rapid progress can enhance medical practices if embraced thoughtfully. |

| 51 | [29] | To explore the potential application of ChatGPT as a medical assistant in Mandarin-Chinese-speaking outpatient clinics, aiming to enhance patient satisfaction and communication. | ChatGPT enhances clinic communication, aids patient satisfaction, excels in exams, and improves interactions in non-English medical settings. | Outpatient clinic settings, G4. | Not available. |

| 52 | [30] | To explore the role of AI in scientific article writing, particularly editorials, and its potential impact on rheumatologists. | AI, like ChatGPT, can aid rheumatologists in writing, improving efficiency. AI has a growing role in medicine, including image analysis. | Rheumatologist + medical writing, G6. | AI offers scientific progress, but caution is needed to avoid shallow work and hindered education. Beware AI dominance. |

| 53 | [76] | To evaluate the accuracy and effectiveness of using ChatGPT for simulating standardised patients (SP) in medical training. | ChatGPT is utilised to simulate patient interactions, saving time, resources, and eliminating complex preparation steps. Offers intelligent, colloquial, and accurate responses, potentially enhancing medical training efficiency. | Clinical training, G4. | Not available. |

| 54 | [77] | To evaluate the accuracy and effectiveness of using ChatGPT for simulating standardised patients (SP) in medical training. | ChatGPT is utilised to simulate patient interactions, saving time, resources, and eliminating complex preparation steps. Offers intelligent, colloquial, and accurate responses, potentially enhancing medical training efficiency. | Education, healthcare, research, G1. | Not available. |

| 55 | [21] | To evaluate the performance, potential applications, benefits, and limitations of ChatGPT in medical practice, education, and research. | ChatGPT demonstrates proficiency in medical exams and academic writing, potentially aiding medical education, research, and patient–provider communication. | Medical practice + education + research, G1, G7. | ChatGPT holds potential for medical practice, education, and research but requires refinements before widespread use. Human judgment remains crucial despite its sophistication. |

| 56 | [78] | To examine the necessity of traditional ethics education in healthcare training, given the capabilities of ChatGPT and other large language models (LLMs). | ChatGPT and LLMs can assist in fostering ethics competencies among future clinicians, aligning with bioethics education goals. | Healthcare + Ethics, G6, G7, G8. | Considering strengths and limitations, ChatGPT can be an adjunctive tool for ethics education in healthcare training, accounting for evolving technology. |

| 57 | [37] | To determine if ChatGPT can support multidisciplinary tumour board in breast cancer therapy planning. | ChatGPT’s application in aiding breast cancer therapy decisions; benefits include efficiency and broader information access. | Cancer cases, G3. | Artificial intelligence aids personalised therapy; ChatGPT’s potential in clinical medicine is promising, but it lacks specific recommendations for primary breast cancer patients. |

| 58 | [22] | To assess the performance of ChatGPT models in higher specialty training for neurology and neuroscience, particularly in the context of the UK medical education system. | It demonstrates the potential application of ChatGPT models in medical education, specifically in the field of neurology and neuroscience. It highlights their ability to perform at or above passing thresholds in specialised medical examinations, offering a tool for enhancing medical training and practice. | Medical + Neurology, G7, G8. | ChatGPT-4’s progress showcases AI’s promise in medical education, but close collaboration is vital for sustained relevance and reliability in healthcare. |

| 59 | [79] | Evaluate ChatGPT’s performance in answering patients’ gastrointestinal health questions. | ChatGPT assists patients with health inquiries, potentially enhancing information accessibility in healthcare. | Gastrointestinal Health, G3, G5. | ChatGPT has potential for providing health information, but further development and better source quality are necessary. |

| 60 | [80] | Investigate ChatGPT’s potential applications, especially in pharmacovigilance. | ChatGPT transforms human–machine interactions, offering innovative solutions, such as enhancing pharmacovigilance processes. | Pharmacovigilance, G3, G4, G5. | Not available. |

| 61 | [38] | Evaluate the impact of AI, particularly ChatGPT, in the field of surgery, considering both its benefits and potential harms. | AI, including ChatGPT, can enhance surgical outcomes, diagnostics, and patient experiences. It offers efficiency and precision in surgical treatments. | Intelligence, Surgery, G3, G6. | The growing influence of AI in surgery demands ethical contemplation. |

Table 2.

Summary of associated works, including field of study, study aim, pros, and cons or challenges.

| No. | References | Field of Study | Study Aim | Pros | Cons, Challenge(s) |
|---|---|---|---|---|---|
| 1 | [38] | Surgery, Implications, Ethical Considerations. | Assess AI’s Impact on Surgery. | | |
| 2 | [23] | Medical, education. | Integrate ChatGPT into Medical Education. | | |
| 3 | [6] | Healthcare Education, Research, Practice. | To assess the utility of ChatGPT in healthcare education, research, and practice, highlighting its potential advantages and limitations. | | |
| 4 | [81] | Medicine, ChatGPT. | Questioning AI, Role in Medicine. | | |
| 5 | [41] | Healthcare. | Explore ChatGPT’s Role in Medicine. | | |
| 6 | [31] | Medicine. | Explore AI’s Impact on Paediatric Research. | | |
| 7 | [32] | Medical Imaging, Radiologist. | Aims to explore the challenges faced in communicating radiation risks and benefits of radiological examinations, especially in cases involving vulnerable groups like pregnant women and children. | | |
| 8 | [82] | Medical examination, records, Chinese education. | To assess ChatGPT’s performance in understanding Chinese medical knowledge, its potential as an electronic health infrastructure, and its ability to improve medical tasks and interactions, while acknowledging challenges related to hallucinations and ethical considerations. | | |
| 9 | [83] | Infectious Disease. | This study aims to evaluate the potential utilisation of ChatGPT in clinical practice and scientific research of infectious diseases, along with discussing relevant social and ethical implications. | | |
| 10 | [84] | Medical, Test (Turing). | To evaluate the feasibility of using AI-based chatbots like ChatGPT for patient–provider communication, focusing on distinguishing responses, patient trust, and implications for healthcare interactions. | | |
| 11 | [85] | Medical applications. | Promoting Sustainable Practices in Medical 5G Communication. | | |
| 12 | [86] | Patient Outcomes, Healthcare. | To investigate the potential applications of humanoid robots in the medical industry, considering their role during the COVID-19 pandemic and future possibilities, while emphasising the irreplaceable importance of human healthcare professionals and the complementary nature of robotics. | | |
| 13 | [87] | Medical. | Evaluating AI as Collaborative Research Partners. | | |
| 14 | [88] | Medicine, History. | To explore the importance of simplifying operations and creating user-friendly interfaces in AI-based medical applications, drawing insights from the success of ChatGPT and its impact on user adoption and clinical practice. | | |
| 15 | [89] | Medical, education. | The aim of this specific aspect of the study is to critically analyse the manuscript and offer valuable feedback to improve its content, quality, and overall presentation. | | |
| 16 | [11] | Dental assistant, Nurse. | To explore AI’s impact on dental assistants and nurses in orthodontic practices, examining evolving treatment workflows. | | |
| 17 | [33] | Ultrasound image guidance. | To assess the potential of using the Segment Anything Model (SAM) for intelligent ultrasound image guidance. It explores the application of SAM in accurately segmenting ultrasound images and discusses its potential contribution to a framework for autonomous and universal ultrasound image guidance. | | |
| 18 | [90] | Health records. | Evaluate electronic medical record (EMR) foundation models. | | |
| 19 | [91] | Rheumatology. | Explore ChatGPT’s potential in rheumatology. | | |
| 20 | [40] | Medical Research. | Evaluate ChatGPT’s impact on medical research. | | |
| 21 | [92] | Clinical Practice. | Explore ChatGPT’s applications and implications in clinical practice. | | |
| 22 | [93] | Bioethics. | Explore bioethical implications of ChatGPT. | | |

4. Challenges

In healthcare and medicine, challenges refer to the difficult or complex issues, obstacles, or problems that healthcare professionals, researchers, organisations, and technologies face while providing medical care, conducting research, and addressing public health concerns. These challenges can arise from factors such as scientific advancements, technological limitations, ethical considerations, regulatory frameworks, economic constraints, and patient expectations.

In healthcare and the medical field, challenges encompass a wide range of issues, including but not limited to medical advancements, patient care, resource allocation, healthcare access, disease prevention and control, chronic disease management, healthcare costs, medical ethics, data privacy and security (protecting patient data and ensuring compliance with privacy regulations), interdisciplinary collaboration, patient education, and public health initiatives. Figure 5 summarises the challenges that chatbot models such as GPT-3 could face in healthcare and medicine.

Figure 5.

Challenges of healthcare and the medical fields.

4.1. Language Understanding and Medical Terms

Medical professionals frequently use specialised terminology and jargon that may be difficult for a general-purpose chatbot to understand. It is difficult to ensure that the chatbot understands medical terminology correctly.

Importance: this paper can illustrate the sophistication needed in natural language processing for medical contexts, underscoring the potential of advanced AI models to bridge communication gaps in healthcare.

Benefits: readers will discover the critical role of contextually aware AI in comprehending patient interactions, resulting in better patient support and care.

4.2. Accuracy and Reliability

Of utmost importance in healthcare is ensuring that the information provided by the chatbot is accurate and reliable. Medical information, which can be complex and critical, must be accurate to avoid misinformation and potential harm to patients.

Importance: this paper contributes to the ongoing conversation about the reliability of AI in clinical settings, presenting the chatbot as a tool to augment, rather than replace, human judgement.

Benefits: readers can derive advantages from comprehending the significance of error-checking mechanisms and the ongoing updating of medical databases that AI systems must integrate.
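One simple form of error checking is to compare a chatbot's multiple-choice accuracy with what pure guessing would achieve, as several of the reviewed studies do informally. The following is a minimal sketch under stated assumptions: the function names and the question counts are hypothetical and are not taken from any reviewed study.

```python
from math import comb


def accuracy(correct: int, total: int) -> float:
    """Fraction of questions answered correctly."""
    if total <= 0:
        raise ValueError("total must be positive")
    return correct / total


def chance_tail_probability(correct: int, total: int, n_options: int) -> float:
    """P(X >= correct) for X ~ Binomial(total, 1 / n_options).

    This is the probability that pure guessing would score at least
    as well as the observed result.
    """
    p = 1.0 / n_options
    return sum(
        comb(total, k) * p**k * (1.0 - p) ** (total - k)
        for k in range(correct, total + 1)
    )


# Hypothetical numbers for illustration only:
# 17 of 50 four-option questions answered correctly.
observed = accuracy(17, 50)
p_value = chance_tail_probability(17, 50, n_options=4)
print(f"accuracy = {observed:.2f}, chance level = {1 / 4:.2f}, "
      f"P(guessing does at least this well) = {p_value:.3f}")
```

A small tail probability suggests the score is unlikely to be due to guessing alone; it says nothing about whether the accuracy is clinically acceptable.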

4.3. Privacy and Security

Healthcare data are highly sensitive and subject to strict privacy regulations (like HIPAA in the United States). Chatbots need to adhere to these regulations and ensure that patient data are handled securely and confidentially.

Importance: the emphasis is on the advanced security protocols and compliance standards that AI systems must adhere to, as these are a cornerstone of healthcare technology.

Benefits: this paper educates readers on the stringent data protection measures required for AI integration in healthcare, with an emphasis on the technology’s potential to maintain confidentiality.
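As an illustration of one such data protection measure, the sketch below masks a few obvious identifiers before a message would be passed to a chatbot. It is a minimal example, not a compliant de-identification method: the pattern set, the `redact` function, and the sample message are all assumptions made for illustration.

```python
import re

# Illustrative patterns only; real de-identification needs a validated tool
# and review against the applicable regulation.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Hypothetical message containing made-up identifiers.
message = "Patient born 1980-04-12, phone 555-123-4567, email jane.doe@example.org."
print(redact(message))
# prints: Patient born [DATE], phone [PHONE], email [EMAIL].
```

Pattern matching of this kind misses names, addresses, and free-text identifiers, so it can only complement, not replace, the regulatory safeguards discussed above.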

4.4. Accountability and Responsibility

Decisions made by chatbots in healthcare settings can indeed have real-life consequences. Determining who is accountable for incorrect advice or recommendations provided by a chatbot can be a complex matter.

Importance: this section enriches the discourse on the role of AI in healthcare decision making by examining the legal and ethical frameworks that regulate AI.

Benefits: the survey can educate readers on the complex interaction between AI recommendations and human decision making, as well as the legal implications.

4.5. Human Oversight and Intervention

Although chatbots can assist in various healthcare tasks, there is a requirement for human oversight and intervention, particularly in critical situations. The challenge lies in balancing automation with the human touch.

Importance: the need for human experts to oversee AI systems is underlined, reinforcing the notion of AI as a supportive tool rather than a replacement.

Benefits: healthcare professionals can learn about the importance of their experience in supervising AI, ensuring patient safety and providing high-quality care.
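The balance between automation and human oversight can be pictured as a routing rule that sends urgent or uncertain cases to a clinician. The sketch below is hypothetical: the keyword list, the confidence threshold, and the assumption that a chatbot pipeline exposes a confidence score are illustrative choices, not features of ChatGPT or of any reviewed system.

```python
from dataclasses import dataclass

# Hypothetical keyword list and threshold, chosen only for illustration.
URGENT_TERMS = ("chest pain", "overdose", "suicid", "stroke", "unconscious")
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Draft:
    question: str
    answer: str
    confidence: float  # assumed to come from the chatbot pipeline, in [0, 1]


def route(draft: Draft) -> str:
    """Return 'clinician' if a human must review the draft, else 'auto'."""
    text = draft.question.lower()
    if any(term in text for term in URGENT_TERMS):
        return "clinician"  # critical situations always get human review
    if draft.confidence < CONFIDENCE_THRESHOLD:
        return "clinician"  # uncertain answers are not released automatically
    return "auto"


print(route(Draft("I have chest pain when I climb stairs", "...", 0.99)))
print(route(Draft("What is a CBCT scan?", "...", 0.95)))
```

In this sketch the urgent-term check runs before the confidence check, so a confident answer to a critical question is still escalated.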

4.6. Medical Training and Regulation

Chatbots that provide medical advice may unintentionally bypass the regulatory frameworks established for traditional healthcare providers. It is crucial to ensure that chatbots are developed and used within established medical guidelines.

Importance: this section addresses the incorporation of AI within existing medical regulations, emphasising how the paper advances the conversation about regulatory adaptations for AI tools.

Benefits: this paper can help medical professionals understand the importance of regulatory compliance for AI, as well as encourage proactive engagement with technology.

4.7. Patient Empowerment

While chatbots can provide information, they should also encourage patients to consult qualified healthcare professionals for personalised advice. It is critical to strike a balance between empowerment and not substituting professional medical care.

Importance: it highlights the chatbot’s contribution to promoting well-informed patient decision making, underscoring the paper’s adherence to patient-centred care models.

Benefits: readers will appreciate how AI can provide information to patients while also understanding AI’s limitations in providing personalised medical advice.

5. Limitations and the Motivation

The limitations of these studies include a tendency to focus on theoretical potential rather than practical implementation, which leaves some real-world challenges unaddressed. Furthermore, significant concerns persist regarding the ethical and legal issues surrounding the roles, accuracy, and originality of AI. There exists a necessity for more comprehensive evaluations of content generated by AI and its impact on tasks performed by humans. Additionally, there is a potential risk of overreliance, which could result in a decrease in human critical thinking and involvement. Current studies frequently lack a thorough exploration of the broader implications, which necessitates adopting a more holistic approach to comprehending the complete scope and consequences of integrating AI, such as ChatGPT, into diverse fields.

The integration of ChatGPT in medicine and healthcare, though promising, does come with certain limitations. One key concern is the potential for inaccuracies in medical information provided by ChatGPT, as it lacks the ability to fully comprehend complex medical contexts. Serious medical errors could occur if patient queries are misinterpreted or incorrect diagnoses are generated. Furthermore, the reliance of ChatGPT on training data may introduce biases, which could potentially impact the quality and fairness of the provided information. Ethical challenges arise regarding patient data privacy and consent, as well as accountability for AI-generated medical content. The sharing of outdated or obsolete medical information could be a potential consequence of the lack of real-time updates and dynamic learning in ChatGPT. Lastly, there is a risk of overdependence on AI, which may diminish the role of healthcare professionals, thereby reducing critical thinking and human interaction in medical care.

The motivation for integrating ChatGPT with medicine and healthcare stems from the potential to improve patient care, medical research, and clinical decision-making processes. ChatGPT’s natural language processing capabilities provide a user-friendly interface for patients to seek medical information, resulting in increased patient engagement and empowerment. Its ability to analyse large amounts of medical literature assists healthcare professionals in staying up to date on the latest research and treatment options. ChatGPT can assist in the generation of accurate and concise medical documentation, thereby streamlining administrative tasks for healthcare providers. In addition, it holds promise in facilitating medical education, supporting remote consultations, and optimising clinical workflows. In general, the integration of ChatGPT with medicine and healthcare is in line with the objective of utilising AI technology to progress medical practices and improve patient outcomes.

6. Conclusions

As part of this investigation, a PRISMA-based systematic literature review was conducted. AI has the potential to transform many industries, including medicine and healthcare. Large language models, such as ChatGPT, have attracted attention for their ability to generate human-like text, revolutionising the healthcare landscape. This paper investigated ChatGPT’s applications in medicine, including pandemic management, surgical consultations such as cosmetic orthognathic surgery, dental practices, medical education, disease diagnosis, radiology and sonar imaging, pharmaceutical research, and treatment. The use of ChatGPT in disseminating critical information during pandemics and infectious diseases has showcased its ability to efficiently communicate essential updates and guidelines to the public. Furthermore, ChatGPT demonstrated its role as a virtual assistant in the context of cosmetic orthognathic surgery consultations. It provided standardised information and facilitated informed patient decisions. The integration of AI into dental practices has enhanced patient experiences by efficiently addressing inquiries and providing guidance.

The ability of ChatGPT to simplify complex medical concepts has enhanced medical education, leading to improved knowledge acquisition. It also displayed potential in disease diagnosis by offering differential diagnoses based on patient-reported symptoms and medical history. In cellular imaging, radiology, and sonar imaging, the descriptive capabilities of ChatGPT could help radiologists analyse medical images. Pharmaceutical research was another area that benefited: ChatGPT expedited research processes by sifting through scientific literature and suggesting research directions. Although ChatGPT offers immense potential, it also has limitations that stem from its training data, as well as ethical concerns related to its output. In conclusion, the integration of ChatGPT into medicine and healthcare holds vast transformative potential. It is crucial to responsibly utilise the capabilities of ChatGPT as technology advances, while also addressing its limitations and ethical considerations. The role of ChatGPT as a catalyst for innovation, advancement, and improved patient care within the medical landscape is underscored by this exploration.

Future work for ChatGPT in AI for healthcare includes the enhancement of diagnostic precision, the personalisation of care, the improvement of EHR integration, the assurance of regulatory compliance, the expansion of telemedicine capabilities, the advancement of medical education tools, and addressing ethical considerations in patient AI interactions.

Acknowledgments

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through a large group Research Project under grant number (RGP2/52/44).

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/diagnostics14010109/s1, Figure S1: PRISMA 2020 flow diagram for updated systematic reviews which included searches of databases and registers only; File S1: PRISMA 2020 checklist.

Author Contributions

Conceptualization, H.A.Y. (Hussain A. Younis), T.A.E.E. and I.M.H.; methodology, M.N., H.A.Y. (Hussain A. Younis) and T.M.S.; software, H.A.Y. (Hameed AbdulKareem Younis) and O.M.A.; writing—original draft, A.A.N., H.A.Y. (Hussain A. Younis), I.M.H. and H.A.Y. (Hameed AbdulKareem Younis); writing—review and editing, I.M.H., T.M.S., I.M.H., M.N., T.A.E.E. and S.S.; supervision, H.A.Y. (Hussain A. Younis) and T.A.E.E.; project administration, A.A.N., M.N., O.M.A. and T.M.S.; funding acquisition, S.S. and M.N. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

The Deanship of Scientific Research at King Khalid University funded this work through a large-group research project under grant number (RGP2/52/44).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Agathokleous E., Saitanis C.J., Fang C., Yu Z. Use of ChatGPT: What Does It Mean for Biology and Environmental Science? Sci. Total Environ. 2023;888:164154. doi: 10.1016/j.scitotenv.2023.164154. [DOI] [PubMed] [Google Scholar]

- 2.McGowan A., Gui Y., Dobbs M., Shuster S., Cotter M., Selloni A., Goodman M., Srivastava A., Cecchi G.A., Corcoran C.M. ChatGPT and Bard Exhibit Spontaneous Citation Fabrication during Psychiatry Literature Search. Psychiatry Res. 2023;326:115334. doi: 10.1016/j.psychres.2023.115334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Choudhary O.P., Priyanka. ChatGPT in Travel Medicine: A Friend or Foe? Travel Med. Infect. Dis. 2023;54:102615. doi: 10.1016/j.tmaid.2023.102615. [DOI] [PubMed] [Google Scholar]

- 4.Kocoń J., Cichecki I., Kaszyca O., Kochanek M., Szydło D., Baran J., Bielaniewicz J., Gruza M., Janz A., Kanclerz K., et al. ChatGPT: Jack of All Trades, Master of None. Inf. Fusion. 2023;99:101861. doi: 10.1016/j.inffus.2023.101861. [DOI] [Google Scholar]

- 5.Mueen Sahib T., Younis H.A., Mohammed A.O., Ali A.H., Salisu S., Noore A.A., Hayder I.M., Shahid M. ChatGPT in Waste Management: Is it a Profitable. Mesopotamian J. Big Data. 2023;2023:107–109. doi: 10.58496/MJBD/2023/014. [DOI] [Google Scholar]

- 6.Sallam M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare. 2023;11:887. doi: 10.3390/healthcare11060887. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Jeblick K., Schachtner B., Dexl J., Mittermeier A., Stüber A.T., Topalis J., Weber T., Wesp P., Sabel B., Ricke J., et al. ChatGPT Makes Medicine Easy to Swallow: An Exploratory Case Study on Simplified Radiology Reports. Eur. Radiol. 2022. ahead of print. [DOI] [PMC free article] [PubMed]

- 8.Fatani B. ChatGPT for Future Medical and Dental Research. Cureus. 2023;15:e37285. doi: 10.7759/cureus.37285. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Temsah O., Khan S.A., Chaiah Y., Senjab A., Alhasan K., Jamal A., Aljamaan F., Malki K.H., Halwani R., Al-Tawfiq J.A., et al. Overview of Early ChatGPT’s Presence in Medical Literature: Insights from a Hybrid Literature Review by ChatGPT and Human Experts. Cureus. 2023;15:e37281. doi: 10.7759/cureus.37281. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Xie Y., Seth I., Hunter-Smith D.J., Rozen W.M., Ross R., Lee M. Aesthetic Surgery Advice and Counseling from Artificial Intelligence: A Rhinoplasty Consultation with ChatGPT. Aesthetic Plast. Surg. 2023;47:1985–1993. doi: 10.1007/s00266-023-03338-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Surovkov J., Strunga M., Lifkov M., Thurzo A. The New Role of the Dental Assistant and Nurse in the Age of Advanced Artificial Intelligence in Telehealth Orthodontic Care with Dental Monitoring: Preliminary Report. Appl. Sci. 2023;13:5212. doi: 10.3390/app13085212. [DOI] [Google Scholar]

- 12.Mijwil M., Aljanabi M., Ali A.H. ChatGPT: Exploring the Role of Cybersecurity in the Protection of Medical Information. Mesopotamian J. Cyber Secur. 2023;2023:18–21. doi: 10.58496/MJCS/2023/004. [DOI] [Google Scholar]

- 13.Hosseini M., Gao C.A., Liebovitz D., Carvalho A., Ahmad F.S., Luo Y., MacDonald N., Holmes A.K. An Exploratory Survey about Using ChatGPT in Education, Healthcare, and Research. PLoS ONE. 2023;18:e0292216. doi: 10.1371/journal.pone.0292216. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Mohammed A.O., Salisu S.A., Younis H., Salman A.M., Sahib T.M., Akhtom D., Hayder I.M. ChatGPT Revisited: Using ChatGPT-4 for Finding References and Editing Language in Medical Scientific Articles. 2023. [(accessed on 18 November 2023)]. Available online: https://ssrn.com/abstract=4621581. [DOI] [PubMed]

- 15.Khairatun H.U., Miftahul A.M. ChatGPT and Medical Education: A Double-Edged Sword. J. Pedagog. Educ. Sci. 2023;2:71–89. doi: 10.56741/jpes.v2i01.302. [DOI] [Google Scholar]

- 16.Abouammoh N., Alhasan K.A., Raina R., Children A., Aljamaan F. Exploring Perceptions and Experiences of ChatGPT in Medical Education: A Qualitative Study Among Medical College Faculty and Students in Saudi Arabia. Cold Spring Harb. Lab. 2023. preprint. [DOI]

- 17.Gilson A., Safranek C.W., Huang T., Socrates V., Chi L., Taylor R.A., Chartash D. How Does ChatGPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med. Educ. 2023;9:e45312. doi: 10.2196/45312. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Busch F., Adams L.C., Bressem K.K. Biomedical Ethical Aspects Towards the Implementation of Artificial Intelligence in Medical Education. Med. Sci. Educ. 2023;33:1007–1012. doi: 10.1007/s40670-023-01815-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Friederichs H., Friederichs W.J., März M. ChatGPT in Medical School: How Successful Is AI in Progress Testing? Med. Educ. Online. 2023;28:2220920. doi: 10.1080/10872981.2023.2220920. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Grabb D. ChatGPT in Medical Education: A Paradigm Shift or a Dangerous Tool? Acad. Psychiatry. 2023;47:439–440. doi: 10.1007/s40596-023-01791-9. [DOI] [PubMed] [Google Scholar]

- 21.Sedaghat S. Early Applications of ChatGPT in Medical Practice, Education and Research. Clin. Med. 2023;23:278–279. doi: 10.7861/clinmed.2023-0078. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Giannos P. Evaluating the Limits of AI in Medical Specialisation: ChatGPT’s Performance on the UK Neurology Specialty Certificate Examination. BMJ Neurol. Open. 2023;5:e000451. doi: 10.1136/bmjno-2023-000451. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Guo A.A., Li J. Harnessing the Power of ChatGPT in Medical Education. Med. Teach. 2023;45:1063. doi: 10.1080/0142159X.2023.2198094. [DOI] [PubMed] [Google Scholar]

- 24.Huh S. Can We Trust AI Chatbots’ Answers about Disease Diagnosis and Patient Care? J. Korean Med. Assoc. 2023;66:218–222. doi: 10.5124/jkma.2023.66.4.218. [DOI] [Google Scholar]

- 25.Currie G., Singh C., Nelson T., Nabasenja C., Al-Hayek Y., Spuur K. ChatGPT in Medical Imaging Higher Education. Radiography. 2023;29:792–799. doi: 10.1016/j.radi.2023.05.011. [DOI] [PubMed] [Google Scholar]

- 26.Dahmen J., Kayaalp M.E., Ollivier M., Pareek A., Hirschmann M.T., Karlsson J., Winkler P.W. Artificial Intelligence Bot ChatGPT in Medical Research: The Potential Game Changer as a Double-Edged Sword. Knee Surg. Sports Traumatol. Arthrosc. 2023;31:1187–1189. doi: 10.1007/s00167-023-07355-6. [DOI] [PubMed] [Google Scholar]

- 27.Mohammed O., Thaeer M.S., Israa M.H., Sani S., Misbah S. ChatGPT Evaluation: Can It Replace Grammarly and Quillbot Tools? Br. J. Appl. Linguistics. 2023;3:34–46. doi: 10.32996/bjal.2023.3.2.4. [DOI] [Google Scholar]

- 28.Yang J., Li H.B., Wei D. The Impact of ChatGPT and LLMs on Medical Imaging Stakeholders: Perspectives and Use Cases. arXiv. 2023. arXiv:2306.06767. doi: 10.1016/j.metrad.2023.100007. [DOI] [Google Scholar]

- 29.Zhu Z., Ying Y., Zhu J., Wu H. ChatGPT’s Potential Role in Non-English-Speaking Outpatient Clinic Settings. Digit. Health. 2023;9:1–3. doi: 10.1177/20552076231184091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Verhoeven F., Wendling D., Prati C. ChatGPT: When Artificial Intelligence Replaces the Rheumatologist in Medical Writing. Ann. Rheum. Dis. 2023;82:1015–1017. doi: 10.1136/ard-2023-223936. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Corsello A., Santangelo A. May Artificial Intelligence Influence Future Pediatric Research?—The Case of ChatGPT. Children. 2023;10:757. doi: 10.3390/children10040757. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Pozzessere C. Optimizing Communication of Radiation Exposure in Medical Imaging, the Radiologist Challenge. Tomography. 2023;9:717–720. doi: 10.3390/tomography9020057. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Ning G., Liang H., Jiang Z., Zhang H., Liao H. The Potential of “Segment Anything” (SAM) for Universal Intelligent Ultrasound Image Guidance. Biosci. Trends. 2023;17:230–233. doi: 10.5582/bst.2023.01119. [DOI] [PubMed] [Google Scholar]

- 34.Strunga M., Thurzo A., Surovkov J., Lifkov M., Tom J. AI-Assisted CBCT Data Management in Modern Dental Practice: Benefits, Limitations and Innovations. Electronics. 2023;12:1710. [Google Scholar]

- 35.Pratim P., Poulami R. AI Tackles Pandemics: ChatGPT’s Game–Changing Impact on Infectious Disease Control. Ann. Biomed. Eng. 2023;51:2097–2099. doi: 10.1007/s10439-023-03239-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Temsah M., Aljamaan F., Malki K.H., Alhasan K. ChatGPT and the Future of Digital Health: A Study on Healthcare Workers’ Perceptions and Expectations. Healthcare. 2023;11:1812. doi: 10.3390/healthcare11131812. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Lukac S., Dayan D., Fink V., Leinert E., Hartkopf A., Veselinovic K., Janni W., Rack B., Pfister K., Heitmeir B., et al. Evaluating ChatGPT as an Adjunct for the Multidisciplinary Tumor Board Decision–Making in Primary Breast Cancer Cases. Arch. Gynecol. Obstet. 2023;308:1831–1844. doi: 10.1007/s00404-023-07130-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Kavian J.A., Wilkey H.L., Parth A., Boyd C.J. Harvesting the Power of Artificial Intelligence for Surgery: Uses, Implications, and Ethical Considerations. Am. Surg. 2023:2–4. doi: 10.1177/00031348231175454. [DOI] [PubMed] [Google Scholar]

- 39.Dave T., Athaluri S.A., Singh S. ChatGPT in Medicine: An Overview of Its Applications, Advantages, Limitations, Future Prospects, and Ethical Considerations. Front. Artif. Intell. 2023;6:1169595. doi: 10.3389/frai.2023.1169595. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Ruksakulpiwat S., Kumar A., Ajibade A. Using ChatGPT in Medical Research: Current Status and Future Directions. J. Multidiscip. Healthc. 2023;16:1513–1520. doi: 10.2147/JMDH.S413470. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Tustumi F., Andreollo N.A., Aguilar-Nascimento J.E. Future of the Language Models in Healthcare: The Role of ChatGPT. ABCD. Arq. Bras. Cir. Dig. 2023;34:e1727. doi: 10.1590/0102-672020230002e1727. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Kaarre J., Feldt R., Keeling L.E., Dadoo S., Zsidai B., Hughes J.D., Samuelsson K., Musahl V. Exploring the Potential of ChatGPT as a Supplementary Tool for Providing Orthopaedic Information. Knee Surg. Sports Traumatol. Arthrosc. 2023;31:5190–5198. doi: 10.1007/s00167-023-07529-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Ollivier M., Pareek A., Dahmen J., Kayaalp M.E., Winkler P.W., Hirschmann M.T., Karlsson J. A Deeper Dive into ChatGPT: History, Use and Future Perspectives for Orthopaedic Research. Knee Surg. Sports Traumatol. Arthrosc. 2023;31:1190–1192. doi: 10.1007/s00167-023-07372-5. [DOI] [PubMed] [Google Scholar]

- 44.Sallam M., Salim N.A., Barakat M., Al-Tammemi A.B. ChatGPT Applications in Medical, Dental, Pharmacy, and Public Health Education: A Descriptive Study Highlighting the Advantages and Limitations. Narra J. 2023;3:e103. doi: 10.52225/narra.v3i1.103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Liberati A., Altman D.G., Tetzlaff J., Mulrow C., Gøtzsche P.C., Ioannidis J.P.A., Clarke M., Devereaux P.J., Kleijnen J., Moher D. The PRISMA Statement for Reporting Systematic Reviews and Meta-Analyses of Studies That Evaluate Health Care Interventions: Explanation and Elaboration. PLoS Med. 2009;6:e1000100. doi: 10.1371/journal.pmed.1000100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Page M.J., McKenzie J.E., Bossuyt P.M., Boutron I., Hoffmann T.C., Mulrow C.D., Shamseer L., Tetzlaff J.M., Akl E.A., Brennan S.E., et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ. 2021;372:n71. doi: 10.1136/bmj.n71. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Younis H.A., Ruhaiyem N.I.R., Badr A.A., Abdul-Hassan A.K., Alfadli I.M., Binjumah W.M., Altuwaijri E.A., Nasser M. Multimodal Age and Gender Estimation for Adaptive Human-Robot Interaction: A Systematic Literature Review. Processes. 2023;11:1488. doi: 10.3390/pr11051488. [DOI] [Google Scholar]

- 48.Salisu S., Ruhaiyem N.I.R., Eisa T.A.E., Nasser M., Saeed F., Younis H.A. Motion Capture Technologies for Ergonomics: A Systematic Literature Review. Diagnostics. 2023;13:2593. doi: 10.3390/diagnostics13152593. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Younis H.A., Ruhaiyem N.I.R., Ghaban W., Gazem N.A., Nasser M. A Systematic Literature Review on the Applications of Robots and Natural Language Processing in Education. Electronics. 2023;12:2864. doi: 10.3390/electronics12132864. [DOI] [Google Scholar]

- 50.Götz S. Supporting Systematic Literature Reviews in Computer Science: The Systematic Literature Review Toolkit; Proceedings of the 21st ACM/IEEE International Conference on Model Driven Engineering Languages and Systems, MODELS 2018; Copenhagen, Denmark. 14–19 October 2018; pp. 22–26. [DOI] [Google Scholar]

- 51.Muftić F., Kadunić M., Mušinbegović A., Almisreb A.A. Exploring Medical Breakthroughs: A Systematic Review of ChatGPT Applications in Healthcare. Southeast Eur. J. Soft Comput. 2023;12:13–41. [Google Scholar]