Abstract

This review provides an overview of the application of artificial intelligence (AI) in radiation therapy (RT) from a radiation oncologist’s perspective. Over the years, advances in diagnostic imaging have significantly improved the efficiency and effectiveness of radiotherapy. The introduction of AI has further optimized the segmentation of tumors and organs at risk, thereby saving considerable time for radiation oncologists. AI has also been utilized in treatment planning and optimization, reducing the planning time from several days to minutes or even seconds. Knowledge-based treatment planning and deep learning techniques have been employed to produce treatment plans comparable to those generated by humans. Additionally, AI has potential applications in quality control and assurance of treatment plans, optimization of image-guided RT and monitoring of mobile tumors during treatment. Prognostic evaluation and prediction using AI have been increasingly explored, with radiomics being a prominent area of research. The future of AI in radiation oncology offers the potential to establish treatment standardization by minimizing inter-observer differences in segmentation and improving dose adequacy evaluation. RT standardization through AI may have global implications, providing world-standard treatment even in resource-limited settings. However, there are challenges in accumulating big data, including patient background information and correlating treatment plans with disease outcomes. Although challenges remain, ongoing research and the integration of AI technology hold promise for further advancements in radiation oncology.

Keywords: radiotherapy, artificial intelligence, auto-segmentation, auto-planning

INTRODUCTION

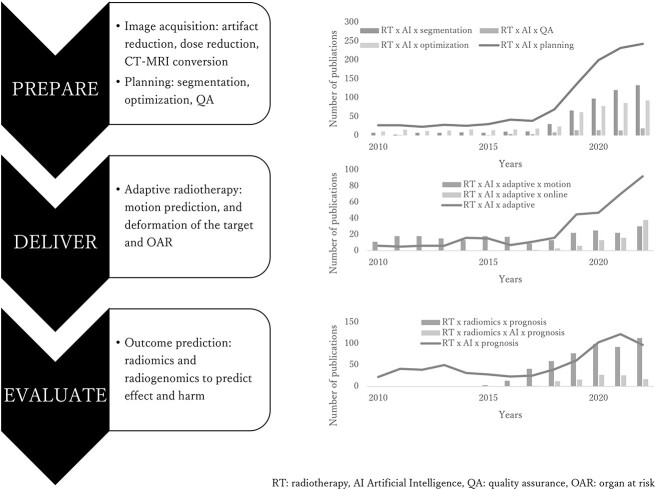

Radiotherapy has a history of improvement along with the advances in diagnostic imaging. With the advent of computed tomography (CT), the ability to depict tumors not as shadows but as 3D structures has advanced radiotherapy from 2D to 3D [1]. Furthermore, the diagnosis of tumor spread and boundaries was made by contrasting preoperative images with surgical pathology [2–5]. As the boundary between tumors and normal organs has become clearer and with improved computational power, intensity-modulated radiotherapy (IMRT) technology has enabled the reduction of the radiation dose to normal organs, while delivering a high dose to the entire tumor, even for more complex tumor shapes. The improved spatial positioning accuracy of images and the capability to capture tumor motion during treatment have made it possible to further lower the dose to normal organs while administering a very high dose to the tumor, thus enabling stereotactic radiotherapy, even at metastatic sites if feasible [6–10]. However, as the treatment plan becomes more precise, the standardization of contouring becomes more critical. Although contouring atlases have been created in various countries to standardize contouring [11–19], treatment plans are subject to the preferences and styles of planners [20]. Therefore, there are several problems with standardizing the segmentation and treatment plans. Several attempts have been made to reduce the time required for treatment planning while promoting standardization by incorporating artificial intelligence (AI)-based automation [21–24]. Over the past 5 years, numerous studies on AI-adapted radiation therapy (RT) have been published. RT consists of three crucial steps: preparation, delivery and evaluation. If we want to apply AI in these three steps, they all start with ‘segmentation’. After appropriate segmentation, we can proceed further with planning, optimization and online adaptive radiotherapy and then evaluate and predict the outcome (Fig. 1).

Fig. 1. Number of publications on AI in RT since 2010.

This review summarizes the use of AI in RT, focusing on the clinician’s perspective rather than on the technical aspects of AI development. First, we summarize how auto-segmentation has progressed, followed by the current trends in the use of AI for planning, optimization and prognostic evaluation and prediction, and our expectations that AI will benefit both patients and medical staff.

For the literature review, we searched the PubMed database through 30 June 2023 for studies related to radiotherapy evaluation using AI. As a basic policy, we extracted reports from the most recent 5 years. For each section, ‘radiotherapy’ and ‘artificial intelligence’ were used as keywords, with ‘segmentation’, ‘quality assurance’, ‘optimization’, ‘planning’, ‘adaptation’ and ‘radiomics and/or prognosis’ added. The citations and references of the retrieved studies were used as additional sources of information for this narrative review and were manually searched.

SEGMENTATION AND DEFORMABLE MEDICAL IMAGE REGISTRATION

Segmentation of the target and organs at risk (OARs) is one of the most important steps in the preparation, delivery and evaluation of radiotherapy, and also one of the most time-consuming tasks in radiotherapy planning. Since the advent of CT and its use in treatment planning, radiation oncologists have spent a great deal of time contouring targets and OARs. Early auto-segmentation relied on intensity thresholds; however, this method was inadequate because it could automatically segment only the lungs, intracranial region and spinal canal. Subsequently, atlas-based segmentation methods were introduced [25–27], in which a single atlas or multiple atlases are used and segmentation is performed by mapping an installed atlas onto the target image using the deformable medical image registration (DIR) technique.
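The limitation of threshold-based segmentation can be illustrated with a minimal sketch. The function name `threshold_mask`, the toy CT values and the lung window of -950 to -400 Hounsfield units (HU) are assumptions chosen for illustration only; they are not taken from the cited studies.

```python
def threshold_mask(ct_slice, lower, upper):
    """Return a binary mask: 1 where lower <= HU <= upper, otherwise 0."""
    return [[1 if lower <= hu <= upper else 0 for hu in row] for row in ct_slice]


# Toy 3x4 "CT slice" in Hounsfield units (illustrative values, not patient data).
toy_slice = [
    [-1000, -800, -750, 40],
    [-820, -700, 30, 60],
    [50, 45, 55, 300],
]

# Assumed lung window; real windows depend on the scanner and protocol.
LUNG_WINDOW = (-950, -400)

lung_mask = threshold_mask(toy_slice, *LUNG_WINDOW)
for row in lung_mask:
    print(row)
```

In this toy example the aerated lung voxels are separated cleanly, whereas the soft-tissue voxels (30 to 60 HU) share nearly the same values, so a threshold alone cannot tell adjacent soft-tissue organs apart.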

Segmentation of OAR

DIR has been widely used and validated in commercial and open-source applications, and various algorithms are currently in use. The most common DIR method is intensity-based DIR, which enables the segmentation of organs with similar intensities, such as the liver and kidneys. One approach to improving the accuracy of multi-atlas-based auto-segmentation is to enlarge the atlas library so that the system can select atlases that closely resemble the target image. Schipaanboord et al. reported that auto-contouring performance of a level corresponding to clinical quality could be consistently expected with a database of 5000 atlases, assuming perfect atlas selection [28].
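The idea of atlas selection can be sketched as follows. This is a simplified illustration, not the method of Schipaanboord et al.: the similarity measure (mean squared intensity difference), the function names and the toy images are all assumptions made for the example.

```python
def mean_squared_difference(image_a, image_b):
    """Mean squared intensity difference between two equally sized flat images."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of voxels")
    return sum((a - b) ** 2 for a, b in zip(image_a, image_b)) / len(image_a)


def select_atlases(target, atlases, k):
    """Return the names of the k atlases most similar to the target image."""
    ranked = sorted(
        atlases,
        key=lambda name: mean_squared_difference(target, atlases[name]),
    )
    return ranked[:k]


# Toy flattened images (hypothetical intensities).
target_image = [10, 20, 30, 40]
atlas_library = {
    "atlas_A": [12, 19, 33, 41],
    "atlas_B": [50, 60, 70, 80],
    "atlas_C": [10, 22, 29, 38],
}

selected = select_atlases(target_image, atlas_library, k=2)
print(selected)
```

A larger library raises the chance that at least a few atlases resemble the target closely, which is why performance is expected to improve with database size when the selection step works well.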

The number of patients newly treated with radiotherapy in Japan in 2019 was reported to be 237 000, and 3D conformal RT using CT, or an even more precise treatment, is currently being implemented [29]. If contour data from all patients in Japan could be centrally collected, it would be possible to create a highly accurate contour atlas; however, this method may be more time-consuming.

DIR is most effective when used to adapt plans for the same patient during the treatment course. Ideally, DIR in the same patient should match perfectly, but this is not always possible due to organ motion and deformation. In particular, organs such as the bladder and intestines deform considerably, both within and between treatment fractions. This deformation necessitates adaptation during treatment, but it also makes automatic contouring challenging. Prostate cancer, a common cancer that has been the focus of extensive research in recent years, illustrates this well [30–35]. During prostate cancer radiotherapy, it is difficult for therapists to control the rectum, which is located on the dorsal side of the prostate. Reproducing the bladder volume and rectal condition present at the time of treatment planning remains a persistent challenge for those involved in radiotherapy. However, with the advent of adaptive radiotherapy, it may be possible to auto-contour the rectum, which changes daily, and to instantaneously change the irradiation plan, rather than adjusting the bowel and bladder to match the planning CT [36–39]. Takayama et al. compared the accuracy of DIR for the prostate, rectum, bladder and seminal vesicles between intensity-based and hybrid-based DIR using Dice and a shift of emphasis. They reported Dice similarity coefficients (DSCs) of 0.84 ± 0.05 for the prostate and 0.75 ± 0.05 for the rectum, relatively high agreement rates, even with intensity-based DIR [30]. The same report showed that using the hybrid-based method with an anatomically constrained deformation algorithm resulted in a DSC of 0.9 or higher for all organs. However, the hybrid-based method requires contour creation for both DIR images, making it unsuitable for creating deformation plans during irradiation, although it is very useful for the dosimetry assessment of plans irradiated at different body positions.

In recent years, there have been several reports on segmentation using deep learning (DL) methods. Widely applied methods include encoder–decoder-type convolutional neural networks (CNNs) such as 2D U-Net and 3D U-Net [40–42]. Xiao et al. described the usefulness of new 2D and 3D automatic segmentation models based on RefineNet for the clinical target volume (CTV) and OARs in postoperative cervical cancer based on CT. Their RefineNetPlus3D model demonstrated good performance, with DSCs of 0.97, 0.95, 0.91, 0.98 and 0.98 for the bladder, small intestine, rectum, and right and left femoral heads, respectively. Furthermore, the average manual CTV and OAR contouring time for one patient with cervical cancer was 90–120 min, whereas the mean computation time of RefineNetPlus3D for these OARs was 6.6 s [43, 44]. These results show great potential for the development of adaptive radiotherapy. However, the major limitation of this report is that the patients underwent postoperative irradiation for cervical cancer: that is, there was no gross tumor volume (GTV), and the bowel bag, the space potentially occupied by the small and/or large bowel at any time during the treatment or at the time of imaging [45], was contoured rather than the intestine itself. They described the difficulty in achieving a good DSC for the rectum owing to its small volume and unclear outline. Because the gastrointestinal tract is constantly moving, the ultimate goal is to transform the dose distribution according to movement while monitoring during irradiation; however, there are still issues to be solved. A recent report by Liao et al. also demonstrated the successful segmentation of 16 OARs in the abdomen using the DL technique (3D U-Net was used as the baseline model). They reported perfect contouring of the liver, kidneys and spleen. The most notable strength of their algorithm was its robustness: their results were acquired from heterogeneous CT scans and patients, whereas most previous studies have used more homogeneous data. However, they failed to achieve satisfactory results in the duodenum (DSC < 0.7) [46]. Although auto-segmentation of OARs still has room for improvement, it can be inferred with a reasonable degree of confidence that OAR segmentation is nearing completion.
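Because the DSC is the main metric quoted in these studies, a minimal sketch of how it is computed may be helpful. The DSC between two binary masks A and B is 2|A∩B| / (|A| + |B|). The function name and the toy masks below are illustrative assumptions; returning 1.0 when both masks are empty is a convention adopted only for this sketch.

```python
def dice_similarity(mask_a, mask_b):
    """Dice similarity coefficient between two flat binary masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement here
    return 2.0 * intersection / total


# Toy example: a manual contour and an automatic contour shifted by one voxel.
manual_contour = [0, 1, 1, 1, 1, 0, 0, 0]
auto_contour = [0, 0, 1, 1, 1, 1, 0, 0]

dsc = dice_similarity(manual_contour, auto_contour)
print(dsc)
```

The same one-voxel shift costs a small structure proportionally more overlap than a large one, which is consistent with the lower DSCs reported for small or poorly defined organs such as the rectum and duodenum.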

Auto-segmentation of targets is also promising; however, more research is needed to determine its full potential. As described previously, postoperative adjuvant radiotherapy is typically applied to a CTV that is defined with reference to bone structures and other landmarks. A typical example is postoperative radiotherapy for cervical or head-and-neck cancers [47–49]. The advent of automated segmentation and planning techniques for these domains is imminent, and their successful implementation is anticipated in the near future given the trajectory of advancements.

Segmentation of the tumor

The segmentation of GTVs themselves has also been studied, and many reports have addressed the auto-segmentation of GTVs [50–55]. Although most of these were small internal cohort studies, one interesting observational study performed external validation [56]. The participants were patients with lung cancer, who typically have relatively clear primary tumor boundaries. In this study, 3D U-Net models were employed to segment the lungs, primary tumors and involved lymph nodes. The architecture and model hyperparameters were fine-tuned using nnU-Net, a deep-learning-based segmentation method that introduces no new network architecture, loss function or training scheme (hence its name, ‘no new net’) but instead automatically configures the preprocessing, network architecture, training and postprocessing for any new task [57, 58]. An expert radiation oncologist delineated the target to create discovery data, and validation was performed using external sources. Volumetric Dice and surface Dice were used for the assessment. Although the models demonstrated enhanced performance compared to the inter-observer benchmark and achieved results within the intra-observer benchmark during internal data validation (performed by the same expert), their performance did not surpass the benchmark when evaluated using external data (segmented by different experts). This outcome may indicate the presence of variations in segmentation styles and preferences among experts, as substantial variability exists in the manual delineation of tumors [20]. However, AI assistance led to a 65% reduction in segmentation time (5.4 min) and a 32% reduction in inter-observer variability. Therefore, it may be very useful in helping residents create segmentations that are satisfactory to senior radiation oncologists at the facility where the residents work or at a satellite hospital where experts are not always present.

RADIOTHERAPY PLANNING

AI has long been widely used in RT planning. Second-generation AI, in which knowledge is taught to the system in the form of rules so that it responds in a predetermined way (a so-called expert system), has allowed the widespread use of IMRT. Treatment planning for IMRT involves inverse planning, in which dose distribution (or fluence map) optimization calculations are performed to determine the behavior of the multi-leaf collimator and to calculate the final dose distribution [59]. However, this optimization process requires repeated trial and error by the treatment planner and a treatment planning time of several hours. Another drawback is that the quality of treatment planning is influenced by the planner’s skill level [60]. Knowledge-based treatment planning, a machine learning approach, has been implemented in commercial treatment planning systems (TPSs) since 2014 and is widely used today [61]. It registers past treatment planning data with the TPS and uses them to create a treatment plan semiautomatically. This technology has significantly reduced the planning time from days to hours. DL subsequently emerged as a promising new approach to treatment planning. In the past 5 years, there have been 257 reports, including 26 reviews, on DL optimization. DL methods learn from the contours and dose distributions associated with treatment-planning CT images and automatically generate dose distributions when new contours are input [62, 63]. DL methods have been reported to generate treatment plans comparable to knowledge-based treatment planning [64]. Therefore, DL has the potential to fully automate planning from segmentation to optimization in hours, minutes or even seconds.

Quality control and quality assurance

The next step in planning is the quality control (QC) and quality assurance (QA) of the treatment plan. Once IMRT treatment planning is complete, dose verification is required. Currently, this is performed by actual measurements using dosimeters, films or multidimensional detectors, which can take staff a few hours [65]. Recently, research has been conducted to predict gamma analysis results for IMRT QA using machine learning trained on gamma analysis results measured with a 2D detector [66]. This shortens the QA time, and the results suggest that QA using DL is a promising direction for clinical radiotherapy.
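For readers less familiar with gamma analysis, the quantity that such models try to predict can be sketched in one dimension. For each reference point, the gamma value is the minimum over all evaluated points of sqrt((distance / DTA)^2 + (dose difference / dose tolerance)^2), and a point passes when gamma is at most 1. The 3%/3 mm criteria, global normalization to the maximum reference dose, the function name and the toy profiles are assumptions of this sketch; clinical software also applies low-dose thresholds and interpolation, which are omitted here.

```python
import math


def gamma_pass_rate(reference, evaluated, spacing_mm,
                    dose_tol_fraction=0.03, dta_mm=3.0):
    """Percentage of reference points with gamma <= 1 (1D, global normalization)."""
    dose_tol = dose_tol_fraction * max(reference)  # assumes a nonzero maximum dose
    passed = 0
    for i, d_ref in enumerate(reference):
        # Search every evaluated point for the smallest combined deviation.
        gamma_sq = min(
            ((j - i) * spacing_mm / dta_mm) ** 2
            + ((d_eval - d_ref) / dose_tol) ** 2
            for j, d_eval in enumerate(evaluated)
        )
        if math.sqrt(gamma_sq) <= 1.0:
            passed += 1
    return 100.0 * passed / len(reference)


# Toy dose profiles in arbitrary units on a 1 mm grid (not measured data).
planned = [100.0, 100.0, 100.0, 100.0, 100.0]
measured_same = [100.0, 100.0, 100.0, 100.0, 100.0]
measured_high = [110.0, 110.0, 110.0, 110.0, 110.0]

print(gamma_pass_rate(planned, measured_same, spacing_mm=1.0))
print(gamma_pass_rate(planned, measured_high, spacing_mm=1.0))
```

A measurement identical to the plan passes at every point, whereas a uniform 10% overdose exceeds the 3% tolerance everywhere and no nearby point can compensate for it.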

DELIVERING THE PLAN

Image-guided radiation therapy

AI has also been studied in the irradiation process in radiotherapy treatment rooms. Before irradiation with the treatment beam, the patient’s position is verified using an image-guided radiation therapy (IGRT) system. Cone-beam computed tomography (CBCT) images used for IGRT have a lower soft tissue contrast and a higher noise ratio than CT images, which affects the accuracy of image registration [67]. Recent studies have been conducted to improve CBCT image quality using U-Net and CycleGAN [68, 69]. Generating CT-like images from CBCT by learning the conversion between treatment-planning CT images and CBCT images has been proposed. In addition, dynamic tumor-tracking irradiation is sometimes used for moving tumors, such as lung or liver cancer. To date, irradiation has been performed while observing a gold marker placed near the target, or using a correlation model created from the movement of an external marker placed on the abdominal wall [70–73]. However, these methods have some limitations, such as being invasive and not always correlating the external marker with the tumor position [74]. Consequently, research is underway to achieve real-time image tracking using X-ray projection images without markers, and to improve the accuracy of tumor position prediction using AI to compensate for the time delay of the device [75, 76].

Adaptive radiotherapy

Finally, a technology called online adaptive radiotherapy, which completes image acquisition, treatment planning (modification) and irradiation of the treatment beam while the patient lies on the treatment bed, is currently in practical use. There are two major methods for implementing adaptive radiotherapy: using magnetic resonance imaging (MRI)-equipped systems and using CT (or CBCT)-equipped systems [77–79]. The former method was initially put into practical use through the MR-Linac, which can accurately obtain MRI images of tumors and soft tissues prior to treatment. However, CT value data are essential for dose calculation, and technology to generate virtual CT images from MRI scans using AI has been developed and implemented in clinical settings [80]. Although the conversion is limited to certain areas and the accuracy is not perfect, this technology has significant future potential. In the latter method, CBCT is used for adaptation; however, dose calculation cannot be performed directly on the CBCT images acquired on the treatment table either, because CBCT has a limited field of view, inaccurate CT values and more image artifacts. At present, the system generates a virtual CT image by deforming the pretreatment planning CT to the CBCT using mutual information, and AI supports the segmentation and adaptive planning workflow to shorten the time required. Over the past 5 years, 257 papers have been published in this area, including 15 reviews, showing high expectations for the future development of this technology (Fig. 1).
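Mutual information is suited to CT-to-CBCT registration because it rewards a consistent statistical relationship between intensities rather than identical values. The following minimal sketch computes it from a joint histogram of two discretized images; the function name and the toy intensities are assumptions for illustration, and a real registration would repeat this calculation while optimizing the deformation.

```python
import math
from collections import Counter


def mutual_information(image_a, image_b):
    """Mutual information (in bits) between two equally sized, discretized images."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of voxels")
    n = len(image_a)
    joint = Counter(zip(image_a, image_b))  # joint intensity histogram
    marginal_a = Counter(image_a)
    marginal_b = Counter(image_b)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        # p_ab / (p_a * p_b) simplifies to count * n / (count_a * count_b).
        mi += p_ab * math.log2(count * n / (marginal_a[a] * marginal_b[b]))
    return mi


# Toy images: the "CBCT" uses a different intensity scale from the planning CT.
planning_ct = [0, 0, 1, 1]
cbct_aligned = [5, 5, 9, 9]      # same structure, different values
cbct_misaligned = [5, 9, 5, 9]   # structure no longer corresponds

print(mutual_information(planning_ct, cbct_aligned))
print(mutual_information(planning_ct, cbct_misaligned))
```

The aligned pair yields a high value even though no intensities are equal, while the misaligned pair yields zero, which is the behavior a registration algorithm exploits when CBCT values are inaccurate.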

Predicting prognosis with AI

Reports on radiomics and prognostication have increased considerably in recent years, and many reports have been published in the field of radiotherapy. Although numerous studies have addressed lung cancer and head and neck cancer, in comparison to overall survival (OS), rectal cancer has been the most widely reported [81–86]. This trend may be due to the National Comprehensive Cancer Network guidelines that recommend concurrent chemoradiotherapy followed by surgical resection for locally advanced rectal cancer after a systematic review by the Colorectal Cancer Collaborative Group revealed that preoperative radiotherapy reduces the risk of local recurrence and death from rectal cancer, especially in young, high-risk patients [87, 88]. Due to this approach, patients with locally advanced rectal cancer usually have pretreatment and posttreatment MRI scans and pathological results. The overall trend is to examine whether pathologic complete response at surgery can be predicted using pre- or post-CRT images [42, 89, 90].

Numerous studies have constructed prediction models using textural features in retrospective single-institution analyses, utilizing T2W images or DWI/ADC maps from MRI and employing training and validation sets. Although some highly promising outcomes have been reported, the regions of interest are typically contoured manually, and the single-center design with no external validation limits their generalizability; it is therefore not yet possible to advocate a standardized radiomics efficacy assessment with the data currently available [91–93]. However, manual segmentation can be replaced by auto-segmentation. A recent report by Li et al. constructed an automatic pipeline from tumor segmentation to outcome prediction using pretreatment MRI. U-Net with a codec structure was used for segmentation, and a three-layer CNN was used to build the prediction models; the pipeline achieved a DSC segmentation accuracy of 0.79, complete clinical response (cCR) prediction accuracy of 0.789, specificity of 0.725 and sensitivity of 0.812 [94]. With the recent introduction of total neoadjuvant therapy for rectal cancer and the option of a watch-and-wait approach instead of immediate surgery in cCR cases, prognostication in this area is expected to become even more important in the future. Conversely, although there are many reports on OS prediction models and a subset of these yield promising results, it is difficult to predict OS from image data alone, as it is significantly affected by treatment methods and patient-specific factors. Therefore, big data processing, which includes data other than images, is necessary for prediction.
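The accuracy, sensitivity and specificity quoted for such prediction models all derive from the same confusion matrix, as the following minimal sketch shows. The labels are hypothetical (1 = responder, 0 = non-responder) and do not reproduce the data of any cited study; the function assumes that both classes are present.

```python
def classification_metrics(true_labels, predicted_labels):
    """Accuracy, sensitivity and specificity for binary labels (1 = positive)."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # responders correctly identified
        "specificity": tn / (tn + fp),  # non-responders correctly identified
    }


# Hypothetical cohort of ten patients.
observed = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

metrics = classification_metrics(observed, predicted)
print(metrics)
```

Reporting all three values matters when a treatment decision such as watch and wait depends on the prediction, because a model can reach high accuracy on an imbalanced cohort while still missing many responders or non-responders.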

Future perspectives of AI in radiation oncology: What will AI bring and what is required?

The ability to significantly shorten the time from segmentation to planning using AI is a major advantage, but efforts to address inter-observer differences in tumor segmentation are still needed, which persists as a challenge at the current stage. The ability to use AI for OAR segmentation is a great advantage in daily practice; however, it can also play a major role in standardizing treatment when conducting large-scale clinical trials. In a study using data from the RTOG0617 trial, which aimed to assess the impact of radiation dose escalation on OS in patients with inoperable non-small cell lung cancer, Thor et al. compared cardiac segmentation in patients enrolled in a study with auto-segmentation using a DL algorithm and found that cardiac doses calculated by auto-segmentation tended to be higher and correlated more strongly with OS than those obtained in clinical trials [95]. Radiotherapy planning attempts to standardize the dose to the tumor while imposing dose constraints on the OAR, but differences in segmentation at the initial stage can affect the evaluation of the treatment. To conduct appropriate clinical trials, a considerable amount of time is spent centrally evaluating treatment plans. If auto-segmentation of OARs with dose constraints can be achieved, not only will data collection be simplified, but it may also allow for the correct evaluation of treatment efficacy. Finally, this approach could facilitate an evaluation of the true dose adequacy and provide a foundation for considering the appropriate prescribed dose corresponding to the heterogeneity within the tumor.

The use of MRI for treatment planning not only avoids unnecessary radiation exposure but also allows for precise contouring of the rectum and uterus, which are difficult to isolate with CT. However, MRI images must be converted to electron density for dose calculation, and no simple conversion table can be created between electron density and MRI signal intensity. CT-MRI conversion using AI is being promoted to overcome this challenge [96, 97]. Currently, many challenges need to be overcome, such as the fact that bone density varies among individuals. However, as research on MRI-to-CT conversion progresses, it may become possible to clearly delineate the boundaries of soft tissues such as the rectum from subtle differences in density on CT, as is possible with MRI. This may enable the same level of segmentation as with MRI, even in countries with limited medical resources that do not have MRI and must use only CT for treatment planning. The successful incorporation of AI into radiotherapy has the potential to standardize cancer treatment worldwide [98].

Another promising area is the development of large language models (LLMs) and their applications in RT. Language understanding has been a central research topic in the field of AI for many years, and approaches have taken many forms, from early rule-based systems to modern highly sophisticated models. LLMs learn patterns from vast amounts of textual data to understand and generate natural language. Significant progress has been made in the development of LLMs, primarily in the past few years. One example is the Generative Pre-trained Transformer (GPT) series developed by OpenAI. Since the introduction of the first GPT, its successors have been rapidly scaled up, from GPT-3 to GPT-4 [99, 100]. This rapid progress has given the models highly sophisticated natural language understanding and generation capabilities, resulting in diverse applications such as question-and-answer systems, document creation, code generation and even the generation of poetry and creative writing.

In the medical field, the potential of LLMs has been widely recognized, and their range of applications has expanded. These applications include diagnostic support, medical document generation and organization, research support, drug selection, telemedicine support, image analysis support, medical education, preventive medicine, lifestyle improvement and clinical trial design and analysis [101, 102]. These applications are made possible by combining the ability to find patterns in large amounts of data with the ability of the LLM to generate natural language. However, challenges remain in the medical applications of LLMs, such as data privacy, model interpretability, risk of misdiagnosis and misinformation and consistency. Overcoming these ‘AI hallucinations’ and other challenges requires not only technological advances but also the establishment of appropriate regulations and guidelines.

In radiotherapy, further development of LLMs is expected to make a significant contribution to the area of prognosis prediction, where we must consider how to accumulate big data by integrating data other than images, such as concomitant medications and other patient background information. Furthermore, they may not only predict adverse events and effects but may also be able to conduct a preliminary consultation. LLMs have the potential to encompass the dissemination of information to patients, elucidation of terminology and addressing commonly posed patient inquiries [103–105].

CONCLUSION

Although there are still many issues to be addressed regarding the use of AI in RT, the introduction of AI in treatment is a step toward standardizing RT. Auto-segmentation of OARs is nearly complete, and DL has the potential to fully automate planning from segmentation to optimization within a very short time. Adaptive radiotherapy is now available, and LLMs may provide patients with the information they need. Once AI can help with planning, delivery and data collection, radiation oncologists can devote more time to patient care. This will allow us to have more meaningful conversations with patients, which will lead to improved treatment outcomes.

CONFLICT OF INTEREST

The authors declare that they have no conflicts of interest.

FUNDING

This work did not receive any grant from funding agencies in the public, commercial or not-for-profit sectors.

PRESENTATION AT A CONFERENCE

None.

Contributor Information

Mariko Kawamura, Department of Radiology, Nagoya University Graduate School of Medicine, 65 Tsurumaicho, Showa-ku, Nagoya, Aichi, 466-8550, Japan.

Takeshi Kamomae, Department of Radiology, Nagoya University Graduate School of Medicine, 65 Tsurumaicho, Showa-ku, Nagoya, Aichi, 466-8550, Japan.

Masahiro Yanagawa, Department of Radiology, Osaka University Graduate School of Medicine, 2-2 Yamadaoka, Suita, 565-0871, Japan.

Koji Kamagata, Department of Radiology, Juntendo University Graduate School of Medicine, 2-1-1 Hongo, Bunkyo-ku, Tokyo, 113-8421, Japan.

Shohei Fujita, Department of Radiology, University of Tokyo, 7-3-1 Hongo, Bunkyo-ku, Tokyo, 113-8655, Japan.

Daiju Ueda, Department of Diagnostic and Interventional Radiology, Graduate School of Medicine, Osaka Metropolitan University, 1-4-3, Asahi-machi, Abeno-ku, Osaka, 545-8585, Japan.

Yusuke Matsui, Department of Radiology, Faculty of Medicine, Dentistry and Pharmaceutical Sciences, Okayama University, 2-5-1 Shikata-cho, Kitaku, Okayama, 700-8558, Japan.

Yasutaka Fushimi, Department of Diagnostic Imaging and Nuclear Medicine, Kyoto University Graduate School of Medicine, 54 Shogoin Kawaharacho, Sakyo-ku, Kyoto, 606-8507, Japan.

Tomoyuki Fujioka, Department of Diagnostic Radiology, Tokyo Medical and Dental University, 1-5-45 Yushima, Bunkyo-ku, Tokyo, 113-8510, Japan.

Taiki Nozaki, Department of Radiology, Keio University School of Medicine, 35 Shinanomachi, Shinjuku-ku, Tokyo, 160-8582, Japan.

Akira Yamada, Department of Radiology, Shinshu University School of Medicine, 3-1-1 Asahi, Matsumoto, Nagano, 390-8621, Japan.

Kenji Hirata, Department of Diagnostic Imaging, Faculty of Medicine, Hokkaido University, Kita15, Nishi7, Kita-Ku, Sapporo, Hokkaido, 060-8638, Japan.

Rintaro Ito, Department of Radiology, Nagoya University Graduate School of Medicine, 65 Tsurumaicho, Showa-ku, Nagoya, Aichi, 466-8550, Japan.

Noriyuki Fujima, Department of Diagnostic and Interventional Radiology, Hokkaido University Hospital, Kita15, Nishi7, Kita-Ku, Sapporo, Hokkaido, 060-8638, Japan.

Fuminari Tatsugami, Department of Diagnostic Radiology, Hiroshima University, 1-2-3 Kasumi, Minami-ku, Hiroshima, 734-8551, Japan.

Takeshi Nakaura, Department of Diagnostic Radiology, Kumamoto University Graduate School of Medicine, 1-1-1 Honjo, Chuo-ku, Kumamoto, 860-8556, Japan.

Takahiro Tsuboyama, Department of Radiology, Osaka University Graduate School of Medicine, 2-2 Yamadaoka, Suita, 565-0871, Japan.

Shinji Naganawa, Department of Radiology, Nagoya University Graduate School of Medicine, 65 Tsurumaicho, Showa-ku, Nagoya, Aichi, 466-8550, Japan.

REFERENCES

- 1. Kinoshita T, Takahashi S, Anada M et al. A retrospective study of locally advanced cervical cancer cases treated with CT-based 3D-IGBT compared with 2D-IGBT. Jpn J Radiol 2023;41:1164–72.

- 2. Hiyama T, Kuno H, Sekiya K et al. Subtraction iodine imaging with area detector CT to improve tumor delineation and measurability of tumor size and depth of invasion in tongue squamous cell carcinoma. Jpn J Radiol 2022;40:167–76.

- 3. Baba A, Hashimoto K, Kuno H et al. Assessment of squamous cell carcinoma of the floor of the mouth with magnetic resonance imaging. Jpn J Radiol 2021;39:1141–8.

- 4. Medvedev O, Hedesiu M, Ciurea A et al. Perineural spread in head and neck malignancies: imaging findings – an updated literature review. Bosn J Basic Med Sci 2022;22:22–38.

- 5. Chen YX, Jiang CS, Kang WY et al. Development and validation of a CT-based nomogram to predict spread through air space (STAS) in peripheral stage IA lung adenocarcinoma. Jpn J Radiol 2022;40:586–94.

- 6. Ito K, Nakajima Y, Ikuta S. Stereotactic body radiotherapy for spinal oligometastases: a review on patient selection and the optimal methodology. Jpn J Radiol 2022;40:1017–23.

- 7. Sanuki N, Takeda A, Tsurugai Y, Eriguchi T. Role of stereotactic body radiotherapy in multidisciplinary management of liver metastases in patients with colorectal cancer. Jpn J Radiol 2022;40:1009–16.

- 8. Kimura T, Fujiwara T, Kameoka T et al. Stereotactic body radiation therapy for metastatic lung metastases. Jpn J Radiol 2022;40:995–1005.

- 9. Palma DA, Olson R, Harrow S et al. Stereotactic ablative radiotherapy versus standard of care palliative treatment in patients with oligometastatic cancers (SABR-COMET): a randomised, phase 2, open-label trial. Lancet 2019;393:2051–8.

- 10. Chinniah S, Stish B, Costello BA et al. Radiation therapy in oligometastatic prostate cancer. Int J Radiat Oncol Biol Phys 2022;114:684–92.

- 11. Lin D, Lapen K, Sherer MV et al. A systematic review of contouring guidelines in radiation oncology: analysis of frequency, methodology, and delivery of consensus recommendations. Int J Radiat Oncol Biol Phys 2020;107:827–35.

- 12. Ohno T, Wakatsuki M, Toita T et al. Recommendations for high-risk clinical target volume definition with computed tomography for three-dimensional image-guided brachytherapy in cervical cancer patients. J Radiat Res 2017;58:341–50.

- 13. Lee AW, Ng WT, Pan JJ et al. International guideline for the delineation of the clinical target volumes (CTV) for nasopharyngeal carcinoma. Radiother Oncol 2018;126:25–36.

- 14. Robin S, Jolicoeur M, Palumbo S et al. Prostate bed delineation guidelines for postoperative radiation therapy: on behalf of the Francophone Group of Urological Radiation Therapy. Int J Radiat Oncol Biol Phys 2021;109:1243–53.

- 15. Kaidar-Person O, Vrou Offersen B, Hol S et al. ESTRO ACROP consensus guideline for target volume delineation in the setting of postmastectomy radiation therapy after implant-based immediate reconstruction for early stage breast cancer. Radiother Oncol 2019;137:159–66.

- 16. Niyazi M, Andratschke N, Bendszus M et al. ESTRO-EANO guideline on target delineation and radiotherapy details for glioblastoma. Radiother Oncol 2023;184:109663.

- 17. Small W Jr, Bosch WR, Harkenrider MM et al. NRG oncology/RTOG consensus guidelines for delineation of clinical target volume for intensity modulated pelvic radiation therapy in postoperative treatment of endometrial and cervical cancer: an update. Int J Radiat Oncol Biol Phys 2021;109:413–24.

- 18. Iwai Y, Nemoto MW, Horikoshi T et al. Comparison of CT-based and MRI-based high-risk clinical target volumes in image guided-brachytherapy for cervical cancer, referencing recommendations from the Japanese radiation oncology study group (JROSG) and consensus statement guidelines from the Groupe Européen de Curiethérapie-European Society for Therapeutic Radiology and Oncology (GEC ESTRO). Jpn J Radiol 2020;38:899–905.

- 19. Damico N, Meyer J, Das P et al. ECOG-ACRIN guideline for contouring and treatment of early stage anal cancer using IMRT/IGRT. Pract Radiat Oncol 2022;12:335–47.

- 20. Joskowicz L, Cohen D, Caplan N, Sosna J. Inter-observer variability of manual contour delineation of structures in CT. Eur Radiol 2019;29:1391–9.

- 21. Liu X, Li K-W, Yang R, Geng LS. Review of deep learning based automatic segmentation for lung cancer radiotherapy. Front Oncol 2021;11:717039.

- 22. Vrtovec T, Močnik D, Strojan P et al. Auto-segmentation of organs at risk for head and neck radiotherapy planning: from atlas-based to deep learning methods. Med Phys 2020;47:e929–50.

- 23. Kneepkens E, Bakx N, van der Sangen M et al. Clinical evaluation of two AI models for automated breast cancer plan generation. Radiat Oncol 2022;17:25.

- 24. Li G, Wu X, Ma X. Artificial intelligence in radiotherapy. Semin Cancer Biol 2022;86:160–71.

- 25. Teguh DN, Levendag PC, Voet PWJ et al. Clinical validation of atlas-based auto-segmentation of multiple target volumes and normal tissue (swallowing/mastication) structures in the head and neck. Int J Radiat Oncol Biol Phys 2011;81:950–7.

- 26. Cardenas CE, Yang J, Anderson BM et al. Advances in auto-segmentation. Semin Radiat Oncol 2019;29:185–97.

- 27. Urago Y, Okamoto H, Kaneda T et al. Evaluation of auto-segmentation accuracy of cloud-based artificial intelligence and atlas-based models. Radiat Oncol 2021;16:175.

- 28. Schipaanboord B, Boukerroui D, Peressutti D et al. Can atlas-based auto-segmentation ever be perfect? Insights from extreme value theory. IEEE Trans Med Imaging 2019;38:99–106.

- 29. Numasaki H, Nakada Y, Ohba H et al. Japanese structure survey of radiation oncology in 2019. JASTRO Database Committee. 2022. https://www.jastro.or.jp/medicalpersonnel/data_center/JASTRO_NSS_2019-01.pdf (20 September 2023, date last accessed).

- 30. Takayama Y, Kadoya N, Yamamoto T et al. Evaluation of the performance of deformable image registration between planning CT and CBCT images for the pelvic region: comparison between hybrid and intensity-based DIR. J Radiat Res 2017;58:567–71.

- 31. Duan J, Vargas CE, Yu NY et al. Incremental retraining, clinical implementation, and acceptance rate of deep learning auto-segmentation for male pelvis in a multiuser environment. Med Phys 2023;50:4079–91.

- 32. Nemoto T, Futakami N, Yagi M et al. Simple low-cost approaches to semantic segmentation in radiation therapy planning for prostate cancer using deep learning with non-contrast planning CT images. Phys Med 2020;78:93–100.

- 33. Duan J, Bernard M, Downes L et al. Evaluating the clinical acceptability of deep learning contours of prostate and organs-at-risk in an automated prostate treatment planning process. Med Phys 2022;49:2570–81.

- 34. Tong N, Gou S, Chen S et al. Multi-task edge-recalibrated network for male pelvic multi-organ segmentation on CT images. Phys Med Biol 2021;66:035001.

- 35. Belue MJ, Harmon SA, Patel K et al. Development of a 3D CNN-based AI model for automated segmentation of the prostatic urethra. Acad Radiol 2022;29:1404–12.

- 36. Guberina M, Santiago Garcia A, Khouya A et al. Comparison of online-onboard adaptive intensity-modulated radiation therapy or volumetric-modulated arc radiotherapy with image-guided radiotherapy for patients with gynecologic tumors in dependence on fractionation and the planning target volume margin. JAMA Netw Open 2023;6:e234066.

- 37. Chen W, Li Y, Yuan N et al. Clinical enhancement in AI-based post-processed fast-scan low-dose CBCT for head and neck adaptive radiotherapy. Front Artif Intell 2020;3:614384.

- 38. Nachbar M, Lo Russo M, Gani C et al. Automatic AI-based contouring of prostate MRI for online adaptive radiotherapy. Z Med Phys 2023. 10.1016/j.zemedi.2023.05.001.

- 39. Cusumano D, Lenkowicz J, Votta C et al. A deep learning approach to generate synthetic CT in low field MR-guided adaptive radiotherapy for abdominal and pelvic cases. Radiother Oncol 2020;153:205–12.

- 40. van Dijk LV, Van den Bosch L, Aljabar P et al. Improving automatic delineation for head and neck organs at risk by deep learning contouring. Radiother Oncol 2020;142:115–23.

- 41. Wu Y, Kang K, Han C et al. A blind randomized validated convolutional neural network for auto-segmentation of clinical target volume in rectal cancer patients receiving neoadjuvant radiotherapy. Cancer Med 2022;11:166–75.

- 42. Yardimci AH, Kocak B, Sel I et al. Radiomics of locally advanced rectal cancer: machine learning-based prediction of response to neoadjuvant chemoradiotherapy using pre-treatment sagittal T2-weighted MRI. Jpn J Radiol 2022;41:71–82.

- 43. Xiao C, Jin J, Yi J et al. RefineNet-based 2D and 3D automatic segmentations for clinical target volume and organs at risks for patients with cervical cancer in postoperative radiotherapy. J Appl Clin Med Phys 2022;23:e13631.

- 44. Eminowicz G, Hall-Craggs M, Diez P, McCormack M. Improving target volume delineation in intact cervical carcinoma: literature review and step-by-step pictorial atlas to aid contouring. Pract Radiat Oncol 2016;6:e203–13.

- 45. Orton E, Ali E, Mayorov K et al. A contouring strategy and reference atlases for the full abdominopelvic bowel bag on treatment planning and cone beam computed tomography images. Adv Radiat Oncol 2022;7:101031.

- 46. Liao W, Luo X, He Y et al. Comprehensive evaluation of a deep learning model for automatic organs at risk segmentation on heterogeneous computed tomography images for abdominal radiation therapy. Int J Radiat Oncol Biol Phys 2023;117:994–1006.

- 47. Dai X, Lei Y, Wang T et al. Automated delineation of head and neck organs at risk using synthetic MRI-aided mask scoring regional convolutional neural network. Med Phys 2021;48:5862–73.

- 48. Costea M, Zlate A, Durand M et al. Comparison of atlas-based and deep learning methods for organs at risk delineation on head-and-neck CT images using an automated treatment planning system. Radiother Oncol 2022;177:61–70.

- 49. Wong J, Fong A, McVicar N et al. Comparing deep learning-based auto-segmentation of organs at risk and clinical target volumes to expert inter-observer variability in radiotherapy planning. Radiother Oncol 2020;144:152–8.

- 50. Buchner JA, Kofler F, Etzel L et al. Development and external validation of an MRI-based neural network for brain metastasis segmentation in the AURORA multicenter study. Radiother Oncol 2023;178:109425.

- 51. Shapey J, Wang G, Dorent R et al. An artificial intelligence framework for automatic segmentation and volumetry of vestibular schwannomas from contrast-enhanced T1-weighted and high-resolution T2-weighted MRI. J Neurosurg 2019;134:171–9.

- 52. Wang S, Mahon R, Weiss E et al. Automated lung cancer segmentation using a PET and CT dual-modality deep learning neural network. Int J Radiat Oncol Biol Phys 2023;115:529–39.

- 53. Wang H, Qu T, Bernstein K et al. Automatic segmentation of vestibular schwannomas from T1-weighted MRI with a deep neural network. Radiat Oncol 2023;18:78.

- 54. Kihara S, Koike Y, Takegawa H et al. Clinical target volume segmentation based on gross tumor volume using deep learning for head and neck cancer treatment. Med Dosim 2023;48:20–4.

- 55. Wong LM, Ai QYH, Mo FKF et al. Convolutional neural network in nasopharyngeal carcinoma: how good is automatic delineation for primary tumor on a non-contrast-enhanced fat-suppressed T2-weighted MRI? Jpn J Radiol 2021;39:571–9.

- 56. Hosny A, Bitterman DS, Guthier CV et al. Clinical validation of deep learning algorithms for radiotherapy targeting of non-small-cell lung cancer: an observational study. Lancet Digit Health 2022;4:e657–66.

- 57. Savjani R. nnU-net: further automating biomedical image autosegmentation. Radiol Imaging Cancer 2021;3:e209039.

- 58. Isensee F, Jaeger PF, Kohl SAA et al. nnU-net: a self-configuring method for deep learning-based biomedical image segmentation. Nat Methods 2021;18:203–11.

- 59. Sasaki M, Tominaga M, Kamomae T et al. Influence of multi-leaf collimator leaf transmission on head and neck intensity-modulated radiation therapy and volumetric-modulated arc therapy planning. Jpn J Radiol 2017;35:511–25.

- 60. Sasaki M, Nakaguchi Y, Kamomae T et al. Impact of treatment planning quality assurance software on volumetric-modulated arc therapy plans for prostate cancer patients. Med Dosim 2021;46:e1–6.

- 61. Kubo K, Monzen H, Ishii K et al. Dosimetric comparison of RapidPlan and manually optimized plans in volumetric modulated arc therapy for prostate cancer. Phys Med 2017;44:199–204.

- 62. Nguyen D, Long T, Jia X et al. A feasibility study for predicting optimal radiation therapy dose distributions of prostate cancer patients from patient anatomy using deep learning. Sci Rep 2019;9:1076.

- 63. Nguyen D, Jia X, Sher D et al. 3D radiotherapy dose prediction on head and neck cancer patients with a hierarchically densely connected U-net deep learning architecture. Phys Med Biol 2019;64:065020.

- 64. Kajikawa T, Kadoya N, Ito K et al. A convolutional neural network approach for IMRT dose distribution prediction in prostate cancer patients. J Radiat Res 2019;60:685–93.

- 65. Kawabata F, Kamomae T, Okudaira K et al. Development of a high-resolution two-dimensional detector-based dose verification system for tumor-tracking irradiation in the CyberKnife system. J Appl Clin Med Phys 2022;23:e13645.

- 66. Nyflot MJ, Thammasorn P, Wootton LS et al. Deep learning for patient-specific quality assurance: identifying errors in radiotherapy delivery by radiomic analysis of gamma images with convolutional neural networks. Med Phys 2019;46:456–64.

- 67. Chen Z, Wang P, Du L et al. Potential of dosage reduction of cone-beam CT dacryocystography in healthy volunteers by decreasing tube current. Jpn J Radiol 2021;39:233–9.

- 68. Sun M, Star-Lack JM. Improved scatter correction using adaptive scatter kernel superposition. Phys Med Biol 2010;55:6695–720.

- 69. Kida S, Kaji S, Nawa K et al. Visual enhancement of cone-beam CT by use of CycleGAN. Med Phys 2020;47:998–1010.

- 70. Yasue K, Fuse H, Asano Y et al. Investigation of fiducial marker recognition possibility by water equivalent length in real-time tracking radiotherapy. Jpn J Radiol 2022;40:318–25.

- 71. Imaizumi A, Araki T, Okada H et al. Transarterial fiducial marker implantation for CyberKnife radiotherapy to treat pancreatic cancer: an experience with 14 cases. Jpn J Radiol 2021;39:84–92.

- 72. Imaizumi A, Araki T, Okada H et al. Correction to: Transarterial fiducial marker implantation for CyberKnife radiotherapy to treat pancreatic cancer: an experience with 14 cases. Jpn J Radiol 2023;41:569.

- 73. Nishioka T, Nishioka S, Kawahara M et al. Synchronous monitoring of external/internal respiratory motion: validity of respiration-gated radiotherapy for liver tumors. Jpn J Radiol 2009;27:285–9.

- 74. Inoue M, Okawa K, Taguchi J et al. Factors affecting the accuracy of respiratory tracking of the image-guided robotic radiosurgery system. Jpn J Radiol 2019;37:727–34.

- 75. Ruan D. Kernel density estimation-based real-time prediction for respiratory motion. Phys Med Biol 2010;55:1311–26.

- 76. Zhou D, Nakamura M, Mukumoto N et al. Feasibility study of deep learning-based markerless real-time lung tumor tracking with orthogonal X-ray projection images. J Appl Clin Med Phys 2023;24:e13894.

- 77. Stanley DN, Harms J, Pogue JA et al. A roadmap for implementation of kV-CBCT online adaptive radiation therapy and initial first year experiences. J Appl Clin Med Phys 2023;24:e13961.

- 78. Uno T, Tsuneda M, Abe K et al. A new workflow of the on-line 1.5-T MR-guided adaptive radiation therapy. Jpn J Radiol 2023;41:1316–22. 10.1007/s11604-023-01457-4.

- 79. Arivarasan I, Anuradha C, Subramanian S et al. Magnetic resonance image guidance in external beam radiation therapy planning and delivery. Jpn J Radiol 2017;35:417–26.

- 80. Spadea MF, Maspero M, Zaffino P, Seco J. Deep learning based synthetic-CT generation in radiotherapy and PET: a review. Med Phys 2021;48:6537–66.

- 81. Fh T, Cyw C, Eyw C. Radiomics AI prediction for head and neck squamous cell carcinoma (HNSCC) prognosis and recurrence with target volume approach. BJR Open 2021;3:20200073.

- 82. Bang C, Bernard G, Le WT et al. Artificial intelligence to predict outcomes of head and neck radiotherapy. Clin Transl Radiat Oncol 2023;39:100590.

- 83. Liang Z-G, Tan HQ, Zhang F et al. Comparison of radiomics tools for image analyses and clinical prediction in nasopharyngeal carcinoma. Br J Radiol 2019;92:20190271.

- 84. Chen N-B, Xiong M, Zhou R et al. CT radiomics-based long-term survival prediction for locally advanced non-small cell lung cancer patients treated with concurrent chemoradiotherapy using features from tumor and tumor organismal environment. Radiat Oncol 2022;17:184.

- 85. Astaraki M, Wang C, Buizza G et al. Early survival prediction in non-small cell lung cancer from PET/CT images using an intra-tumor partitioning method. Phys Med 2019;60:58–65.

- 86. Zhang N, Liang R, Gensheimer MF et al. Early response evaluation using primary tumor and nodal imaging features to predict progression-free survival of locally advanced non-small cell lung cancer. Theranostics 2020;10:11707–18.

- 87. Colorectal Cancer Collaborative Group. Adjuvant radiotherapy for rectal cancer: a systematic overview of 8,507 patients from 22 randomised trials. Lancet 2001;358:1291–304.

- 88. Benson AB, Venook AP, Al-Hawary MM et al. Rectal cancer, version 2.2022, NCCN Clinical Practice Guidelines in Oncology. J Natl Compr Cancer Netw 2022;20:1139–67.

- 89. Yang F, Hill J, Abraham A et al. Tumor volume predicts for pathologic complete response in rectal cancer patients treated with neoadjuvant chemoradiation. Am J Clin Oncol 2022;45:405–9.

- 90. Liu Z, Zhang X-Y, Shi Y-J et al. Radiomics analysis for evaluation of pathological complete response to neoadjuvant chemoradiotherapy in locally advanced rectal cancer. Clin Cancer Res 2017;23:7253–62.

- 91. Shin J, Seo N, Baek S-E et al. MRI radiomics model predicts pathologic complete response of rectal cancer following chemoradiotherapy. Radiology 2022;303:351–8.

- 92. Shaish H, Aukerman A, Vanguri R et al. Radiomics of MRI for pretreatment prediction of pathologic complete response, tumor regression grade, and neoadjuvant rectal score in patients with locally advanced rectal cancer undergoing neoadjuvant chemoradiation: an international multicenter study. Eur Radiol 2020;30:6263–73.

- 93. Cui Y, Yang X, Shi Z et al. Radiomics analysis of multiparametric MRI for prediction of pathological complete response to neoadjuvant chemoradiotherapy in locally advanced rectal cancer. Eur Radiol 2019;29:1211–20.

- 94. Li L, Xu B, Zhuang Z et al. Accurate tumor segmentation and treatment outcome prediction with DeepTOP. Radiother Oncol 2023;183:109550.

- 95. Thor M, Apte A, Haq R et al. Using auto-segmentation to reduce contouring and dose inconsistency in clinical trials: the simulated impact on RTOG 0617. Int J Radiat Oncol Biol Phys 2021;109:1619–26.

- 96. Nousiainen K, Santurio GV, Lundahl N et al. Evaluation of MRI-only based online adaptive radiotherapy of abdominal region on MR-linac. J Appl Clin Med Phys 2023;24:e13838.

- 97. Johnstone E, Wyatt JJ, Henry AM et al. Systematic review of synthetic computed tomography generation methodologies for use in magnetic resonance imaging-only radiation therapy. Int J Radiat Oncol Biol Phys 2018;100:199–217.

- 98. Krishnamurthy R, Mummudi N, Goda JS et al. Using artificial intelligence for optimization of the processes and resource utilization in radiotherapy. JCO Glob Oncol 2022;8:e2100393.

- 99. Brown TB, Mann B, Ryder N et al. Language models are few-shot learners. Adv Neural Inf Proces Syst 2020;33:1877–901.

- 100. OpenAI. GPT-4 Technical Report. arXiv:2303.08774 [cs.CL]. 2023. 10.48550/arXiv.2303.08774.

- 101. Doi K, Takegawa H, Yui M et al. Deep learning-based detection of patients with bone metastasis from Japanese radiology reports. Jpn J Radiol 2023;41:900–8.

- 102. Derton A, Guevara M, Chen S et al. Natural language processing methods to empirically explore social contexts and needs in cancer patient notes. JCO Clin Cancer Inform 2023;7:e2200196.

- 103. Bitterman DS, Goldner E, Finan S et al. An end-to-end natural language processing system for automatically extracting radiation therapy events from clinical texts. Int J Radiat Oncol Biol Phys 2023;117:262–73.

- 104. Rebelo N, Sanders L, Li K, Chow JCL. Learning the treatment process in radiotherapy using an artificial intelligence-assisted chatbot: development study. JMIR Form Res 2022;6:e39443.

- 105. Dayawansa S, Mantziaris G, Sheehan J. Chat GPT versus human touch in stereotactic radiosurgery. J Neuro-Oncol 2023;163:481–3.