Abstract

The neural underpinnings of perceptual awareness have been extensively studied using unisensory (e.g., visual alone) stimuli. However, perception is generally multisensory, and it is unclear whether the neural architecture uncovered in these studies directly translates to the multisensory domain. Here we use electroencephalography (EEG) to examine brain responses associated with the processing of visual, auditory, and audiovisual stimuli presented near threshold levels of detectability, with the aim of deciphering similarities and differences in the neural signals indexing the transition into perceptual awareness across vision, audition and combined visual-auditory (multisensory) processing. More specifically, we examine: 1) the presence of late evoked potentials (~ > 300 ms), 2) the across trial reproducibility, and 3) the evoked complexity associated with perceived vs. non-perceived stimuli. Results reveal that while perceived stimuli are associated with the presence of late evoked potentials across each of the examined sensory modalities, between trial variability and EEG complexity differed for unisensory versus multisensory conditions. Whereas across trial variability and complexity differed for perceived versus non-perceived stimuli in the visual and auditory conditions, this was not the case for the multisensory condition. Taken together, these results suggest that there are fundamental differences in the neural correlates of perceptual awareness for unisensory versus multisensory stimuli. Specifically, the work argues that the presence of late evoked potentials, as opposed to neural reproducibility or complexity, most closely tracks perceptual awareness regardless of the nature of the sensory stimulus. In addition, the current findings suggest a greater similarity between the neural correlates of perceptual awareness of unisensory (visual and auditory) stimuli when compared with multisensory stimuli.

Keywords: Multisensory Integration, Awareness, Complexity, EEG, Variance, Consciousness

Introduction

During waking hours, signals are continually impinging upon our different sensory organs (e.g., eyes, ears, skin), conveying information about the objects present and the events occurring within our environment. This flood of information challenges the limited processing capabilities of our central nervous system (James, 1890). As a consequence, much work within cognitive psychology and neuroscience has sought to understand how the human brain tackles this challenge by effectively filtering, segregating, and integrating the various pieces of sensory information to generate a coherent perceptual Gestalt (Broadbent, 1958; Treisman & Gelade, 1980; Murray & Wallace, 2012).

The bulk of the evidence to date with regard to the intersection between the bottleneck of information processing and perceptual awareness has been derived from studies focused on the visual system (Zeki et al., 2003; Koch, 2004; Dehaene et al., 2017). In fact, all major neurobiological theories regarding perceptual awareness, which all emphasize the importance of engaging widely distributed brain networks (Naghavi & Nyberg, 2005; Tallon-Baudry, 2012; van Gaal & Lamme, 2012), have been derived from observations within the visual neurosciences (Sanchez et al., 2017; Faivre et al., 2017). In parallel, the neural markers associated with perceptual awareness have been derived from observations probing the visual system. Early functional Magnetic Resonance Imaging (fMRI; Dehaene et al., 2001), electroencephalographic (EEG; Sergent et al., 2005; Del Cul et al., 2007) and electrocorticographic (ECoG; Gaillard et al., 2009) studies suggested that perceptual awareness was associated with the broadcasting of neural signals beyond primary (visual) cortex (Lamme, 2006), and more specifically with the engagement of fronto-parietal regions (Dehaene et al., 2006). Arguably the most consistent signature associated with this generalized neural recruitment is the P3b event-related potential. Namely, while early EEG components are similar regardless of whether or not stimuli enter perceptual awareness, stimuli that are perceived (vs. non-perceived) additionally yield components at later latencies. Subsequent studies converged on the observation that perceived stimuli broadcast or triggered activity beyond that disseminated by non-perceived stimuli, but emphasized that the neural ignition associated with awareness resulted in neural patterns that were both more reproducible (Schurger et al., 2010) and more stable (Schurger et al., 2015) than patterns seen for non-perceived stimuli. 
In the latest iteration of the argument emphasizing the recruitment of global neural networks, researchers have highlighted the pivotal role of neural networks that are both integrated and differentiated (Tononi et al., 2016; Koch et al., 2016; Cavanna et al., 2017). Within this latter framework, the complexity of both resting state and evoked neural responses has emerged as a marker for perceptual awareness (Casali et al., 2013; Sarasso et al., 2015; Andrillon et al., 2016; Schartner et al., 2015, 2017).

It has been assumed that these theories of and neural markers for perceptual awareness gleaned from the visual system apply across sensory domains, an assumption that indeed comes with some supporting evidence. For example, there is late sustained neural activity in perceived as opposed to non-perceived auditory stimulation conditions (Sadaghiani et al., 2009). However, there are also important differences across sensory modalities, such as the association of auditory awareness with neural activity in fronto-temporal, as opposed to fronto-parietal, networks (Joos et al., 2014). In an important recent contribution, Sanchez and colleagues (2017) demonstrated that by applying machine learning techniques it is possible to decode perceptual states (i.e., perceived vs. non-perceived) across the different sensory modalities (i.e., vision, audition, somatosensation). While it is interesting that decoding of perceptual states across modalities is feasible, this observation does not tell us whether (and how) the brain performs this task. Moreover, Sanchez and colleagues (2017) probed perceptual states across unisensory modalities, but to the best of our knowledge no study has characterized differences between perceived and non-perceived stimuli across both unisensory and multisensory modalities. This knowledge gap is important, as in recent years keen interest has emerged concerning the role played by multisensory integration in the construction of perceptual awareness (Deroy et al., 2014; Spence & Deroy, 2013; Faivre et al., 2017; O’Callaghan, 2017). Indeed, as discussed above, theoretical models posit an inherent relationship between the integration of sensory information and perceptual awareness. 
For example, mathematical and neurocognitive formulations, such as integrated information theory (IIT; Tononi, 2012), global neuronal workspace theory (Dehaene & Changeux, 2011), and recurrent/reentrant networks (Lamme, 2006), postulate, explicitly or implicitly, that the integration of sensory information is a prerequisite for perceptual awareness. IIT, for instance, posits that a particular spatio-temporal configuration of neural activity culminates in subjective experience when the amount of integrated information is high. In many of these views, subjective experience (i.e., perceptual awareness) relates to the degree to which information generated by a system as a whole exceeds that independently generated by its parts.

Motivated by this theoretical perspective emphasizing information integration in perceptual awareness, and noting that our perceptual Gestalt is built upon a multisensory foundation, we argue that multisensory neuroscience is uniquely positioned to inform our understanding of perceptual awareness (Faivre et al., 2014; Mudrik et al., 2014; Blanke et al., 2015; Noel et al., 2015; Salomon et al., 2017; in addition, see Deroy et al., 2014, for a provocative argument implying that unisensory-derived theories of perceptual awareness cannot be applied to multisensory experiences). Consequently, in the current work we aim to characterize electrophysiological indices of perceptual awareness across both unisensory (visual alone, auditory alone) and multisensory (combined visual-auditory) modalities. More specifically, we aim to establish whether previously reported neural markers of visual awareness generalize across sensory modalities (from vision to audition) and extend to multisensory experiences. In the current study we examine EEG responses to auditory, visual, and combined audiovisual stimuli presented close to the bounds of perceptual awareness. Analyses are centered around previously reported indices of visual awareness: the presence of late components in evoked potentials during perceived but not non-perceived trials (e.g., Dehaene & Changeux, 2011; Dehaene et al., 2017), as well as changes in neural reproducibility (Schurger et al., 2010) and complexity (Tononi et al., 2016; Koch et al., 2016).

Methods

Participants

Twenty-one (mean age = 22.5 ± 1.9, median = 21.2, range: 19-25, 9 females) right-handed graduate and undergraduate students from Vanderbilt University took part in this experiment. All participants reported normal hearing and had normal or corrected-to-normal eyesight. All participants gave written informed consent to take part in this study, the protocols for which were approved by Vanderbilt University Medical Center’s Institutional Review Board. EEG data from 2 participants were not analyzed as we were unsuccessful in driving their target detection performance within a pre-defined range (see below; see Figure 1, dotted lines), and thus data from 19 participants formed the basis of the analyses presented here.

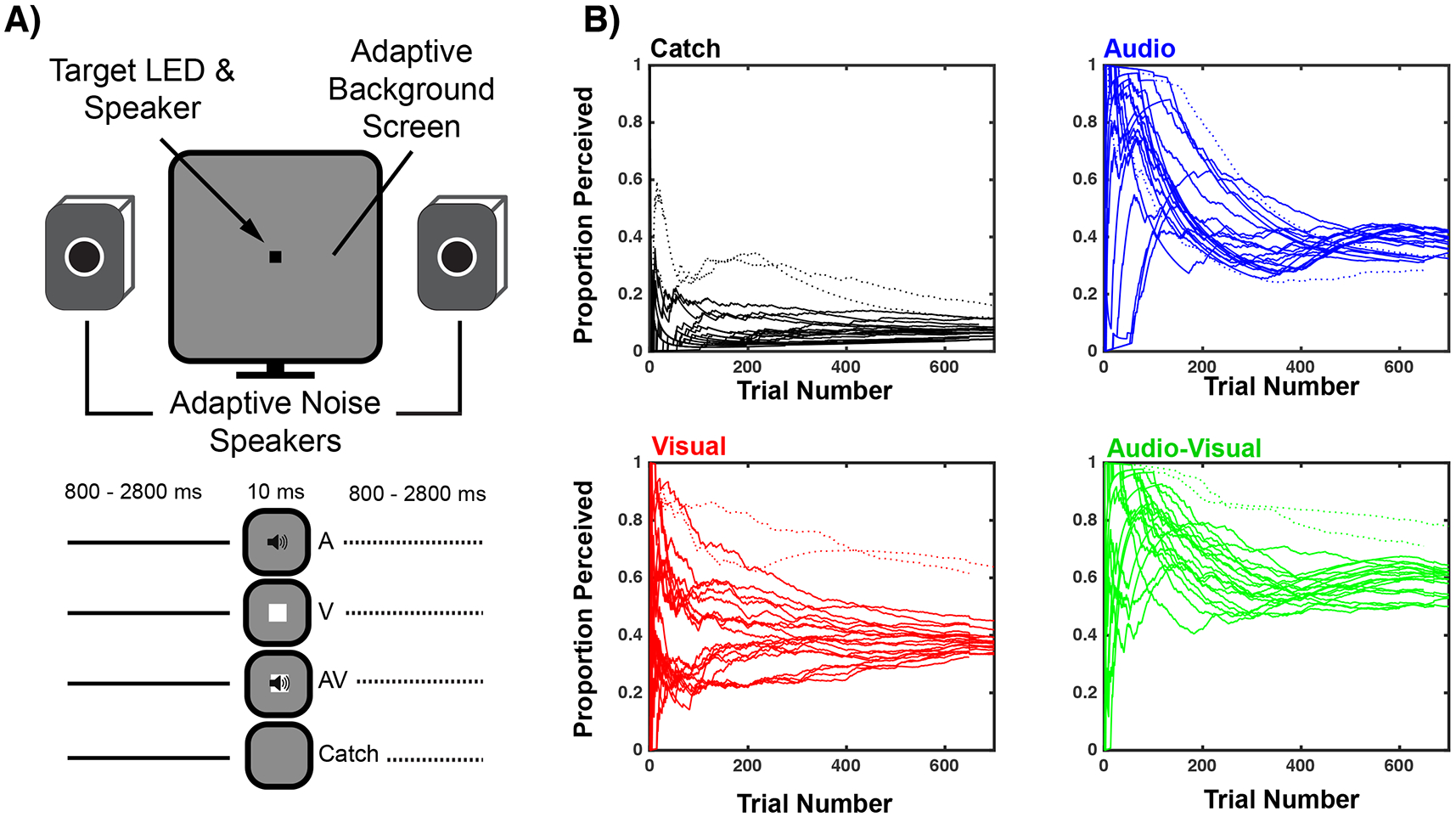

Figure 1. Experimental Design and Methods.

A) Experimental Design. Participants fixated a small audiovisual device controlled via a micro-controller and reported via button press the detection of targets (audio, visual, or audiovisual). Targets were presented within visual and auditory noise whose levels were adaptively adjusted over trials. Catch trials (no targets) were also presented. B) Auditory and visual noise levels were adjusted online for each participant to generate auditory and visual detection rates between 30 and 45%. Each line represents a single participant and plots their detection rate as a function of trial number. Participants converged on stable performance after approximately 200 trials per stimulus condition. Thus, these first 200 trials were not analyzed. Two participants exhibited high false detection rates (catch trial; dotted lines) and thus their EEG data were not analyzed. These same participants are depicted with dotted lines for audio (blue), visual (red), and audiovisual (green) conditions. Note that false alarms (catch trials) remain stable across the duration of the experiment, indicating that results are not likely to be affected by training or fatigue effects.

Materials and Apparatus

Visual and auditory target stimuli were controlled via a micro-controller (SparkFun Electronics, Redboard, Boulder CO) under the control of purpose-written MATLAB (MathWorks, Natick MA) and Arduino (Arduino™) scripts. The micro-controller drove the onset of a green LED (3 mm diameter, 596-572 nm wavelength, 150 mcd) and a Piezo Buzzer (12 mm diameter, 9.7 mm tall, 60 dB(SPL), 4 kHz, 3 V rectangular wave). Target stimuli were 10 ms in duration (square-wave, onset and offset < 1 ms, as measured via oscilloscope). The LED was mounted on the Piezo Buzzer, thus forming a single audiovisual object that was placed at the center of a 24-inch computer monitor (Asus VG248QE, LED-backlit, 1920 × 1080 resolution, 60 Hz refresh rate). In addition to the targets, and in order to adjust participants’ detection rates, we adjusted the luminance and amplitude of background visual and auditory white noise online with the psychophysics toolbox (Brainard, 1997; Pelli, 1997). The luminance (achromatic and uniform) of the screen upon which the audio and visual targets were mounted was adjusted between 0 and 350 cd/m² in steps of 4 RGB units (RGB range = 0 to 255; initial = [140, 140, 140] RGB), while auditory noise comprised variable intensity white noise broadcast from two speakers placed symmetrically to the right and left side of the monitor (Atlas Sound EV8D 3.2 Stereo). The white noise track was initialized at 49 dB and adjusted in 0.4 dB increments (44.1 kHz sampling rate). Visual and auditory noise were adjusted by a single increment every 7 to 13 trials (uniform distribution) to maintain unisensory detection performance between 30 and 45%. This low unisensory detection rate was chosen to assure satisfactory bifurcation between ‘perceived’ and ‘non-perceived’ trials in both unisensory and multisensory trials (Murray & Wallace, 2012).

Procedure and Experimental Design

Participants were fitted with a 128-electrode EGI Netstation EEG and seated 60 cm away from the stimulus and noise generators. Participants completed 12 to 14 blocks containing 200 repetitions of target detection, in which no-stimulus (catch trials), auditory-only, visual-only, and audiovisual trials were distributed equally and interleaved pseudo-randomly. We employed a subjective measure of awareness (similar to a yes/no detection judgment; Merikle et al., 2001; see Figure 1) in conjunction with an extensive set of EEG analyses (electrical neuroimaging framework; Brunet et al., 2011, see below). Thus, although perceptual awareness may arguably occur without the capacity for explicit report (see Eriksen, 1960), here we operationalize perceptual awareness as the detection and report of sensory stimuli (see below for signal detection analyses suggesting that the criterion for detection was unchanged across experimental conditions and hence that detection reports likely reflected perceptual awareness). Participants were asked to respond, via manual response (button press), as quickly as possible when they detected a stimulus. The inter-stimulus interval comprised a fixed duration of 800 ms, plus a uniformly distributed random duration between 0 and 2000 ms. The total duration of the experiment was approximately 3 h 30 min, with rest periods of approximately 5 minutes between blocks.

EEG Data Acquisition and Rationale

We contrasted participants’ EEG responses for perceived (i.e., detected) versus non-perceived (i.e., non-detected) unisensory (i.e., either visual or auditory) and multisensory (i.e., conjoint visual and auditory) stimuli to determine whether indices of visual awareness generalize across sensory domains. High density continuous EEG was recorded from 128 electrodes with a sampling rate of 1000 Hz (Net Amps 200 amplifier, Hydrocel GSN 128 EEG cap, EGI systems, Inc.) and referenced to the vertex. Electrode impedances were maintained below 50 kΩ throughout the recording procedure and were reassessed at the end of every other block. Data were acquired with Netstation 5.1.2 running on a Macintosh computer and online high-pass filtered at 0.1 Hz.

Analysis

Behavioral.

Data were compiled for detection as a function of the sensory modality stimulated, where ‘detection’ refers to a manual response immediately following presentation of a stimulus or a pair of stimuli. Two participants generated false alarm rates (reports of stimulus detection on catch trials when no stimulus was presented) that were more than 2.5 standard deviations above the population average (false alarm rates ~20% compared to 8.2%; see Figure 1), leading to exclusion of their data from further analysis. Data were analyzed for reaction times and in light of signal detection theory (SDT; Tanner & Swets, 1954; Macmillan & Creelman, 2005). To quantify sensitivity and response bias to the detection of near-threshold sensory stimuli across different sensory modalities, reports of detection during the presence of an auditory, visual, or audiovisual stimulus were considered as hits. Analogously, reports of the presence of sensory stimulation during a catch trial were taken to index false alarms. Noise and signal distributions were assumed to have equal variance, and sensitivity (i.e., d’) and response criteria (i.e., c) were calculated according to the equations in Macmillan & Creelman (2005). Note that the assumption of equal variance does not affect quantification of the response criteria, and simply scales sensitivity (Harvey, 2003). Regarding reaction times, data were trimmed for trials in which participants responded within 100 ms of stimulus onset (0.9% of data trimmed in total) and were then aggregated.
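
As an illustration of the equal-variance signal detection measures described above, the following minimal sketch computes sensitivity as d' = z(H) - z(FA) and criterion as c = -[z(H) + z(FA)] / 2, where H is the hit rate and FA the false alarm rate. The function name and the example rates are hypothetical and are not the study's data; rates of exactly 0 or 1 would require a correction that the text does not specify.

```python
from statistics import NormalDist


def dprime_and_criterion(hit_rate, fa_rate):
    """Equal-variance SDT measures from a hit rate and a false alarm rate.

    Both rates must lie strictly between 0 and 1 (assumption: no
    correction for extreme rates is applied in this sketch).
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    z_hit, z_fa = z(hit_rate), z(fa_rate)
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)
    return d_prime, criterion


# Illustrative values only: 80% hits and 20% false alarms.
d_prime, criterion = dprime_and_criterion(0.80, 0.20)
print(round(d_prime, 3), round(criterion, 3))
```

A positive criterion indicates a conservative observer (a tendency to report "no"), which is the pattern expected when stimuli are presented near threshold.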

EEG Preprocessing.

As illustrated in Figure 1B, after 200 trials of each sensory condition (4 blocks), relatively few adjustments of auditory and visual noise were needed to maintain participants within the pre-defined range of 30-45% unisensory detection performance (see also Control Analyses in Supplementary Materials online). That is, 65.42% of all audio noise adjustments were undertaken during the first 200 trials (thus 34.58% were undertaken during the last 500 experimental trials), and 60.75% of visual noise adjustments happened during that same period (leaving 39.25% of visual noise changes occurring during the 500-trial experimental phase). Thus, EEG analysis (below) was restricted to the last 400-500 trials per sensory condition to reduce variability in the stimulus statistics. Data from these trials were exported to EEGLAB (Delorme & Makeig, 2004), and epochs were sorted according to sensory condition (i.e., A, V, AV, or none) and detection (perceived versus non-perceived). Epochs from −100 to 500 ms relative to target onset were high-pass filtered (zero phase, 8th order Butterworth filter) at 0.1 Hz, low-pass filtered at 40 Hz, and notch filtered at 60 Hz. EEG epochs containing skeletomuscular movement, eye blinks, or other noise transients and artifacts were removed by visual inspection. After epoch rejection, every condition (4 [sensory modalities: none, audio, visual, and audiovisual] X 2 [perceptual report: perceived and non-perceived]) comprised an average of 179.16 ± 39 trials (average epoch rejection = 23.5%), with the exception of the catch-perceived condition, which had 23.2 ± 3.9 trials, and the catch non-perceived condition, which had 307.45 ± 31.5 trials. Excluding catch trials, there was no effect of sensory modality or perceptual report, and no interaction between them, on the total number of trials (all p > .19). Channels with poor signal quality (e.g., broken or excessively noisy electrodes) were then removed (6.2 electrodes on average, 4.8%). 
Data were re-referenced to the average, and baseline corrected to the pre-stimulus period. Excluded channels were reconstructed using spherical spline interpolation (Perrin, Pernier, Bertrand, Giard, & Echallier, 1987). To account for the inherent multiple comparisons problem in EEG, we set alpha at < 0.01 for at least 10 consecutive time points (Guthrie & Buchwald, 1991), and hence most statistical reporting in the results states significant time-periods as ‘all p < 0.01’.
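
The consecutive time-point criterion described above can be sketched as follows: a time period counts as significant only if p < 0.01 holds for at least 10 consecutive samples. This is a minimal illustration under the assumption that one p-value per time point is already available; the function name and the toy p-values are hypothetical.

```python
def significant_runs(p_values, alpha=0.01, min_run=10):
    """Return (start, end) index pairs, end exclusive, for every run of
    consecutive time points with p < alpha lasting at least min_run samples."""
    runs = []
    start = None  # index where the current below-alpha run began
    for i, p in enumerate(p_values):
        if p < alpha:
            if start is None:
                start = i
        elif start is not None:
            if i - start >= min_run:
                runs.append((start, i))
            start = None
    # Close a run that extends to the end of the epoch.
    if start is not None and len(p_values) - start >= min_run:
        runs.append((start, len(p_values)))
    return runs


# Toy example: a 9-sample run (rejected) followed by a 12-sample run (kept).
toy_p = [0.5] * 5 + [0.001] * 9 + [0.5] + [0.001] * 12
print(significant_runs(toy_p))
```

With data sampled at 1000 Hz, as in the present recordings, a 10-sample minimum corresponds to 10 ms of sustained significance.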

Global Field Power.

The global electric field strength was quantified using global field power (GFP; Lehmann & Skrandies, 1980). This measure is equivalent to the standard deviation of the trial-averaged voltage values across the entire electrode montage at a given time point, and represents a reference- and topography-independent measure of evoked potential magnitude. This measure is used here to index the presence (or absence) of late evoked potentials during perceived vs. non-perceived visual, auditory and audiovisual trials. On a first pass, we calculated average GFPs for each subject, as well as for the sample as a whole (i.e., grand average) and for every condition. Then, the topographic consistency test (TCT; Koenig & Melie-García, 2010) was applied across the entire epoch (−100 to 500 ms post-stimulus) for each condition in order to determine whether there was statistical evidence for a consistent evoked potential. Subsequently, the TCT was applied at each time-point for those conditions demonstrating a significant evoked potential in order to ascertain the time-periods during which evoked potentials were reliably present. For these analyses alpha was set a priori to 0.05, FDR corrected (Genovese et al., 2002), which is the default alpha assumed by the test (Koenig & Melie-García, 2010). After demonstrating the presence of evoked potentials relative to baseline (see above), we conducted a 3 (sensory modality: audio, visual, audiovisual) X 2 (perceptual state: perceived versus non-perceived) repeated measures ANOVA at each time-point (from −100 ms pre-stimulus onset to 500 ms post-stimulus onset). Separate t-tests across states of perception (perceived vs. non-perceived) for the different modalities (audio, visual, and audiovisual) were also conducted. Lastly, to ascertain true multisensory interactions, we contrasted the GFP evoked by the audiovisual condition with the sum of the unisensory responses (e.g., Cappe et al., 2012). 
As a control, we also indexed the GFP evoked by detected (i.e., false alarms) and non-detected (i.e., correct rejections) catch trials to ascertain whether either the noise features utilized to mask targets or the simple fact of reporting detection was sufficient to engender a GFP differentiation between conditions. This GFP analysis was conducted solely on participants with at least 20 false alarm trials (13/20 participants). For this analysis, a random subset of correct rejection trials was drawn for each individual in order to match the number of false alarm and correct rejection trials at the individual subject level. Complementing the GFP analyses, the topographies exhibited by the different conditions were likewise examined. However, these are presented in the supplementary materials (see Figure S2) and not in the main text, as no strong theoretical predictions exist regarding a topographic neural correlate of consciousness across unisensory and multisensory domains (although see Britz et al., 2014).
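
As a rough illustration of the GFP definition given above, the sketch below computes, for each time point, the population standard deviation across electrodes of the trial-averaged voltages. It uses plain Python lists; the function name and the toy two-electrode data are assumptions for illustration and do not reproduce the analysis pipeline used in the study.

```python
import statistics


def global_field_power(erp):
    """GFP time course from a trial-averaged evoked potential.

    erp: list of channels, each a list of voltages over time
         (all channels must have the same number of samples).
    Returns one value per time point: the population standard
    deviation across channels at that time point.
    """
    n_times = len(erp[0])
    return [
        statistics.pstdev([channel[t] for channel in erp])
        for t in range(n_times)
    ]


# Toy example: two electrodes, two time points.
toy_erp = [[1.0, 0.0],
           [-1.0, 0.0]]
print(global_field_power(toy_erp))  # [1.0, 0.0]
```

Because the standard deviation ignores any constant added to all electrodes at a given time point, the result does not depend on the choice of reference, which is the property the text refers to as reference independence.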

Inter-Trial Variability Analyses.

To probe the reproducibility of evoked potentials during different perceptual states and as elicited by stimuli of different modalities in a relatively simple manner, PCA was performed within each participant. More specifically, PCA identified the number of orthogonal dimensions, expressed as a proportion of the total possible (e.g., number of trials analyzed), needed to express a certain amount of the trial-to-trial variability (90% in the present case) for each channel. In a deterministic system with highly stereotyped responses, only a few dimensions are needed to capture most of the variability. To the extent that trial-to-trial recordings differ from one another, total variability increases, and hence PCA dimensionality increases. In the present case, each participant’s data were divided into channel- and experimental-condition specific matrices of single trial data with trials as rows and time points as columns. The dimensionality of each matrix was determined as a minimum number of principal components capturing 90% of the variance across trials. This number was further expressed as a percent of the total number of dimensions and was taken as a measure of trial-to-trial variability for a given channel. For the audio, visual, and audiovisual conditions (for both perceived and non-perceived trials), the 120 trials whose mean most faithfully represented the average GFP’s (determined via minimization of absolute value residuals), and thus the average response, were analyzed to maintain the number of potential dimensions equal across conditions. For the catch trials, all false alarm catch trials were taken, and an equal number of correct-rejection catch trials were randomly selected on a participant-by-participant basis. 
This PCA was performed on a 101 ms wide sliding window (the first window starting at −100 ms and ending at 0 ms relative to stimulus onset; 1 ms step size) to determine the time-course of the trial-to-trial variability (note that this time-course is thus smoothed). Results (Figure 5) are reported as the percentage of extra dimensions needed for each sensory modality to explain trial-to-trial variance in the perceived versus non-perceived condition. As for the GFP analyses, catch trials were separately analyzed as a control procedure. A random subset of correct rejection catch trials was sampled for each participant in order to match the number of correct rejection and false alarm trials. This last analysis was undertaken solely for participants with at least 20 false alarm trials (13/20 participants).
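
The dimensionality measure described above can be sketched as follows, assuming NumPy is available. For one channel and condition, the trials-by-time matrix is mean-centered, its singular values give the variance captured by each principal component, and the smallest number of components reaching 90% of the variance is expressed as a fraction of the number of trials. The function names are hypothetical, and the centering convention (subtracting the across-trial mean at each time point) is an assumption, since the text does not state it.

```python
import numpy as np


def pca_dimensionality(trials, variance_to_explain=0.90):
    """Number of principal components needed to reach the requested share
    of variance, and that number as a fraction of the number of trials.

    trials: array-like of shape (n_trials, n_times) for one channel.
    """
    x = np.asarray(trials, dtype=float)
    x = x - x.mean(axis=0, keepdims=True)      # assumed centering convention
    singular_values = np.linalg.svd(x, compute_uv=False)
    variance = singular_values ** 2            # variance per component
    total = variance.sum()
    if total == 0:                             # identical trials: no variability
        return 0, 0.0
    cumulative = np.cumsum(variance) / total
    n_components = int(np.searchsorted(cumulative, variance_to_explain) + 1)
    return n_components, n_components / x.shape[0]


def sliding_dimensionality(trials, window=101, step=1):
    """Fraction of dimensions needed in each sliding window of samples."""
    x = np.asarray(trials, dtype=float)
    starts = range(0, x.shape[1] - window + 1, step)
    return [pca_dimensionality(x[:, s:s + window])[1] for s in starts]


# Toy example: perfectly stereotyped trials (same waveform, scaled)
# need a single component.
waveform = np.sin(np.linspace(0, 3, 20))
stereotyped = np.outer(np.arange(1, 11), waveform)
print(pca_dimensionality(stereotyped))
```

At a 1000 Hz sampling rate, a window of 101 samples corresponds to the 101 ms window described in the text; the more the single trials differ from one another, the larger the returned fraction.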

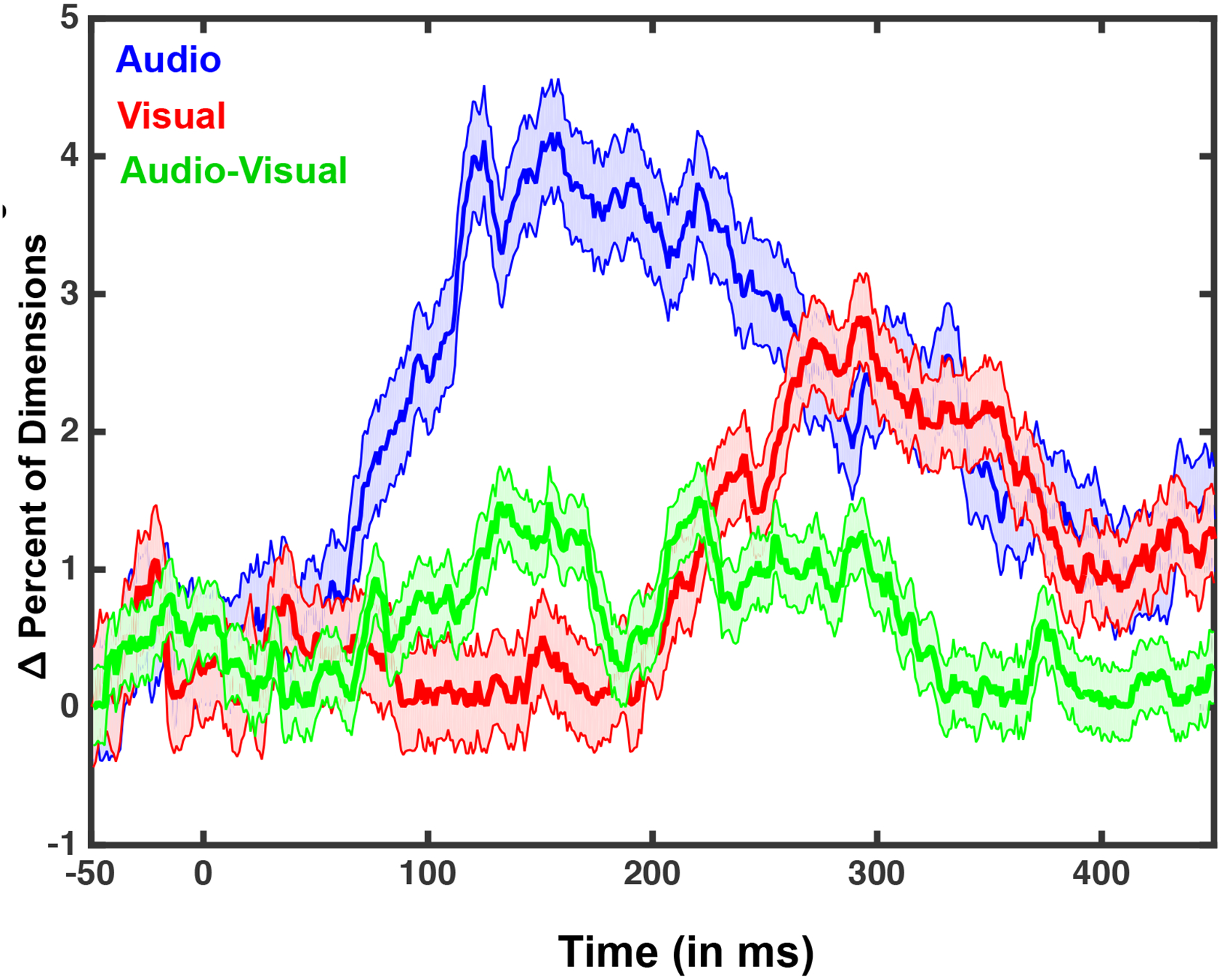

Figure 5. Trial-by-Trial EEG Variability as a Function of Sensory Modality and Perceived State.

For this analysis, principal component analysis (PCA) was performed on all channels and for every participant and the number of dimensions needed to explain 90% of the trial-by-trial variance was calculated. 120 trials were selected for each condition, giving a theoretical maximum dimensionality of 120. The figure illustrates the number of additional dimensions needed (in percentage) to explain trial-by-trial variability in the perceived as opposed to the non-perceived state as a function of time for the three sensory conditions. Results suggest that audio (blue) and visual (red) trials exhibit a marked increase in dimensions needed to explain trial-to-trial variance during the time-course of an epoch, a feature not seen in the audiovisual (green) condition. Shaded areas around curves represent S.E.M. over all participants.

Lempel-Ziv complexity.

Lastly, Lempel-Ziv (LZ) complexity was quantified for each condition, as a measure of complexity indirectly related to functional differentiation/integration (Casali et al., 2013; Koch et al., 2016; Tononi et al., 2016; Sanchez-Vives et al., 2017). LZ is the most popular out of the Kolmogorov class (routinely used to generate TIFF images and ZIP files), and measures the approximate amount of non-redundant information contained within a string by estimating the minimal size of the ‘vocabulary’ necessary to describe the entirety of the information contained within the string in a lossless manner. LZ can be used to quantify distinct patterns in symbolic sequences, especially binary signals. Before applying the LZ algorithm, as implemented in calc_lz_complexity.m (Quang Thai, 2012), we first down-sampled our signal from 1000 to 500 Hz, and converted it to a binary sequence. For every participant and every trial separately we first full-wave rectified the signal and then assigned a value of ‘1’ to a time point if the response was 2 standard deviations above the mean baseline value for that particular trial (−100 to 0 ms post-stimuli onset). If the response was not 2 standard deviations above the mean baseline, a value of ‘0’ was assigned (see Figure 7, left panel). Next, binary strings were constructed for each trial by column-wise concatenating the values at each of the 128 electrodes (Casali et al., 2013) for the entire period post-stimuli. Finally, the LZ complexity algorithm determined the size of the dictionary needed to account for the pattern of binary strings observed. The same procedure was repeated after shuffling the binary data after column-wise concatenation. This procedure was undertaken to calculate surrogate data with a-priori maximal complexity given the entropy in the original dataset. 
Finally, LZ was normalized by expressing it as the fraction of non-shuffled complexity divided by the shuffled version of the measure (see Andrillon et al., 2016 for a similar approach).
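
The binarization, concatenation, and normalization steps above can be sketched in a few lines. This is not the calc_lz_complexity.m routine used in the study: the sketch substitutes a simple phrase-counting implementation of the Lempel-Ziv (1976) parsing, and all function names are hypothetical. It also assumes that the baseline mean and standard deviation are computed on the rectified signal, which the text does not state explicitly.

```python
import random
import statistics


def lz76_complexity(bits):
    """Number of phrases in the Lempel-Ziv (1976) parsing of a string."""
    n, i, count = len(bits), 0, 0
    while i < n:
        length = 1
        # Extend the phrase while it can be copied from the preceding text.
        while i + length <= n and bits[i:i + length] in bits[:i + length - 1]:
            length += 1
        count += 1
        i += length
    return count


def binarize_trial(trial, n_baseline):
    """Rectify each channel, threshold at baseline mean + 2 SD, and
    concatenate column-wise (all channels at time 1, then time 2, ...).

    trial: list of channels, each a list of samples whose first
           n_baseline samples are the pre-stimulus baseline.
    """
    per_channel = []
    for channel in trial:
        rectified = [abs(v) for v in channel]
        baseline = rectified[:n_baseline]
        threshold = statistics.mean(baseline) + 2 * statistics.pstdev(baseline)
        per_channel.append([1 if v > threshold else 0
                            for v in rectified[n_baseline:]])
    n_times = len(per_channel[0])
    return "".join(str(ch[t]) for t in range(n_times) for ch in per_channel)


def normalized_lz(bits, seed=0):
    """LZ complexity divided by that of a shuffled copy (bits non-empty)."""
    shuffled = list(bits)
    random.Random(seed).shuffle(shuffled)
    return lz76_complexity(bits) / lz76_complexity("".join(shuffled))


print(lz76_complexity("0001101001000101"))  # 6 phrases: 0|001|10|100|1000|101
```

Shuffling preserves the proportion of ones and zeros while destroying their ordering, so the ratio indicates how much structure the observed spatio-temporal pattern has relative to a sequence with the same entropy.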

Results

Behavioral – Reaction Time

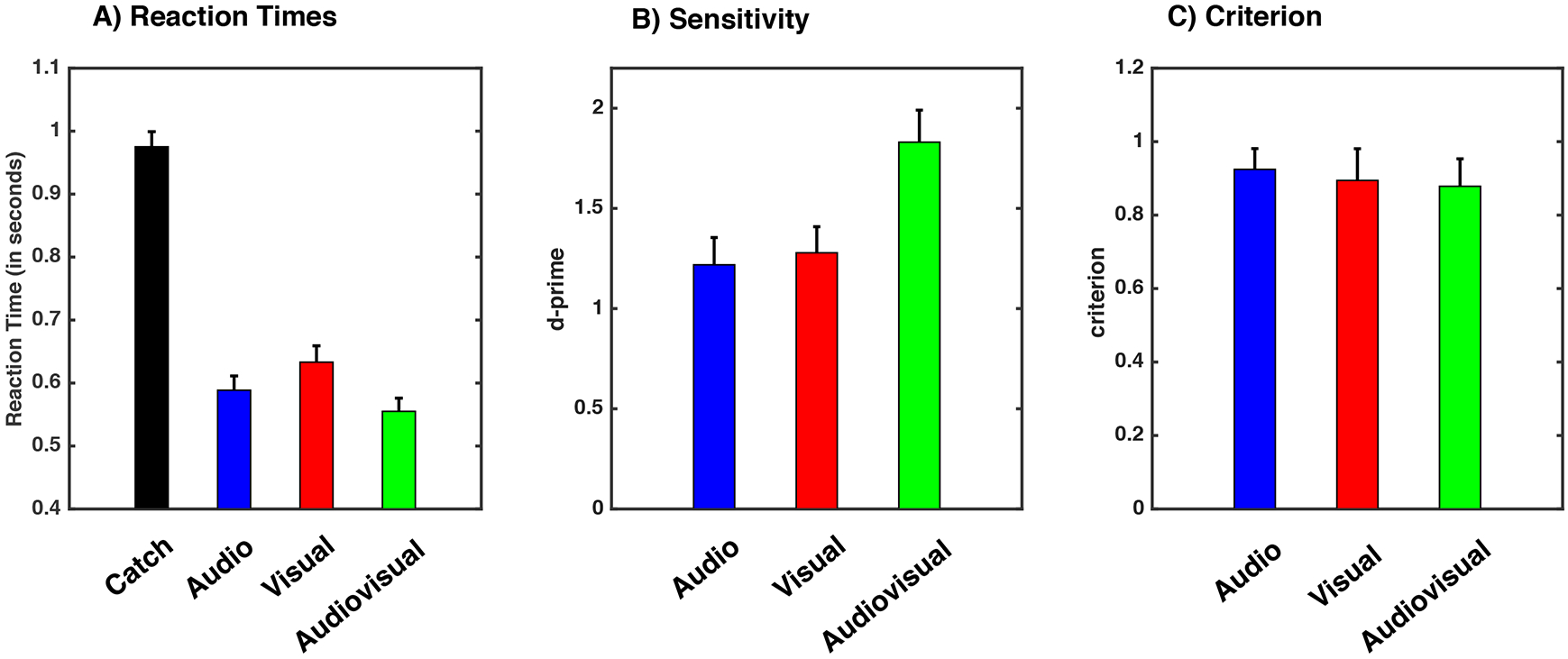

As expected from classical multisensory paradigms, a one-way repeated measures ANOVA with 4 sensory conditions (none, audio, visual, audiovisual) demonstrated a significant effect on reaction times (F(3, 60) = 103.193, p < 0.0001). As illustrated in Figure 2A, this effect may have been driven by false alarms during catch trials, which were very slow (mean catch trials = 0.975 ± 0.10 seconds [mean ± 1 S.E.M]) since no stimulus was presented. In fact, the mean reaction time for catch trials (0.975 seconds) was no different from half of the expected inter-stimulus interval, that is, half of the fixed duration of 800 ms plus the 1000 ms mean of a uniformly distributed random duration between 0 and 2 seconds (one-sample t-test against 0.9 seconds, p = 0.09). That is, on average participants false alarmed halfway through the inter-stimulus interval. Thus, a one-way repeated measures ANOVA with 3 conditions (audio, visual, audiovisual) was performed and demonstrated a significant effect of sensory modality (F(1, 20) = 720.19, p < 0.001, η2 = 0.97). The main effect was driven by the multisensory condition being fastest (M = 0.555 ± 0.09 seconds), followed by the auditory (M = 0.588 ± 0.10 seconds), and then the visual (M = 0.633 ± 0.11 seconds) condition (all comparisons are paired-samples t-tests with p < 0.046, Bonferroni-corrected). Detection of audiovisual stimuli was faster than detection of the fastest unisensory stimulus defined on a subject-by-subject basis (audiovisual versus fastest unisensory, p = 0.012; see Figure 2A and Methods for details).

Figure 2. Psychophysical Results.

A) Mean reaction times per sensory modality condition in response to perceived stimuli. Please note y-axis does not commence at 0 ms, but 400 ms. B) Sensitivity (i.e., d’) and C) criterion for audio (blue), visual (red) and audiovisual (green) conditions. Error bars indicate +1 S.E.M. across participants.

Behavioral – Sensitivity

On average, participants responded “yes” on 8.2% (mean) ± 1.1% (standard error of the mean) of the catch trials (i.e., false alarms), 45.1% ± 3.9% of the audio trials (d’ = 1.21, c = 0.92), 41.7% ± 4.6% of the visual trials (d’ = 1.27, c = 0.89), and 64.8% ± 4.7% of the audiovisual trials (d’ = 1.83, c = 0.88; Figure 2B). Thus, we were successful in driving participants’ performance to a detection rate that allowed the bifurcation of data with regard to perceptual report: perceived versus non-perceived. Note, as illustrated in Figure 1, that false alarm rates remained constant throughout the experiment, suggesting little fatigue or learning effects. A one-way ANOVA and subsequent paired-samples t-tests on sensitivity (i.e., d’) values extracted from signal detection analyses (SDT; Tanner & Swets, 1954; Macmillan & Creelman, 2005) suggested that participants were most sensitive to the multisensory presentations (F(2, 40) = 19.84, p < 0.001; paired-samples t-test on audiovisual d’ versus most detected unisensory d’, p = 0.007). Lastly, response criterion (i.e., c) was unchanged across the different sensory conditions (F(2, 40) = 0.05, p = 0.94; see Figure 2C). Thus, the behavioral data from this task illustrate multisensory facilitation in the form of the frequency, sensitivity, and speed of stimulus detection, while showing no change in response criterion. This last observation is particularly important as it suggests that participants’ overt reports of stimulus detection reflect perceptual awareness as opposed to a change in what they consider ‘reportable’.

Global Field Power

The topographic consistency test (TCT; Koenig & Melie-García, 2010) over the entire post-stimulus interval demonstrated a reliable evoked potential when subjects were presented with auditory, visual, or audiovisual stimuli, both when participants reported perceiving the stimuli and when they did not (all p < 0.01, FDR corrected). In contrast, no consistent evoked potential was apparent during catch trials, regardless of whether participants reported a stimulus or not (all p > 0.08, FDR corrected).

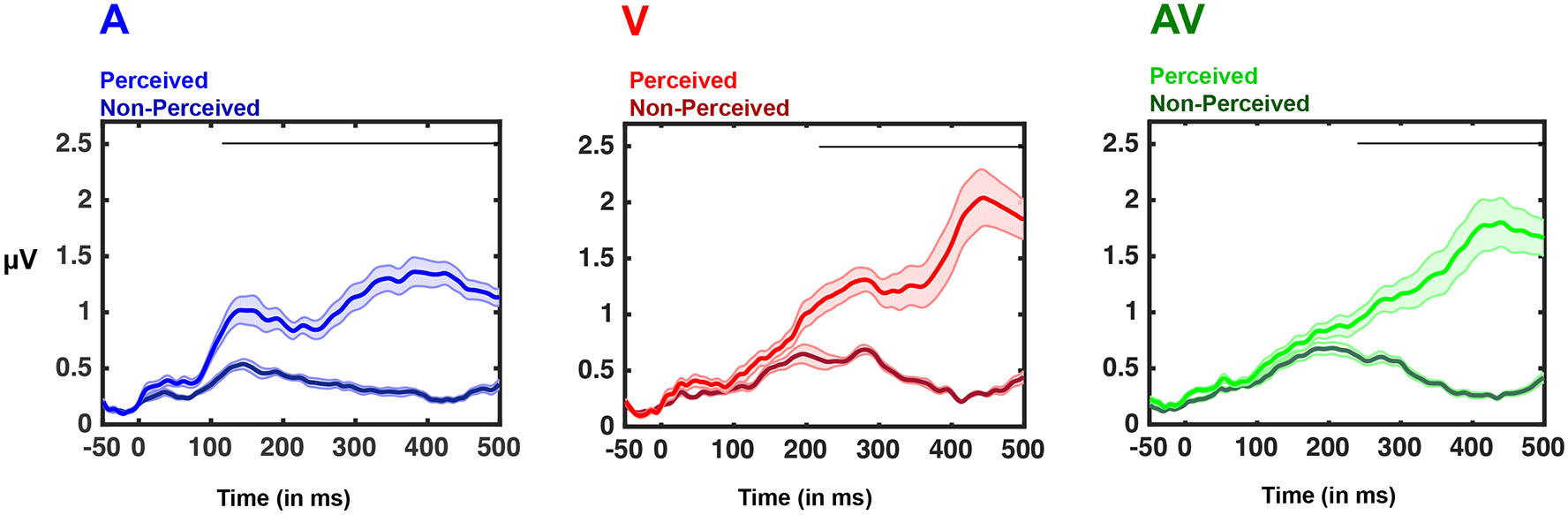

For auditory stimuli, examination of the temporal time-course of evoked potentials revealed deviations from baseline between 64 and 112 ms post-stimulus and then from 134 ms post stimulus throughout the rest of the epoch for trials in which the stimulus was perceived, and for the interval between 72 ms and 448 ms post-stimulus and then again from 461 ms post stimulus throughout the rest of the epoch when the stimuli were not perceived. For visual stimuli, deviations from baseline were seen between 76 and 90 ms post-stimulus and then from 138 ms post stimulus throughout the rest of the epoch when the stimuli were perceived, and between 90 ms and 354 ms post-stimulus and then from 387 ms post stimulus throughout the rest of the epoch when the stimuli were not perceived. Finally, for the audiovisual condition evoked potentials were consistently seen beginning at 45 ms post-stimulus and throughout the rest of the epoch when the stimuli were perceived, and beginning at 92 ms post-stimulus and throughout the rest of the epoch when the stimuli were not perceived.

Contrasts of the Global Field Power (GFP) between conditions demonstrated a significant difference between perceived versus non-perceived stimuli for each of the three sensory conditions (see Figure 3). The statistically significant difference between perceptual states (i.e., main effect of perceptual state in a 2 [perceptual state] x 3 [sensory modality (excluding catch trials)] repeated-measures ANOVA, N = 19, all p < 0.01) was transient for the interval spanning 53-72 ms post-stimulus onset and sustained after 102 ms, with an almost complete absence of late (i.e., +300 ms) response components for non-perceived stimuli (see Gaillard et al., 2009; Del Cul et al., 2007; Dehaene et al., 2001; Sergent et al., 2005; Sperdin et al., 2014; Sanchez et al., 2017, for similar results, as well as Dehaene & Changeux, 2011 for a review). Stated simply, both perceived and non-perceived stimuli generated similar early sensory responses (< ~120 ms post-stimulus onset). In contrast, the presence of relatively late (> ~120 ms post-stimulus onset) response components was associated with perceived stimuli. Also statistically significant was the main effect of stimulus modality in the intervals between 110-131 ms post-stimulus (N = 19, all p < 0.01; this likely reflects auditory evoked potentials) and between 194-240 ms post-stimulus (N = 19, all p < 0.01; this likely reflects visual evoked potentials; Luck, 2005). Not surprisingly given the lack of significant evoked potentials in these conditions (see above), paired-samples t-tests revealed no difference in the GFP evoked by ‘perceived’ and ‘non-perceived’ catch trials (all t(12) < 1, all p > 0.57), although this analysis relied on a considerably reduced number of trials (see Methods). Further, results revealed a significant interaction between perceptual state and sensory condition from 115 ms post-stimulus onset onward. Separate t-tests across perceptual states (perceived vs. non-perceived) for the different sensory conditions (audio, visual, and audiovisual) revealed that for auditory stimuli, the GFP diverged for perceived vs. non-perceived stimuli at 121 ms post-stimulus onset. For visual stimuli this divergence occurred at 219 ms, while for multisensory stimuli the divergence began 234 ms after stimulus onset.

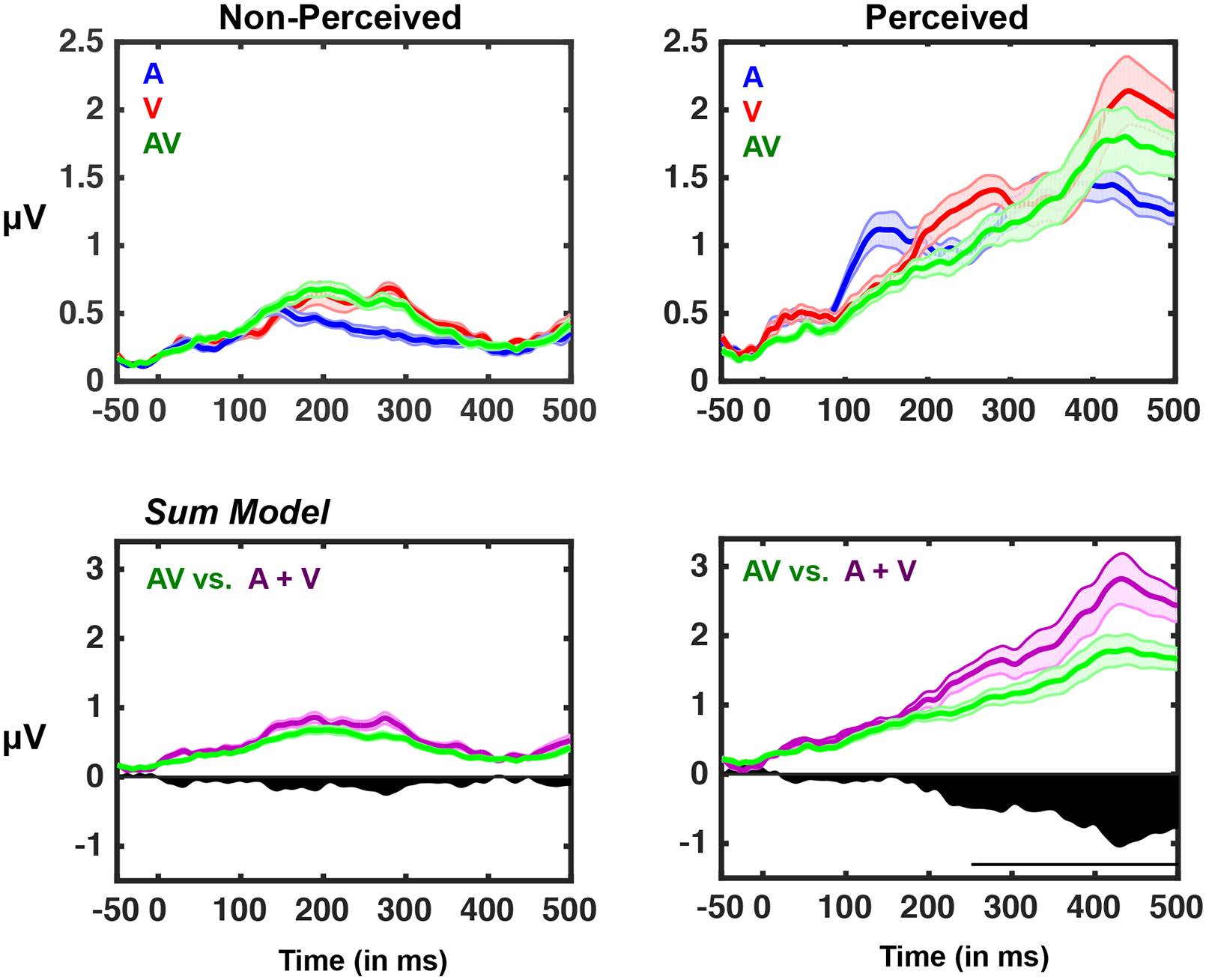

Figure 3. Audio, Visual, and Audiovisual Global Field Power.

Mean global field power (GFP) across the entire montage of electrodes for each experimental condition; auditory (blue), visual (red), and audiovisual (green). Lighter shades are used for perceived stimuli, while darker colors are used for non-perceived. Shaded areas represent S.E.M. over all participants and black bars indicate intervals over which GFP was significantly different (p < 0.01) across perceptual states. On the x-axis, 0 indicates stimulus onset.
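For readers unfamiliar with the measure, GFP at each time point is conventionally defined as the spatial standard deviation of the average-referenced potential across all electrodes. The sketch below illustrates that definition with NumPy. The function name, array layout, montage size, and epoch length are assumptions made for illustration and do not reproduce the processing pipeline described in the Methods.

```python
import numpy as np


def global_field_power(erp: np.ndarray) -> np.ndarray:
    """GFP per time point for an evoked potential of shape (n_electrodes, n_times)."""
    # Re-reference each time point to the mean across electrodes
    referenced = erp - erp.mean(axis=0, keepdims=True)
    # Root-mean-square across electrodes equals the spatial standard deviation
    return np.sqrt((referenced ** 2).mean(axis=0))


# Hypothetical data: 128 electrodes, 500 time samples of simulated noise
rng = np.random.default_rng(0)
erp = rng.normal(size=(128, 500))
gfp = global_field_power(erp)
print(gfp.shape)  # (500,)
```

Because GFP collapses the montage into a single non-negative trace, it indexes response strength independently of the reference electrode but carries no topographic information.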

Next, we determined whether the difference in GFP magnitude for perceived vs. non-perceived multisensory stimuli could be explained by a simple combination of the unisensory responses. To do so, we compared the multisensory responses (perceived and non-perceived) to the sum of the unisensory responses (perceived and non-perceived; see Cappe et al., 2010, 2012 for a similar analysis). Specifically, the evoked potentials for the unisensory conditions were first summed and then the GFP was extracted (see Methods). This analysis showed a significant main effect of sensory modality (A + V > AV; see Figure 4) beginning at 183 ms (N = 19, repeated-measures ANOVA, all p < 0.01), and a main effect of perceptual state (perceived > non-perceived; see Figure 4, bottom panel) between 97-188 ms post-stimulus onset and from 222 ms onward (N = 19, repeated-measures ANOVA, all p < 0.01). Most importantly, the results indicated a significant interaction such that multisensory responses to perceived stimuli were weaker than the sum of the two unisensory responses in a manner that differed significantly from the comparison of multisensory responses to non-perceived stimuli (N = 19, 2 [perceptual state] x 2 [sum unisensory vs. multisensory] repeated-measures ANOVA interaction, all p < 0.01, 251 ms onward; see Figure 4; dark area, and line indicating significance). Follow-up analyses using paired t-tests showed no difference between the pair and the sum when stimuli were not perceived (all p > 0.043), but a difference between these conditions beginning 194 ms post-stimulus onset (p < 0.01) when the stimuli were perceived.

Figure 4. Global Field Power as a Function of Sensory Modality, Perceived State and Comparisons between the Sum of Unisensory Conditions to Multisensory Condition.

Top row: Same as Figure 2, with average GFP traces over all participants for the three sensory conditions superimposed for non-perceived (left) and perceived (right) trials. Bottom row: a linear model of GFP depicting the actual multisensory response (green) relative to an additive model (sum; A + V) (purple). Black area below the 0 microvolts line represents the difference between predicted and actual multisensory responses. Note the strong deviations from summation for the perceived multisensory conditions, and the lack of such differences for the non-perceived conditions. The black horizontal bar indicates significant difference (p < 0.01) between the GFP of the summed unisensory evoked potential and the GFP of the multisensory condition when perceived. Shaded area represents +/− 1 S.E.M. across participants.
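The sum-versus-pair comparison can be sketched as follows: the two unisensory evoked potentials are summed electrode by electrode, GFP is extracted from that sum, and the result is compared with the GFP of the audiovisual response. The function names and the toy arrays are hypothetical, and the statistical testing across participants is omitted.

```python
import numpy as np


def gfp(erp: np.ndarray) -> np.ndarray:
    """Spatial standard deviation across electrodes; erp is (n_electrodes, n_times)."""
    referenced = erp - erp.mean(axis=0, keepdims=True)
    return np.sqrt((referenced ** 2).mean(axis=0))


def sum_vs_pair(erp_a: np.ndarray, erp_v: np.ndarray, erp_av: np.ndarray):
    """Return (gfp_sum, gfp_pair, difference) per time point.

    The unisensory potentials are summed first and GFP is taken afterwards,
    because GFP is nonlinear: gfp(A + V) is not gfp(A) + gfp(V) in general.
    """
    gfp_sum = gfp(erp_a + erp_v)
    gfp_pair = gfp(erp_av)
    # Negative differences indicate a subadditive multisensory response
    return gfp_sum, gfp_pair, gfp_pair - gfp_sum


# Toy example with 2 electrodes and 2 time points (values are made up)
erp_a = np.array([[1.0, 0.0], [-1.0, 0.0]])
erp_v = np.array([[1.0, 0.0], [-1.0, 0.0]])
erp_av = np.array([[1.0, 0.0], [-1.0, 0.0]])
gfp_sum, gfp_pair, diff = sum_vs_pair(erp_a, erp_v, erp_av)
print(diff)
```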

Collectively, these GFP results highlight that audiovisual stimuli that are perceived result in late evoked potentials that are not present when stimuli are not perceived, mirroring what has been well established within the visual neurosciences (e.g., see Dehaene & Changeux, 2011 for a review), and what seems to be emerging within the auditory neurosciences (e.g., see Sadaghiani et al., 2009). Interestingly, this late component exhibits sub-additivity when contrasting the sum of the unisensory conditions and the multisensory condition (e.g., see Cappe et al., 2012 for similar results), an observation that does not hold when stimuli are not perceived, due to the lack of late evoked potentials.

Inter-Trial Variability

To extend analyses beyond response strength, we further employed measures that capture the variability (i.e., reproducibility) and complexity (next section) of EEG responses. Specifically, there are several measures that have been leveraged successfully for the characterization and differentiation of states of consciousness (e.g., coma versus awake versus anesthetized versus dreaming; Casali et al., 2013; Schurger et al., 2015; Ecker et al., 2014). In the current work, we implement a relatively straightforward version of this strategy. To evaluate response variability across sensory conditions and perceptual states, we performed principal component analysis (PCA) on the EEG signal for each trial and participant on an electrode-by-electrode basis, and identified the minimum number of principal components needed to capture 90% of the trial-to-trial variability (McIntosh et al., 2008). As illustrated in Figure 5, more dimensions were needed to account for inter-trial response variability of perceived (vs. non-perceived) conditions. However, this difference was more prominent for unisensory conditions compared to multisensory conditions (Figure 5). More specifically, a 2 (perceived vs. non-perceived) x 3 (sensory modality; A, V, AV) repeated-measures ANOVA demonstrated a significant main effect of sensory modality beginning 107 ms post-stimulus onset and persisting throughout the entire epoch (p < 0.01), a main effect of perceptual state beginning at 95 ms post-stimulus onset and persisting throughout the rest of the epoch (p < 0.01), and a significant interaction between these variables beginning 99 ms post-stimulus onset and persisting throughout the rest of the epoch (p < 0.01). The interaction is explained by a difference in the time at which the PCA dimensionality bifurcated between perceptual states (if at all) for the different sensory conditions. 
For the unisensory conditions, beginning at 91 ms following the auditory stimulus and at 239 ms following the visual stimulus, there was a significant increase in response variability for trials in which the stimulus was perceived (p < 0.01; N = 19, paired-samples t-test, for both contrasts; Figure 5). In contrast, this increased variability for perceived trials was not apparent for the audiovisual stimuli (p > 0.09, N = 19, paired-samples t-test). Inter-trial variability as quantified by the PCA analysis was similar across perceptual states for the catch trials (all t(12) < 1, p > 0.74).
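The dimensionality measure described above can be sketched as follows: for a matrix of single-trial responses, count the smallest number of principal components whose cumulative explained variance reaches 90%. This is a minimal illustration that assumes a trials-by-samples matrix from a single electrode; the function name and the simulated data are hypothetical, and the time-resolved windowing used in the actual analysis is not reproduced.

```python
import numpy as np


def n_components_for_variance(trials: np.ndarray, threshold: float = 0.90) -> int:
    """Smallest number of principal components explaining `threshold` of the variance.

    trials: array of shape (n_trials, n_samples), e.g. one electrode's epochs.
    """
    centered = trials - trials.mean(axis=0, keepdims=True)
    # Squared singular values of the centered data are proportional to PCA variances
    singular_values = np.linalg.svd(centered, compute_uv=False)
    explained = singular_values ** 2
    total = explained.sum()
    if total == 0:
        return 0  # all trials identical: no variability to explain
    cumulative = np.cumsum(explained) / total
    # Index of the first component at which the cumulative ratio reaches the threshold
    return int(np.searchsorted(cumulative, threshold) + 1)


# Hypothetical data: 60 trials, 200 samples of simulated noise
rng = np.random.default_rng(1)
trials = rng.normal(size=(60, 200))
print(n_components_for_variance(trials))
```

Under this definition, a larger count means that trial-to-trial variability is spread over more dimensions, i.e., that responses are less reproducible.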

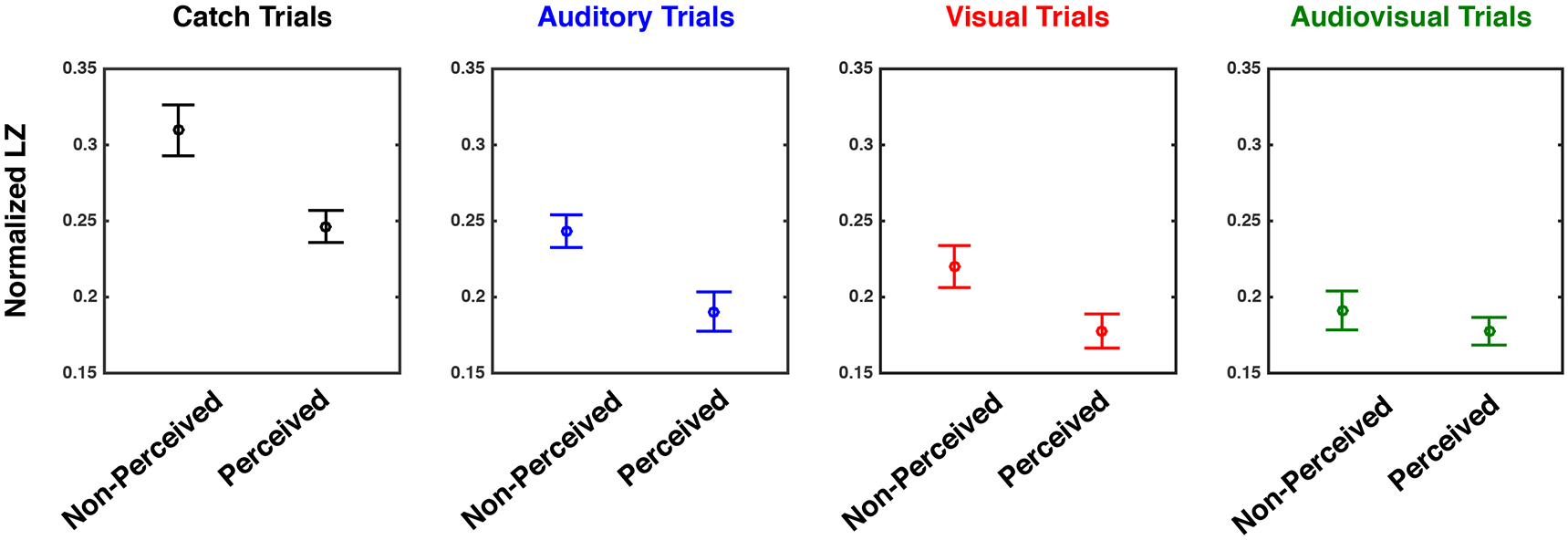

EEG Complexity

The final theory-driven measure of interest here is a measure of capacity for information reduction: Lempel-Ziv (LZ) complexity. This measure is of interest due to recent observations indicating that perceptual awareness may not emanate simply from the recruitment of broadly distributed networks, but rather from the differentiation and integration of activity among these networks (see Cavanna et al., 2017 for a recent review). These networks are postulated to fulfill axiomatic observations related to awareness (Tononi & Koch, 2015) that embody complex neural signatures of that mental state. Thus, here, LZ complexity (a measure of information reducibility) was measured across the post-stimulus period of audio, visual, and audiovisual stimuli that were either perceived or not, and we queried whether similar patterns of complexity would apply across modalities (i.e., from visual to auditory) and number of modalities (i.e., from unisensory to multisensory). As illustrated in Figure 6, a 4 (sensory modality; none, audio, visual, audiovisual) x 2 (perceived vs. non-perceived) repeated-measures ANOVA revealed a significant main effect for sensory modality (F(3, 57) = 44.92, p < 0.001), a significant main effect for perceptual state (F(1, 18) = 40.82, p < 0.001), and a significant interaction between these variables (F(3, 57) = 3.21, p = 0.029). The main effect of perceptual state was due to higher complexity for non-perceived stimuli (M = 0.24, S.E.M = 0.01) than for perceived stimuli (M = 0.19, S.E.M = 0.005; paired t-test, t(18) = 6.32, p < 0.001). Regarding the main effect of sensory modality, post-hoc paired t-tests (Bonferroni corrected) revealed that catch trials exhibited the most informationally complex patterns of activity, on average (M = 0.27, S.E.M = 0.007, all p < 0.001), followed by auditory evoked potentials (M = 0.21, S.E.M = 0.010, contrasts to catch and audiovisual conditions significant with all p < 0.03, but not the contrast to visual trials, p = 0.659), followed by visual evoked potentials (M = 0.19, S.E.M = 0.010, contrast to audiovisual trials being non-significant, p = 0.253), and finally by the multisensory evoked potentials (M = 0.18, S.E.M = 0.008). The complexity of these multisensory responses was not significantly different from that of visual responses. The significant interaction was driven by the fact that there was a significant difference in evoked complexity between perceptual states (perceived vs. non-perceived) for catch trials (perceived; M = 0.24, S.E.M = 0.03, non-perceived; M = 0.30, S.E.M = 0.06, t(19) = 3.40, p = 0.003), auditory trials (perceived; M = 0.19, S.E.M = 0.05, non-perceived; M = 0.24, S.E.M = 0.04, t(19) = 6.63, p < 0.001), and visual trials (perceived; M = 0.17, S.E.M = 0.04, non-perceived; M = 0.22, S.E.M = 0.05, t(19) = 4.45, p < 0.001). In contrast, this difference was not seen for audiovisual trials (perceived; M = 0.17, S.E.M = 0.03, non-perceived; M = 0.19, S.E.M = 0.04, t(19) = 1.32, p = 0.203). In fact, for the multisensory condition, Bayesian statistics suggested not only an absence of evidence against the null hypothesis (as inferred via the frequentist analyses described above), but considerable evidence for it (BF10 = 0.298 < 0.33, the cutoff typically suggested as favoring the null hypothesis; Jeffreys, 1961). Taken together, these analyses suggest that while EEG complexity is generally decreased when stimuli are perceived (vs. non-perceived and normalizing for overall entropy) for unisensory stimuli, this is not true for multisensory stimuli. Interestingly, the decrease in complexity is also observed during catch trials when participants report perceiving a stimulus that is not present. Thus, the decrease in EEG evoked complexity is not only associated with physical stimulation, but seemingly also with perceptual state.

Figure 6. Neural Complexity Differs as a Function of Perceived State and Sensory modality.

Lempel-Ziv Complexity as a function of experimental condition; catch (leftmost), auditory (second panel), visual (third panel), or audiovisual (rightmost) panels. Results suggest a significant difference between detected and non-detected stimuli for catch (black), auditory (blue), and visual (red) conditions, but not for audiovisual (green) trials. Y-axis is normalized Lempel-Ziv during the entire post-stimuli epoch (LZ for un-shuffled data divided by shuffled data). Error bars indicate +1 S.E.M. across participants.
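To make the normalization in Figure 6 concrete, the sketch below computes a Lempel-Ziv (1976) phrase count on a binarized signal and divides it by the mean count obtained from shuffled copies of the same sequence. Binarizing around the median, the number of shuffles, and all function names are assumptions made for illustration; the binarization and shuffling details of the actual analysis are given in the Methods and may differ.

```python
import random
from statistics import median


def lempel_ziv_complexity(sequence: str) -> int:
    """Number of phrases in the Lempel-Ziv (1976) parsing of a symbol string."""
    n = len(sequence)
    i, count = 0, 0
    while i < n:
        length = 1
        # Extend the phrase while it can still be copied from earlier material
        # (the search window excludes only the phrase's own last symbol)
        while i + length <= n and sequence[i:i + length] in sequence[:i + length - 1]:
            length += 1
        count += 1
        i += length
    return count


def binarize(signal) -> str:
    """Map samples above the median to '1' and all others to '0' (assumed scheme)."""
    threshold = median(signal)
    return "".join("1" if x > threshold else "0" for x in signal)


def normalized_lz(signal, n_shuffles: int = 20, seed: int = 0) -> float:
    """LZ complexity of the signal divided by the mean LZ of shuffled surrogates."""
    rng = random.Random(seed)
    binary = binarize(signal)
    raw = lempel_ziv_complexity(binary)
    symbols = list(binary)
    shuffled_scores = []
    for _ in range(n_shuffles):
        rng.shuffle(symbols)  # destroys temporal structure, keeps symbol counts
        shuffled_scores.append(lempel_ziv_complexity("".join(symbols)))
    return raw / (sum(shuffled_scores) / n_shuffles)


# A strictly alternating (highly compressible) toy signal
print(normalized_lz([0, 1] * 50))
```

Values well below 1 indicate a signal that is far more compressible than its shuffled surrogates, whereas values near 1 indicate little temporal structure beyond the symbol frequencies.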

Discussion

A number of different neural markers of perceptual awareness have been proposed: from “neural ignition” and the presence of late evoked potentials (P3, P300, P3b; Dehaene & Changeux, 2011; Dehaene et al., 2017), to increased neural reproducibility (Schurger et al., 2010), to a high degree of information integration that can be indexed through measures such as EEG complexity (Casali et al., 2013; Tononi et al., 2016; Koch et al., 2016). Here, we sought to extend the use of these various measures posited to represent credible neural signatures of perceptual awareness for visual stimuli to multisensory perceptual processes, as much of our perceptual gestalt is constructed on a multisensory foundation. Collectively, our results support and extend prior work implicating neural signatures of perceptual awareness revealed in measures of EEG response strength, reproducibility, and complexity. We show, as has earlier work, that reproducibility and complexity indices of perceptual awareness are similar for visual and auditory conditions, but we also show that there exist significant differences in the indices of awareness associated with multisensory stimulation, differences that likely have important implications for furthering our understanding of multisensory perceptual awareness.

Neural Response Strength as a Modality-Free Indicator of Perceptual Awareness

More specifically, conditions in which visual, auditory or both visual and auditory stimuli were presented resulted in reliable variations in EEG response strength (as indexed via global field power, GFP) that covaried with perceptual state (i.e., was the stimulus perceived or not). In each of these conditions, comparison of perceived vs. non-perceived stimuli revealed late evoked potentials that were only present under perceived circumstances. Thus, the presence of late evoked potentials appears to be a strong index of perceptual awareness under both unisensory and multisensory conditions. The striking absence of late EEG components to non-perceived stimuli resembles “ignition”-like single unit responses to perceived stimuli that have been found in the temporal lobe of epileptic patients (Dehaene, 2014). This response pattern fits the assumption that conscious percepts arise late in the evolution of sensory responses, possibly because they necessitate more global brain activity (Dehaene & Changeux, 2011; Gaillard et al., 2009; Noy et al., 2015). This “ignition-like” effect, which at times has been difficult to capture in previous work (e.g., Silverstein et al., 2015), likely results from several aspects of the current experiment. First, it may be argued that the lack of observable late responses in EEG signals may be due to our adaptive, online method of adjusting stimulus intensity, and not reflective of the manner in which individuals become aware of stimuli. This account, however, does not fully explain the GFP effects, as EEG analyses were restricted to the last 400-500 trials, in which auditory and visual noise levels were relatively fixed in intensity and the minimal changes in stimulus intensity did not provoke a change in GFP (see Control Analyses; Figure S1 online). 
Second, the current experiment is different from most previous EEG studies presenting stimuli at threshold (and demonstrating the occurrence of late EEG components, e.g., see Koch et al., 2004) in that here we interleave stimuli from different modalities (see Sanchez et al., 2017 for a similar observation of abolished late evoked responses for undetected stimuli in a multisensory context). Finally, it is possible that the clear presence of late evoked potentials in perceived trials but not in non-perceived trials arises because participants were working below the 50% detection rate and not at threshold (most prior work presented stimuli at threshold).

EEG Subadditivity in Multisensory Integration is Associated with Perceived Stimuli

A second interesting observation regarding the GFP results relates to the comparison between the sum of unisensory evoked potentials (“sum”) and the multisensory response (“pair”). When stimuli were not perceived there was no significant difference between the multisensory GFP and the GFP predicted by the sum of unisensory responses (i.e., no difference between sum and pair). In contrast, when the stimuli were perceived, the GFP of the audiovisual condition was distinctly subadditive when compared with the sum of the unisensory responses. Hence, although neural response strength (i.e., GFP) differentiates between perceptual states under both unisensory and multisensory conditions, the perceived multisensory response does not reflect a simple addition of the two unisensory responses. Indeed, subadditivity in EEG responses is often seen as a hallmark of multisensory processing (see Cappe et al., 2010, 2012, for example), and here it was evident only under perceived multisensory conditions, suggesting links between multisensory integration and perceptual awareness (see Baars, 2002, for a philosophical consideration arguing that conscious processing is involved in the merging of sensory modalities). While a number of studies suggest that multisensory interactions may occur when information from a single sense is below the threshold for perceptual awareness (Lunghi & Alais, 2013; Lunghi et al., 2014; Aller et al., 2015; Salomon et al., 2015; 2016), or when both are presented at subthreshold levels following a period of associative learning (Faivre et al., 2014), or even when participants are unconscious (Beh & Barratt, 1965; Ikeda & Morotomi, 1996; Arzi et al., 2012), evidence for multisensory integration in the complete absence of perceptual awareness (without prior training) is conspicuously lacking (Noel et al., 2015; Faivre et al., 2017). 
The current results provide additional support for the absence of multisensory integration outside of perceptual awareness, but, as null results, must be interpreted with caution.

Across trial EEG Reproducibility Differentiates Between Perceived and Non-Perceived Unisensory but not Multisensory Stimuli

The next putative index of perceptual awareness used in the current study was that of neural reproducibility (Schurger et al., 2015). This measure is predicated on the view that spatio-temporal neural patterns giving rise to subjective experience manifest as relatively stable epochs of neural activity (Fingelkurts et al., 2013; Britz et al., 2014). To address the stability of responses, we measured inter-trial variability via a relatively straightforward metric, i.e., PCA. Those results disclosed similar levels of neural reproducibility for visual and auditory conditions (although with different time-courses), and a categorically distinct pattern for multisensory presentations. Specifically, there was no difference in neural reproducibility across trials for perceived vs. non-perceived trials for the multisensory conditions, but there were reliable differences associated with the unisensory conditions. The increased variability for perceived unisensory stimuli runs counter to the view that responses to perceived trials are more reproducible (Schurger et al., 2010; Xue et al., 2010). However, we did not observe late response components to non-perceived stimuli, which reduces the number of principal components that are needed to explain the variance of this part of the response. Indeed, the increase in principal components that are needed to explain the trial-to-trial variability for the perceived stimuli occurs very close in time to the bifurcation between perceived and non-perceived GFPs (auditory: GFP at 121 ms vs. PCA-dimensionality increase at 91 ms; visual: GFP at 219 ms vs. PCA-dimensionality increase at 239 ms). 
Thus, the relevant observation here is that both the strength (as indexed via GFP analyses) and the between-trial variability (as indexed via PCA analyses) seen in response to perceived multisensory stimuli are reduced in comparison to the unisensory conditions, with both of these effects appearing around the same time in the neurophysiological responses. On the other hand, in contrast to the observation that late evoked potentials seemingly index perceptual awareness regardless of sensory modality, the increase in reproducibility associated with perceived stimuli (Schurger et al., 2015) is most readily evident for multisensory stimuli. That is, while the observation derived from the visual neurosciences indicating increased reproducibility for perceived stimuli (Schurger et al., 2015) may be applied to the auditory neurosciences (the same pattern of results holds for the auditory and visual modalities, although at different latencies), the PCA results seem categorically different when probing perceived and non-perceived multisensory stimuli. These results highlight that, at least in the case of neural reproducibility, conclusions drawn from unisensory studies may not generalize to multisensory studies for work attempting to better understand the neural correlates of perceptual awareness.

The finding that signals of neural variability under multisensory conditions changed little as a function of perceptual state is consistent with computational models based on Bayesian inference (e.g., Kording et al., 2007) and Maximum Likelihood Estimates (MLE). These models have been applied to psychophysical (Ernst & Banks, 2002), neuroimaging (Rohe et al., 2015; 2016) and electrophysiological (Fetsch, DeAngelis, & Angelaki, 2013; Boyle et al., 2017) observations concerning supra-threshold multisensory performance, and collectively illustrate that the combination of sensory information across different modalities tends to decrease variability (i.e., increases signal reliability). Although the current study was not designed or analyzed to specifically pinpoint neural concomitants of multisensory integration, our findings may inform the models mentioned above by showing that, at least for the task employed in the current study, variance in the evoked neural response is more comparable across perceptual states for multisensory conditions compared to unisensory conditions. Interestingly, stimulus-induced reduction in neural variability has been observed across a wide array of brain areas, and has been posited to be a general property of cortex in response to stimulus onset (Churchland et al., 2010). In subsequent work it will be informative to examine whether, at the level of single neurons, variability (as measured through indices such as the Fano factor; Eden & Kramer, 2010) decreases equally across perceptual states (while maintaining stimulus intensity near detection threshold) and whether these changes differ for unisensory brain responses compared to multisensory responses.
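The two quantities referred to above can be illustrated with a short sketch. Under the standard MLE cue-combination model, two independent Gaussian estimates with variances σ²A and σ²V combine into an estimate with variance σ²Aσ²V / (σ²A + σ²V), which is never larger than either unisensory variance. The Fano factor is the variance of spike counts divided by their mean. Function names and example values are hypothetical and are not drawn from the present data.

```python
from statistics import mean, variance


def mle_combined_variance(var_a: float, var_v: float) -> float:
    """Variance of the optimally combined estimate from two independent Gaussian cues."""
    return (var_a * var_v) / (var_a + var_v)


def mle_weights(var_a: float, var_v: float) -> tuple[float, float]:
    """Reliability-based weights (w_a, w_v); the less variable cue gets the larger weight."""
    w_a = var_v / (var_a + var_v)
    return w_a, 1.0 - w_a


def fano_factor(spike_counts) -> float:
    """Sample variance of spike counts across trials divided by their mean."""
    return variance(spike_counts) / mean(spike_counts)


# Made-up unisensory variances: the combined variance falls below both
print(mle_combined_variance(4.0, 4.0))  # 2.0
print(mle_weights(1.0, 3.0))            # (0.75, 0.25)
# Made-up spike counts from four trials
print(fano_factor([2.0, 4.0, 4.0, 6.0]))
```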

EEG Complexity Differentiates Between Perceived and Non-Perceived Unisensory but not Multisensory Stimuli

Finally, consider the aspect of our results dealing with the neural complexity of evoked responses to visual, auditory, or audiovisual stimuli as a function of perceptual state. In previous work, a derivative of this measure has successfully categorized patients along the continuum ranging from awake to asleep to minimally conscious and, finally, to comatose (see Casali et al., 2013). This work has shown that when neural responses are evoked via transcranial magnetic stimulation (TMS), they are less amenable to information compression when patients are conscious relative to when they are unconscious. To our knowledge, however, the present report is the first to examine EEG data complexity (compressibility) as a function of perceptual state and not as a function of level of consciousness. Our results indicate that evoked responses are less complex when either visual or auditory stimuli are perceived (compared to non-perceived). Interestingly, this difference was not evident under multisensory conditions. Further, this measure was able to differentiate between the catch trials that were correctly “rejected” (i.e., no stimulus reported when no stimulus was presented) and false alarms (i.e., reports of the presence of a stimulus when none was presented, a possible analog of a hallucination). The switch in effect direction between levels of consciousness (i.e., more complex when patients are conscious) and perceptual state (i.e., more complex when stimuli are not perceived) is likely due to the fact that in the former case neural responses are artificially evoked, thus recruiting neural networks in a non-natural manner, while in the current case neural responses are evoked by true stimulus presentations. As an example, in the case of visual stimulus presentations, the present results indicate that neural information in the visual neural network architecture is more stereotyped for perceived vs. non-perceived trials.

Conclusions

Taken together, the overall pattern of results 1) questions whether multisensory integration is possible prior to perceptual awareness (see Spence & Bayne, 2014 and O’Callaghan, 2017 for distinct perspectives on whether perceptual awareness may be uniquely multisensory or simply a succession of unisensory processes), and 2) questions the implicit assumption that all indices of perceptual awareness apply across all sensory modalities and conditions. Indeed, if one assumes that the search for the neural correlates of perceptual awareness must result in a set of features that are common across all sensory domains (e.g., visual awareness, auditory awareness, audiovisual awareness), then the current findings would argue that the presence of late evoked potentials, as opposed to neural reproducibility or complexity, most closely tracks perceptual awareness. On the other hand, if one instead assumes that visual awareness, auditory awareness, and audiovisual awareness are categorically distinct (or non-existent in the case of multisensory awareness; Spence & Bayne, 2014), then the current findings suggest a greater similarity between the neural correlates of perceptual awareness across visual and auditory modalities, and not between unisensory and multisensory perceptual processes.

Acknowledgements

The authors thank Dr. Nathan Faivre for insightful comments on an early draft. The work was supported by an NSF GRF to J.P.N., by NIH grant HD083211 to M.T.W, and by Centennial Research Funds from Vanderbilt University to R.B.

Footnotes

Competing Interests

The authors declare no competing interests.

References

- Aller M, Giani A, Conrad V, et al. A spatially collocated sound thrusts a flash into awareness. Front Integrat Neurosci 2015; 9:16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Andrillon T, Poulsen AT, Hansen LK et al. Neural markers of responsiveness to the environment in human sleep. J Neurosci 2016;36:6583–96 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arzi A, Shedlesky L, Ben-Shaul M et al. Humans can learn new information during sleep. Nat Neurosci 2012; 15:1460–5. [DOI] [PubMed] [Google Scholar]

- Baars BJ. (2002). The conscious access hypothesis: origins and recent evidence. Trends Cognit Sci; 6:47–52 [DOI] [PubMed] [Google Scholar]

- Beh HC, Barratt PEH. Discrimination and conditioning during sleep as indicated by the electroencephalogram. Science 1965; 147:1470–1. [DOI] [PubMed] [Google Scholar]

- Berman M, Yourganov G, Askren MK, Ayduk O, Casey BJ, Gotlib IH, Kross E, McIntosh AR, Strother SC, Wilson NL, Zayas V, Mischel W, Shoda Y, & Jonides J (2013). Dimensionality of brain networks linked to life-long individual differences in self-control. Nature Communications, Article # 1373 (doi: 10.1038/ncomms2374) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blanke O, Slater M, & Serino A (2015). Behavioral, Neural, and Computational Principles of Bodily Self-Consciousness. Neuron, vol. 88, num. 1, p. 145–66. [DOI] [PubMed] [Google Scholar]

- Boyle SC, Kayser SJ, Kayser C (2017). Neural correlates of multisensory reliability and perceptual weights emerge at early latencies during audio-visual integration. European Journal of Neuroscience, DOI: 10.1111/ejn.13724 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard DH (1997). The psychophysics toolbox. Spatial Vision, 10, 433–436 [PubMed] [Google Scholar]

- Britz J, Díaz Hernàndez L, Ro T and Michel CM (2014) EEG-microstate dependent emergence of perceptual awareness. Front. Behav. Neurosci 8:163. doi: 10.3389/fnbeh.2014.00163 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Broadbent D (1958). Perception and Communication. London: Pergamon Press [Google Scholar]

- Brunet D, Murray MM, Michel CM (2011) Spatiotemporal analysis of multichannel EEG: CARTOOL. Comput Intell Neurosci 2011(813870):15. doi: 10.1155/2011/813870 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Callaghan CO (2017). Grades of multisensory awareness, Mind Lang. 32155–181. [Google Scholar]

- Cappe C, Thelen T, Romei V, Thut G, Murray MM (2012) Looming signals reveal synergistic principles of multisensory integration. J Neurosci 32(4):1171–1182 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cappe C, Thut G, Romei V, Murray MM, (2010). Auditory-visual multisensory interactions in humans: Timing, topography, Directionality, and sources. J. Neurosci 30, 12572–12580. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Casali AG et al. (2013) A theoretically based index of consciousness independent of sensory processing and behavior. Sci. Transl. Med 5, 198ra105. [DOI] [PubMed] [Google Scholar]

- Cavanna F, Vilas MG, Palmucci M, Tagliazucchi E (2017). Dynamic functional connectivity and brain metastability during altered states of consciousness. [E-pub ahead of press]; 10.1016/j.neuroimage.2017.09.065 [DOI] [PubMed] [Google Scholar]

- Churchland MM et al. (2010). Stimulus onset quenches neural variability: a widespread cortical phenomenon. Nature Neurosci. 13, 369–378. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dehaene S, Changeux J-P, Naccache L, Sackur J, Sergent C (2006) Conscious, preconscious, and subliminal processing: a testable taxonomy. Trends Cogn Sci 683 10:204–211 [DOI] [PubMed] [Google Scholar]

- Dehaene S, Lau H, Kouider S. What is consciousness, and could machines have it? Science (New York, N.Y.). 358: 486–492. DOI: 10.1126/science.aan8871 [DOI] [PubMed] [Google Scholar]

- Dehaene S. (2014). Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts. Viking Press, 2014. [Google Scholar]

- Dehaene S & Changeux J-P (2011). Experimental and theoretical approaches to conscious processing. Neuron 70, 200–227.

- Dehaene S, Naccache L, Cohen L, Bihan DL, Mangin JF, Poline JB, and Riviere D (2001). Cerebral mechanisms of word masking and unconscious repetition priming. Nat. Neurosci 4, 752–758

- Del Cul A, Baillet S, and Dehaene S (2007). Brain dynamics underlying the nonlinear threshold for access to consciousness. PLoS Biol. 5, e260.

- Delorme A and Makeig S (2004) EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21

- Deroy O, Chen YC, and Spence C (2014). Multisensory constraints on awareness. Philos. Trans. R. Soc. Lond. B Biol. Sci 369, 20130207.

- Deroy O, Faivre N, Lunghi C, Spence C, Aller M, & Noppeney U (2016). The complex interplay between multisensory integration and perceptual awareness. Multisensory Research. doi: 10.1163/22134808-00002529

- Ecker AS, Berens P, Cotton RJ, Subramaniyan M, Denfield GH, Cadwell CR, Smirnakis SM, Bethge M, Tolias AS (2014) State dependence of noise correlations in macaque primary visual cortex. Neuron 82:235–248.

- Eden UT, and Kramer MA (2010). Drawing inferences from Fano factor calculations. J. Neurosci. Methods 190, 149–152

- Eriksen CW (1960). Discrimination and learning without awareness: A methodological survey and evaluation. Psychological Review 67(5):279–300.

- Ernst MO and Banks MS (2002) Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433

- Faivre N, Arzi A, Lunghi C, & Salomon R (2017). Consciousness is more than meets the eye: a call for a multisensory study of subjective experience. Neuroscience of Consciousness, 3(1): nix003.

- Faivre N, Mudrik L, Schwartz N, & Koch C (2014). Multisensory Integration in Complete Unawareness: Evidence from Audiovisual Congruency Priming. Psychological Science, 1–11.

- Fetsch CR, Deangelis GC, Angelaki DE (2013). Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat. Rev. Neurosci. 14(6):429–442.

- Fingelkurts A, Fingelkurts A, Bagnato S, et al. (2013) Dissociation of vegetative and minimally conscious patients based on brain operational architectonics: factor of etiology. Clin EEG Neurosci. 44:209–220. doi: 10.1177/1550059412474929.

- Gaillard R, Dehaene S, Adam C, Clemenceau S, Hasboun D, Baulac M, Cohen L, and Naccache L (2009). Converging intracranial markers of conscious access. PLoS Biol. 7, e61.

- Genovese CR, Lazar NA, Nichols T (2002) Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 15(4):870–878

- Glaser JI, Chowdhury RH, Perich MG, Miller LE, Kording KP (2017). Machine learning for neural decoding. arXiv:1708.00909

- Guthrie D, Buchwald JS (1991) Significance testing of difference potentials. Psychophysiology 28:240–244.

- Harvey LO (2003). Detection sensitivity and response bias. Psychology of Perception, 1–15

- Ikeda K, Morotomi T. Classical conditioning during human NREM sleep and response transfer to wakefulness. Sleep 1996; 19:72–74.

- James W. (1890). The Principles of Psychology, in two volumes. New York: Henry Holt and Company.

- Jeffreys H. (1961). Theory of probability (3rd ed.), Oxford classic texts in the physical sciences. Oxford: Oxford University Press.

- Joos K, Gilles A, Van de Heyning P, De Ridder D, Vanneste S (2014) From sensation to percept: The neural signature of auditory event-related potentials. Neurosci Biobehav Rev 42:148–156

- King JR, and Dehaene S (2014). Characterizing the dynamics of mental representations: the temporal generalization method. Trends Cogn. Sci 18, 203–210.

- Koch C, Massimini M, Boly M, Tononi G (2016). Neural correlates of consciousness: progress and problems. Nature Reviews Neuroscience, 17 (5), pp. 307–321.

- Koch C, Massimini M, Boly M, Tononi G (2016). Posterior and anterior cortex - where is the difference that makes the difference? Nat Rev Neurosci.

- Koch C (2004). The quest for consciousness: a neurobiological approach. Englewood, US-CO: Roberts & Company Publishers. ISBN 0-9747077-0-8

- Koenig T, Kottlow M, Stein M, Melie-Garcia L (2011). Ragu: a free tool for the analysis of EEG and MEG event-related scalp field data using global randomization statistics. Comput. Intell. Neurosci 2011, 938925.

- Koenig T, Melie-Garcia L (2010). A method to determine the presence of averaged event-related fields using randomization tests. Brain Topogr. 23, 233–242

- Körding KP, Beierholm U, Ma WJ, Tenenbaum JB, Quartz S, & Shams L (2007). Causal inference in multisensory perception. PLoS ONE, 2, e943.

- Lamme VA (2006) Towards a true neural stance on consciousness. Trends Cogn. Sci 10, 494–501

- Laurienti PJ, Perrault TJ, Stanford TR, Wallace MT, Stein BE (2005) On the use of superadditivity as a metric for characterizing multisensory integration in functional neuroimaging studies. Exp Brain Res 166:289–297

- Lehmann D & Skrandies W (1980). Spatial analysis of evoked potentials in man-a review. Progress in Neurobiology, vol. 23, no. 3, pp. 227–250.

- Lehmann D (1987). Principles of spatial analysis. In: Gevins AS, Remond A (Eds.), Handbook of Electroencephalography and Clinical Neurophysiology: Methods of Analysis of Brain Electrical and Magnetic Signals, vol. 1. Elsevier, Amsterdam, pp. 309–354.

- Lempel A & Ziv J (1976). On the complexity of finite sequences, IEEE Trans. Inform. Theory, vol. IT-22, pp. 75–81.

- Luck SJ. An introduction to the event-related potential technique. MIT Press; Cambridge, MA: 2005.

- Lunghi C, Alais D. Touch interacts with vision during binocular rivalry with a tight orientation tuning. PLoS ONE 2013; 8:e58754.

- Lunghi C, Morrone MC, Alais D. Auditory and tactile signals combine to influence vision during binocular rivalry. J Neurosci 2014; 34:784–92.

- Macmillan NA & Creelman CD (2005). Detection Theory: A User's Guide (2nd Ed.). Lawrence Erlbaum Associates.

- McIntosh AR, Kovacevic N & Itier RJ (2008). Increased brain signal variability accompanies lower behavioral variability in development. PLoS Comput. Biol 4, e1000106.

- Mercier MR, Foxe JJ, Fiebelkorn IC, Butler JS, Schwartz TH, Molholm S (2013) Auditory-driven phase reset in visual cortex: human electrocorticography reveals mechanisms of early multisensory integration. NeuroImage 79:19–29

- Mudrik L, Faivre N, & Koch C (2014). Information Integration Without Awareness. Trends in Cognitive Sciences, 18(9), 488–496.

- Murray MM, Wallace MT (2012). The neural bases of multisensory processes. CRC Press, Boca Raton, FL.

- Naghavi HR, Nyberg L (2005) Common fronto-parietal activity in attention, memory, and consciousness: Shared demands on integration? Conscious Cogn 14:390–425

- Noel JP, Wallace MT, Blake R (2015). Cognitive Neuroscience: Integration of Sight and Sound outside of Awareness? Current Biology, 25 (4); DOI: 10.1016/j.cub.2015.01.007.

- Noy N. et al. (2015). Ignition’s glow: ultra-fast spread of global cortical activity accompanying local “ignitions” in visual cortex during conscious visual perception. Conscious. Cogn 35, 206–224

- Oizumi M, Amari S-i, Yanagawa T, Fujii N, Tsuchiya N (2016) Measuring Integrated Information from the Decoding Perspective. PLoS Comput Biol 12(1): e1004654. doi: 10.1371/journal.pcbi.1004654

- Pelli DG (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10, 437–442.

- Perrin F, Pernier J, Bertrand O, Giard MH, Echallier JF. Mapping of scalp potentials by surface spline interpolation. Electroencephalogr Clin Neurophysiol 1987;66:75–81

- Prior PF (1987) The EEG and detection of responsiveness during anaesthesia and coma. In: Consciousness, Awareness and Pain in General Anaesthesia (Rosen M, Lunn JN, eds). London: Butterworths, 34–45

- Ress D and Heeger DJ (2003). Neuronal correlates of perception in early visual cortex. Nature Neuroscience 6(4): 414–420.

- Rohe T & Noppeney U (2015) Cortical hierarchies perform Bayesian causal inference in multisensory perception. PLoS Biol. 13, e1002073.

- Rohe T and Noppeney U (2016) Distinct computational principles govern multisensory integration in primary sensory and association cortices. Curr. Biol 26, 509–514

- Sadaghiani S, Hesselmann G, and Kleinschmidt A (2009). Distributed and antagonistic contributions of ongoing activity fluctuations to auditory stimulus detection. J. Neurosci 29, 13410–13417

- Salomon R, Kaliuzhna M, Herbelin B, et al. Balancing awareness: vestibular signals modulate visual consciousness in the absence of awareness. Conscious Cognit 2015; 36: 289–97.

- Salomon R, Noel JP, Lukowska M, Faivre N, Metzinger T, Serino A, Blanke O (2017). Unconscious integration of multisensory bodily inputs in the peripersonal space shapes bodily self-consciousness. Cognition.

- Sanchez G, Frey JN, Fuscà M, Weisz N (2017). Decoding across sensory modalities reveals common supramodal signatures of conscious perception. bioRxiv 115535; doi: 10.1101/115535

- Sanchez-Vives MV, Massimini M, Mattia M. Shaping the default activity pattern of the cortical network. Neuron. 2017;94:993–1001. doi: 10.1016/j.neuron.2017.05.015

- Sarasso S, Boly M, Napolitani M, Gosseries O, Charland-Verville V, Casarotto S, Rosanova M, Casali AG, Brichant J, Boveroux P, et al. (2015). Consciousness and Complexity during Unresponsiveness Induced by Propofol, Xenon, and Ketamine. Current Biology, 25 (23), pp. 3099–3105.

- Schartner M, Seth A, Noirhomme Q et al. Complexity of multi-dimensional spontaneous EEG decreases during propofol induced general anaesthesia. PLoS ONE 2015; 10: e0133532.

- Schartner MM et al. (2017a) Increased spontaneous MEG signal diversity for psychoactive doses of ketamine, LSD and psilocybin. Sci. Rep 7, 46421; doi: 10.1038/srep46421

- Schurger A, Pereira F, Treisman A, Cohen JD (2010) Reproducibility distinguishes conscious from non-conscious neural representations. Science 327: 97–99

- Schurger A, Sarigiannidis I, Dehaene S (2015) Cortical activity is more stable when sensory stimuli are consciously perceived. PNAS, 112(16): E2083–2092

- Sergent C, Baillet S, and Dehaene S (2005). Timing of the brain events underlying access to consciousness during the attentional blink. Nat. Neurosci 8, 1391–1400.

- Silverstein BH, Snodgrass M, Shevrin H & Kushwaha R (2015). P3b, consciousness, and complex unconscious processing. Cortex 73, 216–227.

- Spence C, Bayne T. Is consciousness multisensory? In Stokes D, Biggs S and Matthen M (eds.), Perception and its Modalities. New York: Oxford University Press, 2014, 95–132.

- Spence C, Deroy O. Multisensory Imagery. Lacey S and Lawson R (eds.). New York: Springer, 2013, 157–83. doi: 10.1007/978-1-4614-5879-1_9

- Sperdin HF, Spierer L, Becker R, Michel CM, & Landis T (2014). Submillisecond unmasked subliminal visual stimuli evoke electrical brain responses. Human Brain Mapping. doi: 10.1002/hbm.22716

- Sperry R. (1969) A modified concept of consciousness. Psychological Review, 76(6): 532.

- Summerfield C, Egner T, Mangels J, & Hirsch J (2005). Mistaking a house for a face: neural correlates of misperception in healthy humans. Cerebral Cortex, 16, 500–508.

- Tallon-Baudry C (2012) On the Neural Mechanisms Subserving Consciousness and Attention. Front Psychol 2:397.

- Tanner WP Jr., & Swets JA (1954). A decision-making theory of visual detection. Psychological Review, 61(6), 401–409.

- Tononi G (2012). Integrated information theory of consciousness: an updated account. Arch Ital Biol, 150 (2-3), pp. 56–90.

- Tononi G, Boly M, Massimini M, Koch C (2016). Integrated information theory: from consciousness to its physical substrate. Nature Reviews Neuroscience, 17 (7), pp. 450–461.

- Tononi G, Edelman GM (1998). Consciousness and complexity. Science, 282 (5395), pp. 1846–1851.