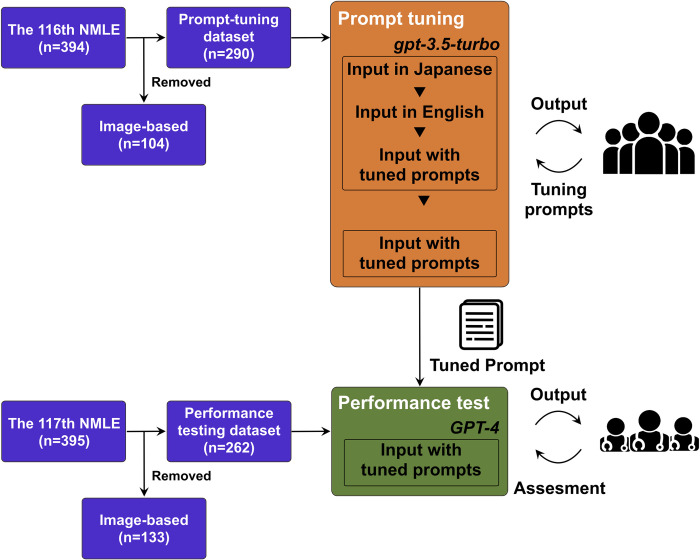

Fig 1. Study overview.

Questions from the 116th NMLE in Japan were used as the prompt optimization dataset, and those from the 117th NMLE were used as the performance-testing dataset after removing the image-based questions. During the prompt optimization process, questions from the prompt optimization dataset were input into GPT-3.5-turbo and GPT-4, using simple prompts in both Japanese and English along with optimized prompts in English. Subsequently, we evaluated the outputs from GPT-3.5-turbo and GPT-4 with the optimized prompts. After adjusting the prompts, the GPT-4 model with the optimized prompts was tested on the performance-testing dataset (117th NMLE).