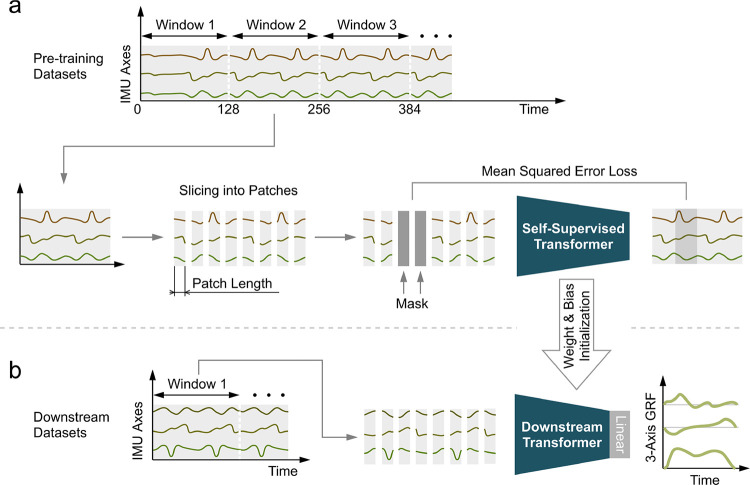

Fig. 2.

Self-supervised IMU representation learning to boost downstream prediction tasks. (a) Continuous IMU data are segmented into windows, each window is sliced into patches, a random subset of patches is masked, and the masked window is fed into a transformer model that reconstructs the original data window. (b) The weights and biases of the self-supervised transformer are copied to initialize the transformer used for downstream evaluation, and a linear layer is appended to map the transformer outputs to the final model outputs. During fine-tuning, we first optimized only the linear layer and then fine-tuned the entire model, to prevent distortion of the pre-trained IMU representations [33], [34]. Fine-tuning data are preprocessed with the same steps as in SSL, except that no masking is applied.
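The preprocessing in panel (a) can be sketched in plain Python as below. This is a minimal illustration, not the authors' implementation: the function names (`segment_windows`, `patchify`, `random_mask`), the window length, stride, patch length, and mask ratio are hypothetical, and replacing masked patches with zeros is an assumption made for simplicity (a transformer may instead use a learned mask token or drop masked patches). Following the caption, fine-tuning reuses the windowing and patching steps but skips `random_mask`.

```python
import random
from typing import List, Tuple

# A time step is a list of channel values (e.g., 3-axis accelerometer and
# 3-axis gyroscope); a window or patch is a list of time steps.
Sample = List[float]
Window = List[Sample]


def segment_windows(stream: List[Sample], window_len: int, stride: int) -> List[Window]:
    """Cut a continuous IMU stream into fixed-length windows (hypothetical parameters)."""
    return [stream[s:s + window_len]
            for s in range(0, len(stream) - window_len + 1, stride)]


def patchify(window: Window, patch_len: int) -> List[Window]:
    """Slice one window into non-overlapping patches of patch_len time steps."""
    if len(window) % patch_len != 0:
        raise ValueError("window length must be a multiple of patch_len")
    return [window[i:i + patch_len] for i in range(0, len(window), patch_len)]


def random_mask(patches: List[Window], mask_ratio: float,
                rng: random.Random) -> Tuple[List[Window], List[int]]:
    """Zero out a random subset of patches (used during SSL pre-training only).

    Zero-filling is an illustrative assumption, not necessarily the paper's choice.
    """
    n_mask = int(round(mask_ratio * len(patches)))
    masked_idx = sorted(rng.sample(range(len(patches)), n_mask))
    masked_set = set(masked_idx)
    masked = [[[0.0] * len(step) for step in p] if i in masked_set else p
              for i, p in enumerate(patches)]
    return masked, masked_idx


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic 6-channel stream of 100 time steps (placeholder data, not real IMU).
    stream = [[float(t)] * 6 for t in range(100)]
    windows = segment_windows(stream, window_len=40, stride=20)
    patches = patchify(windows[0], patch_len=8)
    ssl_input, idx = random_mask(patches, mask_ratio=0.4, rng=rng)  # pre-training input
    ft_input = patchify(windows[0], patch_len=8)                    # fine-tuning input: no mask
    print(len(windows), len(patches), idx)
```

With these example settings, the 100-step stream yields four 40-step windows, and each window is split into five 8-step patches, two of which are masked for the reconstruction objective.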