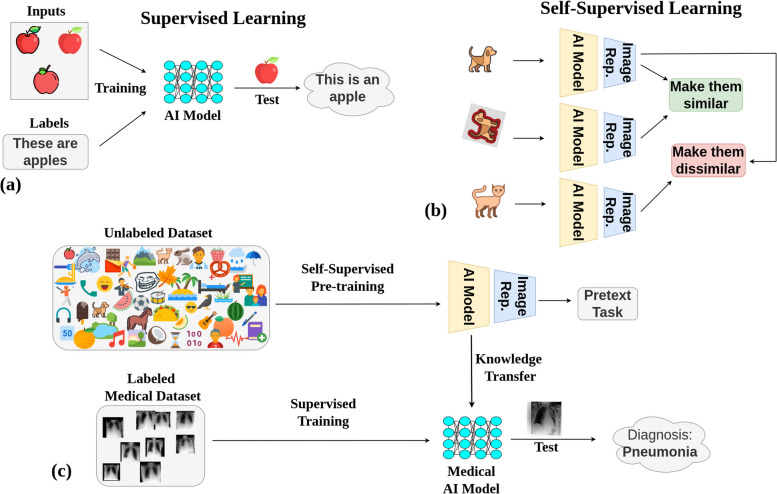

Fig. 1.

The process and advantages of using self-supervised learning (SSL) to pretrain medical AI models. a Conventional supervised pretraining on labeled datasets, which is resource- and time-intensive because of the need for manual annotation. b The SSL paradigm, in which AI models are pretrained on unlabeled non-medical images; this takes advantage of freely available data and bypasses costly, time-consuming manual labeling. c Transfer of the knowledge learned by the SSL-pretrained model from non-medical images to a supervised model that accurately diagnoses medical images, highlighting the potential for improved performance in medical AI models owing to the large-scale knowledge gained through SSL.