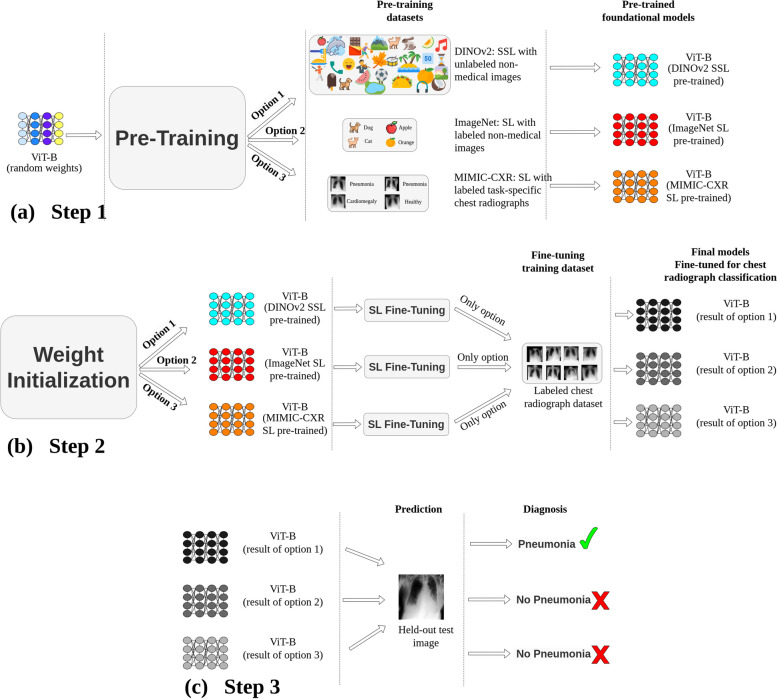

Fig. 2.

General methodology. a Pretraining: the vision transformer base (ViT-B) is pretrained through three avenues: (i) self-supervised learning (SSL) on non-medical images (DINOv2 [18]), (ii) supervised learning (SL) on ImageNet-21K [13], and (iii) SL on MIMIC-CXR [24] chest radiographs. b Fine-tuning: the pretrained ViT-B models are then fine-tuned on labeled chest radiographs from various datasets. c Prediction: the diagnostic performance of these models is assessed on unseen test images from each dataset. Although this figure illustrates pneumonia prediction on a single dataset, the fine-tuning (b) and systematic evaluation (c) steps were applied consistently across six major datasets: VinDr-CXR (n = 15,000 training, n = 3,000 testing), ChestX-ray14 (n = 86,524 training, n = 25,596 testing), CheXpert (n = 128,356 training, n = 39,824 testing), MIMIC-CXR (n = 170,153 training, n = 43,768 testing), UKA-CXR (n = 153,537 training, n = 39,824 testing), and PadChest (n = 88,480 training, n = 22,045 testing). Across these datasets, the fine-tuned models predict a total of 22 distinct imaging findings.
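To give a sense of the scale of the fine-tuning and evaluation steps, the split sizes listed above can be tallied with a short script. This is a minimal sketch: the `SPLITS` dictionary only transcribes the caption's numbers, and the helper name `summarize` is hypothetical. The pooled totals are for orientation only, since each dataset is fine-tuned and evaluated separately.

```python
# Train/test image counts per dataset, transcribed from the Fig. 2 caption.
SPLITS = {
    "VinDr-CXR": (15_000, 3_000),
    "ChestX-ray14": (86_524, 25_596),
    "CheXpert": (128_356, 39_824),
    "MIMIC-CXR": (170_153, 43_768),
    "UKA-CXR": (153_537, 39_824),
    "PadChest": (88_480, 22_045),
}


def summarize(splits):
    """Hypothetical helper: per-dataset test share plus pooled train/test totals."""
    # Fraction of each dataset's images that is held out for testing.
    fractions = {
        name: test / (train + test) for name, (train, test) in splits.items()
    }
    # Pooled counts across all six datasets (orientation only).
    total_train = sum(train for train, _ in splits.values())
    total_test = sum(test for _, test in splits.values())
    return fractions, total_train, total_test


if __name__ == "__main__":
    fractions, total_train, total_test = summarize(SPLITS)
    for name, frac in fractions.items():
        print(f"{name:<13} test share = {frac:.1%}")
    print(f"total training images: {total_train:,}")  # 642,050
    print(f"total test images:     {total_test:,}")   # 174,057
```

Running it shows that the held-out share ranges from about 17% (VinDr-CXR) to about 24% (CheXpert) of each dataset.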