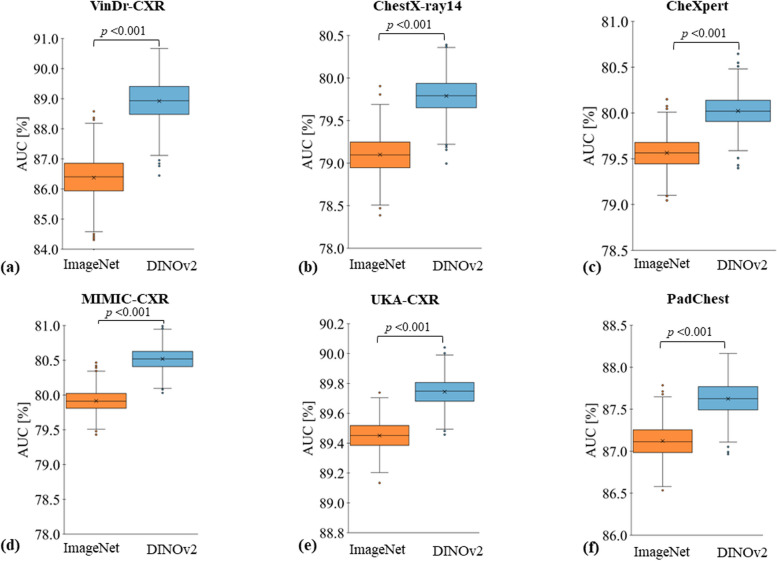

Fig. 3.

Comparison of pretraining with self-supervised learning (SSL) and with supervised learning (SL) on non-medical images. Models were pretrained on non-medical images either with SSL (DINOv2, shown in blue) or with SL (ImageNet [13], shown in orange). These models were then fine-tuned on chest radiographs in a supervised manner for six datasets: (a) VinDr-CXR [21], (b) ChestX-ray14 [22], (c) CheXpert [23], (d) MIMIC-CXR [24], (e) UKA-CXR [3, 25–28], and (f) PadChest [29], with fine-tuning training images of n = 15,000, n = 86,524, n = 128,356, n = 170,153, n = 153,537, and n = 88,480, respectively, and test images of n = 3,000, n = 25,596, n = 39,824, n = 43,768, n = 39,824, and n = 22,045, respectively. The box plots show the area under the receiver operating characteristic curve (ROC-AUC), averaged across all labels within each dataset. A consistent pattern emerges: SSL-pretrained models outperform SL-pretrained ones. Crosses denote means; boxes span the interquartile range (from Q1 to Q3), with the central line marking the median (Q2). Whiskers extend to 1.5 times the interquartile range above Q3 and below Q1, and points beyond this range are marked as outliers. Statistical differences between the DINOv2 and ImageNet approaches were evaluated through bootstrapping, with the corresponding p-values displayed. Note the varying y-axis scales.
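
The box-plot conventions described in the caption can be illustrated with a minimal sketch. It assumes linear-interpolation quartiles (the "inclusive" method in Python's `statistics` module); the quartile method actually used for the figure is not stated. The function name `box_plot_summary` and the values in `example_aucs` are hypothetical and are not results from the study.

```python
from statistics import mean, quantiles


def box_plot_summary(values):
    """Compute the quantities a box plot displays for one group of values.

    Assumption: quartiles use linear interpolation ("inclusive" method);
    the figure's own quartile convention is not specified in the caption.
    """
    q1, q2, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    # Fences at 1.5 x IQR below Q1 and above Q3. Many plotting libraries
    # draw each whisker only to the most extreme data point inside its fence.
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    outliers = [v for v in values if v < lower_fence or v > upper_fence]
    return {
        "mean": mean(values),        # drawn as a cross
        "q1": q1,                    # lower edge of the box
        "median": q2,                # central line
        "q3": q3,                    # upper edge of the box
        "lower_fence": lower_fence,
        "upper_fence": upper_fence,
        "outliers": outliers,        # drawn as individual points
    }


# Hypothetical mean ROC-AUC values, for illustration only.
example_aucs = [0.80, 0.82, 0.84, 0.86, 0.88, 0.60]
summary = box_plot_summary(example_aucs)
print(summary)
```

In this illustrative input, the value 0.60 falls below the lower fence and would be drawn as an outlier, while the remaining values lie within the whisker range.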