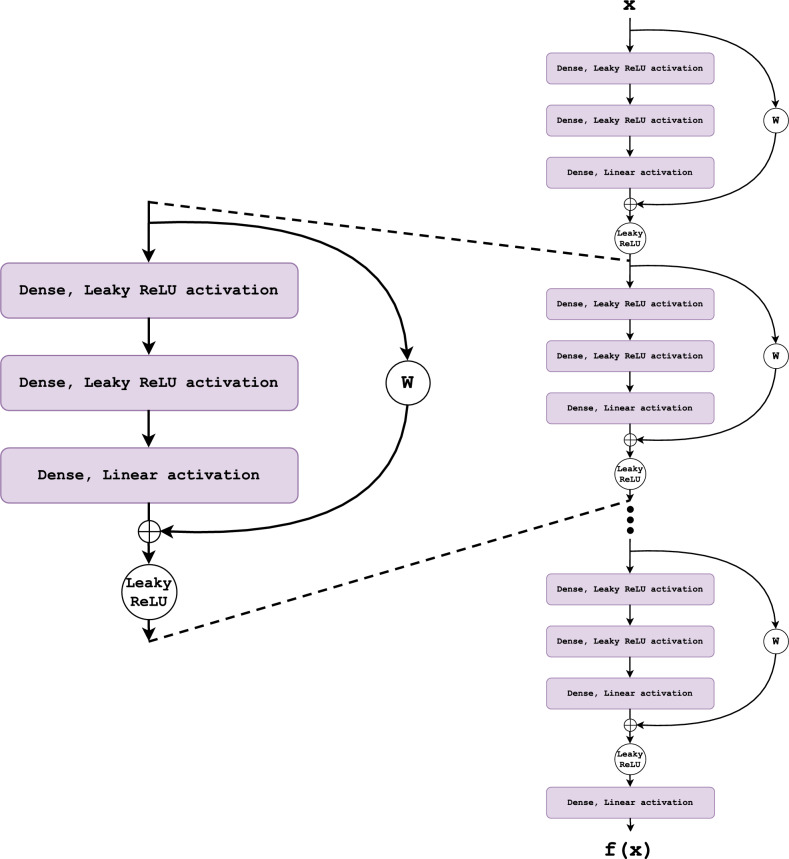

Figure 2. The building block of a DNN with skip connections. The first two layers are fully connected with Leaky ReLU activations. The last layer has a linear activation. Its output is added to the block input, which is carried by the skip connection, and the sum is then passed through a Leaky ReLU nonlinearity. On the skip connection, the input is multiplied by a weight matrix. This matrix is trainable when the block's input and output dimensions differ and is the identity matrix otherwise. The blocks are stacked sequentially to form the neural network.
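The block in Figure 2 can be sketched as a NumPy forward pass. This is an illustrative sketch, not the authors' implementation. The names `SkipBlock`, `build_network` and `forward` are hypothetical. The Leaky ReLU slope of 0.01, the hidden width `d_hidden` and the He-style random initialization are also assumptions, because the caption does not specify them. The sketch computes $y = \mathrm{LReLU}(F(x) + W_s x)$, where $F$ is the three-layer branch and $W_s$ is the skip matrix. Training is not implemented. The `skip_trainable` flag only records whether $W_s$ would be a learned projection (dimensions differ) or the fixed identity (dimensions match).

```python
import numpy as np


def leaky_relu(x, alpha=0.01):
    # Leaky ReLU: passes positive values through, scales negative values by alpha
    return np.where(x > 0, x, alpha * x)


class SkipBlock:
    """Hypothetical sketch of one Figure 2 block (forward pass only)."""

    def __init__(self, d_in, d_out, d_hidden, rng, alpha=0.01):
        self.alpha = alpha  # assumed Leaky ReLU slope
        # Layers 1 and 2: fully connected, followed by Leaky ReLU (He-style init assumed)
        self.W1 = rng.standard_normal((d_hidden, d_in)) * np.sqrt(2.0 / d_in)
        self.b1 = np.zeros(d_hidden)
        self.W2 = rng.standard_normal((d_hidden, d_hidden)) * np.sqrt(2.0 / d_hidden)
        self.b2 = np.zeros(d_hidden)
        # Layer 3: fully connected with linear (identity) activation
        self.W3 = rng.standard_normal((d_out, d_hidden)) * np.sqrt(1.0 / d_hidden)
        self.b3 = np.zeros(d_out)
        # Skip matrix W_s: a learnable projection if dimensions differ, else fixed identity
        if d_in != d_out:
            self.Ws = rng.standard_normal((d_out, d_in)) * np.sqrt(1.0 / d_in)
            self.skip_trainable = True
        else:
            self.Ws = np.eye(d_in)
            self.skip_trainable = False

    def __call__(self, x):
        h = leaky_relu(x @ self.W1.T + self.b1, self.alpha)
        h = leaky_relu(h @ self.W2.T + self.b2, self.alpha)
        f = h @ self.W3.T + self.b3           # linear output of the branch, F(x)
        skip = x @ self.Ws.T                  # W_s x carried by the skip connection
        return leaky_relu(f + skip, self.alpha)


def build_network(widths, d_hidden, seed=0):
    # One block per consecutive pair of widths, e.g. [4, 8, 8, 2] gives 3 blocks
    rng = np.random.default_rng(seed)
    return [SkipBlock(a, b, d_hidden, rng) for a, b in zip(widths[:-1], widths[1:])]


def forward(blocks, x):
    # Blocks are applied sequentially, as in the stacked network
    for block in blocks:
        x = block(x)
    return x
```

For example, `forward(build_network([4, 8, 8, 2], d_hidden=16), np.ones((3, 4)))` maps a batch of three 4-dimensional inputs to outputs of shape `(3, 2)`. Only the middle 8-to-8 block uses the identity skip. Keeping $W_s$ fixed to the identity when shapes match means that a block whose branch outputs zero reduces to a Leaky ReLU of its input, which is the usual motivation for skip connections.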