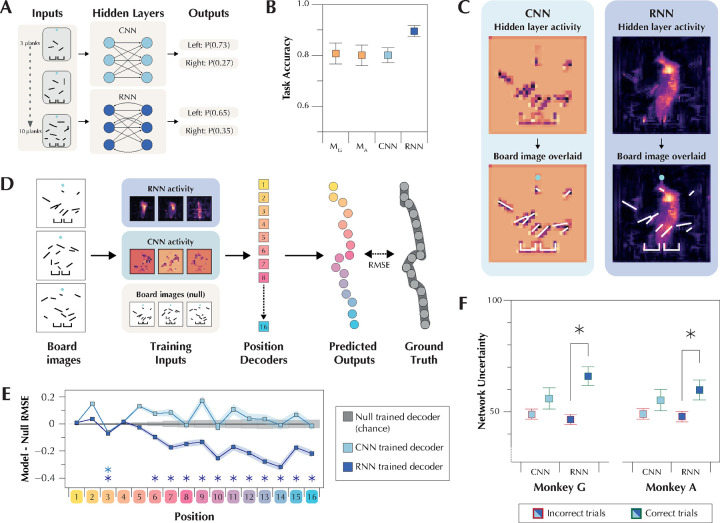

Figure 3:

A - Examples of the two types of network trained to solve the Planko task: a feedforward convolutional neural network (CNN) and a feedback recurrent neural network (RNN). B - Task accuracy of each network when tested on the same board sets the monkeys saw on their test days. Like the monkeys (MG and MA), both networks performed above chance. C - Heat maps of the average hidden-unit activity of the CNN and the RNN on an example board. The second row shows the same activity with the input board image overlaid. D - Schematic of how we quantified whether the ball's trajectory was represented in each network's hidden-layer activity. E - Average root-mean-square error (RMSE) between predicted and actual ball positions for decoders trained on CNN and RNN hidden-layer activity, relative to a decoder trained on the board image alone (the null/chance model). Decoders trained on CNN activity almost never predicted the ball's position better than chance, whereas decoders trained on RNN activity consistently predicted it with high accuracy. F - Network uncertainty of the CNN and the RNN, split by whether each monkey responded correctly or incorrectly on a given board. The CNN's average uncertainty did not differ between boards the monkeys got correct and boards they got incorrect, whereas the RNN's average uncertainty was significantly higher on boards the monkeys got incorrect than on boards they got correct.
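The decoding comparison in panels D and E can be sketched roughly as below. This is a minimal illustration under stated assumptions, not the analysis pipeline behind the figure: it assumes a closed-form ridge-regression decoder, expresses "relative to the null model" as a ratio of held-out RMSEs (a difference would be an equally plausible choice), and uses synthetic stand-ins for hidden-unit activity, board-image features and ball positions. The helper names (`fit_ridge`, `predict`, `rmse`, `relative_rmse`) are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression with an unpenalised bias column."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    reg = alpha * np.eye(Xb.shape[1])
    reg[-1, -1] = 0.0  # do not shrink the intercept
    return np.linalg.solve(Xb.T @ Xb + reg, Xb.T @ Y)

def predict(W, X):
    """Apply a fitted decoder (weights plus bias) to new features."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return Xb @ W

def rmse(pred, true):
    """Root-mean-square Euclidean error between predicted and actual (x, y) positions."""
    return float(np.sqrt(np.mean(np.sum((pred - true) ** 2, axis=1))))

def relative_rmse(feat_tr, feat_te, null_tr, null_te, pos_tr, pos_te, alpha=1.0):
    """Held-out RMSE of a decoder on network features divided by that of a null decoder.

    Assumption: the comparison is a ratio; values below 1 mean the features
    predict ball position better than the null (board-image) input does.
    """
    e_feat = rmse(predict(fit_ridge(feat_tr, pos_tr, alpha), feat_te), pos_te)
    e_null = rmse(predict(fit_ridge(null_tr, pos_tr, alpha), null_te), pos_te)
    return e_feat / e_null

# Synthetic demo data (not from the study).
rng = np.random.default_rng(0)
n, d = 400, 20
pos = rng.uniform(0.0, 1.0, size=(n, 2))  # hypothetical ball (x, y) positions
# "Informative" hidden units: a linear mix of position plus small noise.
informative = pos @ rng.normal(size=(2, d)) + 0.05 * rng.normal(size=(n, d))
# "Null" features: random values unrelated to position.
null = rng.normal(size=(n, d))
tr, te = slice(0, 300), slice(300, n)  # train/test split

ratio = relative_rmse(informative[tr], informative[te], null[tr], null[te], pos[tr], pos[te])
print(f"relative RMSE (informative vs null): {ratio:.3f}")
```

A ratio well below 1 indicates that the features carry position information beyond the null input, as with the RNN decoders in panel E; a ratio near 1 corresponds to chance-level decoding, as with the CNN decoders.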