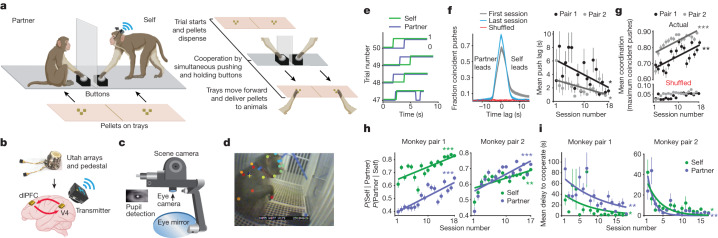

Fig. 1. Tracking of behavioural, oculomotor and neural events during learning cooperation.

a, Behavioural task. Two animals learned to cooperate for food reward. Left, cooperation paradigm. Right, trial structure. b, Wireless neural recording equipment (Blackrock Neurotech). Red arrows represent information processing between areas. c, Wireless eye tracker and components. d, DeepLabCut labelling of partner-monkey and buttons from the eye tracker’s scene camera. The yellow cross represents self-monkey’s point of gaze. e, Example voltage traces of each animal’s button-push activity from pair 1. A step up to 1 indicates that the monkey began pushing. f, Left, example cross-correlograms (CCGs) of pair 1’s button pushes from the first and last sessions, using actual and shuffled data. Self-monkey leads cooperation more often in early sessions, as indicated by the peak at a positive time lag (2 s). Right, session-average time lag between pushes at which the maximum number of coincident pushes occurs. Pair 1: P = 0.03 and r = −0.5; pair 2: P = 0.02 and r = −0.5. g, Push coordination. Session-average maximum number of coincident pushes (that is, CCG peaks). Pair 1: P = 0.001 and r = 0.7; pair 2: P = 0.008 and r = 0.7. h, Session-average conditional probability to cooperate for each monkey. Pair 1: P = 0.0004, r = 0.7 and P = 6.02 × 10⁻⁶, r = 0.8; pair 2: P = 0.001, r = 0.7 and P = 0.0004, r = 0.8, self and partner, respectively. i, Session-average delay to cooperate (response time) for each monkey. Pair 1: P = 0.01, r = −0.6 and P = 0.001, r = −0.6; pair 2: P = 0.01, r = −0.6 and P = 0.006, r = −0.6, self and partner, respectively. All P values are from linear regression, and r is the Pearson correlation coefficient. On all plots, circles represent the mean and error bars the s.e.m. *P < 0.05, **P < 0.01, ***P < 0.001. Illustrations in a and b were created using BioRender.
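The push cross-correlogram analysis in panels f and g can be illustrated with a minimal sketch. It is not the authors' pipeline. It assumes binary push traces (1 = pushing) sampled at a fixed rate `fs`, counts coincident pushes at each lag, and uses randomly circular-shifted partner traces as the shuffled control. The caption does not specify the sampling rate, lag window or shuffling procedure, so these choices, the function names and the synthetic data are all hypothetical.

```python
import numpy as np


def cross_correlogram(self_push, partner_push, max_lag):
    """Count coincident pushes between two binary traces at each lag.

    Lags are in samples, from -max_lag to +max_lag. At a positive lag the
    partner's trace is read `lag` samples later than self's, so a peak at a
    positive lag means self tends to start pushing before the partner.
    """
    a = np.asarray(self_push, dtype=float)
    b = np.asarray(partner_push, dtype=float)
    n = len(a)
    lags = np.arange(-max_lag, max_lag + 1)
    counts = np.empty(len(lags))
    for i, lag in enumerate(lags):
        if lag >= 0:
            # pairs (a[t], b[t + lag]) for t = 0 .. n - lag - 1
            counts[i] = np.dot(a[: n - lag], b[lag:])
        else:
            # pairs (a[t], b[t + lag]) for t = -lag .. n - 1
            counts[i] = np.dot(a[-lag:], b[: n + lag])
    return lags, counts


def shuffled_ccg(self_push, partner_push, max_lag, n_shuffles=100, seed=0):
    """Average CCG after randomly circular-shifting the partner's trace.

    Circular shifting is an assumed shuffle control: it preserves each
    trace's push durations but disrupts the relative timing of the two animals.
    """
    rng = np.random.default_rng(seed)
    b = np.asarray(partner_push, dtype=float)
    total = np.zeros(2 * max_lag + 1)
    for _ in range(n_shuffles):
        shift = int(rng.integers(1, len(b)))  # shift is never 0
        lags, counts = cross_correlogram(self_push, np.roll(b, shift), max_lag)
        total += counts
    return lags, total / n_shuffles


def peak_lag(lags, counts, fs):
    """Return (lag in seconds, coincident-push count) at the CCG peak."""
    i = int(np.argmax(counts))
    return lags[i] / fs, counts[i]


# Hypothetical synthetic data: 10 Hz sampling, partner pushes 2 s after self.
fs = 10
self_push = np.zeros(200)
partner_push = np.zeros(200)
for start in (20, 100):
    self_push[start:start + 20] = 1
    partner_push[start + 20:start + 40] = 1

lags, ccg = cross_correlogram(self_push, partner_push, max_lag=30)
_, ccg_shuffled = shuffled_ccg(self_push, partner_push, max_lag=30)
lag_s, peak = peak_lag(lags, ccg, fs)
print(lag_s, peak)  # 2.0 40.0
```

In this toy example the peak falls at +2 s, which under the sign convention above reads as self leading. In the figure, the per-session peak lag (f, right) and peak height (g) would then be regressed against session number.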