Summary

The advent of generative artificial intelligence (AI) and large language models (LLMs) has ushered in transformative applications within medicine. In ophthalmology specifically, LLMs offer unique opportunities to revolutionize digital eye care, address clinical workflow inefficiencies, and enhance patient experiences across diverse global eye care landscapes. Yet, alongside these prospects lie practical and ethical challenges, encompassing data privacy, security, and the intricacies of embedding LLMs into clinical routines. This Viewpoint article highlights promising applications of LLMs in ophthalmology while weighing the practical and ethical barriers to real-world implementation. Ultimately, this piece seeks to catalyze broader discourse on LLMs’ potential within ophthalmology and to galvanize both clinicians and researchers to tackle the prevailing challenges, optimizing the benefits of LLMs while curtailing the associated risks.

1. Introduction

Since its release in November 2022, ChatGPT (OpenAI, San Francisco, CA, USA) has impressed with its ability to carry out humanlike conversations and provide nuanced answers on a vast range of topics. ChatGPT is a chatbot built on the GPT-3.5 (Generative Pre-trained Transformer 3.5) and GPT-4 families of large language models (LLMs), which are in turn a subtype of deep learning (DL) systems (Table 1). The advanced LLM chatbots in use today have performed well on various academic benchmarks (Table 2 (1–5); Supplementary Table 1 (6–10), appendix p7) and can be thought of as ‘personal virtual assistants’ available to anyone with an internet connection: able to receive free-text ‘prompts’, interpret their semantics, respond accordingly, and track the context of an ongoing personalized conversation.

Table 1.

Comparison of Deep Learning, GPT, and ChatGPT

| | Deep Learning | GPT | ChatGPT |
|---|---|---|---|
| Architecture and Definition | Subset of machine learning; typically uses artificial neural networks to perform tasks. There are many architectures, and training approaches can be broadly split into supervised, semi-supervised, and unsupervised learning. | A specific implementation of DL that uses LLMs based on the transformer architecture for language generation tasks. LLMs refer to large models, such as GPT-3 and beyond, which are trained on massive amounts of linguistic data. | A variant of GPT with a chatbot interface that facilitates human interaction with the underlying GPT LLM, allowing it to generate text in a conversational manner. |
| Input Data | Varies; able to decipher complex patterns from unstructured data. Neural networks perform particularly well on image inputs, but tabular, text, or video data can be used as well. | Text data, in ‘sequence-to-sequence’ tasks. | Text input from a user; conversational data. |
| Knowledge Coverage | Limited to training data, although large amounts of data are required for training. | Broad coverage; pre-trained on large amounts of text data from the internet. | Similar to GPT, but further fine-tuned with reinforcement learning from human feedback (RLHF) on conversational data. |
| Efficiency and Alignment | One task per model, with high alignment | Multiple tasks with low alignment | Multiple tasks with high alignment |
| Interactivity | None | Low | High, in a humanlike manner |
| General Applications | Wide range of tasks, such as image recognition, speech recognition, and language translation. | Primarily text generation tasks, such as extracting information from text, summarizing and organizing text, translation, and question answering. | Similar functions to GPT, but specifically designed to generate text in a conversational manner, personalized using contextual information from prior responses. |

DL, deep learning; GPT, Generative Pre-trained Transformer; LLM, large language model

Table 2.

Performance of common large language models in reasoning, coding, and mathematics

| | GPT-4 (1) (2023) | LLaMA (2) (2023) | PaLM (3) (2022) | BLOOM (4) (2022) | Chinchilla (5) (2022) |
|---|---|---|---|---|---|
| Common Sense Reasoning* (Based on the ARC-challenge) (%) | 96.3 | 57.8 | 65.9 | 32.9 | NA |
| Common Sense Reasoning (Based on WinoGrande) (%) | 87.5 | 77 | 85.1 | NA | 74.9 |
| Sentence Completion (Based on HellaSwag) (%) | 95.3 | 84.2 | 83.8 | NA | 80.8 |
| Multitask Language Understanding (Based on MMLU) (%) | 86.4 | 63.4 | 69.3 | NA | 67.6 |
| Code Generation (Based on HumanEval) (%) | 67 | 79.3 | NA | 55.45 | NA |
| Reading Comprehension and Arithmetic (Based on DROP) (%) | 80.9 | NA | 70.8 | NA | NA |
| Grade-school Mathematics (Based on GSM-8K) (%) | 92 | 69.7 | 58 | NA | NA |

ARC = AI2 Reasoning Challenge; MMLU = Massive Multitask Language Understanding; DROP = Discrete Reasoning Over Paragraphs; GSM = Grade School Math. NA: scores not reported.

For patients and healthcare providers, the potential applications of LLM technology in medicine are numerous (11). Examples include facilitating virtual consults or organizing appointments, writing clinical memos or discharge summaries, suggesting treatment options, and helping patients better organize and manage their own health information, all with greater personalization, scalability, and efficiency.

In ophthalmology, continued heavy reliance on tertiary-level services is increasingly unsustainable given growing and aging populations. The diverse applications of AI, DL, and new digital models of care (12–15) to augment, and even disrupt, current systems of eye care are a topic of intense discussion. In this imaging-intensive specialty, DL algorithms have performed well in detecting diabetic retinopathy (16, 17), glaucoma (18), age-related macular degeneration (19), ocular surface diseases (20, 21), and other causes of visual impairment (22). While these existing algorithms provide value in the diagnosis and stratification of eye diseases, the benefits of LLM technology may lie more in tackling deficiencies in clinical workflows and in a patient’s journey through the tertiary eye care system.

The potential applications of LLM technology in ophthalmology herald a fresh domain in digital eye care, which we delve into in this Viewpoint. We categorize these applications into two main areas: first, enhancing the patient experience in eye care, and second, optimizing the delivery of care by providers. Recognizing that innovation often brings challenges, we conclude by addressing the barriers and limitations associated with these LLM applications.

2. Improving the Patient Experience and Streamlining the Patient Journey

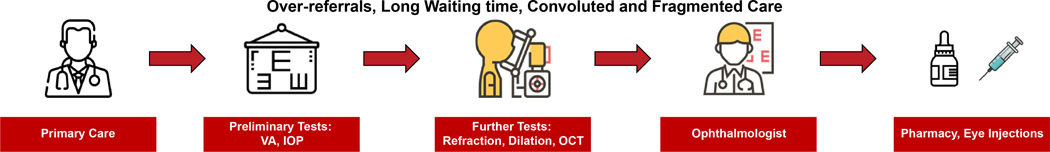

To understand how LLMs might improve a patient’s experience at a tertiary eye center, we begin by reviewing the current workflow in most ophthalmology facilities worldwide (Figure 1). At the primary level, patients usually first present to a community optometrist or primary care physician, who makes referrals to the eye hospital. Patients attend appointments for preliminary tests conducted by allied health staff, and nearly all then receive a detailed examination and consultation by a specialist ophthalmologist. This is followed by visits to the pharmacy or clinic procedure rooms where applicable. Patients are subsequently followed up at the tertiary center for treatment, surgery, regular screening, or long-term interval observation. This system suffers from several problems (23, 24), including over-referral by primary care services; long wait lists for appointments; long wait times during appointments; convoluted and fragmented pathways from the patient’s perspective; and patients who ‘remain indefinitely’ under tertiary hospital care. This section discusses several ways in which LLMs could potentially overcome or mitigate these challenges.

Figure 1.

Outline of the current clinical workflow in tertiary eye centres

2.1. Facilitating Triage and Appointment Prioritization

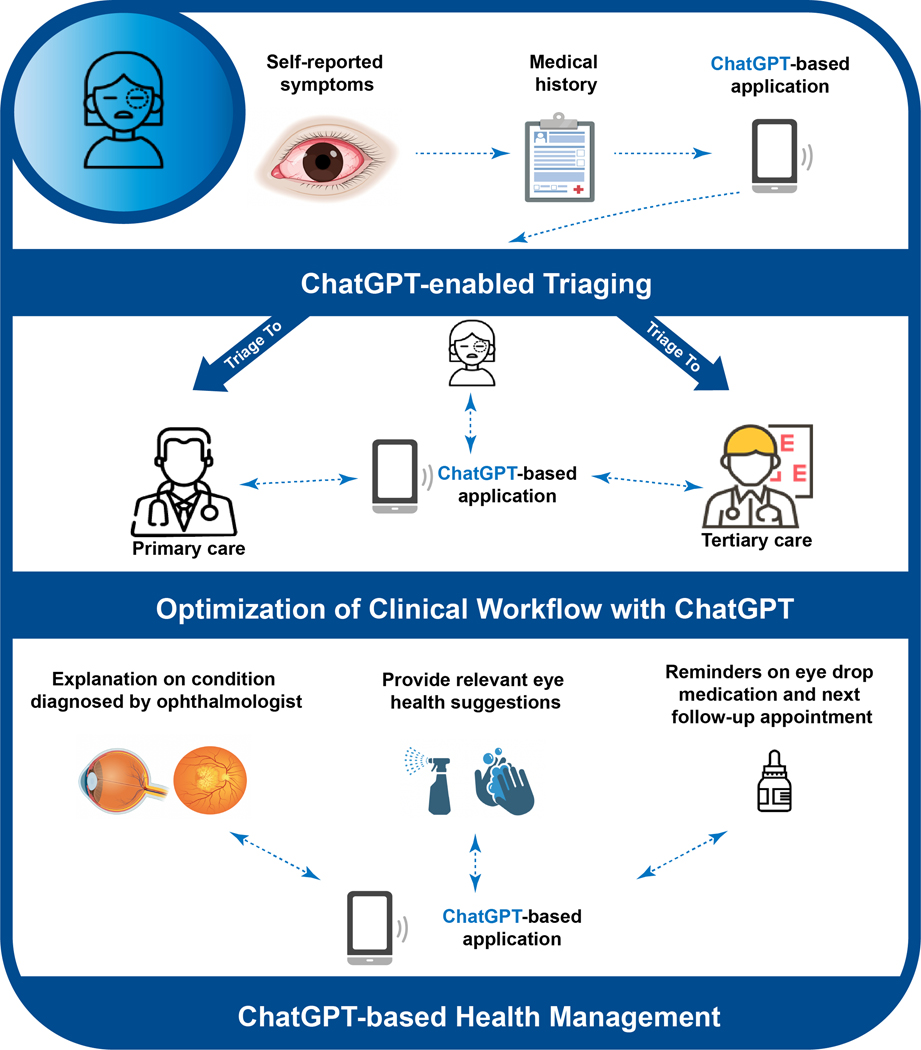

LLMs could be a useful tool for the remote triage of patients, supplying information and responding to questions on topics ranging from common ocular symptoms (e.g. blurred vision, ocular pain, redness) to specific eye diseases (e.g. glaucoma, cataract, diabetic retinopathy). For implementation, an LLM could be connected to mobile applications via an Application Programming Interface (API) or embedded in eye hospital websites. Through such an interface, an LLM may help elicit chief complaints and other specific information from patients remotely, including their ocular symptoms, medical history, and other relevant details, and subsequently coordinate an appointment with the appropriate care provider (Figure 2). This is applicable when patients cannot physically attend a hospital or clinic, or when quick advice is needed to determine the urgency of professional medical attention.

Figure 2.

Use of ChatGPT to enhance delivery of patient-centric health services.

The LLM can adapt its recommendations to context and to new information provided by individual patients. Based on case severity or the likelihood of sight-threatening complications, LLMs could prioritize patients for same-day, same-week, or later in-person appointments. In urgent cases, the LLM could prompt and facilitate an immediate teleconsultation. Given that non-emergent conditions account for almost half of all eye-related emergency department (ED) visits (25), interventions to improve the triage of these cases could free emergency services to focus on truly emergent ophthalmic issues. Furthermore, a large proportion of new referrals to tertiary eye centers are currently attributed to visually insignificant cataracts, dry eyes, or correctable refractive error, most of which can be managed in primary care without consultation in tertiary settings (26).
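The prioritization step described above can be made concrete with a small rule-based sketch. It assumes, hypothetically, that the LLM's role is limited to extracting structured fields (a `TriageInput` record) from a free-text patient conversation, while tier assignment stays in deterministic, auditable code. The symptom lists and the 14-day postoperative window are illustrative placeholders, not clinical guidance.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """Appointment tiers mentioned in the text."""
    SAME_DAY = "same-day"
    SAME_WEEK = "same-week"
    ROUTINE = "later in-person"


# Hypothetical symptom flags: illustrative placeholders, NOT a validated triage protocol.
URGENT_FLAGS = {"sudden vision loss", "chemical injury", "new flashes and floaters", "severe eye pain"}
SOON_FLAGS = {"red eye with discharge", "painful contact lens wear", "new double vision"}
POST_OP_WINDOW_DAYS = 14  # hypothetical threshold


@dataclass
class TriageInput:
    """Structured fields an LLM is assumed to have extracted from a patient chat."""
    symptoms: set[str]
    days_since_eye_surgery: int | None = None


def prioritize(case: TriageInput) -> Tier:
    """Map extracted findings to an appointment tier with simple, auditable rules."""
    if case.symptoms & URGENT_FLAGS:
        return Tier.SAME_DAY
    # Any symptom soon after eye surgery is escalated, since postoperative
    # complications (e.g. endophthalmitis) can threaten sight.
    recent_surgery = (
        case.days_since_eye_surgery is not None
        and case.days_since_eye_surgery <= POST_OP_WINDOW_DAYS
    )
    if recent_surgery and case.symptoms:
        return Tier.SAME_DAY
    if case.symptoms & SOON_FLAGS:
        return Tier.SAME_WEEK
    return Tier.ROUTINE


if __name__ == "__main__":
    print(prioritize(TriageInput({"sudden vision loss"})))  # Tier.SAME_DAY
    print(prioritize(TriageInput({"mild dryness"})))        # Tier.ROUTINE
```

Keeping the final tier assignment rule-based is one possible design choice: each triage decision remains traceable, while the LLM handles the conversational elicitation of symptoms.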

The strength of LLMs lies in their ability to facilitate dynamic, two-way communication, enriched by personalized context, a grasp of common-sense knowledge, and apparently humanlike reasoning abilities such as chain-of-thought reasoning. LLM technology could also support preliminary decision-making in the risk stratification of eye cases, a task that is typically the domain of ophthalmologists or specialized ophthalmic nurses rather than primary care physicians. Altogether, when deployed appropriately, LLMs may help to improve triage.

2.2. Personalizing Patient Visit

LLMs can be harnessed to streamline administrative tasks tailored to a patient’s initial or subsequent hospital visits. This encompasses delineating visit sequences, intelligently scheduling appointments, coordinating medication deliveries, and automating form completion (Figure 2). The transitions between nurses, optometrists, ophthalmologists, and different clinic rooms during a visit are frequently confusing and time-consuming. Rather than relying on physical queue slips or multiple in-person interactions with healthcare staff for administrative matters, patients could receive sequential, personalized guidance from an LLM system, with clarifications offered when needed. When scheduling appointments, rather than calling multiple agents, patients might only need to type their requests into a chatbot interface. Essentially, LLM technology has the potential to serve as an efficient, cost-effective virtual assistant, guiding patients seamlessly through their eye care experience.

In cataract surgery, the most commonly performed ocular surgery, LLMs could promote the transition towards virtual, risk-based preoperative medical evaluations (27). A pilot study at the Kellogg Eye Center showed that a preoperative risk assessment questionnaire administered via virtual consult was associated with safe and efficient outcomes, with fewer case delays and no significant differences in intraoperative complications or same-day cancellations (28). Building on this, LLM technology could conceivably assist in administering preoperative questionnaires, further streamlining the preoperative process for low-risk cataract surgeries while continuing to offer safe, high-value care.

2.3. Enhancing Patient Engagement in Eye Care with LLMs

LLM technology offers promising avenues to bolster health literacy and adherence in eye care (Figure 2).

For example, chatbot interactions could be used to explain diagnoses or care plans at varying levels of complexity, tailored to the individual. A significant number of patients inadvertently or deliberately deviate from medical advice due to misunderstanding, forgetfulness, or neglect (29), with adverse effects on visual outcomes. For instance, poorly controlled intraocular pressure in glaucoma or uncontrolled blood glucose in diabetic retinopathy can lead to asymptomatic disease progression (30, 31). Poor contact lens hygiene and wearing habits may lead to complications ranging from lens discomfort to infective keratitis (32). Non-adherence to postoperative topical corticosteroids can lead to excessive postoperative inflammation of the eye. Similarly, patients with meibomian gland dysfunction often neglect regular and proper lid hygiene (33). While no single approach guarantees universal patient adherence, LLMs could potentially address multiple facets of this challenge. They could: 1) automate reminders at higher frequency; 2) evaluate and reinforce a patient’s grasp of their treatment plan; 3) reiterate the rationale behind prescribed medications or eyedrops; 4) offer a platform for patients to voice concerns between in-person consultations; and 5) provide translations into various languages while aiming to retain context and nuance.
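The first of these functions, automated reminders, could be backed by ordinary scheduling code, with the LLM reserved for the conversational layer. The sketch below expands a tapering eyedrop regimen into reminder times. The taper, the dose times in `DOSE_SLOTS`, and the function name `taper_schedule` are hypothetical illustrations, not a recommended prescription.

```python
from __future__ import annotations

from datetime import date, datetime, time, timedelta

# Hypothetical waking-hours dose times for 1 to 4 doses per day (placeholders).
DOSE_SLOTS = {
    4: [time(8), time(12), time(16), time(20)],
    3: [time(8), time(14), time(20)],
    2: [time(8), time(20)],
    1: [time(8)],
}


def taper_schedule(start: date, taper: list[tuple[int, int]]) -> list[datetime]:
    """Expand a taper, given as (doses_per_day, n_days) steps, into reminder datetimes."""
    reminders: list[datetime] = []
    day = start
    for doses_per_day, n_days in taper:
        if doses_per_day not in DOSE_SLOTS:
            raise ValueError(f"no dose slots defined for {doses_per_day} doses/day")
        for _ in range(n_days):
            for slot in DOSE_SLOTS[doses_per_day]:
                reminders.append(datetime.combine(day, slot))
            day += timedelta(days=1)
    return reminders


if __name__ == "__main__":
    # Hypothetical regimen: 4x/day for 7 days, then 3x, 2x and 1x/day for 7 days each.
    plan = taper_schedule(date(2024, 1, 1), [(4, 7), (3, 7), (2, 7), (1, 7)])
    print(len(plan))  # 70
```

In such a design, each reminder could be paired with an LLM-generated explanation of why the drop matters, covering point 3 above, while the timing itself stays deterministic.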

Although LLMs cannot replace the expertise and guidance of a trained optometrist or ophthalmologist, their capacity for engaging in intuitive, personalized dialogues with patients is noteworthy. These capabilities can strengthen the patient-provider relationship between clinical visits, particularly at a time characterized by a surge in ophthalmology patients and healthcare staffing constraints.

3. Optimizing Eye Care Delivery by Providers

Beyond supporting patients, LLM technology could also support care providers, including ophthalmologists, optometrists, ophthalmic nurses, and allied health staff.

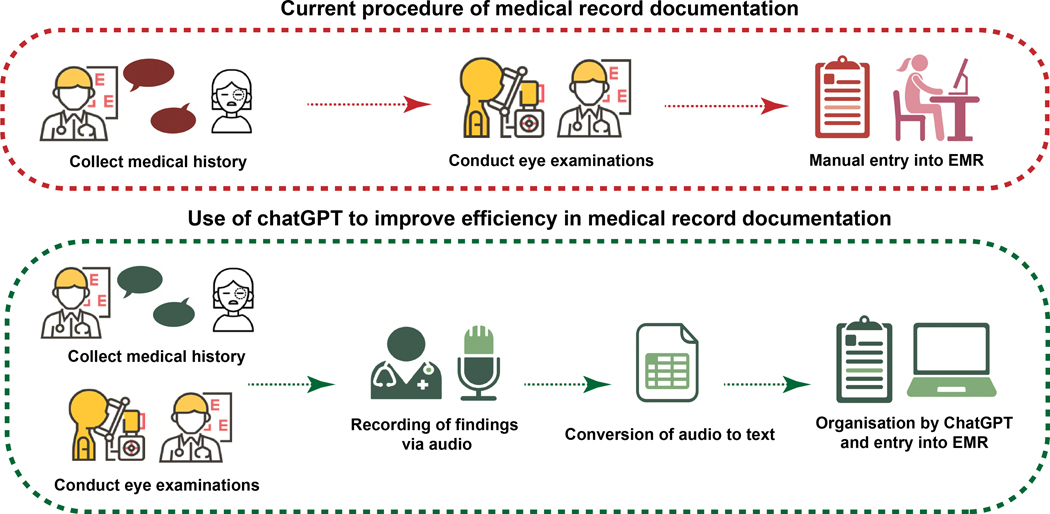

3.1. Streamlining Medical Record Documentation

A promising avenue for LLM application lies in assisting with electronic medical record (EMR) documentation, encompassing tasks such as crafting discharge summaries, consultation notes, operative dictations, and clinic letters (Figure 3). While the potential of LLMs to enhance healthcare practice has been met with both enthusiasm and caution (34), the idea of LLM-assisted documentation is gaining traction. LLMs such as ChatGPT have been trained on vast human language datasets, acquiring a nuanced grasp of medical terminology, including ophthalmology-specific terms. This benefit may be amplified when LLMs are combined with separate speech-to-text software, with the transcribed speech then structured by the LLM. Given that optometrists and ophthalmologists often allocate a significant portion of their time to examination and documentation (35), an LLM-assisted verbal narration system could allow more efficient documentation during patient examinations.

Figure 3.

Use of ChatGPT to enhance efficiency in medical record documentation.

Imagine a scenario in which LLM software functions similarly to modern ‘smart speakers’, actively recording patient symptoms, concerns, and specific ocular examination details. At the clinician’s request, the LLM could draft notes that align with the eye clinic’s documentation protocols, incorporate pertinent billing codes, and even propose subsequent investigations or follow-up appointments. As an exploratory exercise, we used ChatGPT-3.5 to emulate clinical communication scenarios characteristic of a tertiary ophthalmology service (Supplementary Figure 1, appendix p2–5). The responses generated by ChatGPT were largely articulate and comprehensive, though, based on its current performance, they would likely still require amendment by an ophthalmologist to ensure accuracy and patient-specific relevance. ChatGPT’s recommendations also tended towards a generalized approach, occasionally missing the subtleties of individualized treatment. Nonetheless, this approach still holds promise for reducing the time spent on manual documentation. As LLMs evolve and are trained on more comprehensive EMR datasets, more refined and efficient LLM-assisted documentation processes can be anticipated.
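The drafting step could, for example, be driven by a prompt that pins the LLM to the clinic's template and to the recorded findings. This sketch only builds the prompt and checks a returned draft for missing headings. The section names in `NOTE_SECTIONS`, the finding keys, the prompt wording, and both function names are hypothetical, and the call to an actual LLM service is deliberately left out.

```python
from __future__ import annotations

# Hypothetical clinic note template; section names are placeholders.
NOTE_SECTIONS = ["History", "Examination", "Assessment", "Plan", "Follow-up"]


def build_note_prompt(transcript: str, exam: dict[str, str]) -> str:
    """Assemble a prompt asking an LLM to draft a note in the clinic's format.

    `transcript` is assumed to come from separate speech-to-text software;
    `exam` maps examination items (e.g. "VA OD") to recorded values.
    """
    findings = "\n".join(f"- {name}: {value}" for name, value in exam.items())
    sections = "\n".join(f"{s}:" for s in NOTE_SECTIONS)
    return (
        "Draft an ophthalmology clinic note using ONLY the information below.\n"
        "Write 'not recorded' for anything missing; do not infer findings.\n\n"
        f"Consultation transcript:\n{transcript}\n\n"
        f"Examination findings:\n{findings}\n\n"
        f"Use exactly these section headings:\n{sections}\n"
    )


def missing_sections(draft: str) -> list[str]:
    """Flag template headings absent from an LLM draft before clinician review."""
    return [s for s in NOTE_SECTIONS if f"{s}:" not in draft]


if __name__ == "__main__":
    prompt = build_note_prompt(
        "Patient reports blurred vision in the right eye for 3 months.",
        {"VA OD": "6/18", "VA OS": "6/6", "IOP OD": "15 mmHg"},
    )
    print(prompt)
    print(missing_sections("History: ...\nPlan: ..."))  # ['Examination', 'Assessment', 'Follow-up']
```

A structural check like `missing_sections` does not verify clinical accuracy; as the text notes, the draft would still need review by the ophthalmologist.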

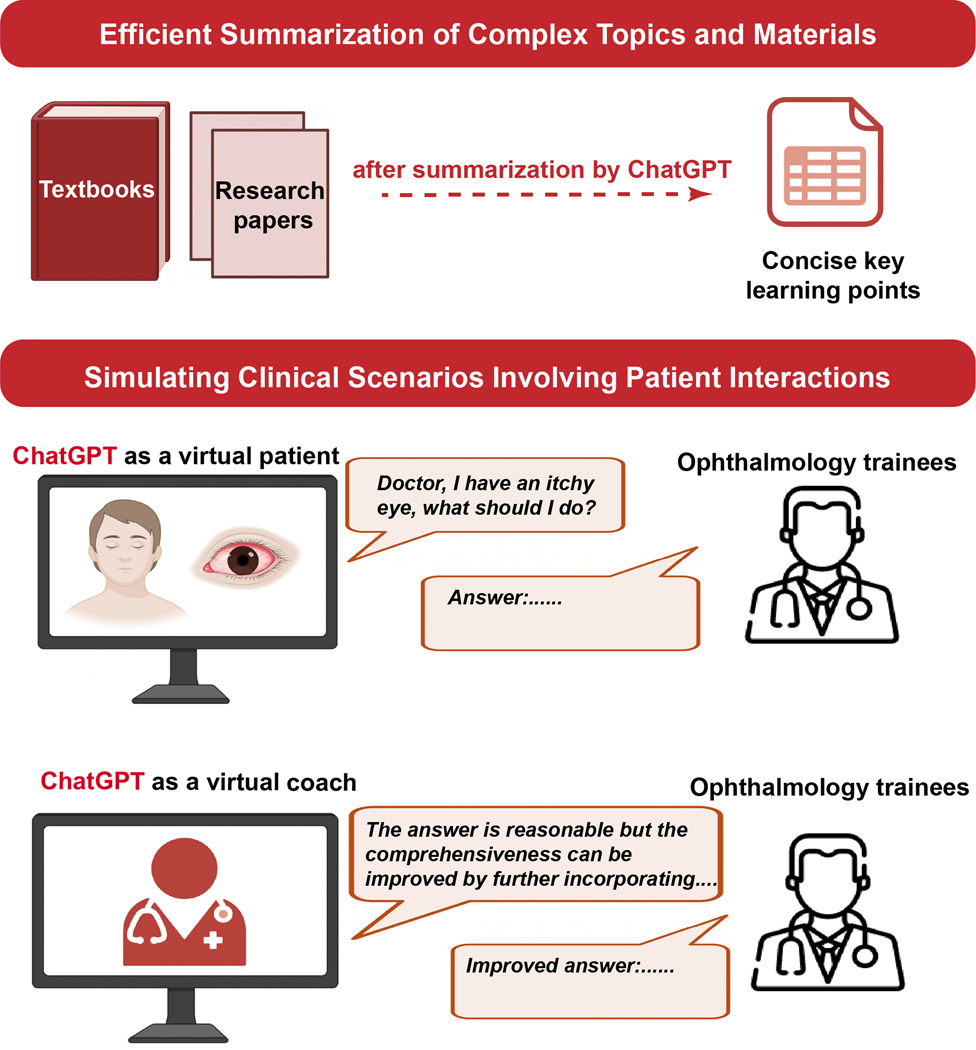

3.2. Enhancing Ophthalmic Education with LLMs

LLMs have considerable potential to enhance education for eye care professionals (Figure 4). One of their strengths lies in swiftly summarizing extensive texts, whether academic papers or comprehensive ophthalmology literature, into concise overviews. LLMs can distill key points from clinical guidelines or relevant articles on specific subjects. Furthermore, LLMs can simulate real-world interactions through role-play and verbal simulations. In this role, they can also serve as an immediate feedback tool to help educators refine their communication techniques.

Figure 4.

Use of ChatGPT to facilitate medical education and learning.

While LLMs cannot replace hands-on training for procedures, they can serve as useful post-training ‘refreshers’, summarizing crucial steps in patient counseling or in the procedure itself. For instance, ophthalmic nurses performing nurse-led intravitreal injections (36, 37) might review essential points before the procedure, while residents could revisit the critical stages of surgeries. By generating case studies and simulating clinical scenarios, LLMs can help providers improve their decision-making and patient interaction skills.

In the clinical setting, LLMs could be integrated into EMR systems to provide a quick summary of the patient, including key readings, before each consult. The provider could use this to gain an immediate broad overview of the patient’s profile and check specifics as required. This might be particularly useful in high-volume clinics.
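Such a summary could be grounded by first pulling the most recent structured readings from the EMR and passing them to the LLM as context. The `Reading` record, its field names, and the helper functions below are hypothetical stand-ins for whatever schema a given EMR actually exposes.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Reading:
    """One hypothetical EMR measurement row."""
    taken: date
    name: str   # e.g. "IOP OD" or "VA OS"
    value: str


def latest_readings(rows: list[Reading]) -> dict[str, Reading]:
    """Keep only the most recent reading for each measurement name."""
    latest: dict[str, Reading] = {}
    for r in rows:
        if r.name not in latest or r.taken > latest[r.name].taken:
            latest[r.name] = r
    return latest


def pre_consult_context(rows: list[Reading]) -> str:
    """Render the latest readings as compact text to prepend to an LLM summary request."""
    latest = latest_readings(rows)
    return "\n".join(
        f"{name}: {r.value} ({r.taken.isoformat()})" for name, r in sorted(latest.items())
    )


if __name__ == "__main__":
    rows = [
        Reading(date(2023, 1, 5), "IOP OD", "21 mmHg"),
        Reading(date(2023, 6, 1), "IOP OD", "16 mmHg"),
        Reading(date(2023, 6, 1), "VA OD", "6/9"),
    ]
    print(pre_consult_context(rows))
```

Selecting the readings in code, rather than asking the LLM to find them in raw records, keeps the numbers in the summary traceable to specific EMR entries.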

Recent studies (38, 39) suggest that LLMs show proficiency in answering ophthalmology-related queries, even without specialized training in the ophthalmology domain. When evaluated on ophthalmology board-style questions, for example, ChatGPT-4.0 and Bing Chat performed comparably to human respondents (39). Cai et al. (39) also identified areas where LLMs excelled, such as workup-type and single-step reasoning questions, and areas of difficulty, such as image interpretation, a domain still in its infancy for LLMs.

Beyond serving as topic ‘refreshers’, LLMs can also craft multiple-choice questions on exam topics to assist in exam preparation. An ophthalmology trainee, for instance, could feed ChatGPT an EyeWiki article and prompt it to generate test questions for their own subsequent review or mock self-assessment. In Supplementary Figure 2 (appendix p6), we show examples in which ChatGPT formulated pertinent questions and provided accurate answers using material extracted from EyeWiki, an eye encyclopaedia website.
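A workflow like this could ask the model for a machine-readable reply so that malformed questions are filtered out before a trainee sees them. The JSON shape, the prompt wording, the `MCQ` type, and both function names are assumptions for illustration; the factual accuracy of each answer would still need checking against the source article.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass
class MCQ:
    stem: str
    options: dict[str, str]  # option letter -> option text
    answer: str              # letter of the correct option


def mcq_prompt(article_text: str, n: int = 5) -> str:
    """Prompt asking an LLM to write questions grounded only in the pasted article."""
    return (
        f"Using only the article below, write {n} multiple-choice questions. "
        'Return a JSON list of objects with keys "stem", "options" '
        '(an object mapping "A"-"D" to text) and "answer" (one letter).\n\n'
        f"ARTICLE:\n{article_text}"
    )


def parse_mcqs(raw: str) -> list[MCQ]:
    """Parse the model's reply and drop malformed items.

    Raises json.JSONDecodeError if the reply is not valid JSON at all.
    """
    items: list[MCQ] = []
    for obj in json.loads(raw):
        stem = obj.get("stem")
        opts = obj.get("options")
        if (
            isinstance(stem, str)
            and isinstance(opts, dict)
            and set(opts) == {"A", "B", "C", "D"}
            and obj.get("answer") in opts
        ):
            items.append(MCQ(stem, opts, obj["answer"]))
    return items
```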

4. Challenges to Implementation and possible solutions

Despite the wide-ranging benefits LLMs might bring to eye care, concerns have also been raised about their applications(40, 41).

4.1. Constraints of LLMs in Ophthalmological Examinations and Procedures

Much of ophthalmological practice hinges on meticulous physical examination of the eyes, an aspect unattainable through mere text-based interactions with a language model. Key procedures such as assessing symptoms, gauging visual acuity, observing eye movements, performing tonometry, and conducting fundoscopy necessitate direct observation and cannot be entirely replicated virtually.

While LLMs are not intrinsically designed for clinical procedures, their integration with API plugins and complementary software tools can significantly enhance their applicability. For instance, integrating ChatGPT with the Argil plugin enables the creation of images from textual prompts (42). By combining LLMs with ocular photo-based deep learning algorithms, automated quantification and textual interpretation of ocular imaging data may become possible. This collaborative approach paves the way for AI-assisted communication of potential diagnoses to ophthalmologists, expanding the scope of LLMs in ophthalmology.
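One hypothetical hand-off point between an image model and an LLM is sketched below: numeric outputs from a fundus-photo DL model are serialized into a short, explicit text block that an LLM could then rephrase for clinicians. The model outputs and the function name `summarize_dl_output` are assumptions; the grade labels follow the five-level international clinical diabetic retinopathy severity scale.

```python
from __future__ import annotations

# Five-level diabetic retinopathy (DR) severity labels.
DR_GRADES = ["no DR", "mild NPDR", "moderate NPDR", "severe NPDR", "PDR"]


def summarize_dl_output(eye: str, grade_probs: list[float], vcdr: float) -> str:
    """Turn hypothetical DL model outputs into a text block an LLM could then explain.

    `grade_probs` holds one probability per DR grade; `vcdr` is a vertical
    cup-to-disc ratio estimated by a (hypothetical) optic disc model.
    """
    if len(grade_probs) != len(DR_GRADES) or abs(sum(grade_probs) - 1.0) > 1e-6:
        raise ValueError("expected one probability per DR grade, summing to 1")
    top = max(range(len(DR_GRADES)), key=lambda i: grade_probs[i])
    return (
        f"Eye: {eye}\n"
        f"Most likely DR grade: {DR_GRADES[top]} (p={grade_probs[top]:.2f})\n"
        f"Vertical cup-to-disc ratio: {vcdr:.2f}\n"
        "These are automated estimates pending ophthalmologist review."
    )


if __name__ == "__main__":
    print(summarize_dl_output("OD", [0.05, 0.1, 0.6, 0.2, 0.05], 0.45))
```

Passing the LLM only this validated summary, rather than raw images, keeps image interpretation with the specialized DL model, which the text notes remains a weak area for LLMs.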

4.2. Privacy and Security Concerns Related to LLMs

A pressing concern with the integration of LLMs into clinical settings revolves around cybersecurity and data privacy, especially when the software requires training on EMR data or is directly embedded in a live EMR system (43). To be clinically pertinent, an LLM would inevitably require access to comprehensive patient medical histories, encompassing prior eye conditions, ocular images, examination records, surgical histories, medications, and allergies. This raises the pivotal issue of patient consent and acceptance.

Medical data protection in ophthalmology must adhere to various legal and regulatory requirements, such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States or the General Data Protection Regulation (GDPR) in the European Union. In particular, LLMs in ophthalmology clinics would need access to eye data management systems such as ZEISS FORUM or the Heidelberg Eye Explorer (HEYEX 2). Because ocular images can potentially be considered patient identifiers, the security of ophthalmic imaging data handled by any new LLM tool must be reviewed before implementation.

Ensuring adherence to these regulations, while implementing robust access controls, encryption, data backups, regular audits, and timely data breach notifications, can be intricate and resource-intensive. A collaborative approach involving healthcare providers, technology vendors, regulatory agencies, and researchers is imperative. Such collaboration would aim to clarify how LLM algorithms interact with ocular EMR data, assess the feasibility of safeguards to uphold individual data privacy, and ensure alignment with global data protection mandates.

To address security concerns associated with integrating LLM systems into EMRs, blockchain technology has been proposed as a promising solution. Unlike traditional centralized databases, blockchain operates on a decentralized, distributed ledger framework (44, 45). Every interaction between the EMR and the LLM could be securely recorded as a distinct block within this chain. By using cryptographic algorithms and distributing data across multiple nodes, blockchain could substantially mitigate data breach risks during interactions between the EMR and the LLM. Furthermore, the inherent transparency of blockchain could facilitate rapid identification and tracing of discrepancies in EMR data, supporting timely error detection and correction.
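The core tamper-evidence idea can be sketched as a hash chain, in which each logged EMR-LLM interaction stores the hash of the previous entry, so that altering any past entry breaks the links that follow it. This is a deliberately simplified, single-node sketch: it omits the decentralized replication and consensus that a real blockchain adds, and the `AuditChain` class and payload fields are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
import time
from dataclasses import dataclass, field


@dataclass
class Block:
    index: int
    timestamp: float
    payload: dict       # e.g. which EMR record an LLM accessed, and for what task
    prev_hash: str
    hash: str = field(init=False)

    def __post_init__(self) -> None:
        self.hash = self.compute_hash()

    def compute_hash(self) -> str:
        """SHA-256 over the block contents, including the previous block's hash."""
        body = json.dumps(
            [self.index, self.timestamp, self.payload, self.prev_hash], sort_keys=True
        )
        return hashlib.sha256(body.encode()).hexdigest()


class AuditChain:
    """Append-only, hash-linked log of EMR-LLM interactions (single node, no consensus)."""

    def __init__(self) -> None:
        self.blocks = [Block(0, 0.0, {"genesis": True}, "0" * 64)]

    def append(self, payload: dict) -> Block:
        prev = self.blocks[-1]
        block = Block(prev.index + 1, time.time(), payload, prev.hash)
        self.blocks.append(block)
        return block

    def verify(self) -> bool:
        """Return False if any block was altered or a link was broken."""
        if self.blocks[0].hash != self.blocks[0].compute_hash():
            return False
        for prev, cur in zip(self.blocks, self.blocks[1:]):
            if cur.prev_hash != prev.hash or cur.hash != cur.compute_hash():
                return False
        return True


if __name__ == "__main__":
    chain = AuditChain()
    chain.append({"record_id": "hypothetical-001", "action": "summarize"})
    print(chain.verify())  # True
    chain.blocks[1].payload["action"] = "export"
    print(chain.verify())  # False
```

A hash chain makes tampering detectable but does not by itself prevent breaches or protect confidentiality; encryption and access control would still be needed alongside it.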

4.3. False Responses by LLMs

A significant concern with LLMs is the potential for false or misleading responses, colloquially termed ‘hallucinations’ (11). Although these models have shown impressive performance on United States Medical Licensing Examination (USMLE) questions (46), they can produce factual or contextual medical errors that may be deceptively convincing to patients. ChatGPT, for instance, in its current form derives its knowledge primarily from the vast expanse of public internet text. As a result, it lacks comprehensive understanding and can only mimic general cognitive knowledge based on prevalent linguistic patterns; it is not specifically tailored for intricate technical or medical tasks (6). A profound grasp of eye anatomy, physiology, and disease may therefore be beyond current LLM capabilities.

To truly excel as an aid in eye care, LLMs would need training on specialized ophthalmology research literature and clinical guidelines. However, much of this material is not publicly accessible, placing it outside the scope of current LLM training. Hence, the onus would be on clinicians to proofread outputs and ensure they are factually grounded.

In addition, previous deep learning applications in ophthalmology, such as those for fundus image interpretation, were built on narrowly defined models with clear outcome measures, such as diabetic retinopathy or cataract detection. In contrast, given the broad capabilities and rapid evolution of LLMs, assessing their performance and setting definitive clinical performance standards is a more intricate challenge. Thorough and robust evaluation is essential to establish the reliability and safety of these tools in clinical settings.

4.4. Other capability limitations of LLMs

Another notable limitation of LLMs is their potential inability to keep pace with the latest advances in the diagnosis and treatment of eye diseases. Given the dynamic nature of medical knowledge, LLMs may lag behind if they do not incorporate the latest research findings or clinical guidelines. Furthermore, when offering clinical recommendations outside a clinical setting, without linkage to the patient’s EMR, LLMs may not be able to provide tailored advice, compromising the precision of their suggestions. Lastly, language support remains a concern: LLMs may not cater to all languages, especially with regard to specialized medical vocabulary. This limitation poses risks of misinterpretation and raises broader issues of accessibility and health equity.

4.5. Ethical considerations

In recent months, several major disciplines and professions, not limited to ophthalmology, have begun to consider what LLMs mean for them. Given how fast the technology is moving, there are ethical and legal concerns about liability should medical errors arise from any of the practical drawbacks above (47). Until accuracy and safety standards acceptable to the medical community are in place, LLM use for medical prompts should be limited, and responses should ideally carry explicit warnings. Arguably, many bioethics concerns associated with LLMs mirror those prevalent in existing AI applications in medicine, encompassing data ownership, consent, bias, and privacy. However, LLMs have brought other ethical issues much more to the forefront (48), including misinformation, medical deepfakes, the imperative of informing patients when AI analyzes their medical data, and potential inequities stemming from overly rapid technological advancement. On the other hand, LLMs also present promising avenues for democratizing knowledge and empowering patients.

5. Conclusions

LLMs hold significant promise in ophthalmology, offering transformative avenues to enhance clinical workflows and care paradigms. Yet, before these models are integrated into existing health systems, it is imperative to address pressing concerns about their robustness and reliability. While we remain optimistic about LLMs, drawing parallels with other digital ophthalmology tools such as telemedicine and ocular photo-based deep learning, it is crucial to prioritize accuracy assessment, governance, and the establishment of protective measures.

Supplementary Material

Acknowledgements:

YCT is supported by grants (NMRC, MOH, HCSAINV21nov-0001) from the National Medical Research Council, Singapore. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

PVW declares financial interests as co-founder of Enlighten Imaging, an early-stage medical technology start-up company devoted to hyperspectral retinal imaging and image analysis, including the development of AI systems; research grant support from Roche and Bayer; honoraria from Roche, Bayer, Novartis, and Mylan. RT declares receiving consulting fees from Novartis, AbbVie Allergan, Bayer, Alcon, Roche Genentech, Thea, Apellis, Iveric Bio, and Oculis; honoraria from Optic 2000; receiving support for attending meetings from Novartis, AbbVie Allergan and Bayer; participation on advisory board for Novartis, AbbVie Allergan, Bayer, Apellis, Iveric Bio, Oculis and Roche Genentech; his leadership role as president-elect in Euretina; stock of Oculis; receipt of equipment and materials from Zeiss. SS declares grants from Bayer and Boehringer Ingelheim; consulting fees from Roche, AbbVie, Apellis, Bayer, Biogen, Boehringer Ingelheim, Novartis, Janssen Pharmaceuticals, Optos, Ocular Therapeutix and OcuTerra; support for attending meetings and travel from Bayer and Roche; participation on data safety monitoring board or advisory board for Novo Nordisk and Bayer; leadership in RCOphth, Macular Society, and Editor EYE; stock in Eyebiotech; receipt of equipment and materials from Boehringer Ingelheim. AYL reports grants for affiliated institutes from Santen, Novartis, Carl Zeiss Meditec, Microsoft, NVIDIA, NIH/NEI K23EY029246, NIH OT2OD032644, Research to Prevent Blindness, and the Latham Vision Innovation Award. AYL reports consulting fees from USDA, Genentech, Verana Health, Johnson & Johnson, Gyroscope, Janssen Research and Development, and Regeneron and payment for lectures from Topcon and from Alcon Research for various educational events and activities. ARR declares grants from Health and Medical Research Fund (ref. 19201991).
CSL reports grants for affiliated institution from NIH/NIA R01AG060942, NIH/NIA U19AG066567, NIH OT2OD032644, Research to Prevent Blindness, and the Latham Vision Innovation Award and consulting fees from Boehringer Ingelheim. TYW declares consulting fees from Aldropika Therapeutics, Bayer, Boehringer Ingelheim, Genentech, Iveric Bio, Novartis, Plano, Oxurion, Roche, Sanofi, and Shanghai Henlius; being an inventor, patent holder, and cofounder of start-up companies EyRiS and Visre.

Footnotes

Declaration of Interests:

The other authors declare no competing interests.

REFERENCES

- 1.OpenAI. GPT-4 Technical Report2023 March 01, 2023:[arXiv:2303.08774 p.]. Available from: https://ui.adsabs.harvard.edu/abs/2023arXiv230308774O.

- 2.Touvron H, Lavril T, Izacard G, Martinet X, Lachaux M-A, Lacroix T, et al. Llama: Open and efficient foundation language models. arXiv preprint arXiv:230213971. 2023.

- 3.Chowdhery A, Narang S, Devlin J, Bosma M, Mishra G, Roberts A, et al. Palm: Scaling language modeling with pathways. arXiv preprint arXiv:220402311. 2022.

- 4.Scao TL, Fan A, Akiki C, Pavlick E, Ilić S, Hesslow D, et al. Bloom: A 176b-parameter open-access multilingual language model. arXiv preprint arXiv:221105100. 2022.

- 5.Hoffmann J, Borgeaud S, Mensch A, Buchatskaya E, Cai T, Rutherford E, et al. Training compute-optimal large language models. arXiv preprint arXiv:220315556. 2022.

- 6.Nori H, King N, McKinney SM, Carignan D, Horvitz E. Capabilities of gpt-4 on medical challenge problems. arXiv preprint arXiv:230313375. 2023.

- 7.Yasunaga M, Leskovec J, Liang P. Linkbert: Pretraining language models with document links. arXiv preprint arXiv:220315827. 2022.

- 8.Yasunaga M, Bosselut A, Ren H, Zhang X, Manning CD, Liang PS, et al. Deep bidirectional language-knowledge graph pretraining. Advances in Neural Information Processing Systems. 2022;35:37309–23. [Google Scholar]

- 9.Taylor R, Kardas M, Cucurull G, Scialom T, Hartshorn A, Saravia E, et al. Galactica: A large language model for science . arXiv preprint arXiv:221109085. 2022.

- 10. Singhal K, Azizi S, Tu T, Mahdavi SS, Wei J, Chung HW, et al. Large language models encode clinical knowledge. arXiv preprint arXiv:2212.13138. 2022.

- 11. Lee P, Bubeck S, Petro J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N Engl J Med. 2023;388(13):1233–9.

- 12. Ting DSW, Peng L, Varadarajan AV, Keane PA, Burlina PM, Chiang MF, et al. Deep learning in ophthalmology: The technical and clinical considerations. Prog Retin Eye Res. 2019;72:100759.

- 13. Gunasekeran DV, Tham YC, Ting DSW, Tan GSW, Wong TY. Digital health during COVID-19: lessons from operationalising new models of care in ophthalmology. Lancet Digit Health. 2021;3(2):e124–e34.

- 14. Tham YC, Husain R, Teo KYC, Tan ACS, Chew ACY, Ting DS, et al. New digital models of care in ophthalmology, during and beyond the COVID-19 pandemic. Br J Ophthalmol. 2022;106(4):452–7.

- 15. Li JO, Liu H, Ting DSJ, Jeon S, Chan RVP, Kim JE, et al. Digital technology, tele-medicine and artificial intelligence in ophthalmology: A global perspective. Prog Retin Eye Res. 2021;82:100900.

- 16. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA. 2016;316(22):2402–10.

- 17. Ting DSW, Cheung CY, Lim G, Tan GSW, Quang ND, Gan A, et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA. 2017;318(22):2211–23.

- 18. Hood DC, De Moraes CG. Efficacy of a Deep Learning System for Detecting Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. Ophthalmology. 2018;125(8):1207–8.

- 19. Burlina PM, Joshi N, Pekala M, Pacheco KD, Freund DE, Bressler NM. Automated Grading of Age-Related Macular Degeneration From Color Fundus Images Using Deep Convolutional Neural Networks. JAMA Ophthalmol. 2017;135(11):1170–6.

- 20. Zhang Z, Wang Y, Zhang H, Samusak A, Rao H, Xiao C, et al. Artificial intelligence-assisted diagnosis of ocular surface diseases. Front Cell Dev Biol. 2023;11:1133680.

- 21. Fang X, Deshmukh M, Chee ML, Soh ZD, Teo ZL, Thakur S, et al. Deep learning algorithms for automatic detection of pterygium using anterior segment photographs from slit-lamp and hand-held cameras. Br J Ophthalmol. 2022;106(12):1642–7.

- 22. Tham YC, Anees A, Zhang L, Goh JHL, Rim TH, Nusinovici S, et al. Referral for disease-related visual impairment using retinal photograph-based deep learning: a proof-of-concept, model development study. Lancet Digit Health. 2021;3(1):e29–e40.

- 23. Khou V, Ly A, Moore L, Markoulli M, Kalloniatis M, Yapp M, et al. Review of referrals reveal the impact of referral content on the triage and management of ophthalmology wait lists. BMJ Open. 2021;11(9):e047246.

- 24. Hribar MR, Huang AE, Goldstein IH, Reznick LG, Kuo A, Loh AR, et al. Data-Driven Scheduling for Improving Patient Efficiency in Ophthalmology Clinics. Ophthalmology. 2019;126(3):347–54.

- 25. Channa R, Zafar SN, Canner JK, Haring RS, Schneider EB, Friedman DS. Epidemiology of Eye-Related Emergency Department Visits. JAMA Ophthalmol. 2016;134(3):312–9.

- 26. Shah R, Edgar DF, Khatoon A, Hobby A, Jessa Z, Yammouni R, et al. Referrals from community optometrists to the hospital eye service in Scotland and England. Eye (Lond). 2022;36(9):1754–60.

- 27. Chen CL, McLeod SD, Lietman TM, Shen H, Boscardin WJ, Chang HP, et al. Preoperative Medical Testing and Falls in Medicare Beneficiaries Awaiting Cataract Surgery. Ophthalmology. 2021;128(2):208–15.

- 28. Cuttitta A, Joseph SS, Henderson J, Portney DS, Keedy JM, Benedict WL, et al. Feasibility of a Risk-Based Approach to Cataract Surgery Preoperative Medical Evaluation. JAMA Ophthalmol. 2021;139(12):1309–12.

- 29. Martin LR, Williams SL, Haskard KB, Dimatteo MR. The challenge of patient adherence. Ther Clin Risk Manag. 2005;1(3):189–99.

- 30. Keenum Z, McGwin G Jr, Witherspoon CD, Haller JA, Clark ME, Owsley C. Patients’ Adherence to Recommended Follow-up Eye Care After Diabetic Retinopathy Screening in a Publicly Funded County Clinic and Factors Associated With Follow-up Eye Care Use. JAMA Ophthalmol. 2016;134(11):1221–8.

- 31. Weinreb RN, Aung T, Medeiros FA. The pathophysiology and treatment of glaucoma: a review. JAMA. 2014;311(18):1901–11.

- 32. Yee A, Walsh K, Schulze M, Jones L. The impact of patient behaviour and care system compliance on reusable soft contact lens complications. Cont Lens Anterior Eye. 2021;44(5):101432.

- 33. Chuckpaiwong V, Nonpassopon M, Lekhanont K, Udomwong W, Phimpho P, Cheewaruangroj N. Compliance with Lid Hygiene in Patients with Meibomian Gland Dysfunction. Clin Ophthalmol. 2022;16:1173–82.

- 34. Sallam M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare (Basel). 2023;11(6).

- 35. Baxter SL, Gali HE, Huang AE, Millen M, El-Kareh R, Nudleman E, et al. Time Requirements of Paper-Based Clinical Workflows and After-Hours Documentation in a Multispecialty Academic Ophthalmology Practice. Am J Ophthalmol. 2019;206:161–7.

- 36. Raman V, Triggol A, Cudrnak T, Konstantinos P. Safety of nurse-led intravitreal injection of dexamethasone (Ozurdex) implant service. Audit of first 1000 cases. Eye (Lond). 2021;35(2):388–92.

- 37. Teo AWJ, Rim TH, Wong CW, Tsai ASH, Loh N, Jayabaskar T, et al. Design, implementation, and evaluation of a nurse-led intravitreal injection programme for retinal diseases in Singapore. Eye (Lond). 2020;34(11):2123–30.

- 38. Antaki F, Touma S, Milad D, El-Khoury J, Duval R. Evaluating the Performance of ChatGPT in Ophthalmology: An Analysis of Its Successes and Shortcomings. Ophthalmol Sci. 2023;3(4):100324.

- 39. Cai LZ, Shaheen A, Jin A, Fukui R, Yi JS, Yannuzzi N, et al. Performance of Generative Large Language Models on Ophthalmology Board-Style Questions. Am J Ophthalmol. 2023;254:141–9.

- 40. Harrer S. Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. eBioMedicine. 2023;90.

- 41. Will ChatGPT transform healthcare? Nat Med. 2023;29(3):505–6.

- 42. Argil. How to Generate ChatGPT Images Using Argil Plugin. 2023. Available from: https://www.argil.ai/blog/how-to-generate-chatgpt-images-using-argil-plugin.

- 43. Hasal M, Nowaková J, Ahmed Saghair K, Abdulla H, Snášel V, Ogiela L. Chatbots: Security, privacy, data protection, and social aspects. Concurrency and Computation: Practice and Experience. 2021;33(19):e6426.

- 44. Witte JH. The Blockchain: a gentle four page introduction. arXiv preprint arXiv:1612.06244. 2016.

- 45. Vazirani AA, O’Donoghue O, Brindley D, Meinert E. Blockchain vehicles for efficient medical record management. NPJ Digit Med. 2020;3(1):1.

- 46. Kung TH, Cheatham M, Medenilla A, Sillos C, De Leon L, Elepaño C, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2(2):e0000198.

- 47. Parviainen J, Rantala J. Chatbot breakthrough in the 2020s? An ethical reflection on the trend of automated consultations in health care. Med Health Care Philos. 2022;25(1):61–71.

- 48. Cohen IG. What Should ChatGPT Mean for Bioethics? Am J Bioeth. 2023:1–9.
