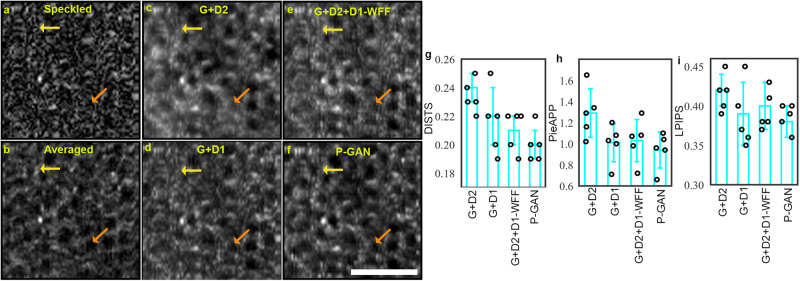

Fig. 2. Effect of parallel discriminator generative adversarial network (P-GAN) components on the recovery of retinal pigment epithelial (RPE) cells.

a Single speckled image compared with images of the RPE obtained via b average of 120 volumes (ground truth), c generator with the convolutional neural network (CNN) discriminator (G + D2), d generator with the twin discriminator (G + D1), e generator with CNN and twin discriminators without the weighted feature fusion (WFF) module (G + D2 + D1-WFF), and f P-GAN. The yellow and orange arrows indicate cells that are better visualized with P-GAN than with the intermediate models (c–e). g–i Comparison of recovery performance using the deep image structure and texture similarity (DISTS), perceptual image error assessment through pairwise preference (PieAPP), and learned perceptual image patch similarity (LPIPS) metrics. For each method, the bars show the mean value of each metric across n = 5 healthy participants (individual participants shown as circles), and the error bars denote the standard deviation. Scale bar: 50 µm.
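The bar graphs in g–i summarize one metric value per participant as a mean with a standard-deviation error bar. A minimal sketch of that summary step follows, assuming each metric (DISTS, PieAPP, or LPIPS) has already been computed per participant. The function name `summarize_metric` and the input layout are hypothetical, and the sample standard deviation (n − 1 denominator) is an assumption, since the caption does not state which estimator was used.

```python
from statistics import mean, stdev


def summarize_metric(scores_by_method):
    """Return {method: (mean, sd)} for one metric across participants.

    scores_by_method (hypothetical layout) maps a method name such as
    "P-GAN" or "G + D2" to a list with one metric value per participant.
    Assumption: the sample standard deviation (n - 1 denominator) is used.
    """
    summary = {}
    for method, scores in scores_by_method.items():
        # A sample standard deviation needs at least two participants.
        if len(scores) < 2:
            raise ValueError(
                f"{method}: need at least two participants for a standard deviation"
            )
        summary[method] = (mean(scores), stdev(scores))
    return summary
```

Calling the function once per metric, with one list of five participant scores per method, yields the bar height (mean) and error-bar half-length (standard deviation) for each bar; the individual scores correspond to the circles overlaid on the bars.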