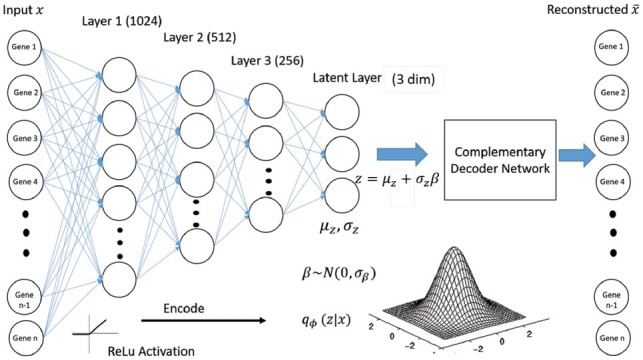

Fig. 1.

Structure of the VAE used in Dhaka. We use three intermediate dense layers of 1024, 512 and 256 nodes between the input and the latent layer. All layers in the encoder and decoder networks use ReLU activation except the output layer, which uses a sigmoid activation. The latent layer has three nodes for encoding the mean and three for encoding the variance of the Gaussian distribution. The latent representation z, which is the input to the decoder network, is then sampled from that distribution using the reparameterization trick (Kingma and Welling, 2013).
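The forward pass described in the caption can be sketched in NumPy as below. This is a minimal illustration under stated assumptions, not Dhaka's implementation: the input size `N_FEATURES`, the He-style weight initialisation, the mirrored decoder widths (3 → 256 → 512 → 1024 → input), the linear (non-ReLU) mean and variance heads, and the choice to encode the log-variance rather than the variance directly are all assumptions made for illustration. The helper names `init_vae` and `forward` are hypothetical.

```python
import numpy as np

# Hypothetical input size: the caption does not state the input dimension.
N_FEATURES = 2000
HIDDEN = [1024, 512, 256]  # encoder widths from Fig. 1
LATENT = 3                 # three nodes for the mean, three for the variance


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_dense(rng, n_in, n_out):
    # He-style initialisation (assumption; not specified in the caption).
    w = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
    b = np.zeros(n_out)
    return w, b


def init_vae(rng, n_features=N_FEATURES):
    enc_sizes = [n_features] + HIDDEN
    encoder = [init_dense(rng, a, b) for a, b in zip(enc_sizes[:-1], enc_sizes[1:])]
    mean_head = init_dense(rng, HIDDEN[-1], LATENT)
    logvar_head = init_dense(rng, HIDDEN[-1], LATENT)
    # Assumed mirrored decoder: 3 -> 256 -> 512 -> 1024 -> n_features.
    dec_sizes = [LATENT] + HIDDEN[::-1] + [n_features]
    decoder = [init_dense(rng, a, b) for a, b in zip(dec_sizes[:-1], dec_sizes[1:])]
    return encoder, mean_head, logvar_head, decoder


def forward(params, x, rng):
    encoder, mean_head, logvar_head, decoder = params
    h = x
    for w, b in encoder:  # ReLU on every intermediate encoder layer
        h = relu(h @ w + b)
    # Linear heads; log-variance is an assumption made for numerical stability.
    mu = h @ mean_head[0] + mean_head[1]
    logvar = h @ logvar_head[0] + logvar_head[1]
    # Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I),
    # so the randomness is isolated in eps and gradients can flow through mu and sigma.
    eps = rng.standard_normal(mu.shape)
    z = mu + np.exp(0.5 * logvar) * eps
    h = z
    for w, b in decoder[:-1]:  # ReLU on intermediate decoder layers
        h = relu(h @ w + b)
    w, b = decoder[-1]
    x_hat = sigmoid(h @ w + b)  # sigmoid on the output layer only
    return x_hat, mu, logvar, z


rng = np.random.default_rng(0)
params = init_vae(rng)
x = rng.random((4, N_FEATURES))  # a hypothetical batch of 4 inputs scaled to [0, 1)
x_hat, mu, logvar, z = forward(params, x, rng)
print(x_hat.shape, z.shape)  # (4, 2000) (4, 3)
```

Sampling z through an explicit noise variable is what makes the reparameterization trick useful: the encoder outputs stay deterministic functions of the input, and only `eps` is random.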