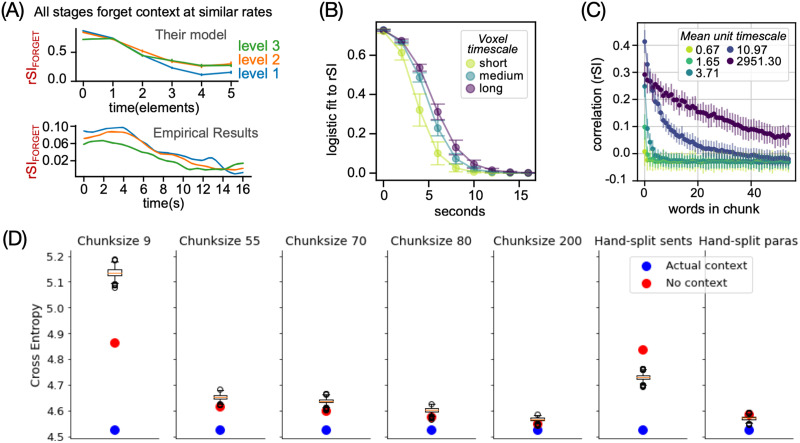

Figure 5.

In silico adaptation of a study on forgetting behavior during natural language comprehension. In the original study, Chien and Honey (2020) scrambled the paragraphs of a story and analyzed how quickly different brain regions forgot the incorrect context preceding each paragraph. The in silico adaptation used the MT-LSTM-based encoding model to predict brain activity at different points in a paragraph when it was preceded by incorrect context. (A) The original study reported that all brain regions (denoted by different colored lines) forgot information at a similar rate, despite differences in their construction timescales. (B) In contrast, the in silico replication estimated that regions with longer construction timescales also forgot information more slowly. (C) Within the MT-LSTM itself, the forgetting rate of each unit was related to its attributed timescale. (D) Next, the MT-LSTM's language modeling abilities were tested on shuffled sentences or paragraphs. The DL model achieved better next-word prediction performance when given the incoherent, shuffled context than when given no context at all. This shows that the DL model retains the incoherent information, possibly because doing so helps with the language modeling task it was originally trained on, or because the model has no explicit mechanism to flush out information when the context changes (at a sentence or paragraph boundary). The computational model's forgetting behavior thus differs from that of the brain, revealing specific flaws in the in silico model that could be addressed in future versions, such as a modified MT-LSTM. DL = deep learning; MT-LSTM = multi-timescale long short-term memory; rSI = correlation between scrambled and intact chunks.
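As an illustration of the rSI measure defined in the caption, the following is a minimal sketch under stated assumptions, not the procedure used in either study. It assumes that responses are stored as arrays of shape (time points, voxels) and that rSI at each time point is the Pearson correlation, across voxels, between the response to a chunk heard after scrambled context and the response to the same chunk heard after intact context. The function name `rsi_by_timepoint` and the synthetic data are hypothetical.

```python
import numpy as np


def rsi_by_timepoint(intact, scrambled):
    """Pearson correlation across voxels at each time point.

    Both inputs are assumed to have shape (n_timepoints, n_voxels)
    and to be aligned to the start of the same chunk.
    """
    intact = np.asarray(intact, dtype=float)
    scrambled = np.asarray(scrambled, dtype=float)
    if intact.shape != scrambled.shape:
        raise ValueError("intact and scrambled must have the same shape")

    # Center each time point's spatial pattern across voxels.
    a = intact - intact.mean(axis=1, keepdims=True)
    b = scrambled - scrambled.mean(axis=1, keepdims=True)

    # Row-wise Pearson correlation.
    num = (a * b).sum(axis=1)
    den = np.sqrt((a ** 2).sum(axis=1) * (b ** 2).sum(axis=1))
    return num / den


# Synthetic example (hypothetical data): the scrambled-context response
# starts as unrelated noise and converges to the intact-context response.
rng = np.random.default_rng(0)
n_timepoints, n_voxels = 20, 200
intact = rng.standard_normal((n_timepoints, n_voxels))
noise = rng.standard_normal((n_timepoints, n_voxels))
w = np.linspace(0.0, 1.0, n_timepoints)[:, None]
scrambled = w * intact + (1.0 - w) * noise

rsi = rsi_by_timepoint(intact, scrambled)
print(np.round(rsi, 2))
```

Under this reading, a region or unit that forgets quickly would show rSI rising toward its ceiling soon after the chunk begins, whereas a slow forgetter would show a more gradual rise.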