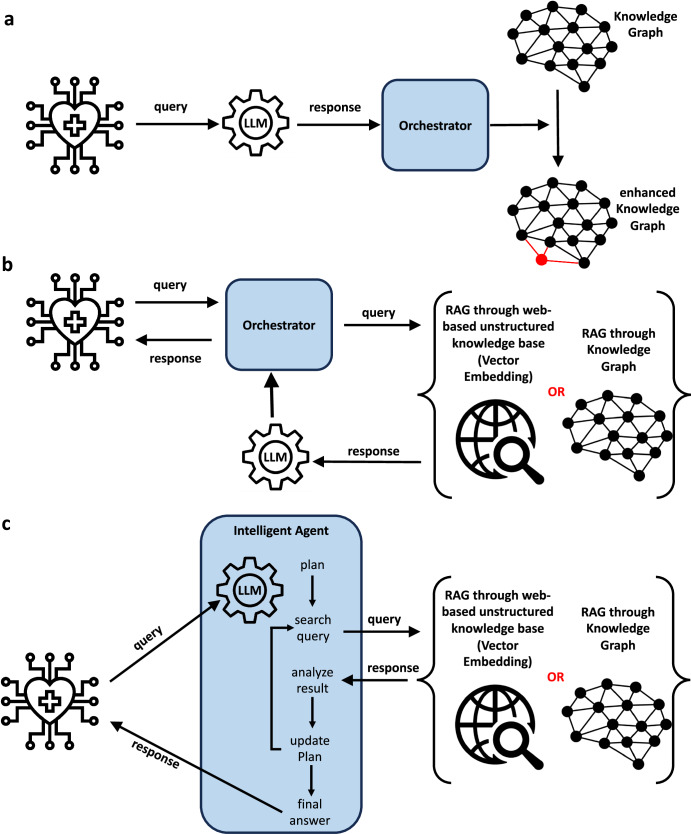

Fig. 1. Combining large language models with knowledge graphs (KGs), including in retrieval-augmented generation (RAG).

**a** Large language models (LLMs) can be used to automate the construction, enrichment and refinement of KGs from text queries, which can be generated from medical information systems; **b** RAG improves LLM performance by either searching unstructured web-based knowledge bases (stored as vector embeddings) or retrieving information from KGs, and then using the retrieved output to refine the LLM prompt; **c** in more sophisticated approaches to RAG, LLMs and KGs (or vector embeddings) can be used side by side or hybridized to address medical information reasoning tasks. Icons created by the authors, I Putu Kharismayadi, Lucas Rathgeb and Nubaia Karim Barsha from the Noun Project (https://thenounproject.com/).