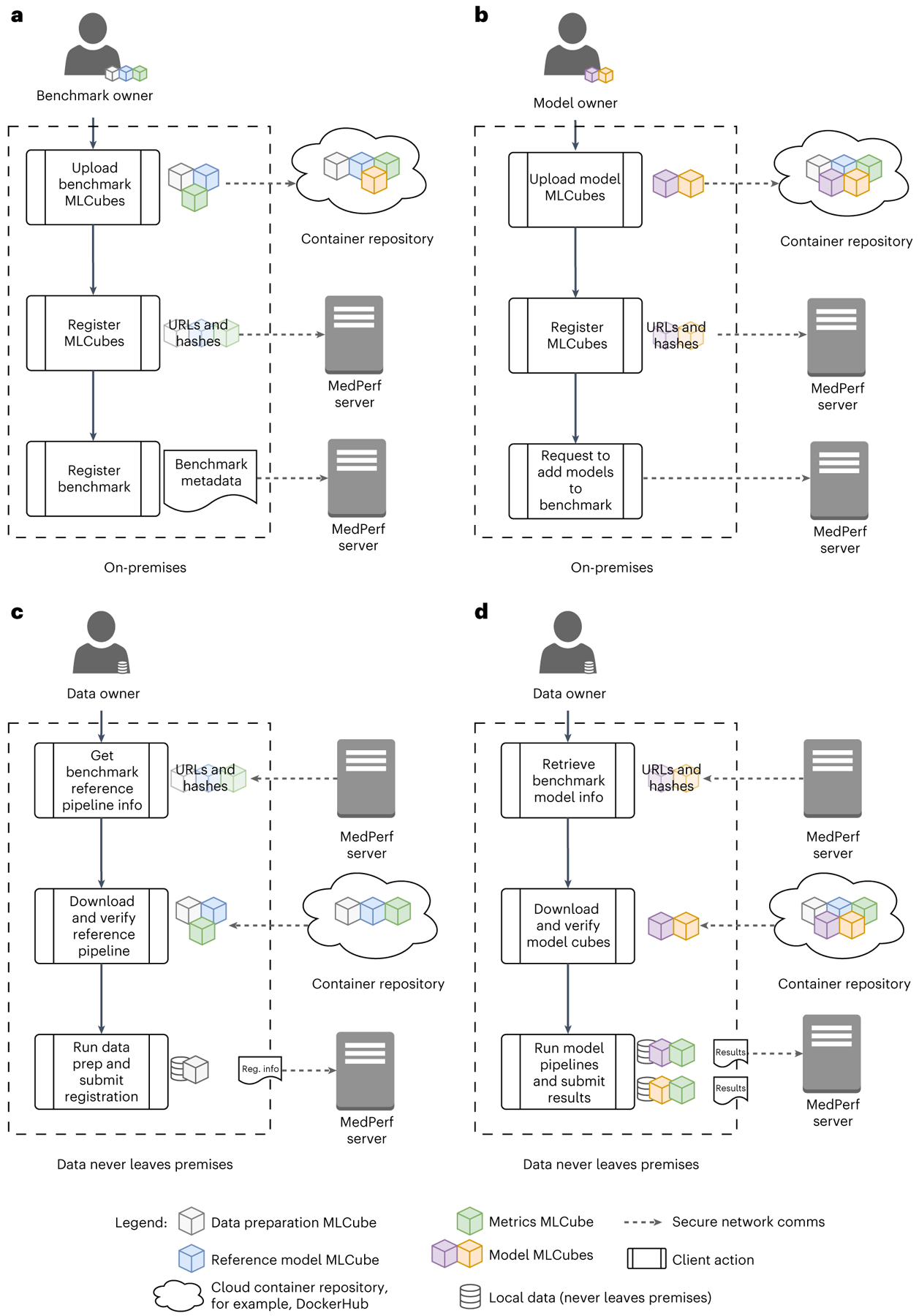

Fig. 4 | Description of MedPerf workflows.

All user actions are performed via the MedPerf client, except uploading to container repositories. a, Benchmark registration by the benchmark committee: the committee uploads the data preparation, reference model and evaluation metrics MLCubes to a container repository and then registers them with the MedPerf server. The committee then submits the benchmark registration, including required benchmark metadata. b, Model registration by the model owner: the model owner uploads the model MLCube to a container repository and then registers it with the MedPerf server. The model owner may then request inclusion of the model in compatible benchmarks. c, Dataset registration by the data owner: the data owner downloads the metadata for the data preparation, reference model and evaluation metrics MLCubes from the MedPerf server. The MedPerf client uses these metadata to download and verify the corresponding MLCubes. The data owner runs the data preparation steps and submits the registration output via the data preparation MLCube to the MedPerf server. d, Execution of benchmark: the data owner downloads the metadata for the MLCubes used in the benchmark. The MedPerf client uses these metadata to download and verify the corresponding MLCubes. For each model, the data owner executes the model-to-evaluation-metrics pipeline (that is, the model and evaluation metrics MLCubes) and uploads the results files output by the evaluation metrics MLCube to the MedPerf server. No patient data are uploaded to the MedPerf server.
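
The flow in panel d can be sketched in a few lines of Python. This is an illustrative sketch only, not the MedPerf client or its API: `CubeMetadata`, `verify_cube`, `run_benchmark` and `accuracy` are hypothetical names, models and metrics are plain Python callables standing in for MLCubes, and the use of a SHA-256 digest for the "verify" step is an assumption, since the caption does not say how verification is done.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class CubeMetadata:
    """Hypothetical stand-in for the metadata a server holds about one MLCube."""
    name: str
    sha256: str  # assumed integrity field; the caption only says "verify"


def verify_cube(meta: CubeMetadata, payload: bytes) -> None:
    """Raise ValueError if the downloaded bytes do not match the registered digest."""
    digest = hashlib.sha256(payload).hexdigest()
    if digest != meta.sha256:
        raise ValueError(f"hash mismatch for {meta.name}")


def accuracy(predictions: List[int], labels: List[int]) -> Dict[str, float]:
    """Toy evaluation metric standing in for the evaluation metrics MLCube."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return {"accuracy": correct / len(labels)}


def run_benchmark(
    features: List[float],
    labels: List[int],
    models: Dict[str, Callable[[List[float]], List[int]]],
    metrics: Callable[[List[int], List[int]], Dict[str, float]],
) -> Dict[str, Dict[str, float]]:
    """Run the model-to-metrics pipeline locally for each model.

    Only the returned results would be uploaded; features and labels
    never leave this function.
    """
    results = {}
    for name, model in models.items():
        predictions = model(features)
        results[name] = metrics(predictions, labels)
    return results


# Verify a downloaded artifact against its registered metadata.
payload = b"model container image bytes"
meta = CubeMetadata("model-a", hashlib.sha256(payload).hexdigest())
verify_cube(meta, payload)

# Local data stays with the data owner; only `results` is shared.
features = [0.2, 0.8, 0.6, 0.1]
labels = [0, 1, 1, 0]
models = {
    "threshold-0.5": lambda xs: [int(x > 0.5) for x in xs],
    "always-1": lambda xs: [1 for _ in xs],
}
results = run_benchmark(features, labels, models, accuracy)
print(results)
```

The point of the sketch is the data boundary: `run_benchmark` returns only per-model metric dictionaries, mirroring the caption's statement that results files, not patient data, are uploaded.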