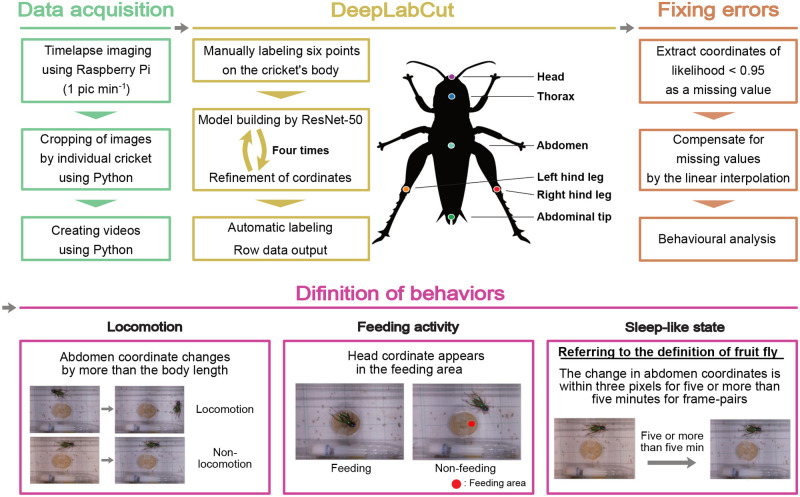

Fig. 2.

Overview of the system, from image acquisition to behavioural quantification. First, image data were recorded with a camera module connected to a Raspberry Pi at an interval of one image per minute. The images were converted into videos, and DeepLabCut with a ResNet-50 network was trained and refined to construct a model that automatically labels six keypoints on each cricket: head, thorax, abdomen, abdominal tip, left hind leg, and right hind leg. Subsequently, the coordinates of these labelled keypoints were extracted. After labelling errors were corrected, behaviours such as locomotion, feeding activity, and SLS were defined based on the coordinates and quantitatively analysed across all frames over a period of 14 days.
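
As an illustration of the final step, the sketch below shows one way a locomotion measure could be derived from the extracted coordinates of a single keypoint. It is a minimal example under stated assumptions, not the procedure used in this study: the input layout (x, y, likelihood per frame), the likelihood cut-off of 0.9, the rule of carrying the last trusted position forward, and the 5-pixel movement threshold are all hypothetical, as are the function names.

```python
import math

def fill_low_confidence(track, min_likelihood=0.9):
    """Replace low-confidence detections with the last trusted position.

    `track` is a list of (x, y, likelihood) tuples, one per frame.
    The cut-off and the carry-forward rule are assumptions for illustration.
    """
    cleaned = []
    last_good = None
    for x, y, likelihood in track:
        if likelihood >= min_likelihood:
            last_good = (x, y)
        cleaned.append(last_good)  # stays None until a trusted point is seen
    return cleaned

def per_frame_displacement(points):
    """Euclidean distance (pixels) between consecutive frames."""
    steps = []
    for prev, curr in zip(points, points[1:]):
        if prev is None or curr is None:
            steps.append(None)
        else:
            steps.append(math.dist(prev, curr))
    return steps

def is_locomotion(steps, min_step_px=5.0):
    """Flag an interval as locomotion if the keypoint moved at least min_step_px."""
    return [s is not None and s >= min_step_px for s in steps]

# Hypothetical thorax track: four frames taken one minute apart.
thorax = [
    (10.0, 10.0, 0.99),
    (13.0, 14.0, 0.98),
    (200.0, 5.0, 0.10),  # implausible jump with low likelihood, treated as an error
    (13.0, 15.0, 0.97),
]

points = fill_low_confidence(thorax)
steps = per_frame_displacement(points)
moving = is_locomotion(steps)
print(steps)
print(moving)
```

With one image per minute, each displacement corresponds to movement over a one-minute interval, so summing the flagged intervals gives a rough estimate of active time per day.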