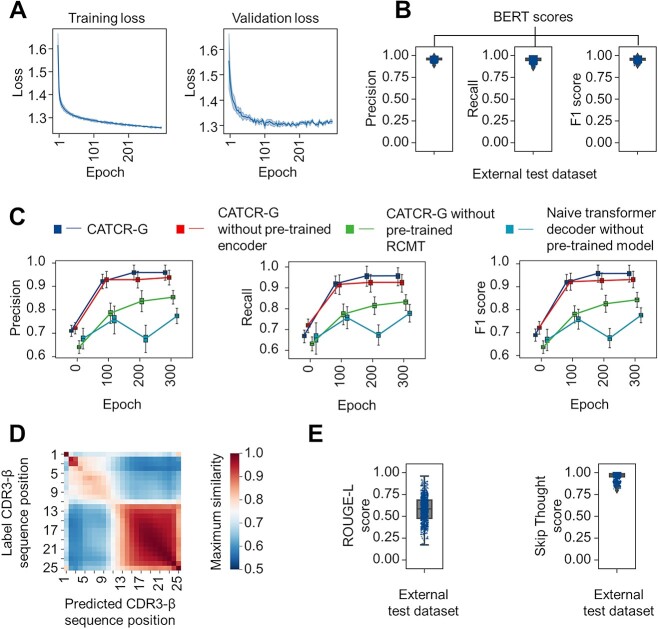

Figure 5.

Evaluation of the generative module (CATCR-G). (A) Loss curves for the training and validation sets. (B) The BERT score method, which assesses the semantic similarity between token embeddings, was used to compare the CDR3 sequences predicted by CATCR-G for unseen epitopes with a reference sequence set. (C) Performance of the complete CATCR-G compared with that of models that do not use pretrained encoder weights, do not use weights from the RCMT module, or use neither. The comparison is shown across epochs for accuracy, recall and F1 scores, all computed with the BERT score method. (D) The maximum similarity observed at different positions between the predicted and reference CDR3 sequences indicates alignment accuracy. (E) The ROUGE-L metric, which evaluates the longest common subsequence between two sequences, and the skip-thought method, which assesses the semantic coherence of sentence embeddings, were used to score the CDR3 sequences predicted by CATCR-G against reference sequences.
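
To make the ROUGE-L score in panel (E) concrete, the following is a minimal sketch of an LCS-based ROUGE-L F-score between a predicted and a reference CDR3 sequence. It assumes each amino acid residue is treated as one token and uses a balanced F-measure (`beta = 1.0`); the function names, the tokenization, and the example sequences are illustrative assumptions and are not taken from the CATCR-G implementation.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)  # LCS lengths for the previous row of the DP table
    for ch_a in a:
        curr = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(pred: str, ref: str, beta: float = 1.0) -> float:
    """ROUGE-L F-score, treating each residue as a token (an assumption)."""
    if not pred or not ref:
        return 0.0
    lcs = lcs_length(pred, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred)  # fraction of the prediction covered by the LCS
    recall = lcs / len(ref)      # fraction of the reference covered by the LCS
    return (1 + beta**2) * precision * recall / (recall + beta**2 * precision)


# Hypothetical sequences that differ at a single residue.
predicted = "CASSLGQAYEQYF"
reference = "CASSLGGAYEQYF"
score = rouge_l(predicted, reference)
```

Because ROUGE-L depends only on the longest common subsequence, a single substitution in a 13-residue sequence lowers the score only slightly, whereas insertions or deletions that shift the remaining residues are not penalized for position.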